⚠️ WARNING: This report contains both explicit adult content, as well as references to (though zero direct depictions of) confirmed Child Sexual Abuse Material. Adalytics has already notified the Federal Bureau of Investigation (FBI), Department of Homeland Security HSI Special Agents (DHS HSI), the National Center for Missing & Exploited Children (NCMEC), and the Canadian Centre for Child Protection (C3P) of the potential Child Sexual Abuse Material. The Canadian Centre for Child Protection analyzed a number of images reported by Adalytics, and believed they met the definition of child sexual abuse material under Canadian law.

This empirical research report, which is based on publicly available information, deals with the issue of digital advertising transparency and “brand safety” and, thus, must necessarily focus on language and/or ideas that many (including the authors of this report) may consider offensive. Specifically, this report contains depictions or mentions of adult pornographic content which may not be suitable for all audiences or for the workplace environment. The report does not include any direct depictions of confirmed CSAM, which was already reported to law enforcement. Reader discretion is strongly advised.

Executive Summary

imgbb.com (and its affiliate, ibb.co) is an ad-supported, anonymous, free image hosting and sharing website. This website does not require users to register before being able to upload and share a photo on its platform. The website itself appears to go to lengths to hide its own ownership: the website does not communicate who owns it, and all of its WhoIS domain records are redacted. According to third party sources, this website has over forty million page views per month, which is more monthly page views than the websites of the Financial Times, Los Angeles Times, Politico, or the Library of Congress.

During the course of conducting research on how US Government ads were served to bots and crawlers, Adalytics unintentionally and accidentally came across a historical, archived instance where a major advertiser’s digital ads were served to a URLScan.io bot that was crawling and archiving an ibb.co page which appeared to be displaying explicit imagery of a young child. Adalytics immediately ceased reviewing the archive of the explicit child content, and reported the page to the FBI, DHS HSI special agents, the National Center for Missing & Exploited Children (NCMEC), and the Canadian Centre for Child Protection (C3P).

ibb.co and imgbb.com appear to allow anonymous upload of photos without requiring users to register. Furthermore, the websites appear to allow users to hide specific photos from Google and Bing search results, by instructing the search engines to mark the pages as “noindex” via HTML meta tags.
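As a rough illustration of the “noindex” mechanism described above, the following minimal sketch checks whether a page's HTML carries a robots meta tag asking search engines not to index it. The class name, function name, and sample HTML strings are hypothetical and are not taken from imgbb.com or ibb.co; the exact markup those sites emit is not shown in this report.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from robots-style <meta> tags (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        # "robots" addresses all crawlers; "googlebot" and "bingbot" address one engine.
        if attr_map.get("name", "").lower() in {"robots", "googlebot", "bingbot"}:
            for directive in attr_map.get("content", "").split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def is_noindex(html: str) -> bool:
    """Return True if the HTML asks search engines not to index the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


# Hypothetical sample markup, for illustration only.
hidden_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
public_page = '<html><head><meta name="robots" content="index, follow"></head></html>'
print(is_noindex(hidden_page), is_noindex(public_page))
```

A page flagged this way stays reachable by anyone who has the exact URL, but compliant search engines will leave it out of their results.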

According to the National Center for Missing & Exploited Children (NCMEC), imgbb.com has been notified dozens of times over the course of 2021, 2022, and 2023 that the platform was hosting child sexual abuse materials (CSAM).

Reddit appears to block or ban imgbb.com and ibb.co photo sharing links from its own platform (reddit.com).

In addition to hosting CSAM, imgbb.com and ibb.co appear to host explicit adult content as well as potentially copyright infringing materials. The website also appears to host explicit depictions of canine-human bestiality and zoophilia, which may constitute animal abuse.

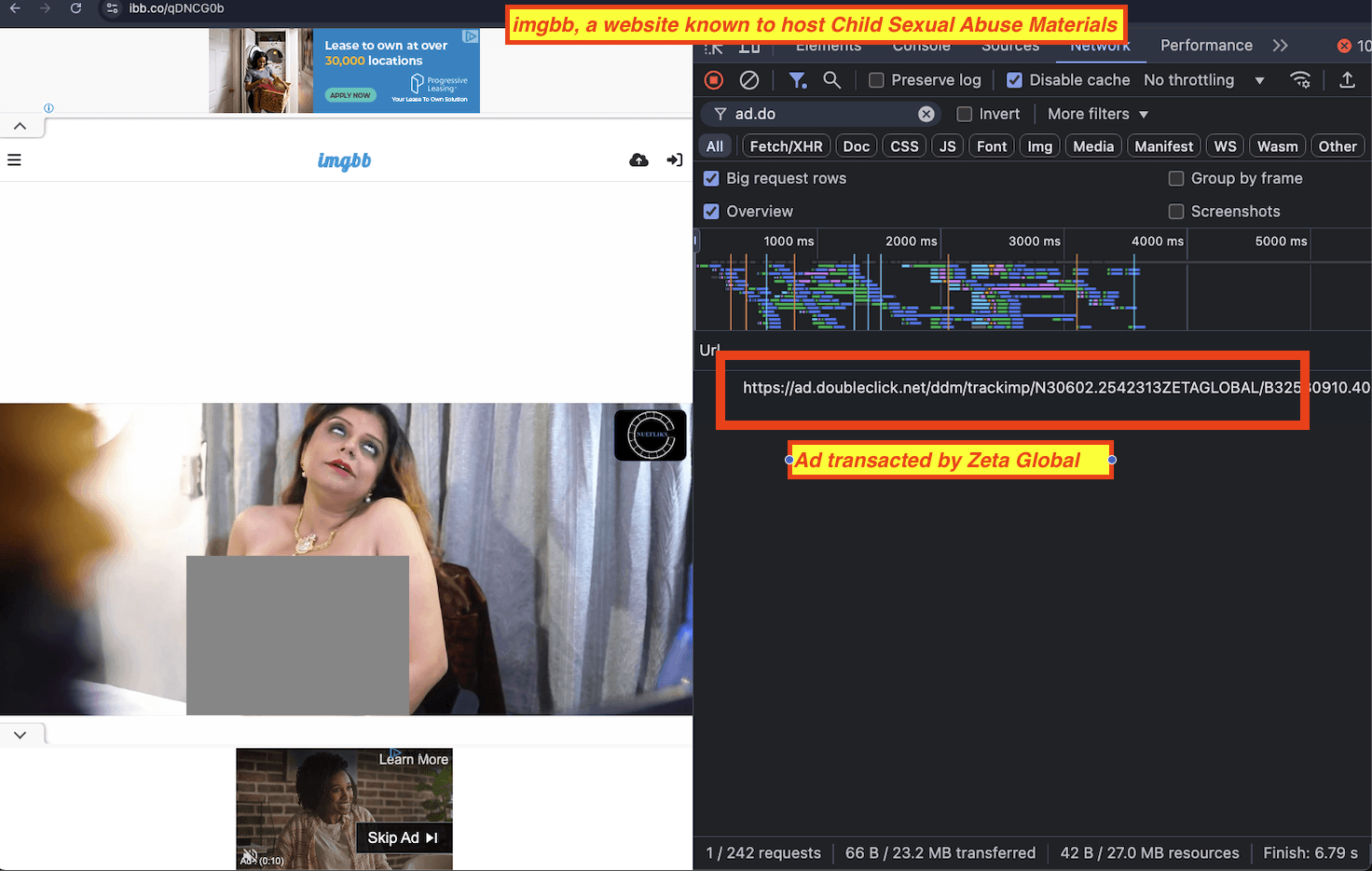

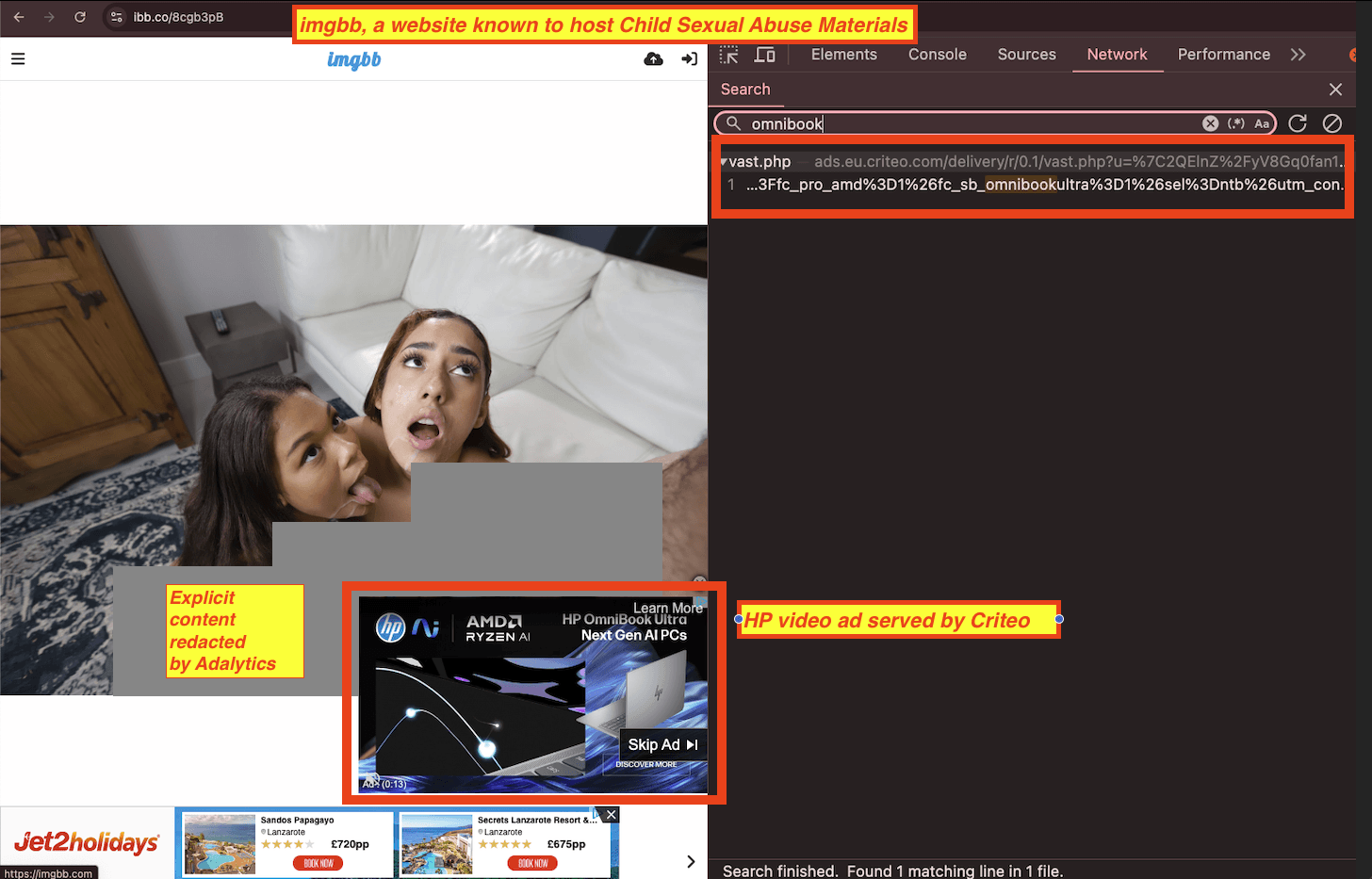

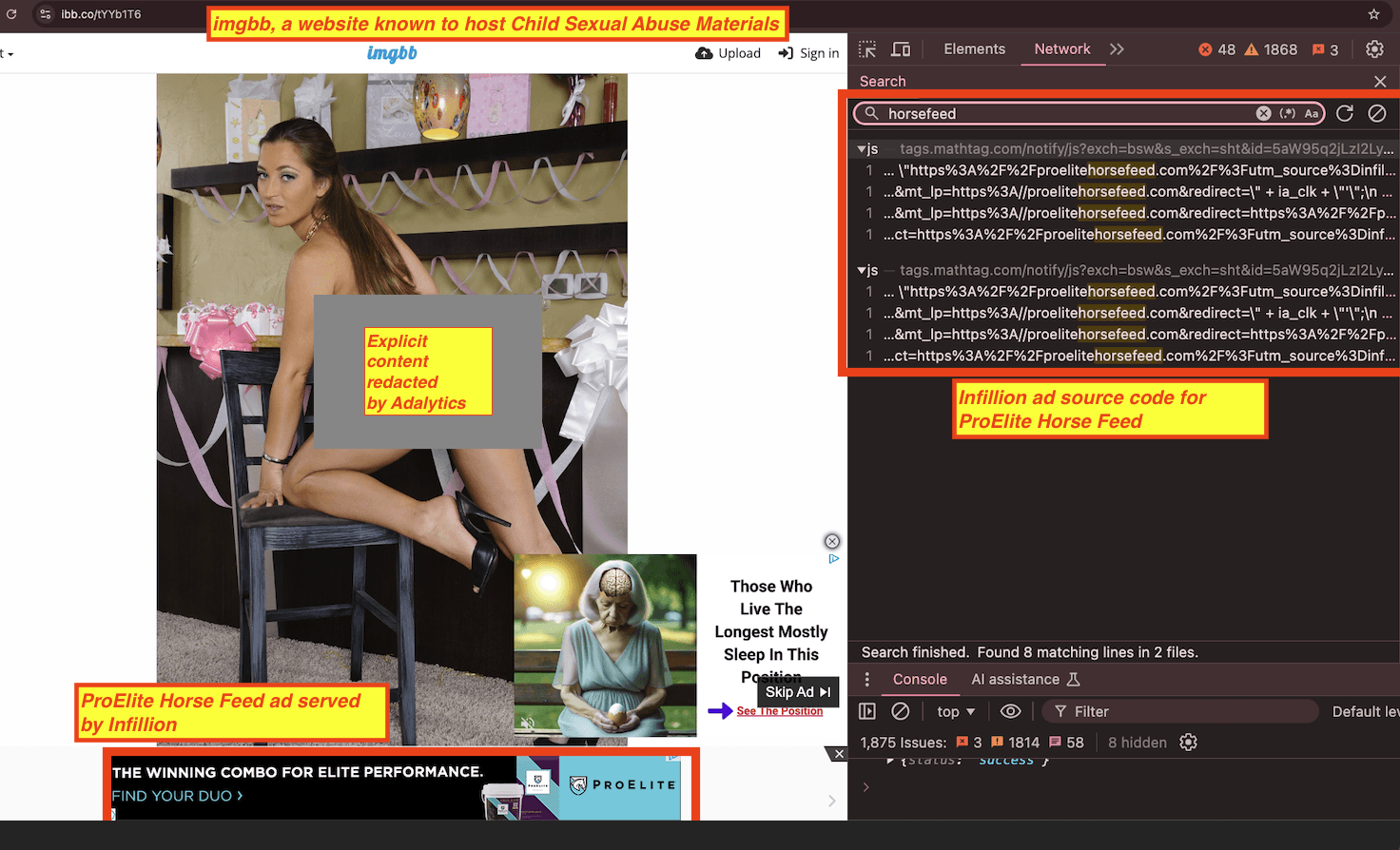

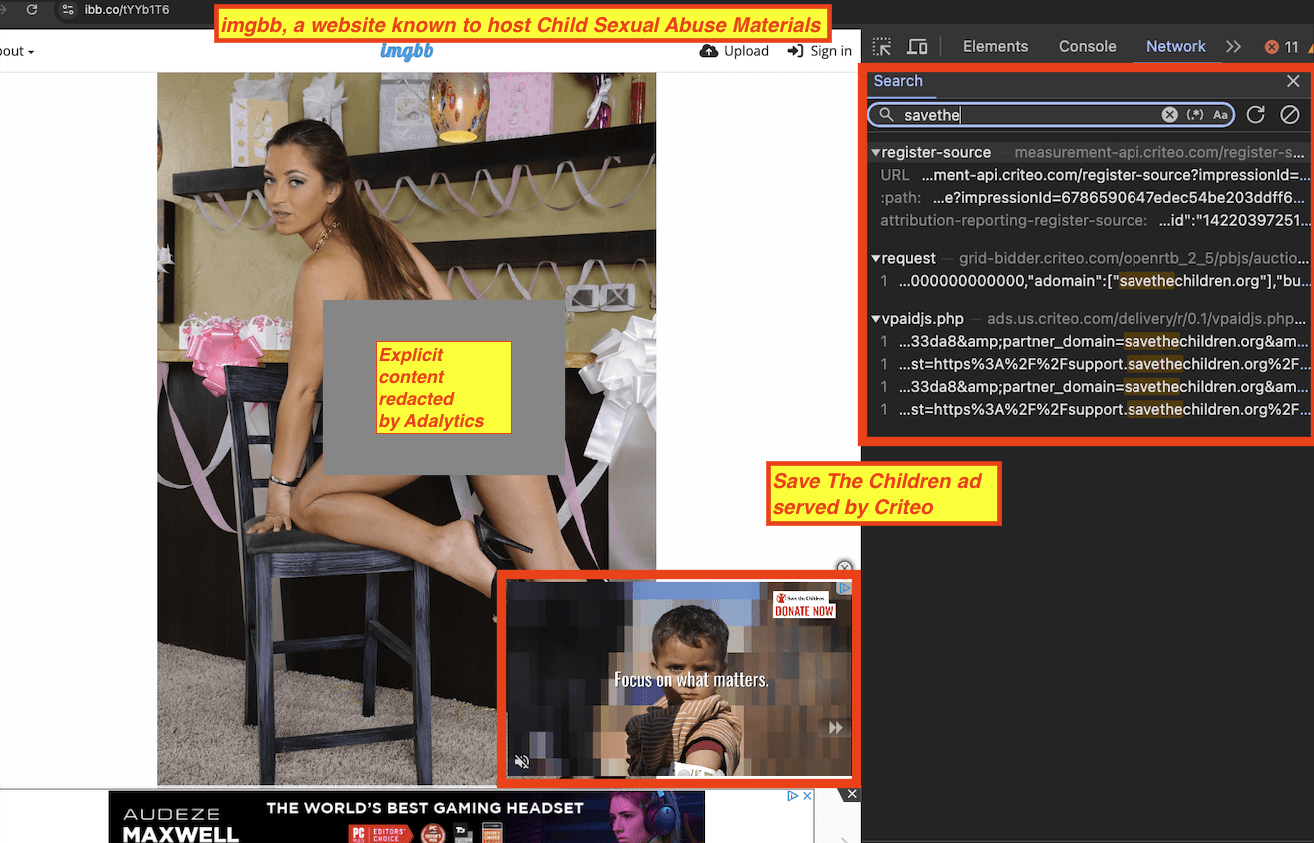

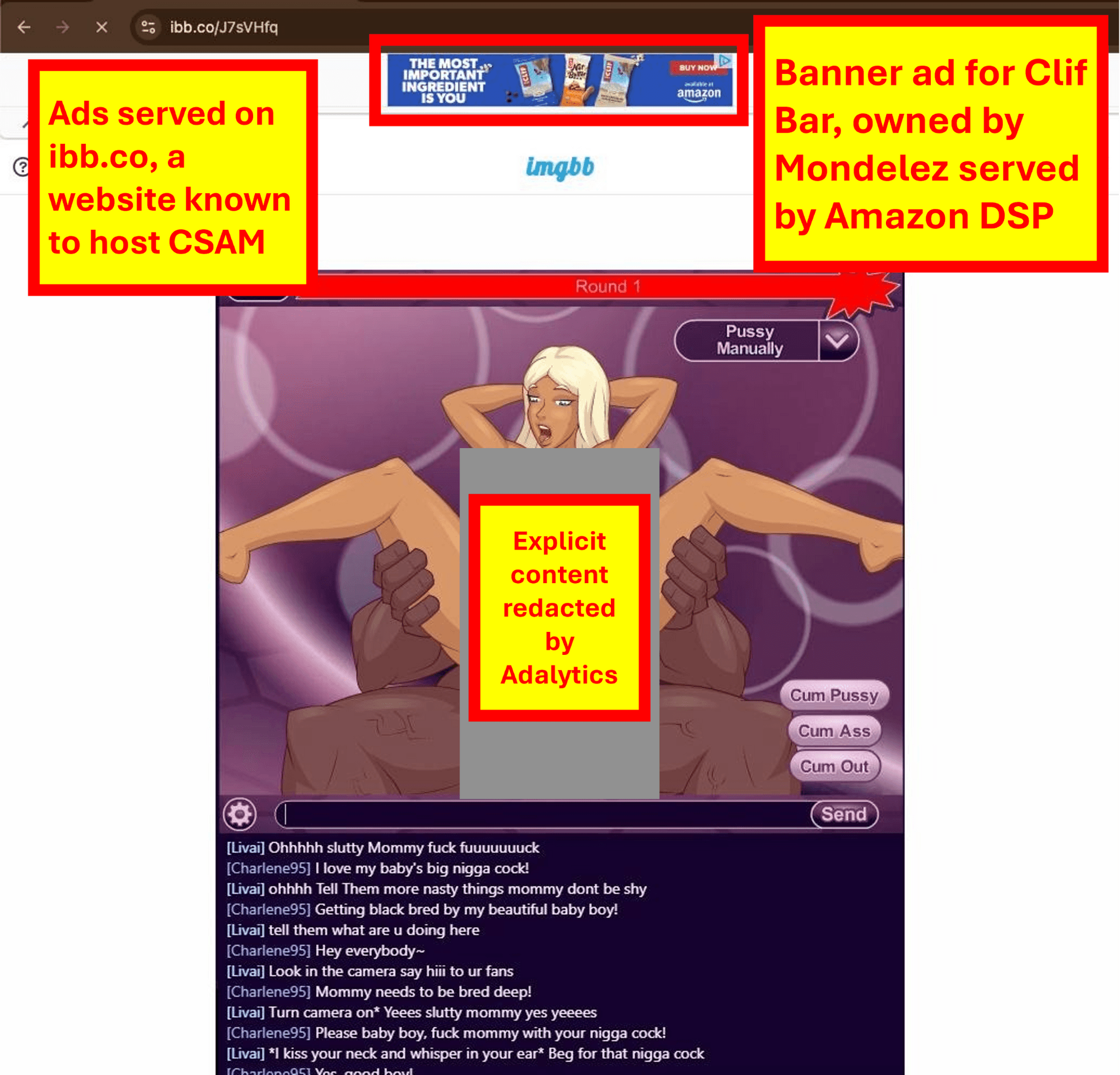

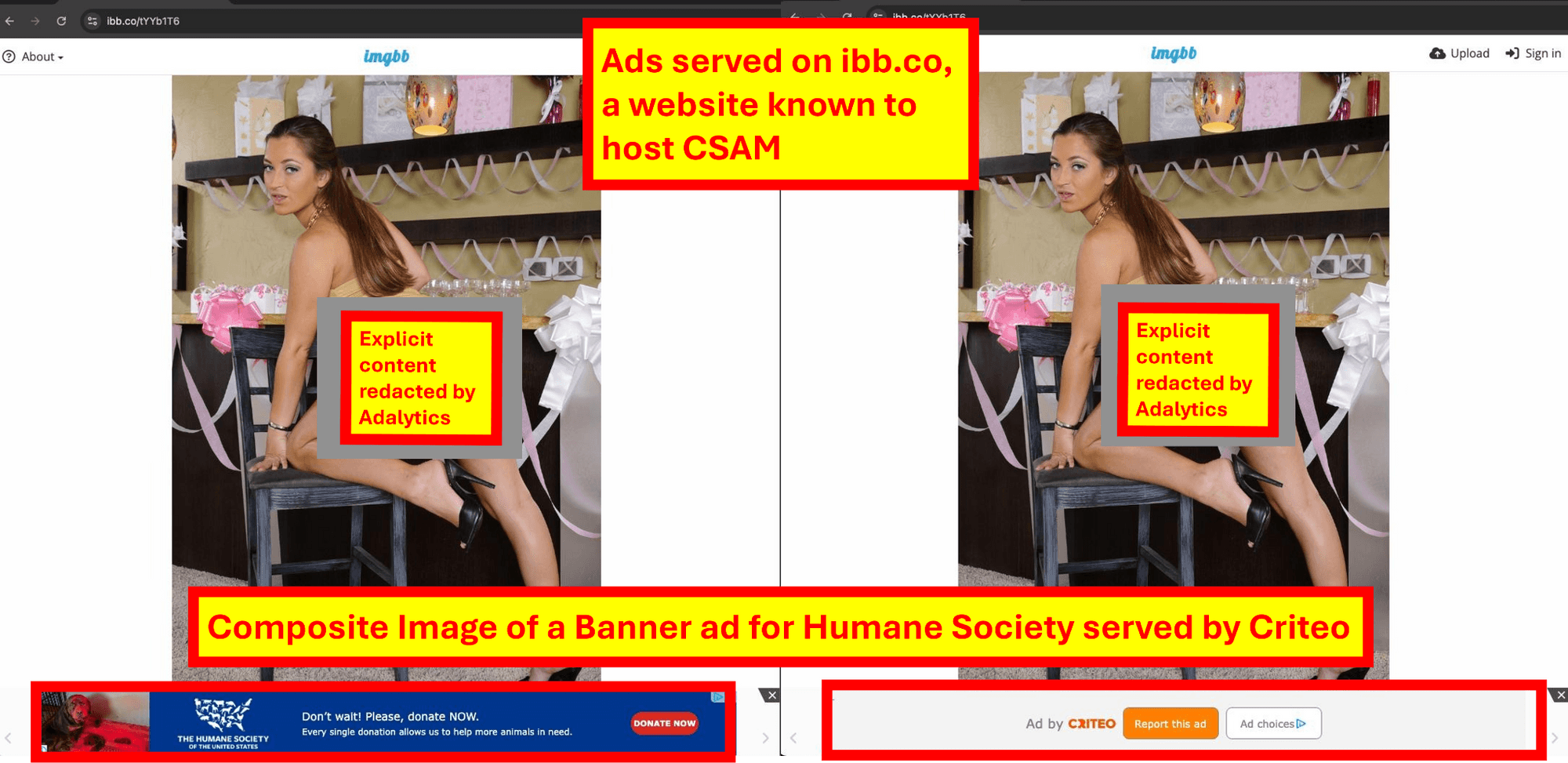

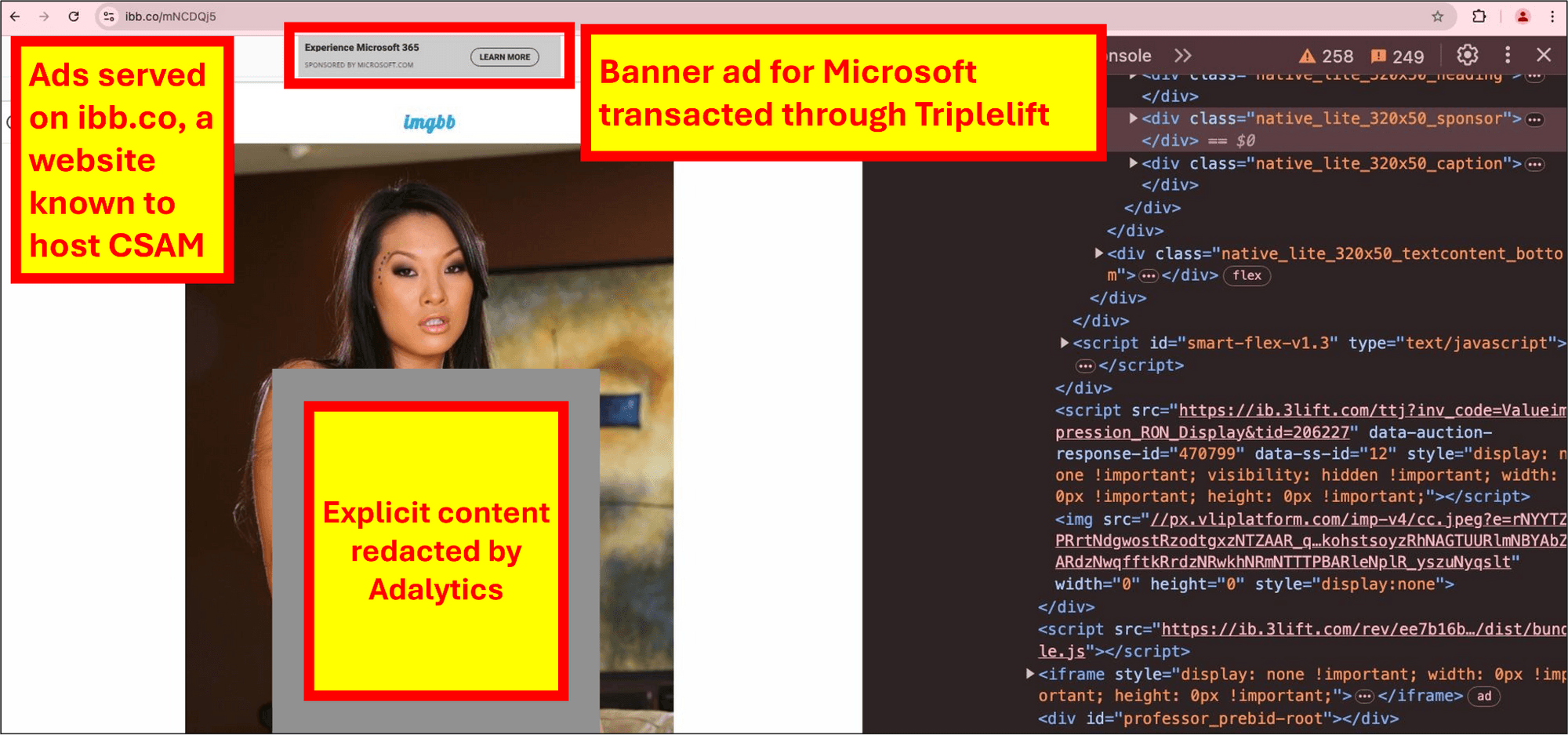

imgbb.com appears to financially support its free image hosting service by displaying digital ads from ad tech vendors such as Amazon, Google, Criteo, Quantcast, Microsoft, Outbrain, TripleLift, Zeta Global, Nexxen, and others.

It is worth noting that Google AdX, Google Ad Manager, and DV360 do not appear to be actively transacting ads on imgbb.com or ibb.co as of January 2025, at least in the observational sample of data available in this research study.

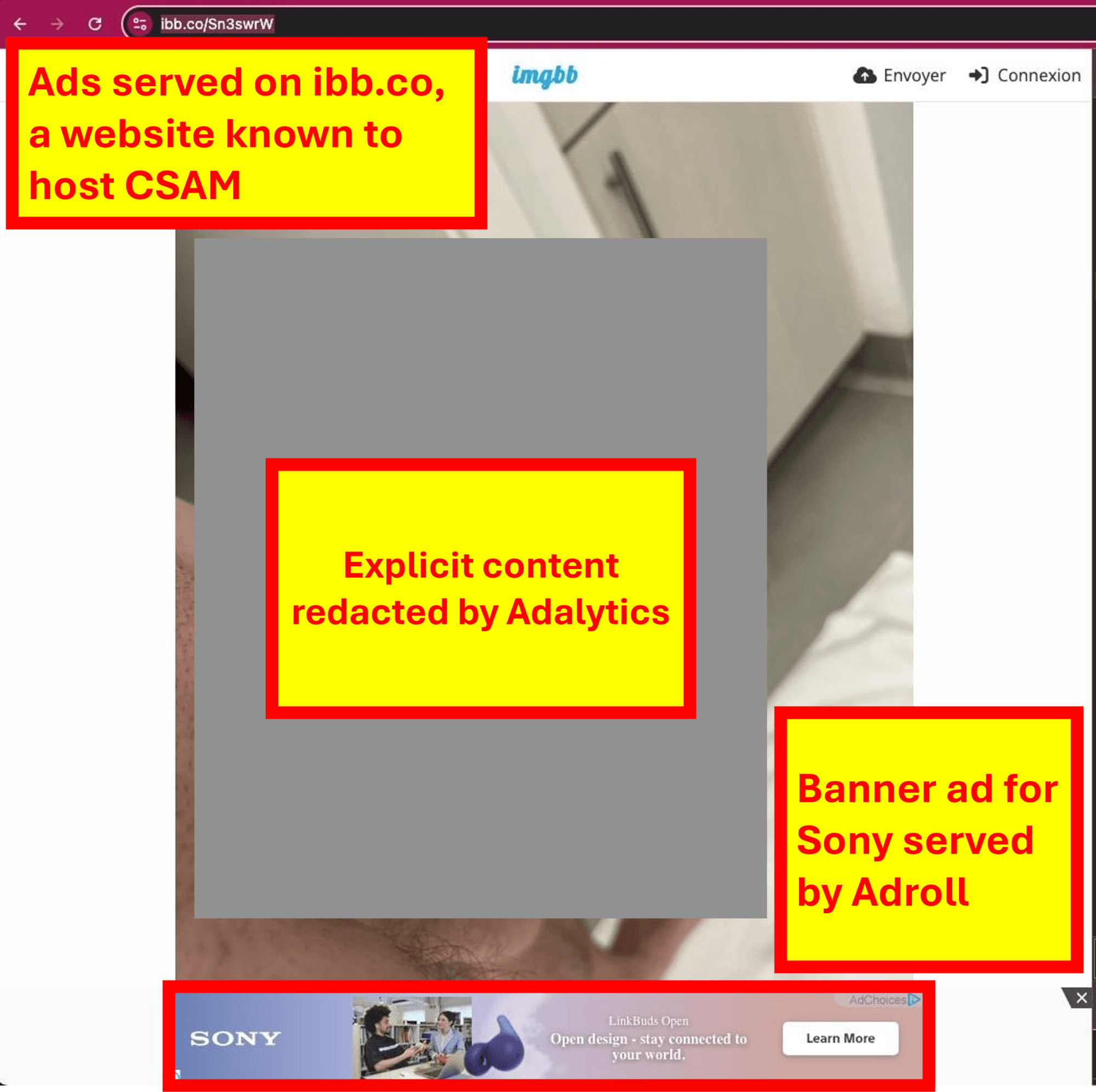

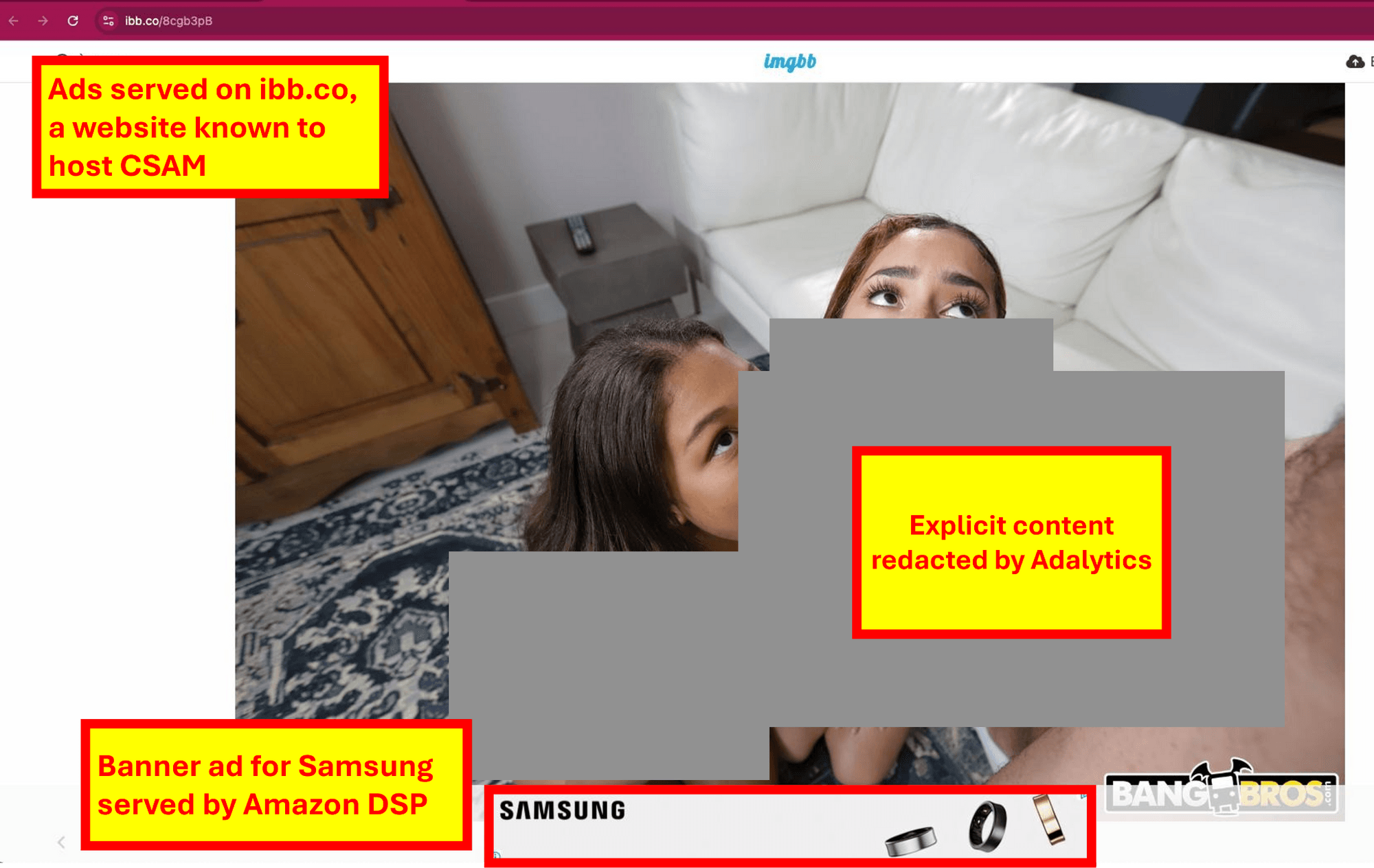

Many major advertisers appeared to have had their ads placed on a website which has been known - since at least 2021 - to host some amounts of CSAM. These advertisers may have inadvertently contributed funding to a website that is known to host and/or distribute CSAM. The list of advertisers whose ads were confirmed to have served on a site hosting CSAM includes major entities such as the Department of Homeland Security, Texas state government (Office of the Texas Governor), Interpublic Group (IPG), Arizona State University, Tom Brady's TB12, MasterCard, Starbucks, PepsiCo (Gatorade), Mars candy and pet foods, McAfee, Kimberly-Clark Corporation (KCC; Depend), Honda, Uber Eats, Google Pixel, Amazon Prime, Whole Foods, DenTek, Puma, Fanduel, Sony Interactive Entertainment (Audeze), Sony Electronics, Thrive Market, Intuit (Mailchimp), Unilever, Lay's potato chips, Adobe, HP, L'Oreal, Acer, Duracell, Kenvue, Sanofi Consumer Healthcare NA, SC Johnson (off bug spray), Audible, AMC Networks' Acorn TV, J.M. Smucker Company (Meow Mix), Kodak, Medtronic, Reckitt Benckiser (mucinex, vanish), Leukemia & Lymphoma Society, Nestle, Adidas, Domino's Pizza, Hill's Science Pet Food, Samsung, Clorox, Simplisafe, Hallmark, Galderma Cetaphil, GoDaddy, Blink security cameras, Paramount+, HBO Max, Prestige Consumer Healthcare (Diabetic Tussin), Dyson, Tree Hut, Colgate, Hill’s Pet Nutrition, Sling TV, Progressive Leasing, Panasonic (ARC5 Palm-Sized Electric Razor), Sleep Number, Glad Trash Bags, savethechildren.org, Associated British Foods (Twinings tea), WayFair, SanDisk, Allergan, Sennheiser, Raymour & Flanigan furniture, Monday.com, Teamson Home, Brita, Virtue Labs smooth shampoo, Filorga, Delta Faucet, Wall Street Journal, Mansion Global, Yves Rocher, Allianz, Beiersdorf, Hertz, Mondelez, Amazon Ads, 23andMe and Skyscanner.

Multiple major brand advertisers whose ads were served on explicit content on imgbb.com reported that their ad tech vendors, such as Amazon, do not provide the advertisers with page URL level reporting that would allow the brands to investigate exactly on what page URLs their ads served. This is especially problematic in the context of a media publisher such as imgbb.com, where a lot of the content can only be seen if a user knows the exact page URL to check, because ibb.co allows uploaders to mark images as “noindex” and thus hide the images from Google and Bing search result pages.

Multiple major advertisers reported to Adalytics that their brand safety vendors had marked 100% of measured ad impressions served on ibb.co and imgbb.com as “brand safe” and/or “brand suitable”. Independent data obtained from URLScan.io confirmed that some of these media buyers' ads had served on explicit, sexual content.

Ad brand safety verification vendors such as DoubleVerify and IAS were seen measuring or monitoring ads on various explicit pages on imgbb.com on behalf of major brands such as FanDuel, Arizona State University and Thrive Market.

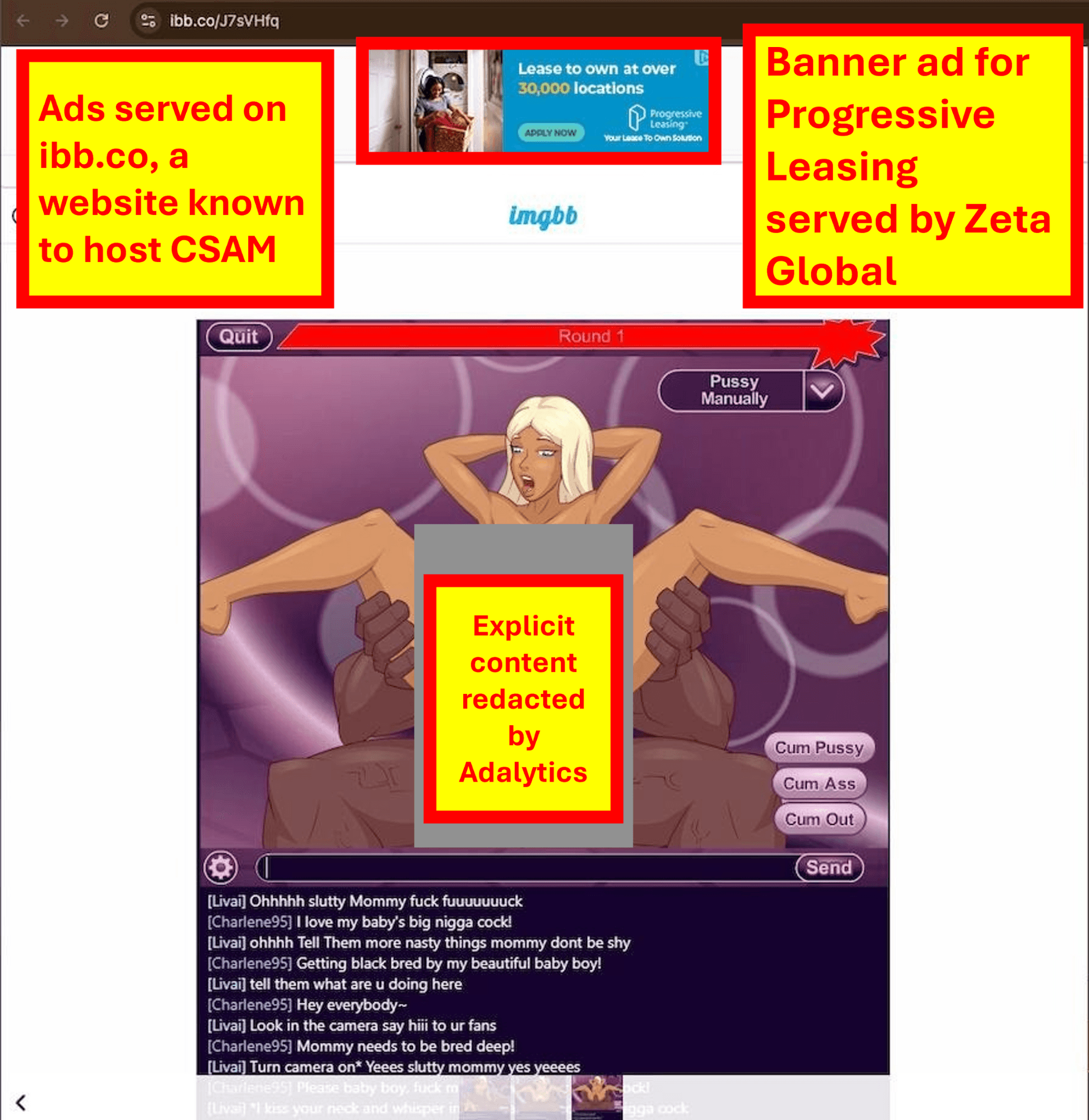

In one instance, 197 video ads showing the National Football League (NFL) or FanDuel logo were served on an ibb.co page showing a photo from an “online multiplayer sex game”. The 197 NFL and FanDuel co-branded video ads appeared to use DoubleVerify video tags. A screen recording of the 197 NFL co-branded ads can be seen here: https://www.loom.com/share/4a8de1732df14ac295e115abc520b815

Amazon, Google and other ad tech vendors claim to have media inventory quality policies. However, it is unclear to what degree those policies are actually enforced if ads from major brands and the US government can be seen on a website that has been known to host CSAM for 3+ years.

Background

In late 2024, Adalytics was researching the phenomenon of how Fortune 500 brands and the United States government (Army, Navy, healthcare.gov, Air Force, USPS, Census) were having their digital ads served to bots rather than real humans.

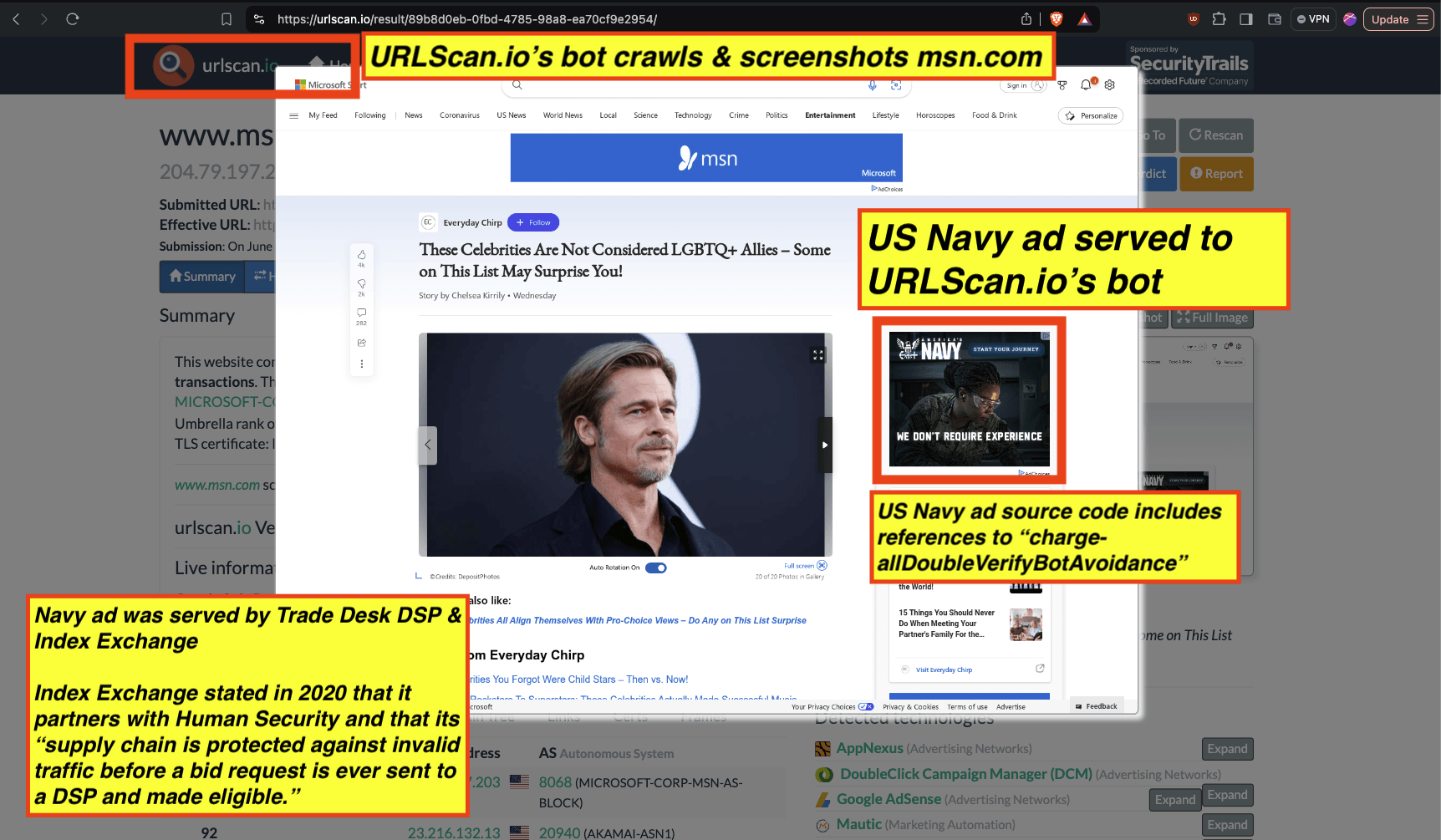

This research entailed looking at historical, archived page recordings generated by a bot called URLScan.io. URLScan.io’s bot crawls millions of page URLs and creates automated screenshots of the pages it crawls. For example, in the screenshot below one can see an example of a United States Navy ad served to URLScan.io’s bot, whilst the bot was crawling the website msn.com on June 16th, 2023.

Screenshot of a URLScan.io bot crawl of msn.com on June 16th, 2023, with a US Navy recruiting ad served to the bot. Source: https://urlscan.io/result/89b8d0eb-0fbd-4785-98a8-ea70cf9e2954/

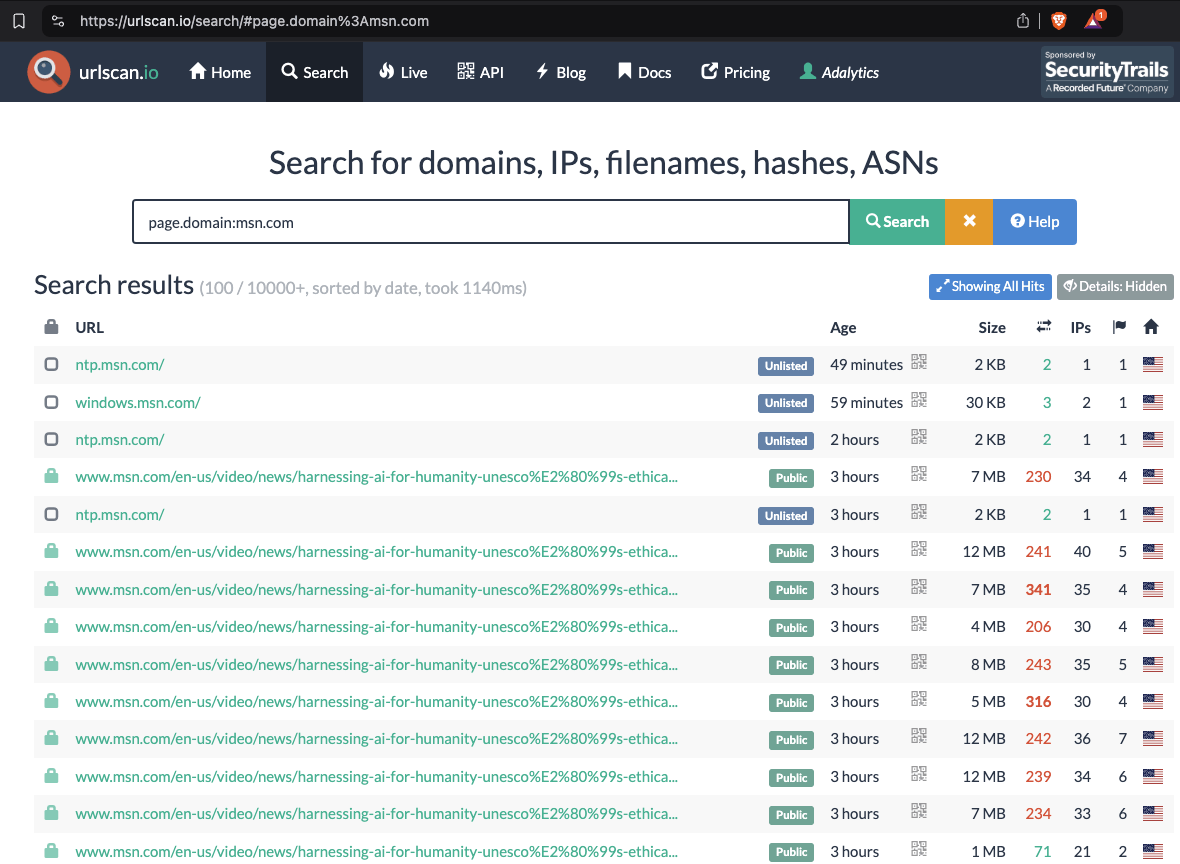

Similar to the public Internet Archive (archive.org), URLScan.io’s bot has historical screenshots archived for many different websites. One can search through those historical screenshots, and see what a given website looked like on a given date. Furthermore, one can also observe if ads were served to URLScan.io’s bot on a given date.

In the screenshot below, one can see an example of over a dozen recordings that URLScan.io’s bot generated on the website msn.com. URLScan.io has page recordings going as far back as 2016.

Screenshot of URLScan.io’s historical archives of msn.com

During the course of this research into how United States government ads were served to bots, Adalytics noticed that URLScan.io’s bot had recorded ads being served on the websites “imgbb.com” and “ibb.co” thousands of times over the course of 7+ years, going as far back as 2017.

Clicking into some of the URLScan.io historical archives of “imgbb.com” and “ibb.co” showed that the website hosts images of explicit, adult content. Furthermore, digital ads from major brands were appearing adjacent to explicit adult content on imgbb.com and ibb.co, when URLScan.io’s bot had archived the page in 2024.

Prompted by this unexpected discovery, Adalytics started reviewing the thousands of historical “imgbb.com” and “ibb.co” page recordings generated by URLScan.io’s bot from 2017 to 2024.

During the course of this historical data review, it was observed that many major brands had their ads served adjacent to explicit, adult content.

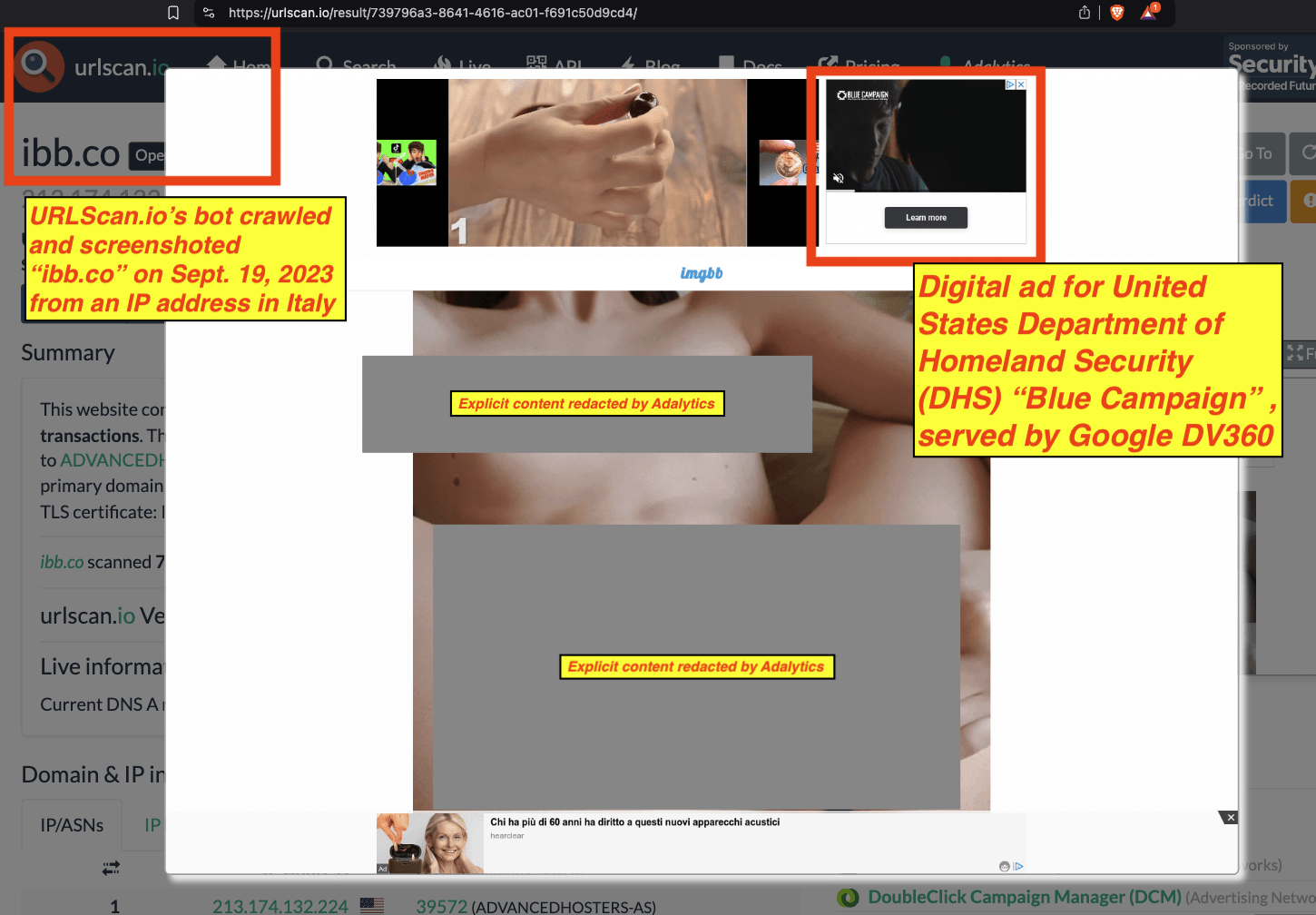

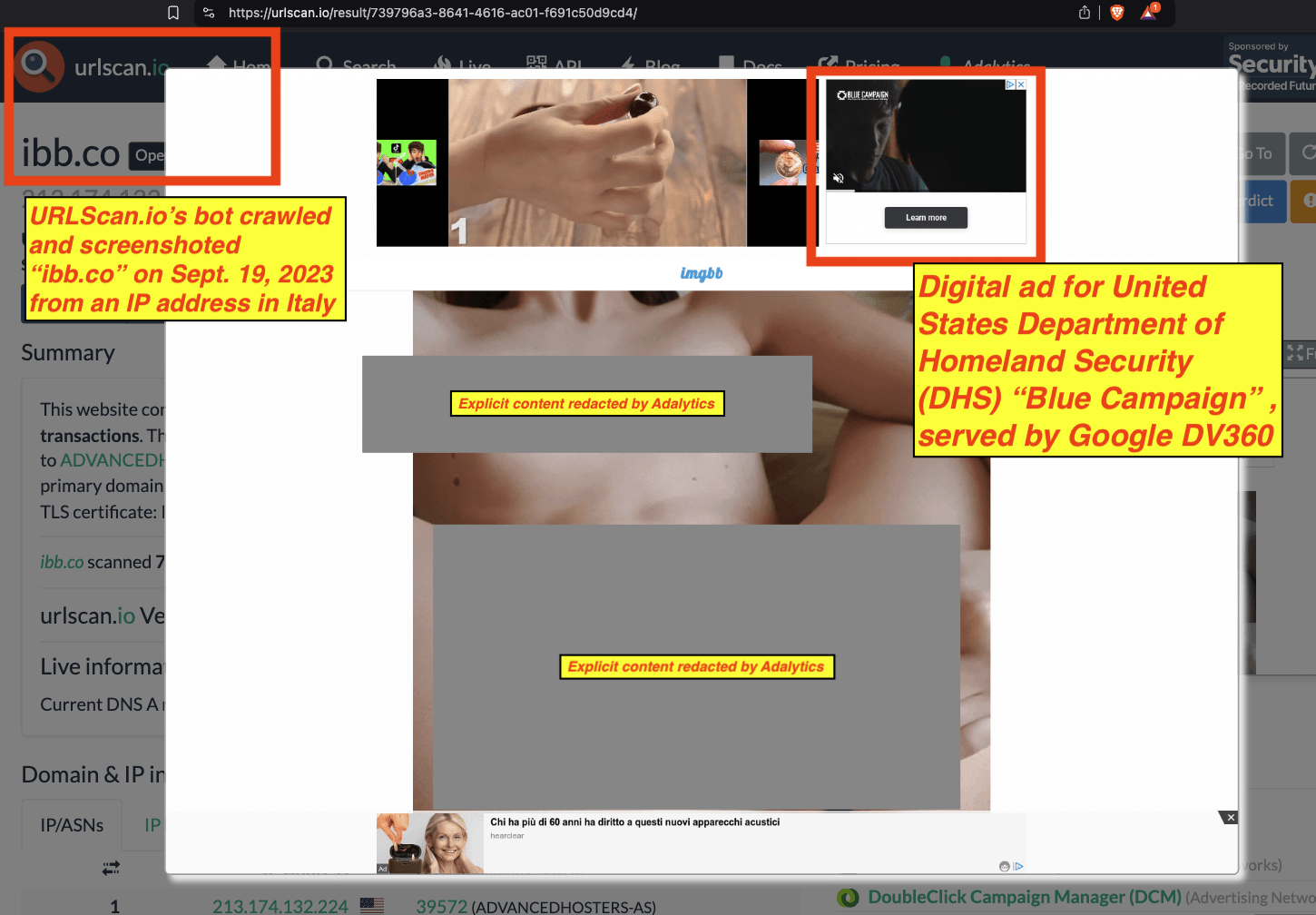

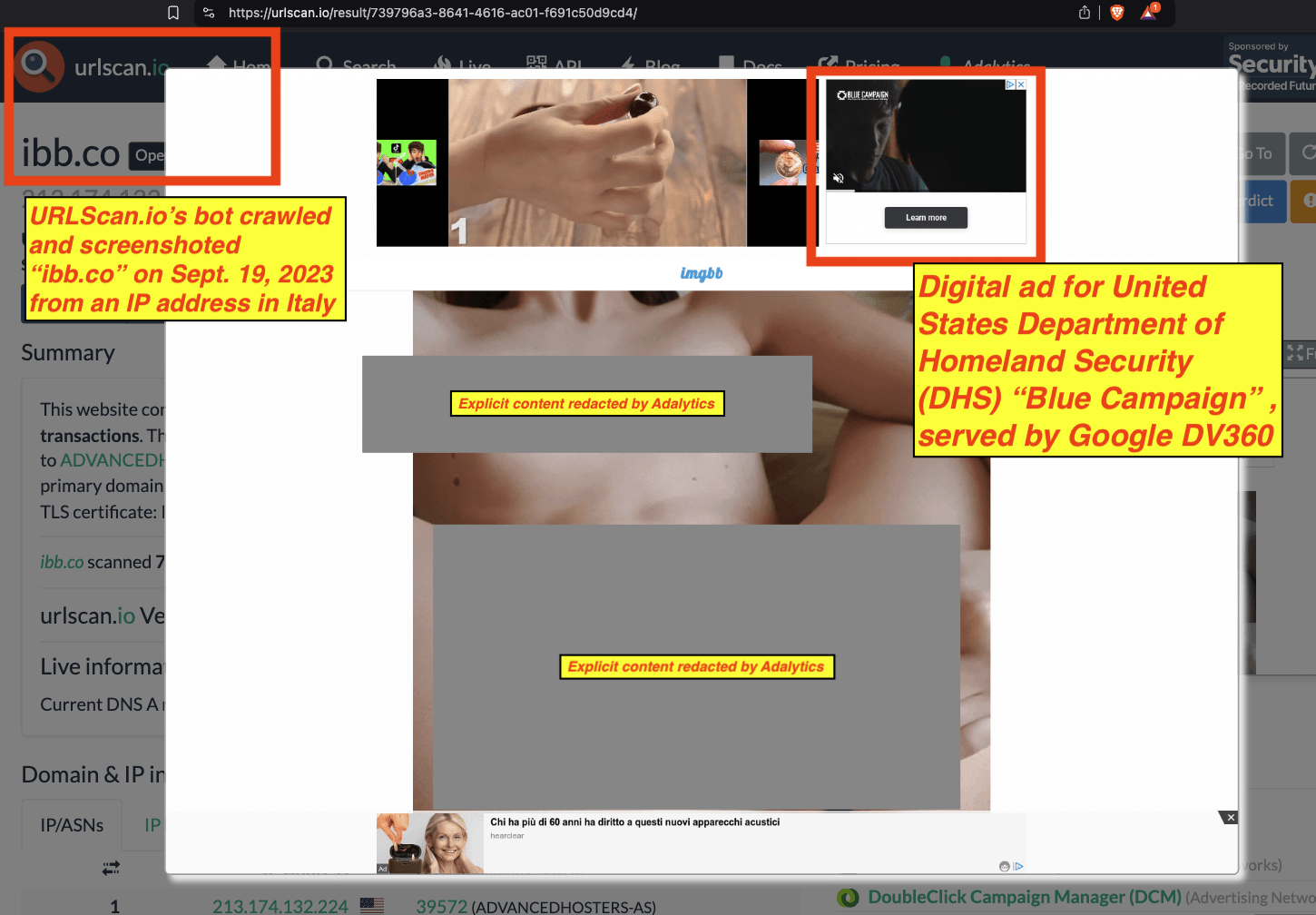

For example, on September 19th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, from an IP address in Italy.

The bot screenshotted the specific “ibb.co” page, which appears to show a woman masturbating. Whilst the bot was screenshotting the page, it was served a digital ad by Google’s Display & Video 360 (DV360), a software platform used by advertisers to purchase ad placements. The specific ad was for the United States Department of Homeland Security (DHS) “Blue Campaign” (dhs.gov/blue-campaign).

Screenshot showing a United States government ad for the Department of Homeland Security (DHS) being served to a bot whilst the bot was crawling and screenshotting “ibb.co”. Source: https://urlscan.io/result/739796a3-8641-4616-ac01-f691c50d9cd4/#transactions.

During the course of reviewing these historical URLScan.io bot captures of “imgbb.com” and “ibb.co”, Adalytics noticed an instance where URLScan.io’s bot had archived a particular URL in early November 2024, wherein the explicit, nude content on the website appeared to be a young girl - possibly 4-6 years old. There were digital ads served to the bot whilst it was archiving that page.

Upon realizing that the given “ibb.co” page URL that URLScan.io had archived and screenshotted contained potential Child Sexual Abuse Material (CSAM), Adalytics immediately closed the given URLScan.io page capture. Adalytics documented the incident and provided specific evidence to the Federal Bureau of Investigation (FBI), Department of Homeland Security Homeland Security Investigations (DHS HSI), the National Center for Missing & Exploited Children (NCMEC), and the Canadian Centre for Child Protection (C3P), so that these entities could review and/or take down the putative CSAM hosted by “ibb.co”.

imgbb.com and ibb.co hosted Child Sexual Abuse Materials (CSAM) in 2021, 2022, and 2023

The National Center for Missing & Exploited Children (NCMEC) is a private, nonprofit organization established in 1984 by the United States Congress.

NCMEC’s mission is to help find missing children, reduce child sexual exploitation, and prevent child victimization.

NCMEC’s CyberTipline offers the public an easy way to quickly report suspected incidents of sexual exploitation of children online. U.S. federal law requires that U.S.-based electronic service providers (“ESPs”) report instances of apparent child pornography that they become aware of on their systems to NCMEC’s CyberTipline. Since the CyberTipline’s inception in 1998, NCMEC has received millions of reports and reviewed hundreds of millions of images and videos of suspected child sexual abuse material (CSAM) in an effort to locate exploited children and help law enforcement rescue them from abusive situations. NCMEC works to disrupt the trading of child sexual abuse images and videos online, and helps survivors begin to rebuild their lives.

NCMEC publishes annual transparency reports, which include information about the CyberTipline, the Child Victim Identification Program (CVIP), and other related topics.

According to NCMEC: “We often receive CyberTipline reports about imagery of child sexual exploitation that is being shared online. In some cases, the reports are made by the child victims themselves or their guardians/caregivers. The CyberTipline is a lifeline for families who are struggling to have explicit images of their child taken down. After visually reviewing the reported imagery, NCMEC staff members notify the electronic service provider where the image or video has been shared.”

Reviewing the annual NCMEC transparency reports allows one to see instances where a platform was notified by NCMEC of CSAM, exploitative content that falls short of CSAM, and sexually predatory texts. For example, if someone reports a given image on a website to NCMEC, NCMEC will review the reported image and adjudicate whether or not the image is actually CSAM. NCMEC will then send a formal notification to the website owner notifying them of the CSAM and requesting that the confirmed CSAM be removed from the platform.

The NCMEC transparency reports must be interpreted with some care. The largest recipient of NCMEC notices is INHOPE, which operates 55 tiplines for foreign countries, and acts in a similar way to NCMEC. Likewise, a platform that vigilantly patrols its users or website clients may appear frequently in these reports, and a reader could come to the mistaken conclusion that such a thorough reporter is actually condoning CSAM.

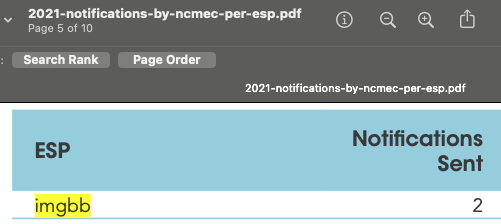

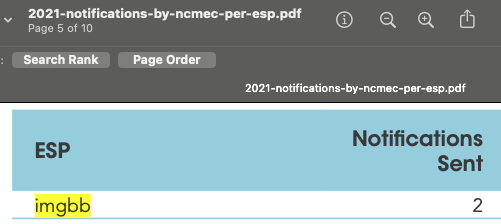

In the screenshot below of the 2021 NCMEC transparency report, one can see that in 2021, NCMEC received, confirmed, and filed alerts for two different pieces of CSAM to “imgbb”.

Source: “2021 Notifications Sent by NCMEC Per Electronic Service Providers (ESP)”. https://www.missingkids.org/content/dam/missingkids/pdfs/2021-notifications-by-ncmec-per-esp.pdf.

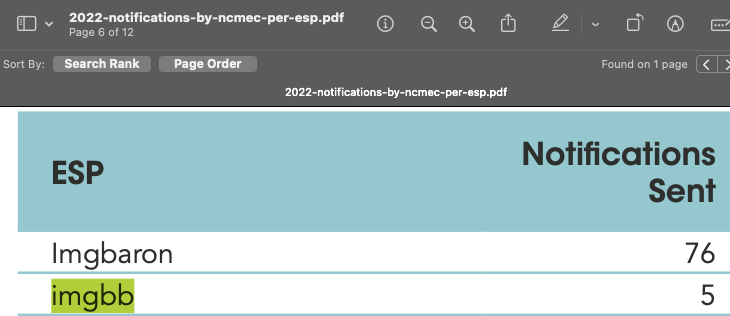

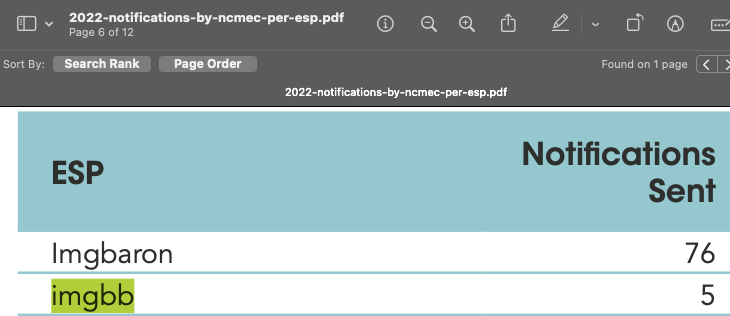

In the screenshot below of the 2022 NCMEC transparency report, one can see that in 2022, NCMEC received, confirmed, and filed alerts for five different pieces of CSAM to “imgbb”.

Source: “2022 Notifications Sent by NCMEC Per Electronic Service Providers (ESP)”. https://www.missingkids.org/content/dam/missingkids/pdfs/2022-notifications-by-ncmec-per-esp.pdf

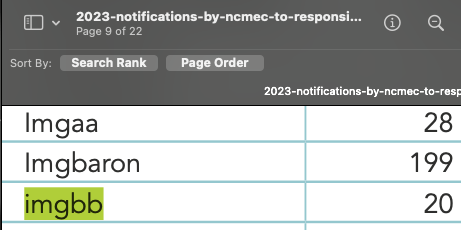

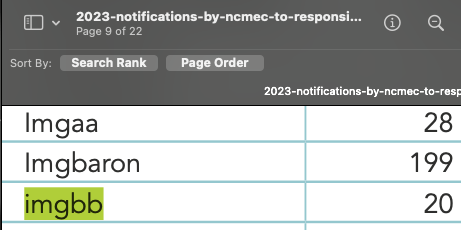

In the screenshot below of the 2023 NCMEC transparency report, one can see that in 2023, NCMEC received, confirmed, and filed alerts for twenty different pieces of CSAM to “imgbb”.

Source: “2023 Total Notifications sent by NCMEC to Responsive Electronic Service Providers (ESP)”. https://www.missingkids.org/content/dam/missingkids/pdfs/2023-notifications-by-ncmec-to-responsive-esp.pdf.

imgbb.com and ibb.co appear to allow anonymous photo uploads without requiring users to register for an account

imgbb.com and ibb.co appear to give the users the option of creating an account and registering with the platform via email address, but it appears that this registration is not a strict requirement for uploading images to the website.

For example, in the screenshot below one can see the home page of the imgbb.com website. Without registering or logging in, the user is immediately allowed to “start uploading” an image.

Screenshot of imgbb.com, showing that users can upload images without first registering

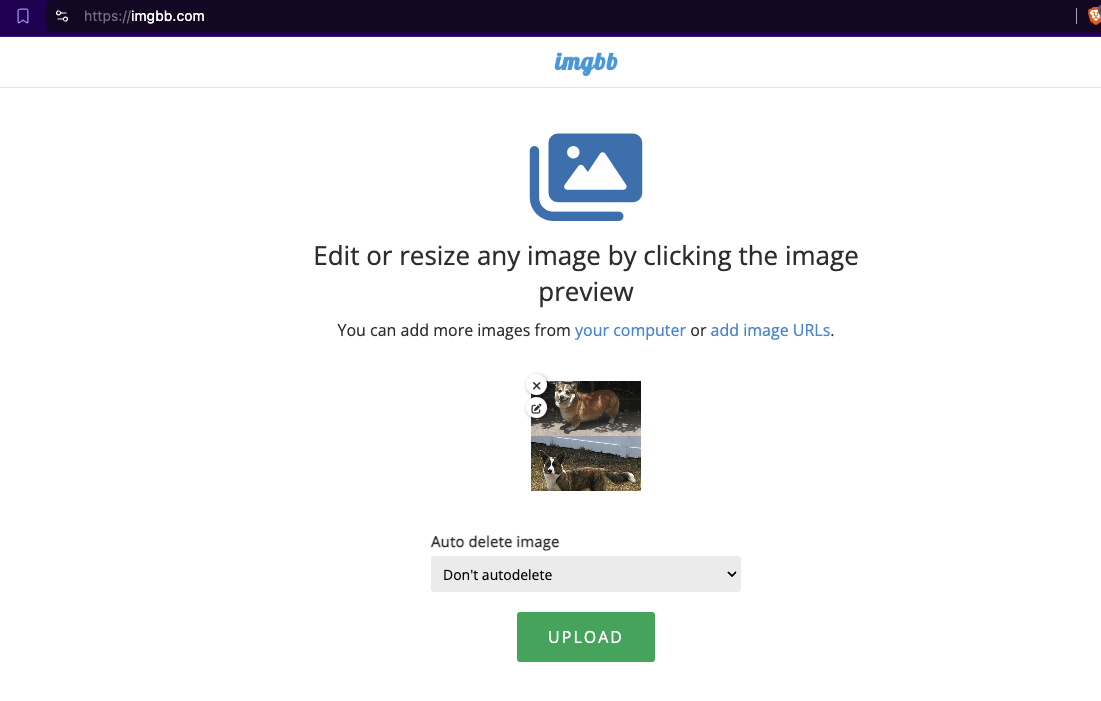

In the next screenshot below, one can see an unauthenticated, anonymous user uploading an image of two dogs to the website, without having registered or logged in.

Screenshot showing an anonymous image upload of a photo of two dogs to imgbb.com

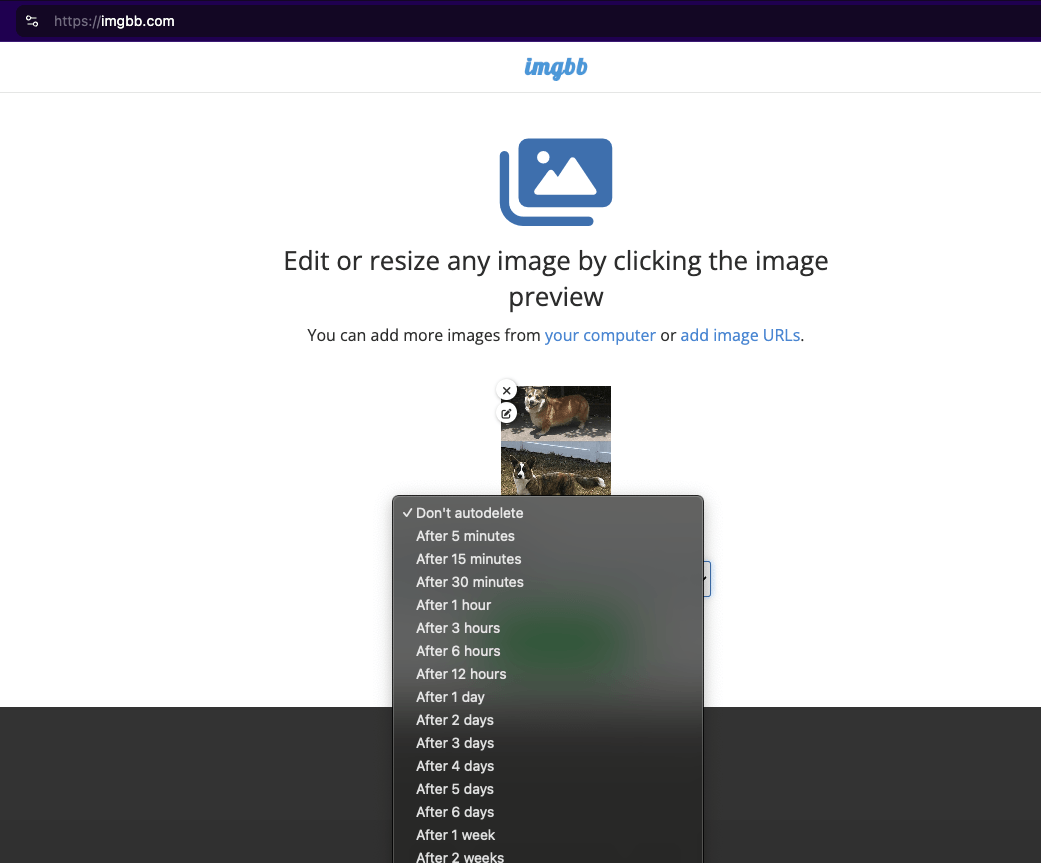

In the next screenshot below, one can see that the anonymous, un-authenticated uploader is given the option of setting the image to “autodelete” from the platform after a certain time frame.

Screenshot showing an anonymous image upload of a photo of two dogs to imgbb.com, and that the website allows users to “autodelete” images after a specific time.

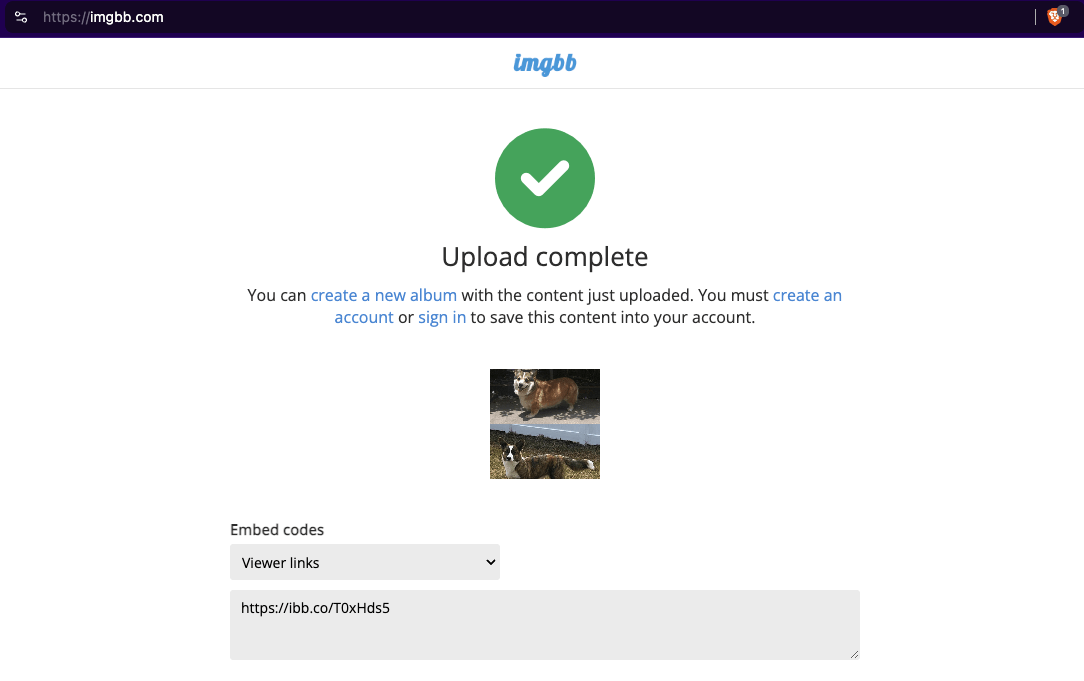

In the next screenshot below, one can see that the anonymous, un-authenticated uploader successfully uploaded the image of two dogs to the platform, and is provided with the option of sharing the image on the imgbb.com platform with others publicly.

Screenshot of imgbb.com showing an anonymous, un-authenticated user having successfully uploaded an image of two dogs

The user is also given the option of downloading the image, or sharing the image with others, via platforms such as WhatsApp, email, and others.

When someone else views the picture that was uploaded to imgbb.com, ads are served to the user.

Screenshot showing digital ads being served on imgbb.com, after the user uploaded a photo of two dogs without first registering. Source: https://urlscan.io/result/6635c990-dc27-4a3c-80b4-413b7ae9658f/

Given the design of these websites: no browsing functionality, no indexing by third parties like Google and Bing search engines, anonymous uploading, and a predetermined “auto-delete” functionality, it is no surprise that these sites have made their way into NCMEC transparency reports. Moreover, it seems rational to conclude that the NCMEC-reported transparency figures are significantly lower than the actual number of CSAM images uploaded, shared, and hosted by these sites.

Reddit appears to prohibit linking or hosting images on reddit.com from imgbb.com and ibb.co

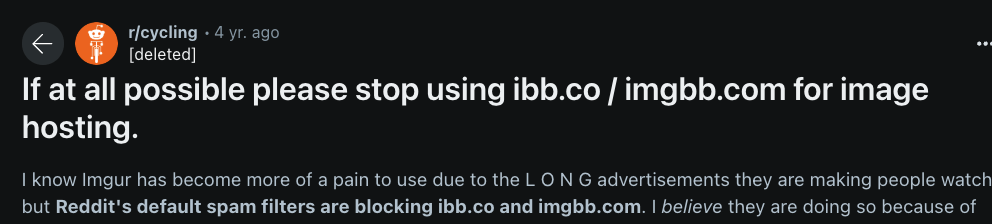

According to multiple posts and threads on reddit.com, users have reported that reddit.com appears to block or filter out images from ibb.co and imgbb.com. For example, in the screenshot below from four years ago, one can see a user saying: “Reddit's default spam filters are blocking ibb.co and imgbb.com”.

Source: https://www.reddit.com/r/cycling/comments/ns5ksc/if_at_all_possible_please_stop_using_ibbco/

Another reddit.com post says: “Please stop using ImgBB/ibb.co for your image hosting. Reddit thinks it's spam, and will stealth remove your post”.

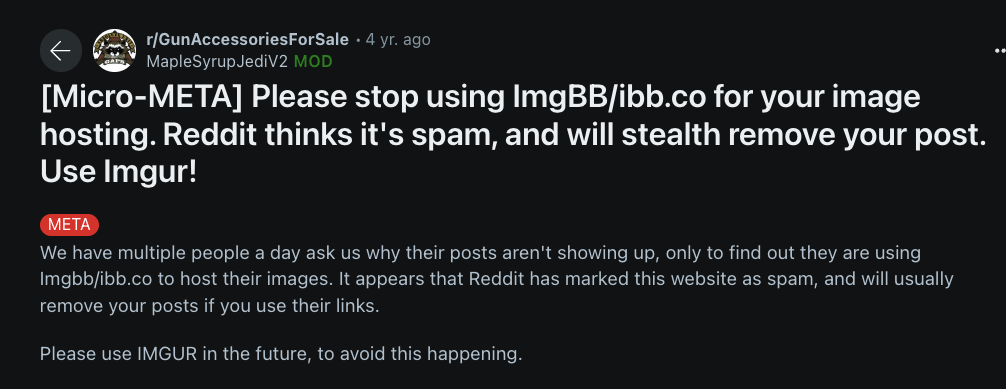

It also appears that Reddit users reported that Reddit blocks imgbb.com links in private direct messages and user-to-user chats. One user reported: “This morning during a chat I noticed when I add a link to IMGBB I get told the message was not sent due to a domain filter. Is this a recent change?”

Source: https://www.reddit.com/r/help/comments/nkmk2t/imgbb_links_blocked/

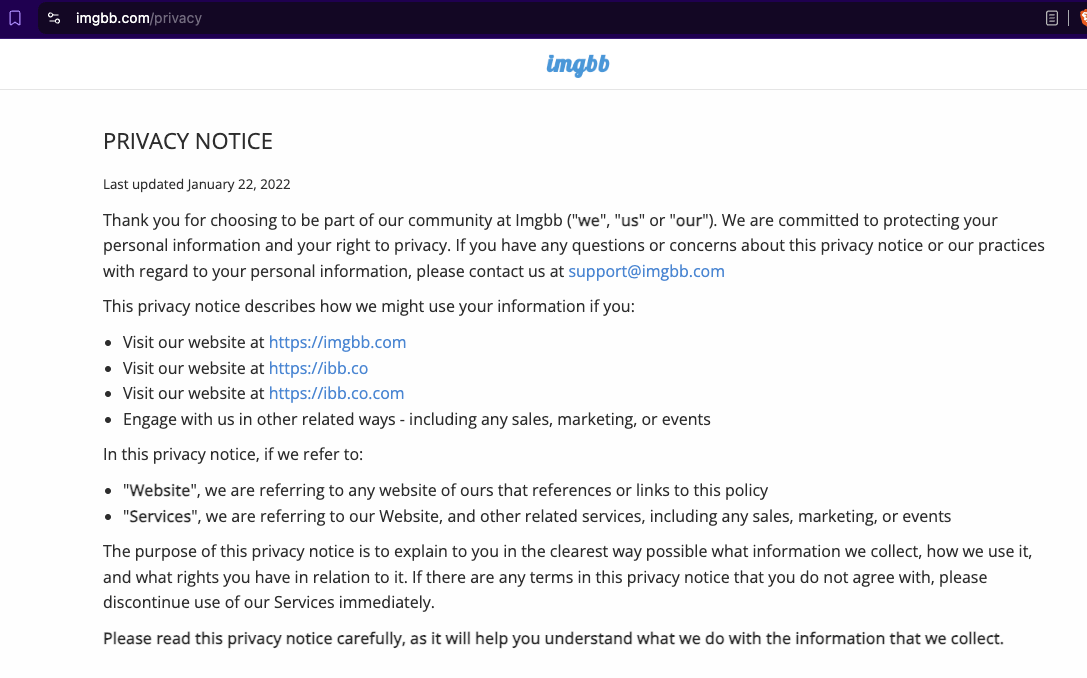

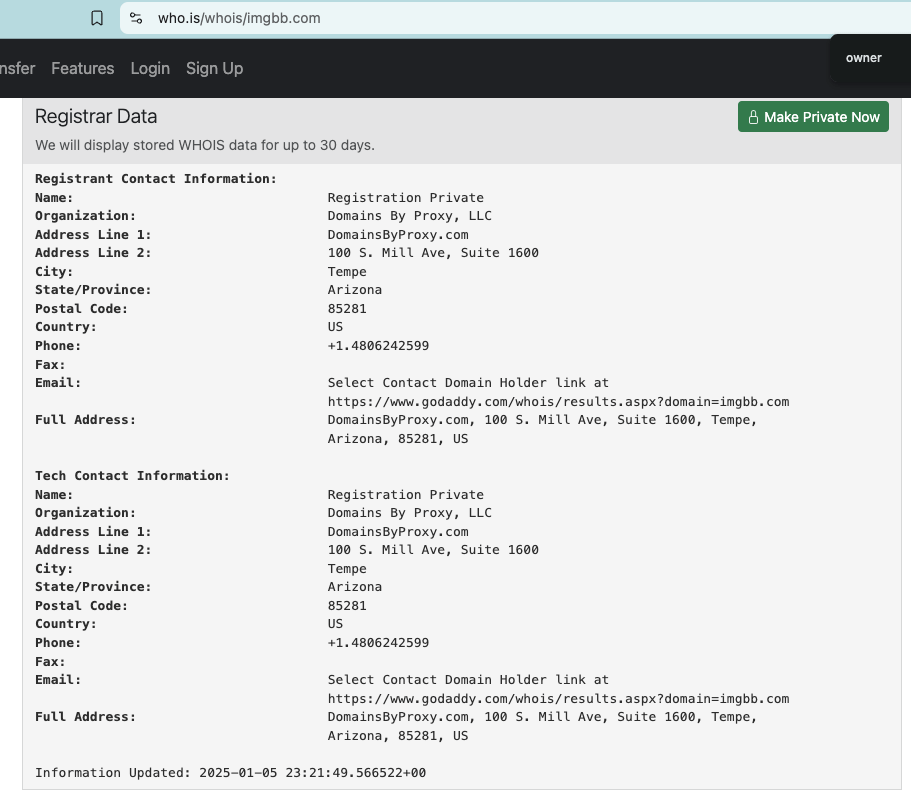

imgbb.com and ibb.co do not appear to publicly disclose their ownership or which country they operate from

There does not appear to be any readily-available public record of who owns or operates imgbb.com and ibb.co.

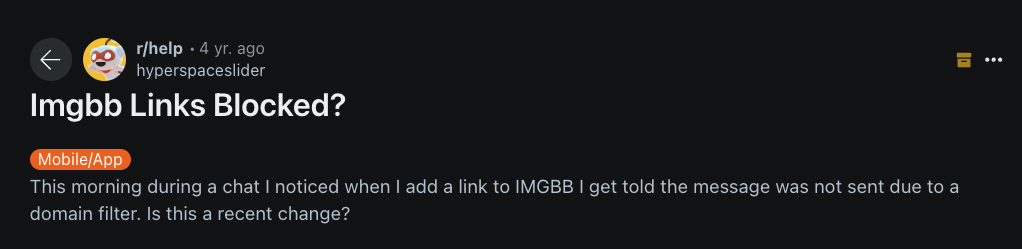

The website’s contact page (https://imgbb.com/contact) is simply a form with no address.

Source: https://imgbb.com/contact

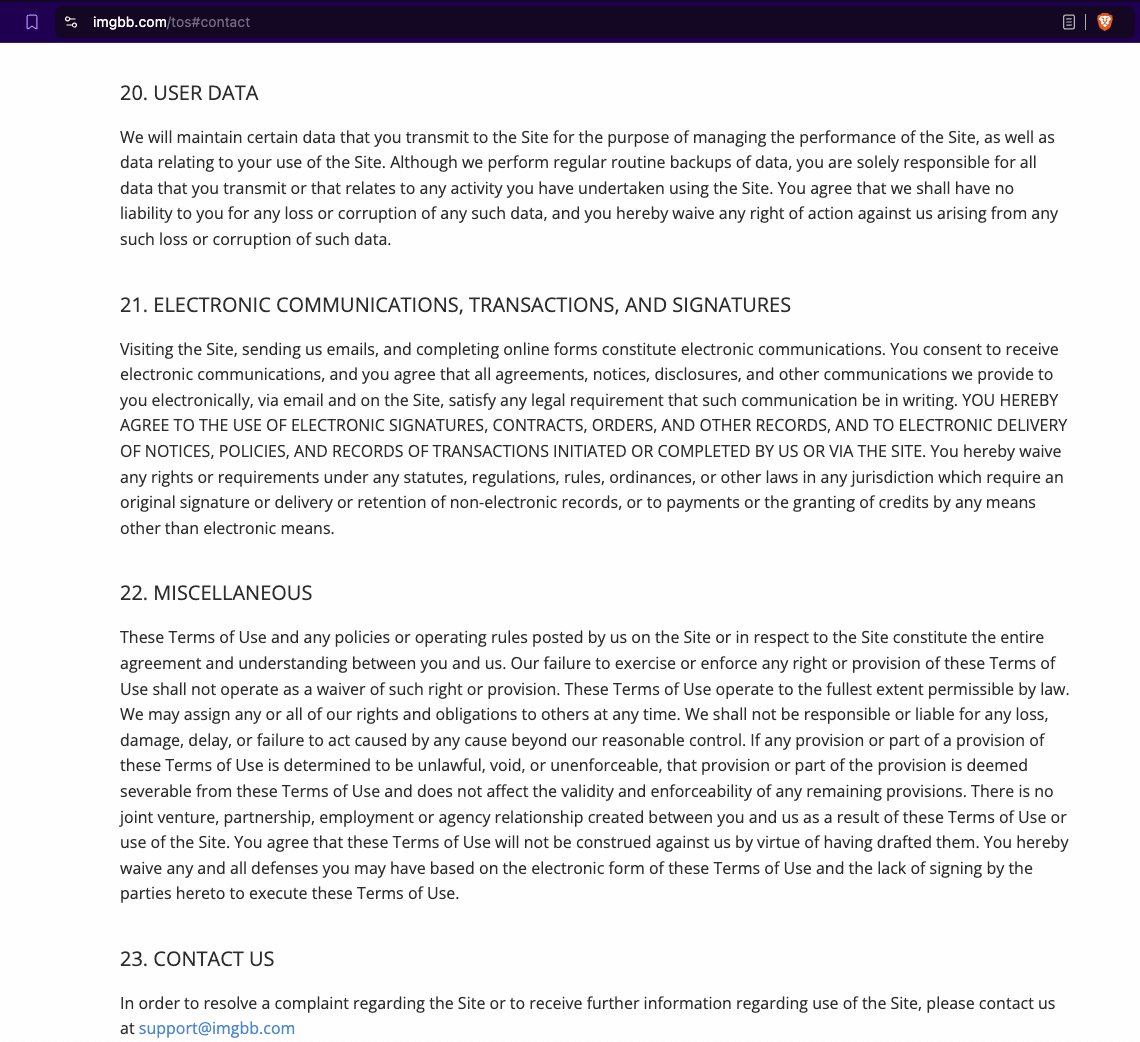

The website’s Terms of Service page (https://imgbb.com/tos) does not list what company or person operates the website, or where said operator is domiciled.

Source: https://imgbb.com/tos#contact

The website’s Privacy Notice (https://imgbb.com/privacy) also doesn’t list a physical address or who owns the website.

Source: https://imgbb.com/privacy#request

If one checks the public “WhoIS” records for the domain “imgbb.com”, it appears that the records are redacted by WhoIS domain privacy, a feature wherein a domain name registrar replaces the user's information in the WHOIS with the information of a forwarding service.

Source: https://who.is/whois/imgbb.com

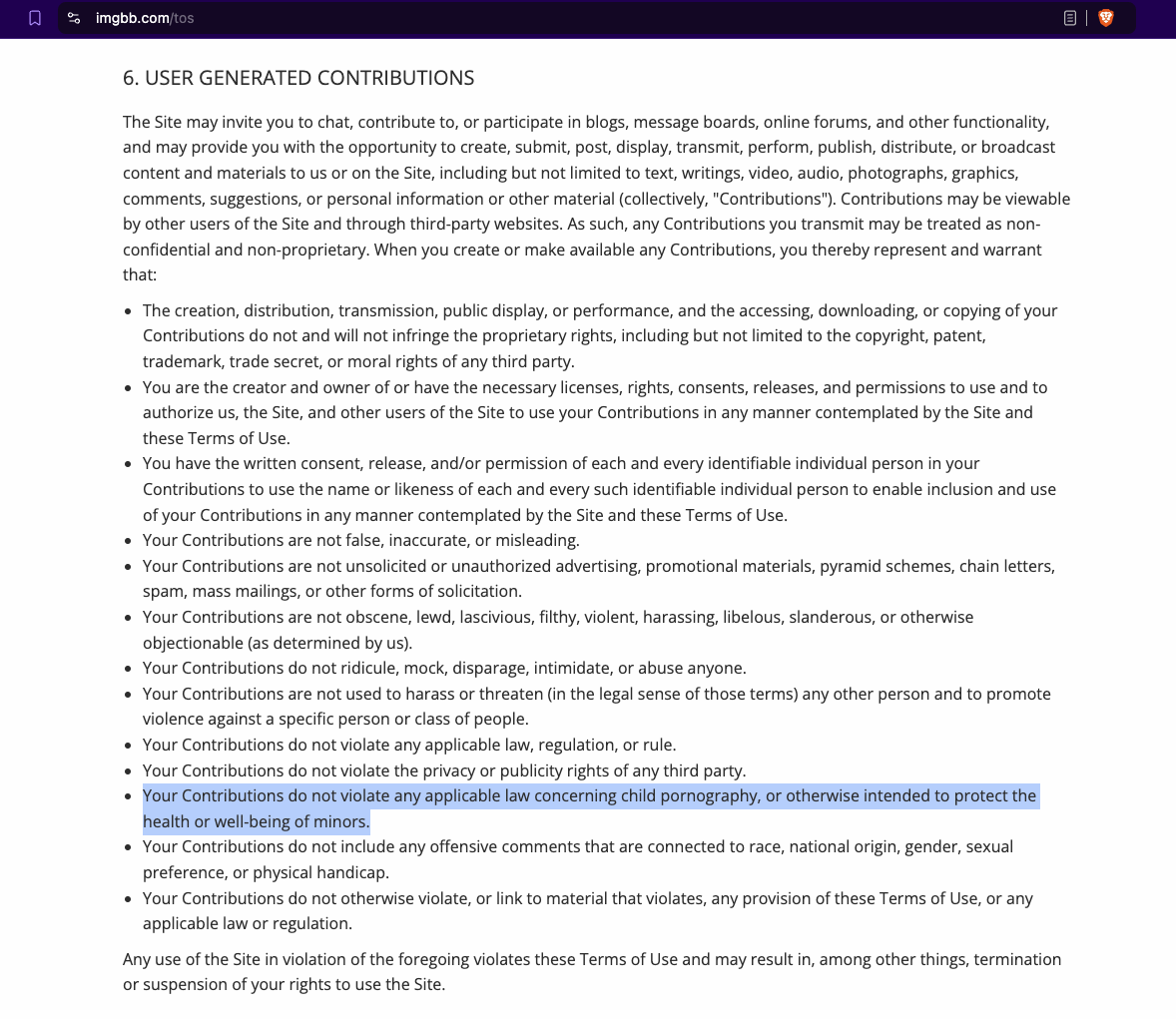

imgbb.com’s Terms of Service appear to prohibit “child pornography”

imgbb.com’s public terms of service (https://imgbb.com/tos) state: “When you create or make available any Contributions, you thereby represent and warrant that: [...] Your Contributions do not violate any applicable law concerning child pornography, or otherwise intended to protect the health or well-being of minors.”

Source: https://imgbb.com/tos

It is unclear what enforcement - if any - imgbb.com has of its Terms of Service with regards to anonymously uploaded child pornography on its platform.

imgbb.com and ibb.co appear to be financially supported by digital advertising revenues

When websites or media publishers allow for programmatic digital ads to be hosted on their webpages, these publishers receive a financial revenue share from ad tech platforms who help place ads on their pages. For example, the websites cnn.com and foxnews.com host digital ads from ad tech vendors Google, Amazon, Microsoft, Yahoo, and others.

When a digital ad is programmatically served on cnn.com and foxnews.com, the ad tech platforms share a percentage of the revenues with these media publishers.

Similarly, it appears that imgbb.com and ibb.co have hosted digital ads on their pages from 2017 to 2025. These ads appear to have been placed by different digital ad platforms, including Google, Amazon, Microsoft, Criteo, Outbrain, TripleLift, Pubmatic, Zeta Global, Infillion, Equativ (f/k/a Smartadserver), and others.

imgbb.com and ibb.co would potentially receive revenue from these vendors (directly or indirectly) for the ads hosted on the website.

Empirical Observations

Major brands may be inadvertently funding a website that is known to host Child Sexual Abuse Materials

As mentioned above, it appears that imgbb.com and ibb.co are at least partially funded by digital advertising revenues. The website has been confirmed to host Child Sexual Abuse Materials (CSAM) by NCMEC in 2021, 2022, and 2023. Furthermore, Adalytics accidentally and unintentionally came across an instance where digital ads were served directly adjacent to putative CSAM that was archived in November 2024.

Thus it is possible that digital advertisers whose ads appeared on imgbb.com and ibb.co may have inadvertently helped finance the distribution of CSAM.

Important methodological note and disclaimer: This report does not include any screenshots or references to the specific imgbb.com or ibb.co page URLs which were confirmed to, or may potentially, host CSAM. Adalytics has already reported those specific examples to the FBI, DHS HSI, NCMEC, and C3P. This report will only show examples of major brands’ ads appearing next to adult explicit content materials, and those examples will also be redacted to avoid showing genitalia.

For example, on September 19th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, from an IP address in Italy. The bot screenshotted the specific “ibb.co” page, which appears to show a woman masturbating. Whilst the bot was screenshotting the page, it was served a digital ad by Google’s Display & Video 360 (DV360), a software platform used by advertisers to purchase ad placements. The specific ad was for the United States Department of Homeland Security (DHS) “Blue Campaign” (dhs.gov/blue-campaign). This means that the United States government may have inadvertently helped finance a website which is known to host and distribute CSAM, via its ad spend in Google DV360.

Screenshot showing a United States government ad for the Department of Homeland Security (DHS) being served to a bot whilst the bot was crawling and screenshotting “ibb.co”. Source: https://urlscan.io/result/739796a3-8641-4616-ac01-f691c50d9cd4/#transactions.

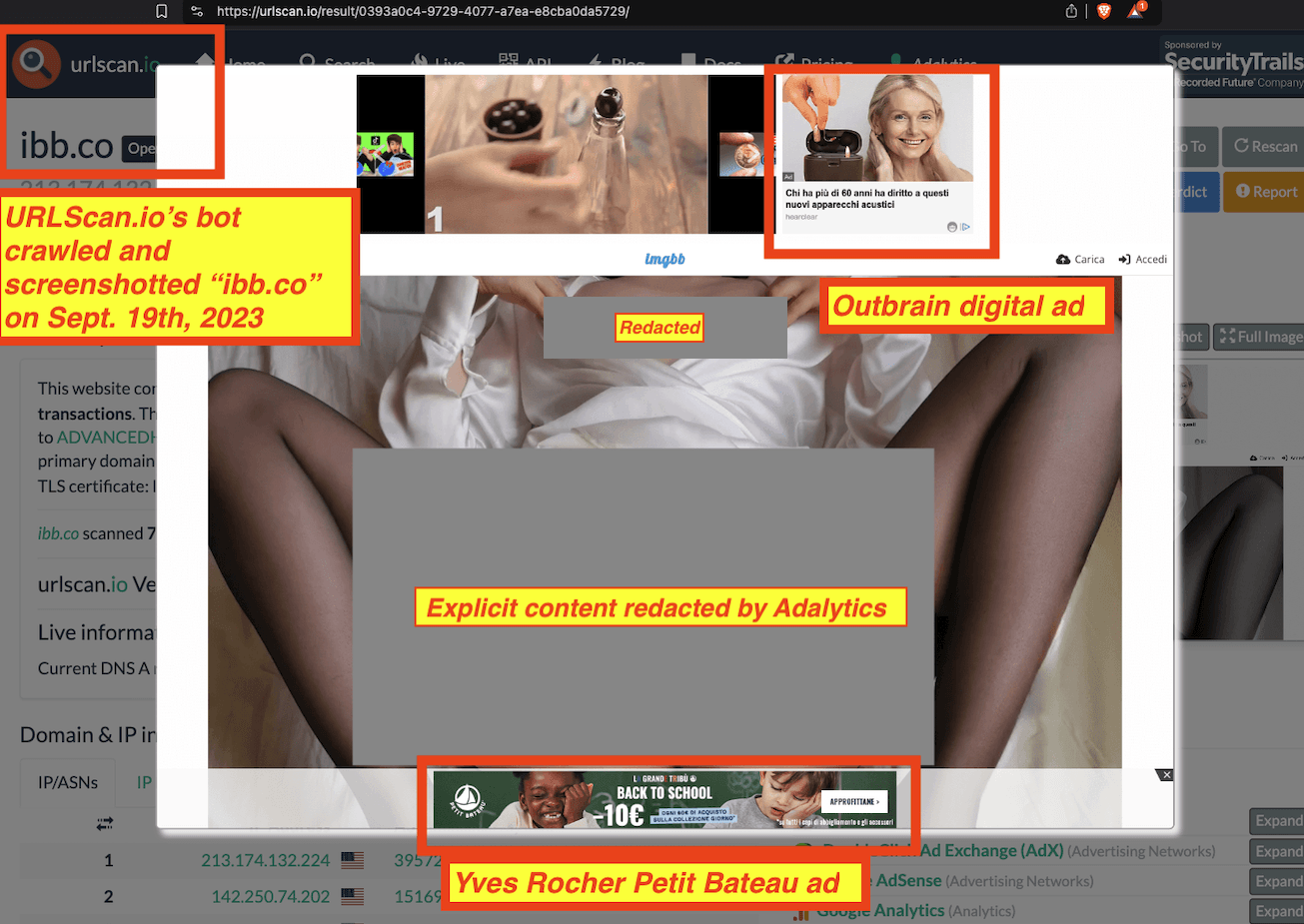

As another example, on September 19th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs. Whilst the bot was screenshotting the page, it was served two digital ads, including one for Yves Rocher. This could possibly indicate that Yves Rocher may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/0393a0c4-9729-4077-a7ea-e8cba0da5729/

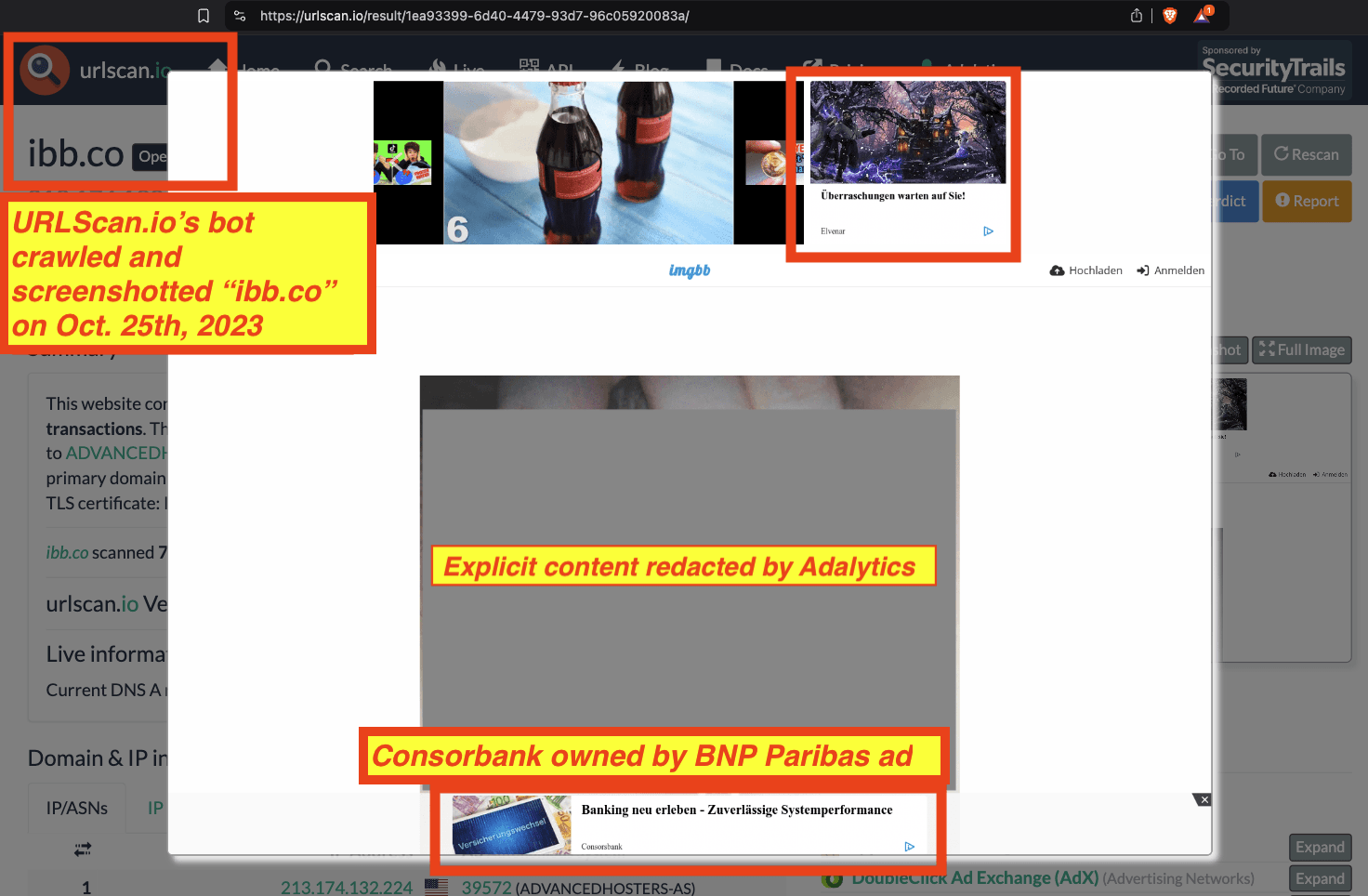

As another example, on October 25th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which has a photo of a woman's exposed genitalia. Whilst the bot was screenshotting the page, it was served a digital ad for Consorsbank (owned by BNP Paribas). This could possibly indicate BNP Paribas may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/1ea93399-6d40-4479-93d7-96c05920083a/

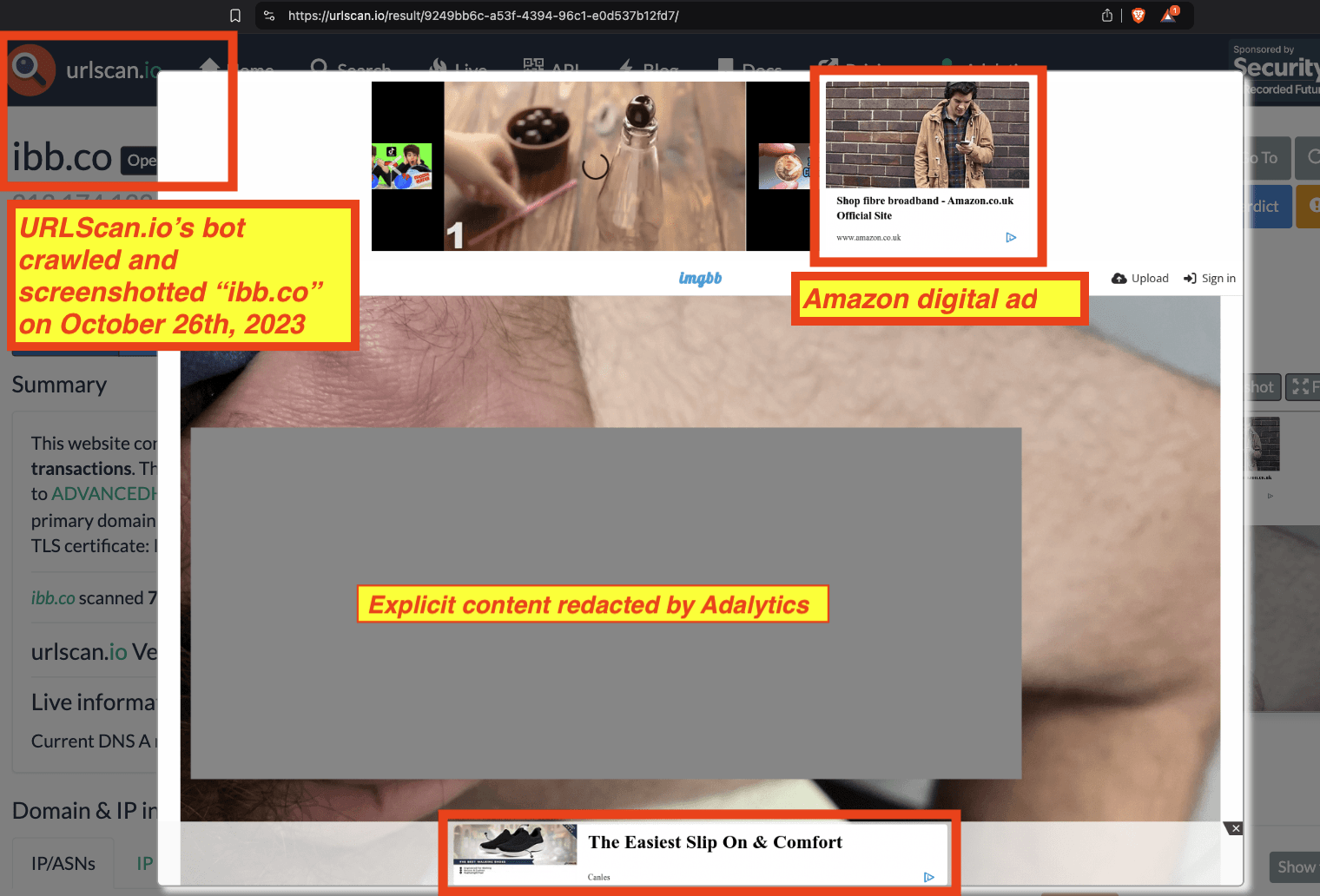

As another example, on October 26th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which has an explicit photo of a man’s erect penis. Whilst the bot was screenshotting the page, it was served a digital ad for Amazon. This could possibly indicate that Amazon may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/9249bb6c-a53f-4394-96c1-e0d537b12fd7/

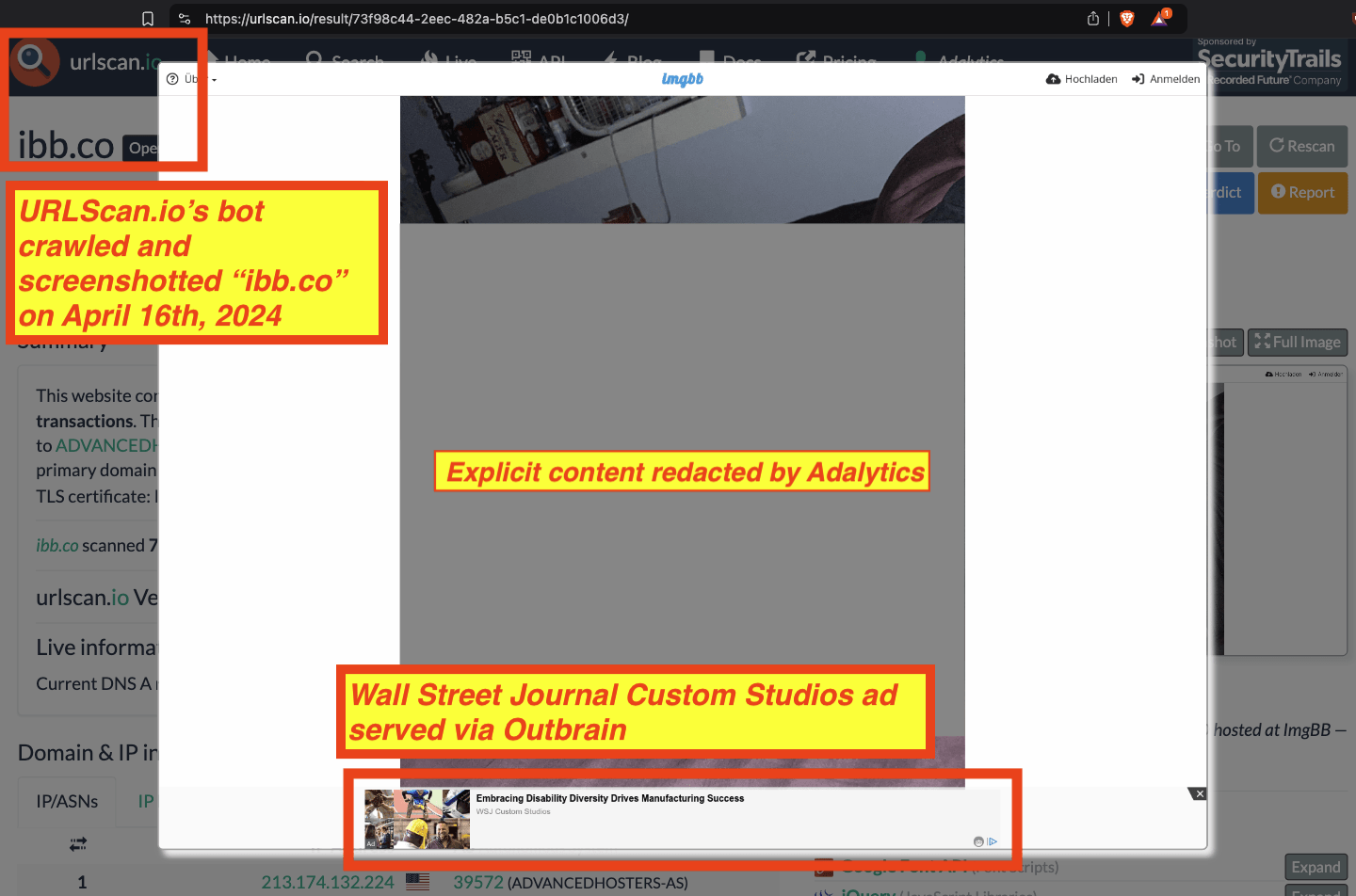

As another example, on April 16th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which has an explicit photo of a female’s rear end. Whilst the bot was screenshotting the page, it was served a digital ad for Wall Street Journal Custom Studios. This could possibly indicate that Wall Street Journal may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/73f98c44-2eec-482a-b5c1-de0b1c1006d3/

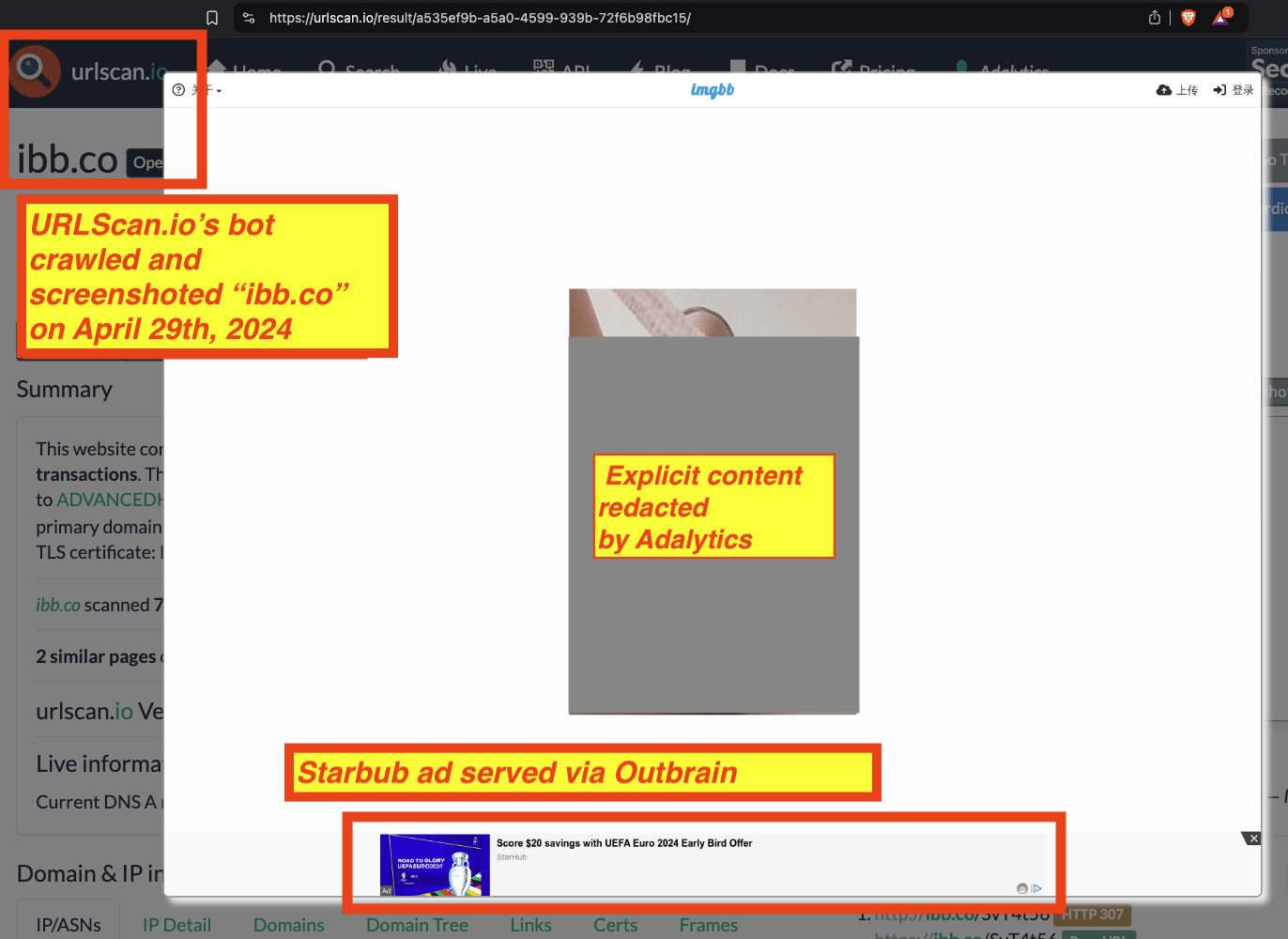

As another example, on April 19th, 2024, URLScan.io’s bot crawled one of the “ibb.co” page URLs. Whilst the bot was screenshotting the page, it was served a digital ad for Starhub by Outbrain. This means that Starhub may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/a535ef9b-a5a0-4599-939b-72f6b98fbc15/

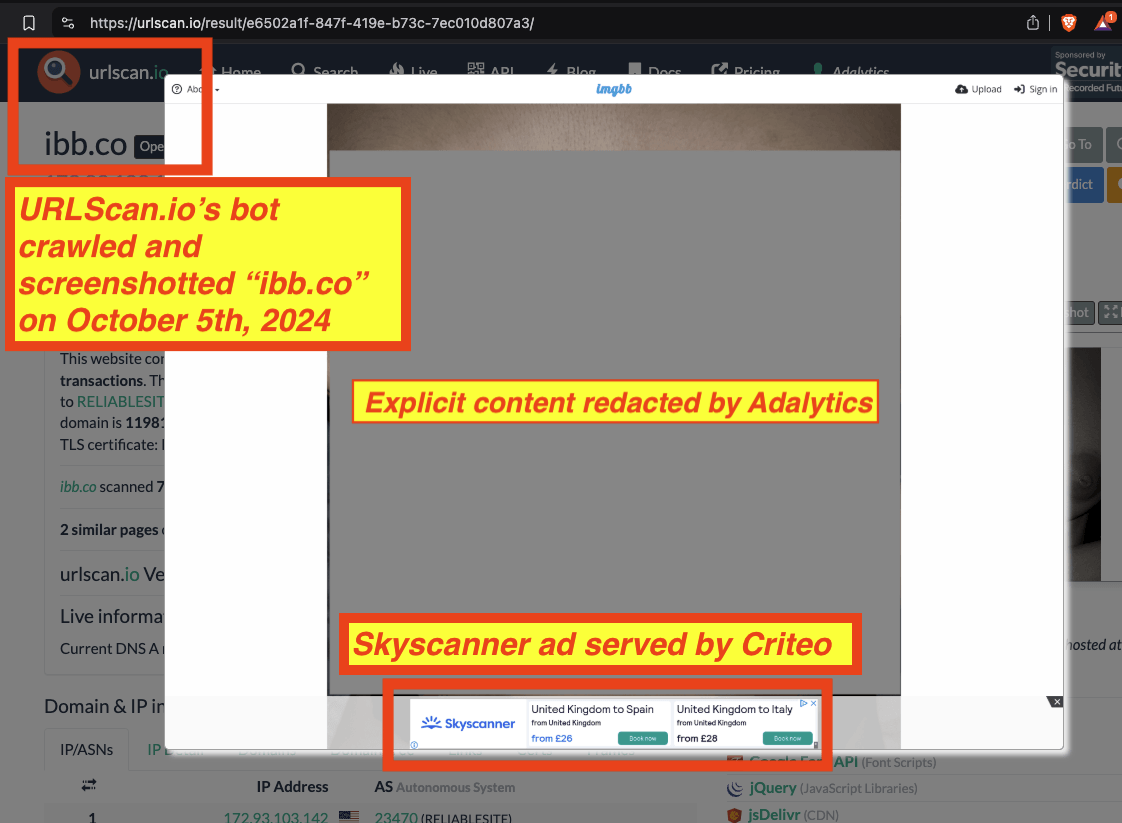

As another example, on October 5th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which shows a woman’s exposed breasts. Whilst the bot was screenshotting the page, it was served a digital ad for Skyscanner via Criteo. This could possibly indicate that Skyscanner may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/e6502a1f-847f-419e-b73c-7ec010d807a3/

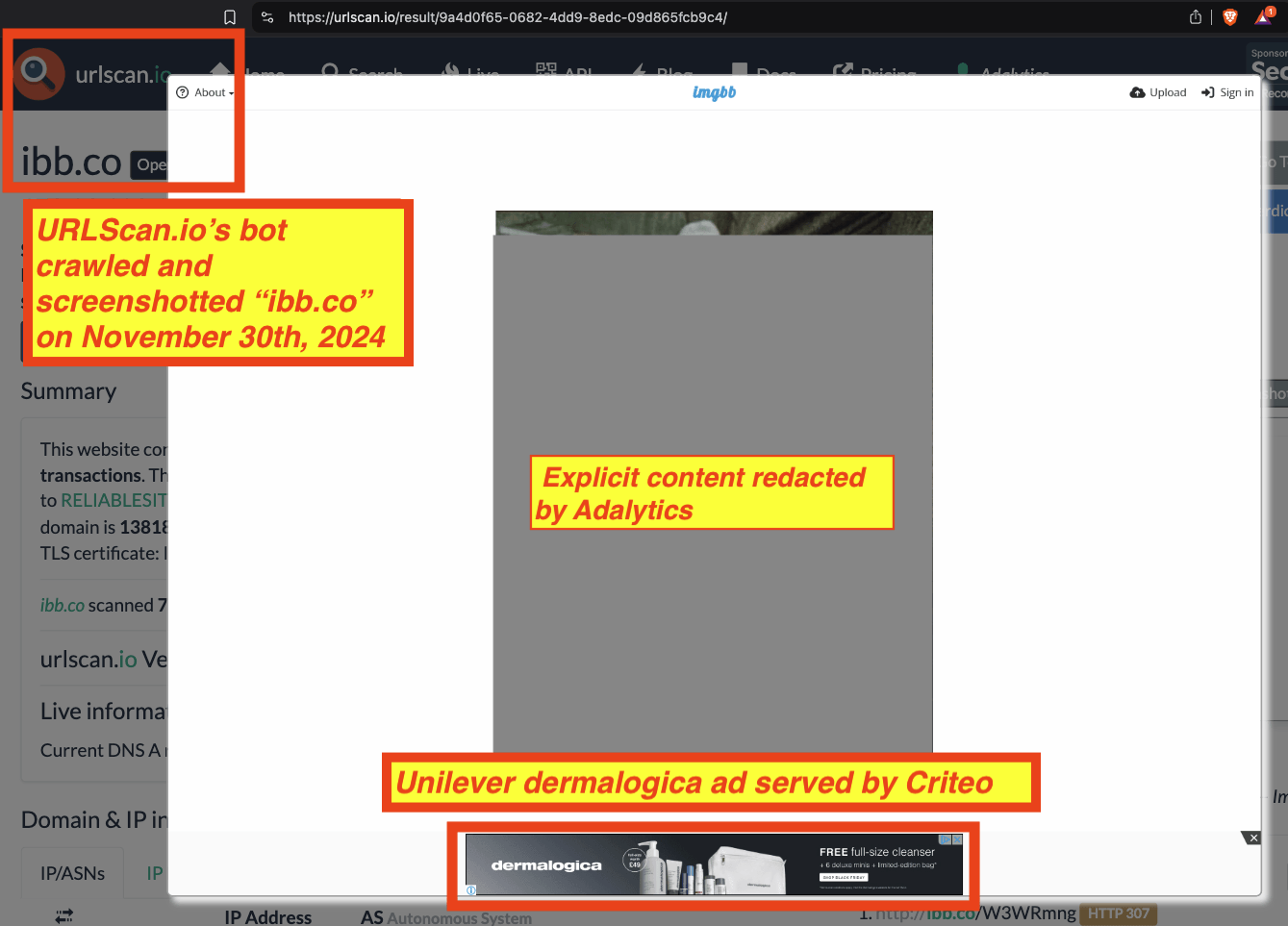

As another example, on November 30th, 2024, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which shows a man masturbating. Whilst the bot was screenshotting the page, it was served a digital ad for Unilever Dermatologica via Criteo. This means that Unilever may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/9a4d0f65-0682-4dd9-8edc-09d865fcb9c4/

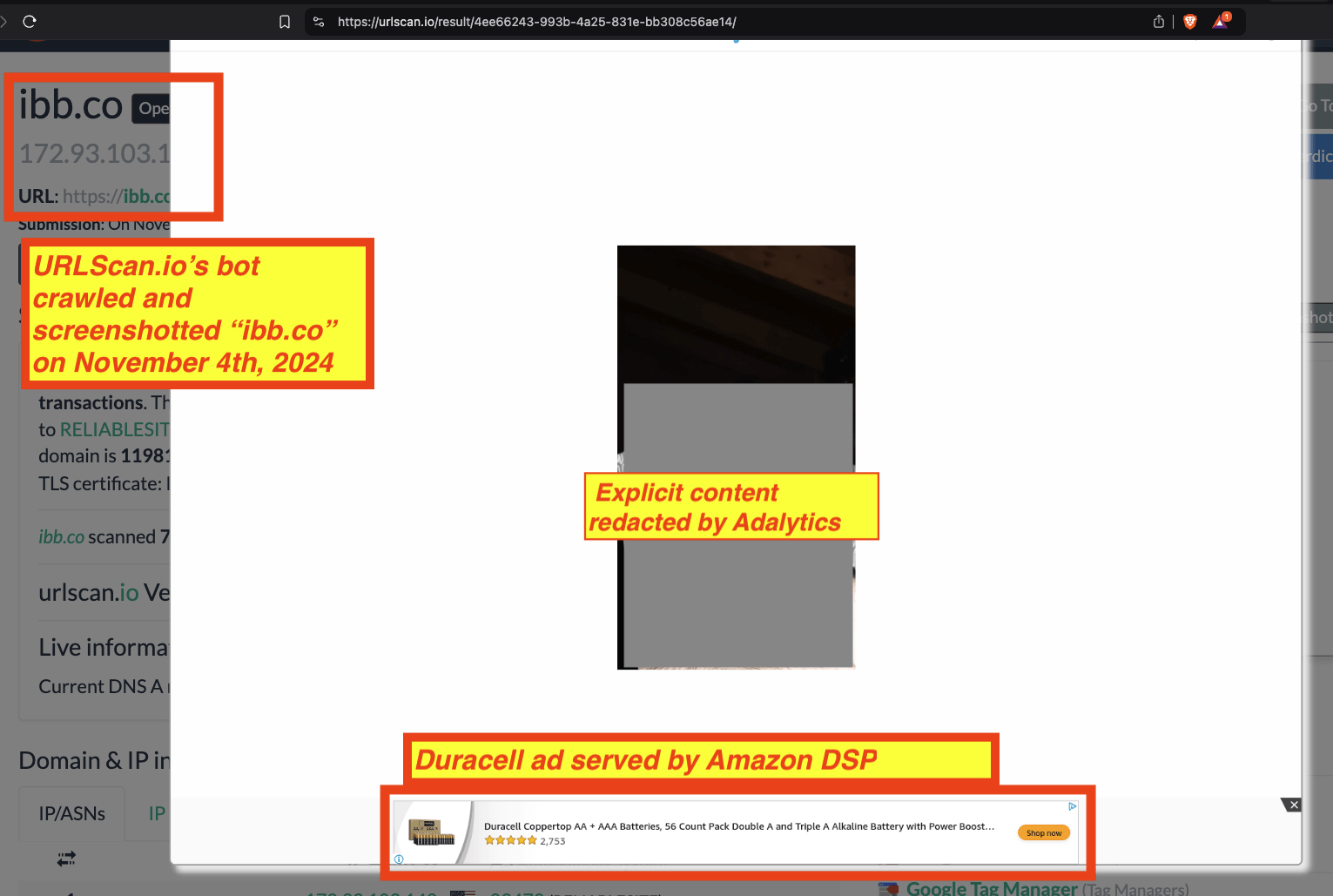

As another example, on November 4th, 2024, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which shows a man masturbating. Whilst the bot was screenshotting the page, it was served a digital ad for Duracell batteries by Amazon's demand side platform. This means that Duracell may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/4ee66243-993b-4a25-831e-bb308c56ae14/#summary

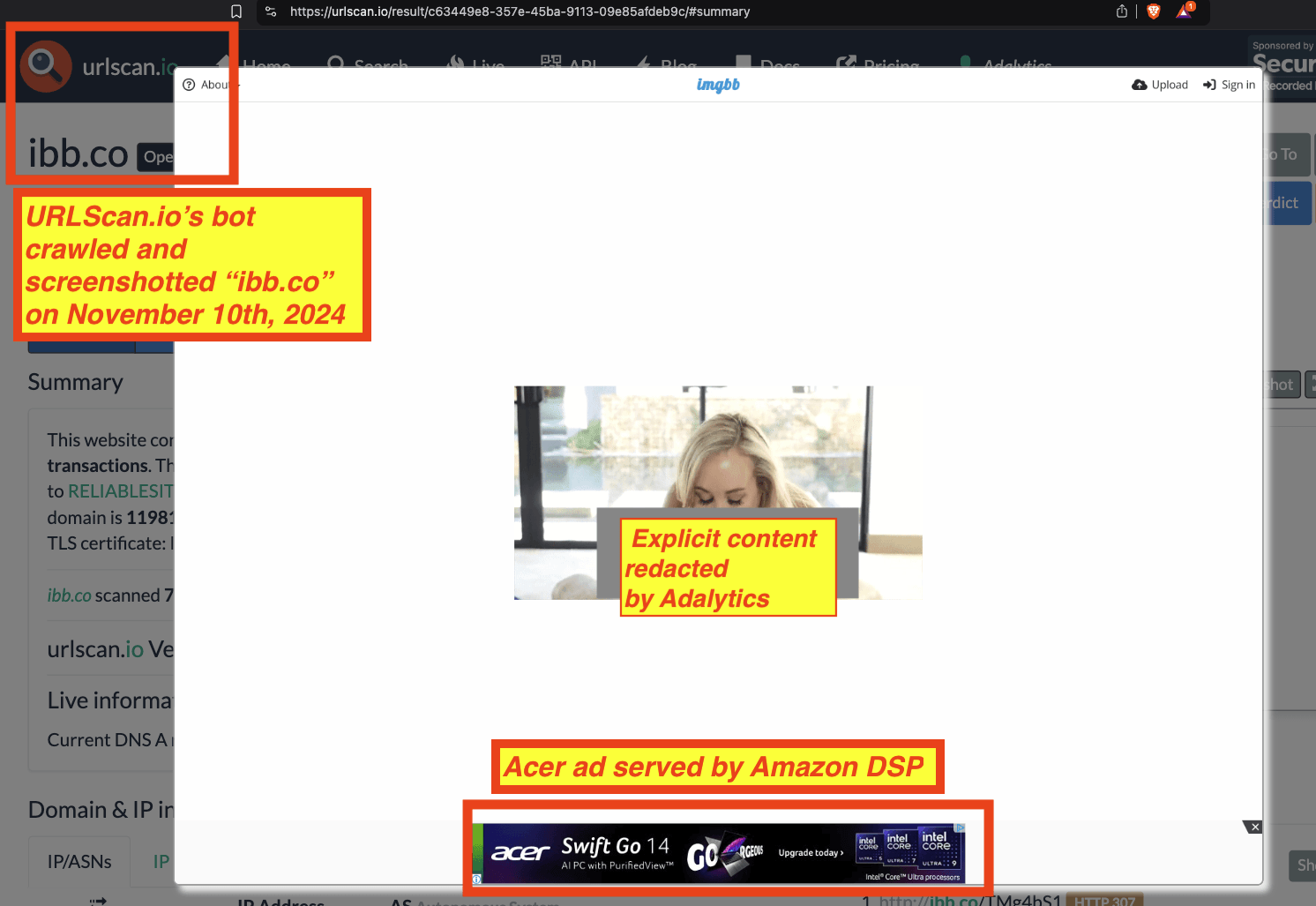

As another example, on November 10th, 2024, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which shows a woman and man engaging in oral sex. Whilst the bot was screenshotting the page, it was served a digital ad for Acer by Amazon. This could possibly indicate that Acer may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/c63449e8-357e-45ba-9113-09e85afdeb9c/#summary

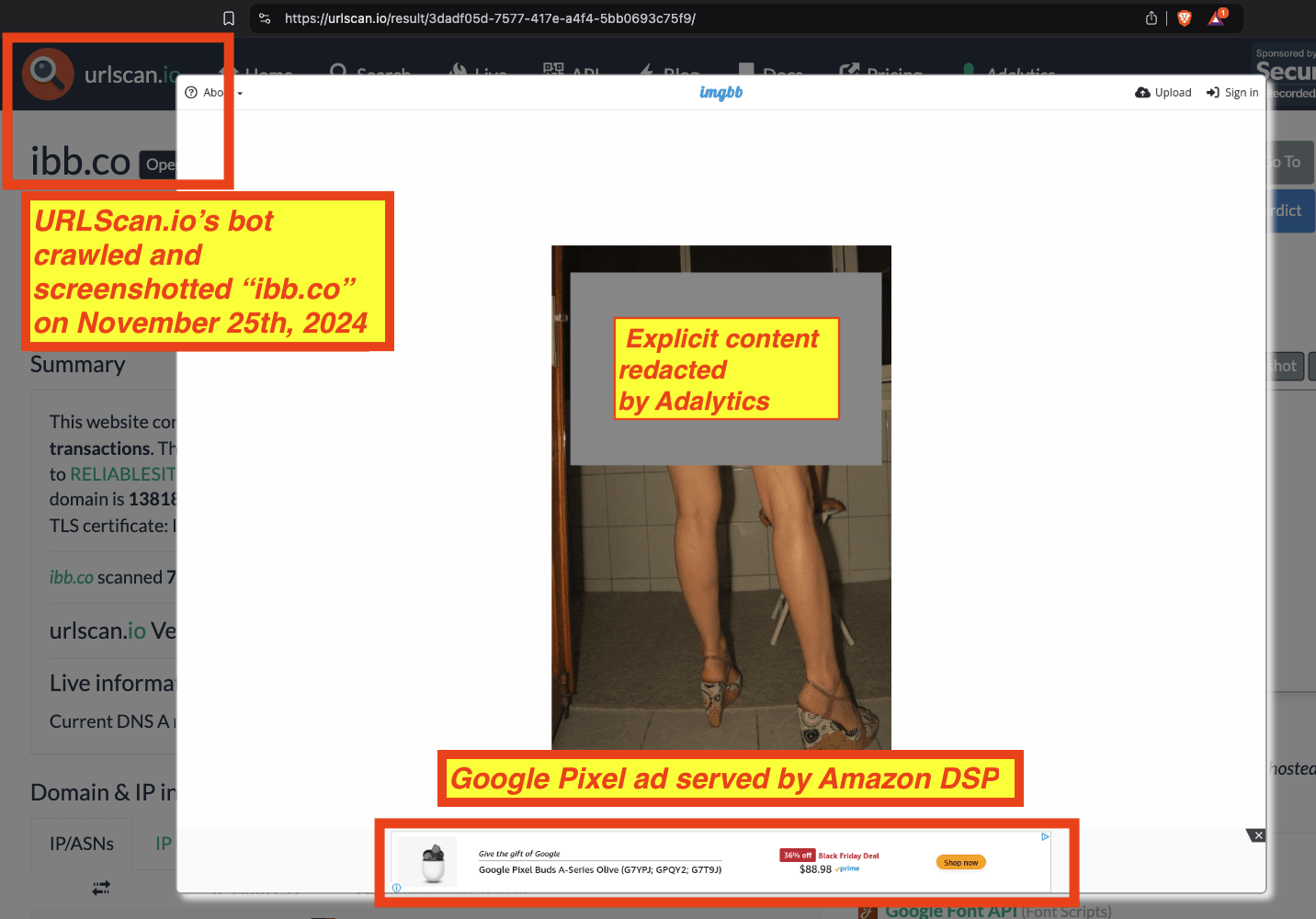

As another example, on November 25th, 2024, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which shows a woman’s genitalia. Whilst the bot was screenshotting the page, it was served a digital ad for Google Pixel headphones via Amazon DSP. This means that Google may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/3dadf05d-7577-417e-a4f4-5bb0693c75f9/

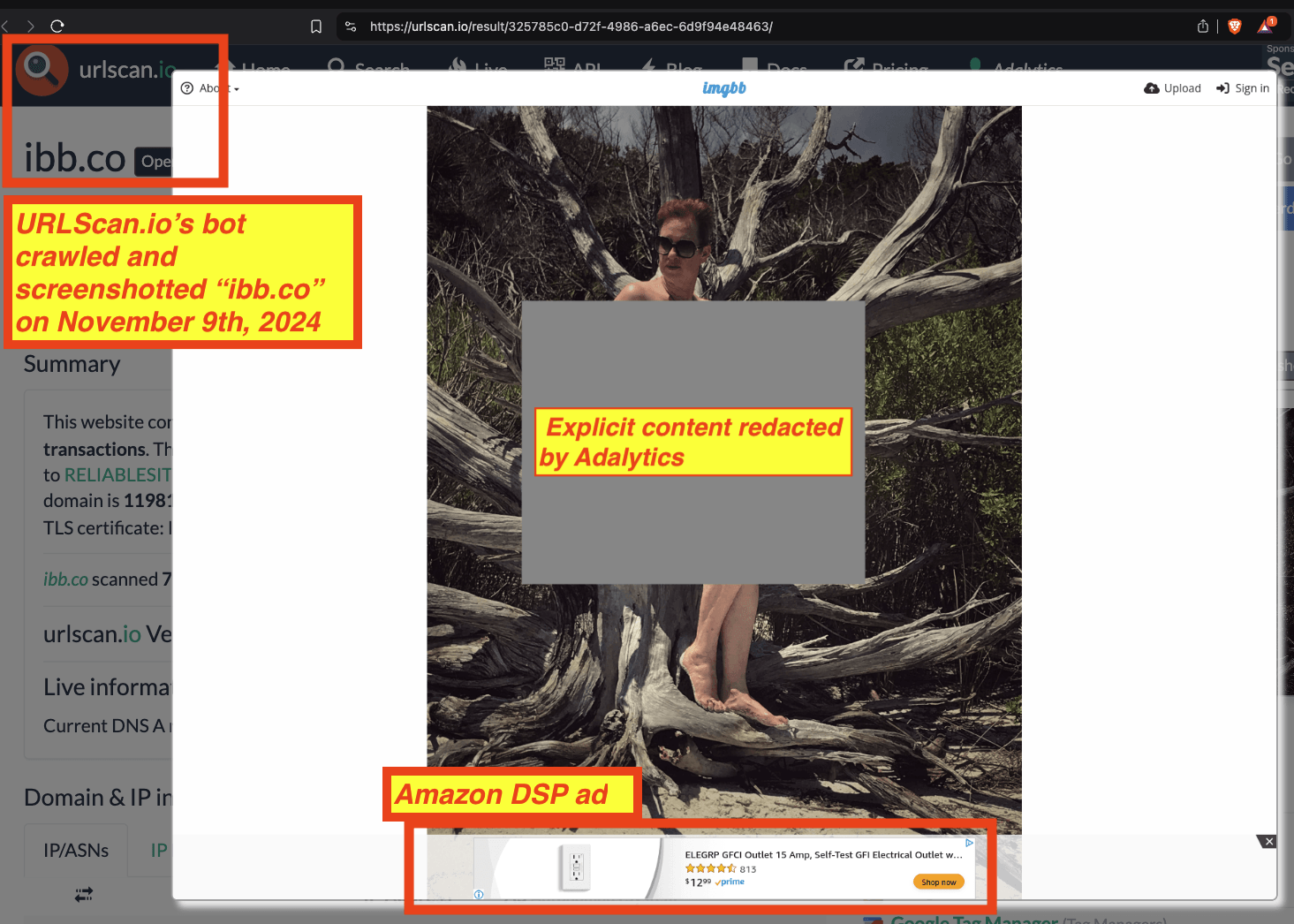

As another example, on November 9th, 2024, URLScan.io’s bot crawled one of the “ibb.co” page URLs, which shows a woman's breasts and genitals. Whilst the bot was screenshotting the page, it was served a digital ad for Elegrp via Amazon. This could possibly indicate that Elegrp may have inadvertently helped finance a website which is known to host and distribute CSAM, via its digital ad spend.

Source: https://urlscan.io/result/325785c0-d72f-4986-a6ec-6d9f94e48463/

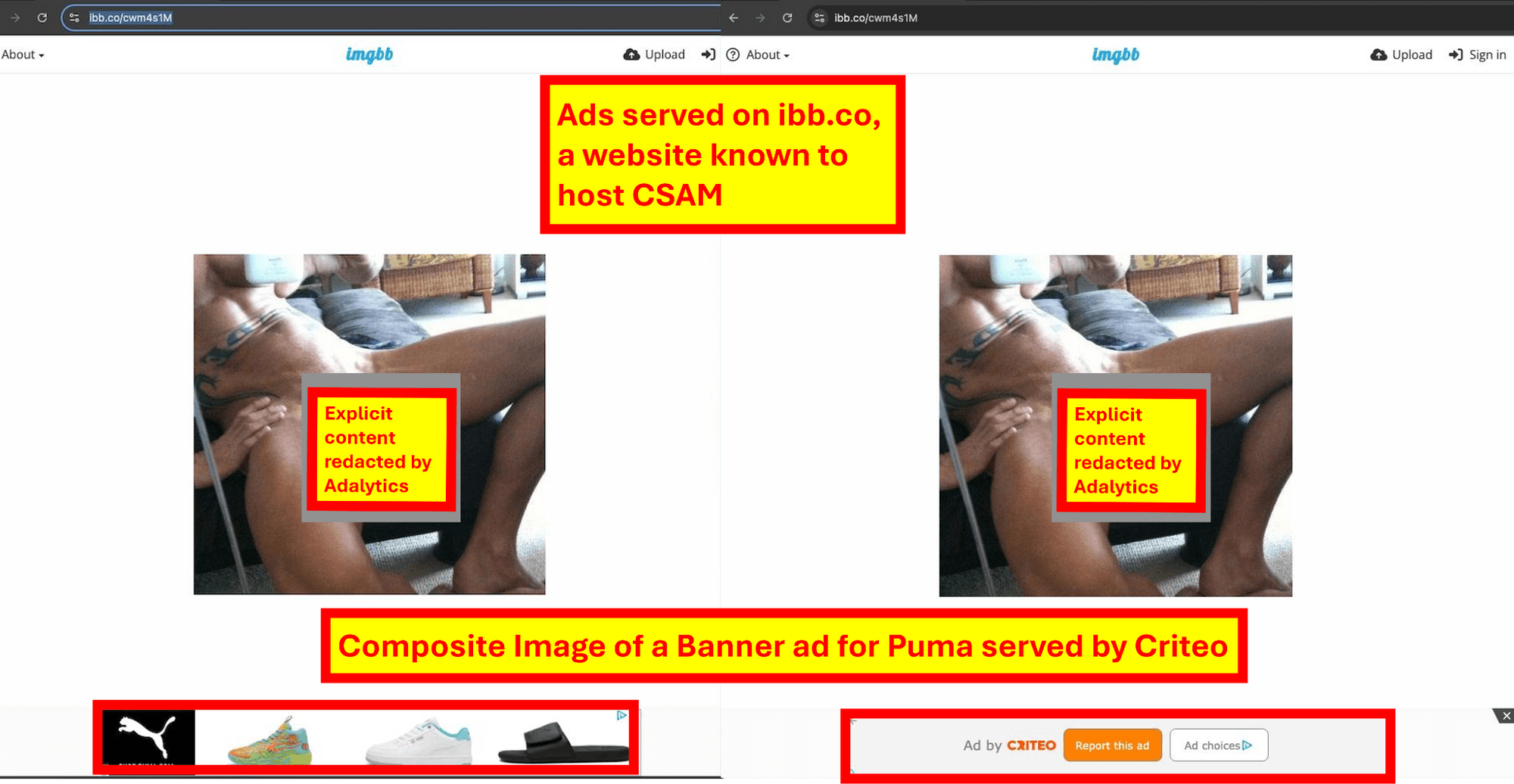

Many other major brands and advertisers were seen having their ads delivered on explicit ibb.co pages. These included the Department of Homeland Security, Tom Brady's TB12, MasterCard, Starbucks, PepsiCo (Gatorade), Mars candy and pet foods, McAfee, Honda, Uber Eats, Google Pixel, Amazon Prime, Whole Foods, DenTek, Puma, Fanduel, Sony Interactive Entertainment (Audeze), Thrive Market, Intuit (Mailchimp), Unilever, Lay's potato chips, Adobe, HP, L'Oreal, Acer, Duracell, Kenvue, Sanofi Consumer Healthcare NA, SC Johnson (OFF! Insect repellent), Audible, AMC Networks' Acorn TV, Medtronic, Reckitt Benckiser (mucinex, vanish), Leukemia & Lymphoma Society, Nestle, Adidas, Domino's Pizza, Hill's Science Pet Food, Clorox, Simplisafe, Galderma Cetaphil, GoDaddy, Blink security cameras, Paramount+, HBO Max, Prestige Consumer Healthcare (Diabetic Tussin), Dyson, Tree Hut, Colgate, Hill’s Pet Nutrition, Sling TV, Progressive Leasing, Panasonic (ARC5 Palm-Sized Electric Razor), Sleep Number, Glad Trash Bags, savethechildren.org, WayFair, SanDisk, Allergan, Sennheiser, Raymour & Flanigan furniture, Monday.com, Teamson Home, Brita, Virtue Labs smooth shampoo, Filorga, Delta Faucet, Wall Street Journal, Mansion Global, Yves Rocher, Allianz, Beiersdorf, and Skyscanner.

Major ad tech vendors such as Amazon, Google, Microsoft, TripleLift, Pubmatic, Equativ, Zeta Global, Infillion, Quantcast, Sharethrough, Outbrain, Criteo, Nexxen and others appear to have served digital ads on imgbb.com and ibb.co

Several major ad tech vendors were observed transacting brands’ ads on explicit or pornographic ibb.co and imgbb.com page URLs. These vendors may have inadvertently directed their customers’ ad dollars to a website known to host Child Sexual Abuse Material, thus funding its operations. As a reminder, this report does not directly show or reference any confirmed or potential CSAM, as intentionally viewing CSAM is a crime under federal law.

These included vendors such as Amazon, Google, Microsoft, TripleLift, Pubmatic, Equativ, Zeta Global, Infillion (f/k/a Media Math), Quantcast, Outbrain, Criteo, Nexxen, and others.

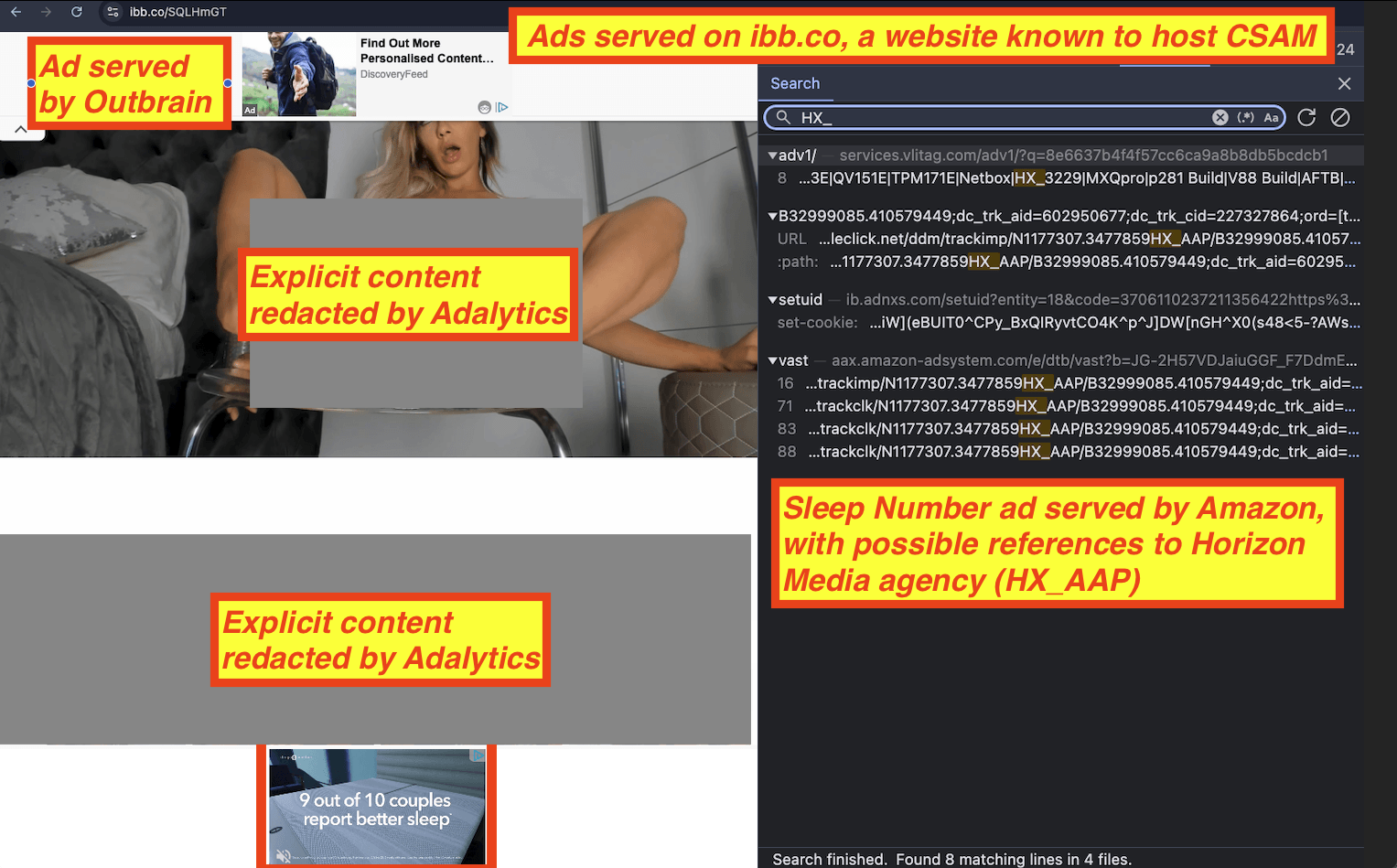

For example, in the screenshot below one can see an instance where Amazon served an ad for Sleep Number on an ibb.co page showing a woman masturbating. The source code of the Sleep Number ad includes references to “HX”, which may be Horizon Media. There is also an ad served by Outbrain visible on the page.

Screenshot of a Sleep Number ad served by Amazon, and an Outbrain transacted ad.

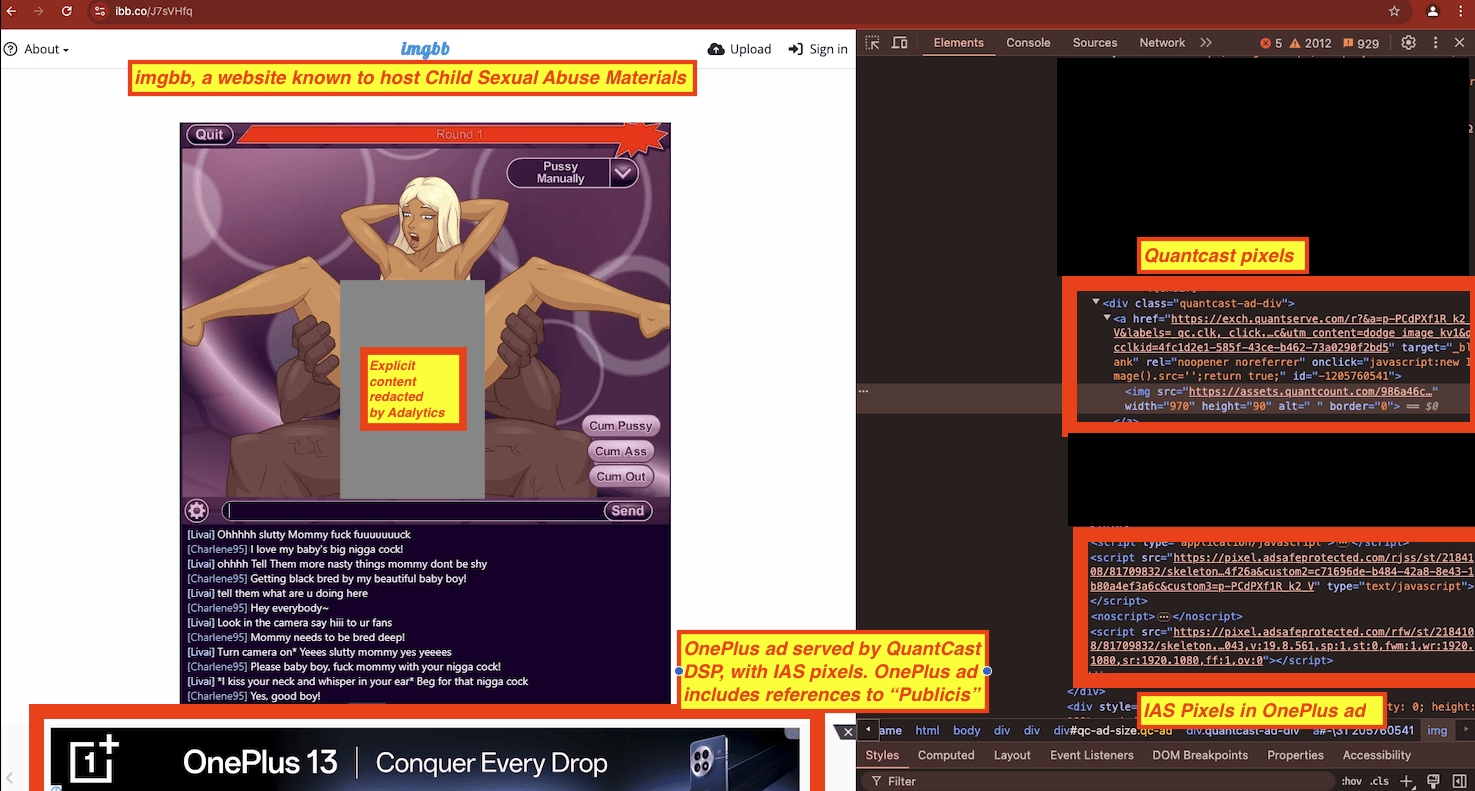

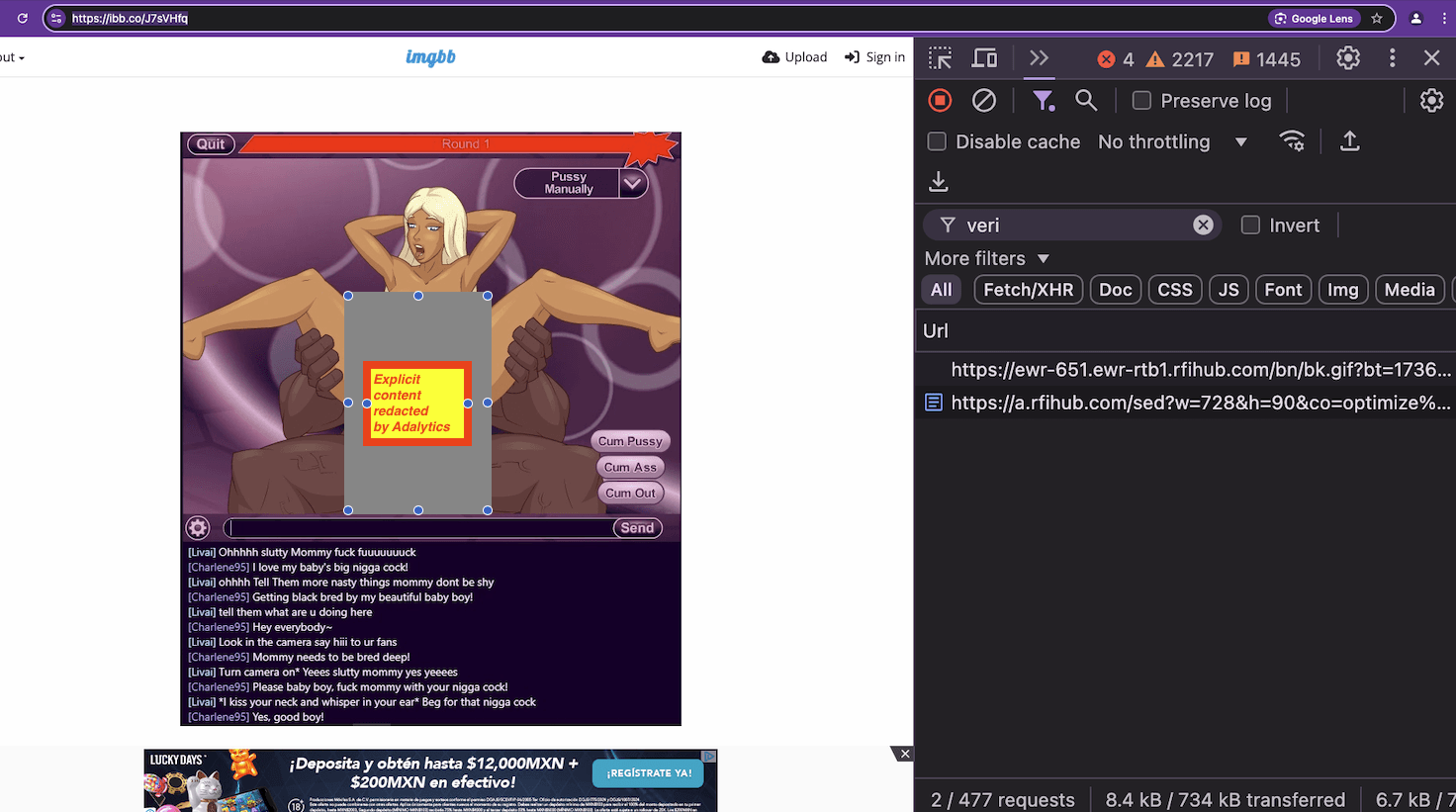

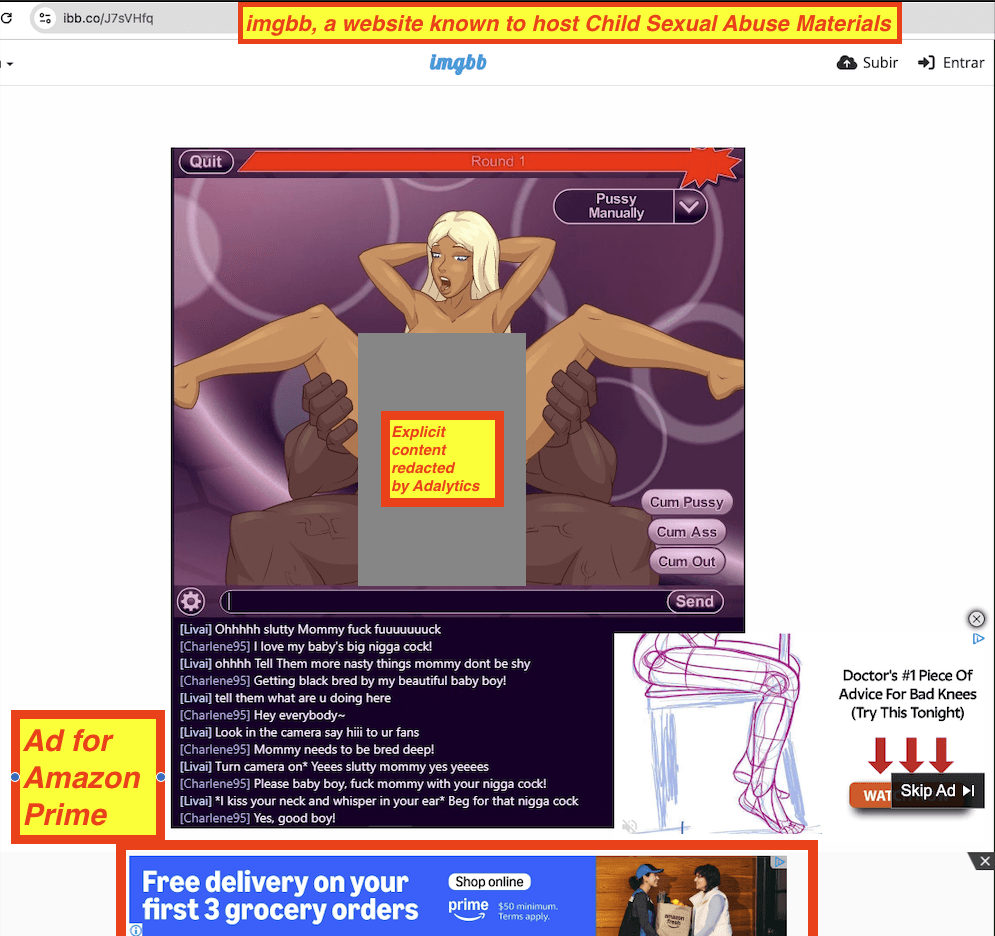

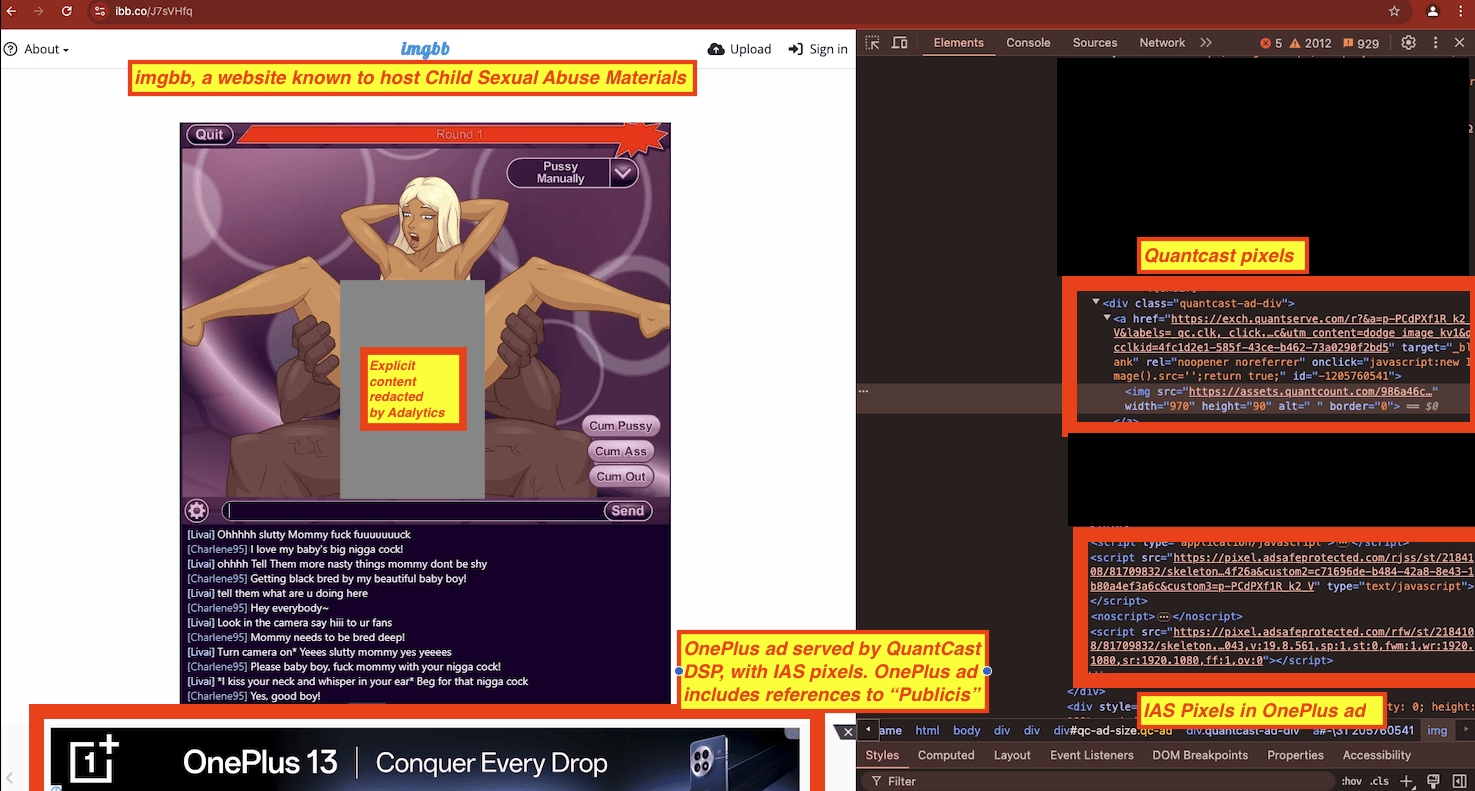

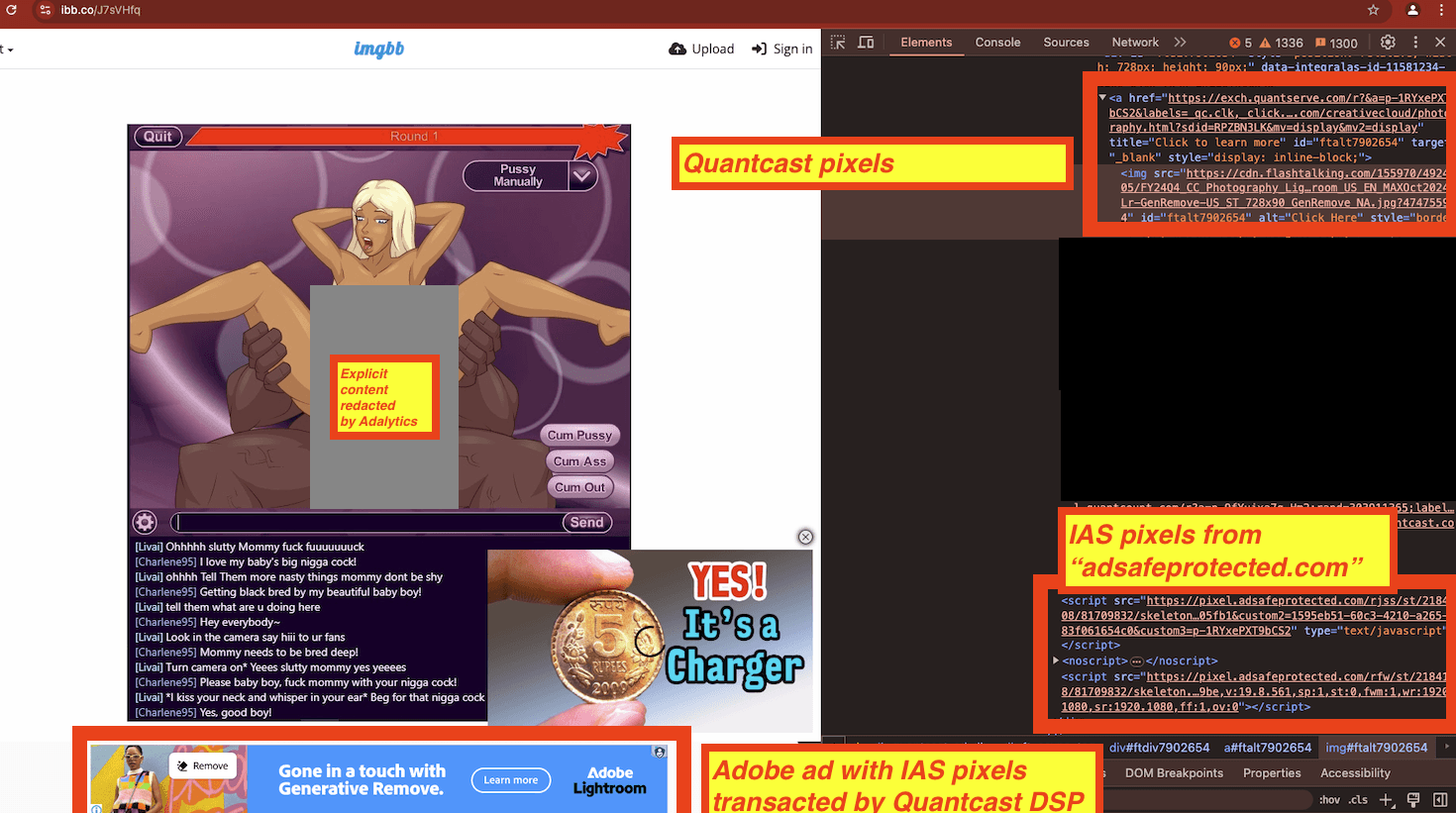

As another example, in the screenshot below one can see an ad for OnePlus served on an ibb.co page which appears to contain cartoon graphics of animated characters engaged in sexual intercourse. The source code of the ad suggests that it may have been transacted by Quantcast DSP, and the ad source code includes references to “Publicis”. Furthermore, the source code of the ad contains references to “adsafeprotected.com”, a domain used by IAS to load its pixels or tags for measurement purposes.

Screenshot showing a OnePlus 13 ad served with IAS pixels in the Chrome developer tools panel. The source code of the OnePlus 13 ad includes references to “Publicis”.
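The kind of check described above, looking for a verification vendor's domain among the requests made by an ad, can be sketched in a few lines of Python. This is only an illustrative sketch and not the method used in this study: the function name, the sample request URLs, and the mapping are hypothetical. “adsafeprotected.com” is the IAS domain named in this report, while “doubleverify.com” is an assumption added for illustration. As noted elsewhere in this report, finding a vendor's tag does not show whether it was used for blocking, measurement, or monitoring.

```python
from urllib.parse import urlparse

# Hypothetical mapping of registrable domains to verification vendors.
# "adsafeprotected.com" is named in this report as an IAS domain;
# "doubleverify.com" is an assumption made for illustration only.
VENDOR_DOMAINS = {
    "adsafeprotected.com": "IAS",
    "doubleverify.com": "DoubleVerify",
}


def vendors_in_requests(request_urls):
    """Return the set of vendors whose domains appear among the request hostnames."""
    found = set()
    for url in request_urls:
        # hostname is None for strings that are not absolute URLs.
        host = (urlparse(url).hostname or "").lower()
        for domain, vendor in VENDOR_DOMAINS.items():
            # Match the domain itself or any subdomain of it, but not
            # unrelated hosts that merely end with the same characters.
            if host == domain or host.endswith("." + domain):
                found.add(vendor)
    return found


# Hypothetical request URLs, as might be copied from a browser's network panel.
sample_requests = [
    "https://pixel.adsafeprotected.com/example?id=1",
    "https://cdn.example.com/creative.js",
]
print(vendors_in_requests(sample_requests))  # prints {'IAS'}
```

Matching on the hostname rather than on the raw URL string avoids false positives where a vendor's domain merely appears inside a query parameter of an unrelated request.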

As another example, on September 19th, 2023, URLScan.io’s bot crawled one of the “ibb.co” page URLs, from an IP address in Italy.

The bot screenshotted the specific “ibb.co” page, which appears to show a woman masturbating. Whilst the bot was screenshotting the page, it was served a digital ad by Google’s Display & Video 360 (DV360), a software platform used by advertisers to purchase ad placements. The specific ad was for the United States Department of Homeland Security (DHS) “Blue Campaign” (dhs.gov/blue-campaign).

Screenshot showing a United States government ad for the Department of Homeland Security (DHS) being served to a bot whilst the bot was crawling and screenshotting “ibb.co”. Source: https://urlscan.io/result/739796a3-8641-4616-ac01-f691c50d9cd4/#transactions.

It is worth noting that Google AdX, Google Ad Manager, and DV360 do not appear to be actively transacting ads on imgbb.com or ibb.co as of January 2025, at least in the observational sample of data available in this research study.

Brand safety vendors IAS and DoubleVerify appear to be measuring ad delivery on imgbb.com and ibb.co

Multiple major advertisers reported to Adalytics that their brand safety vendors had marked 100% of measured ad impressions served on ibb.co and imgbb.com as “brand safe” and/or “brand suitable”. Independent data obtained from URLScan.io confirmed that some of these media buyers' ads had served on explicit, sexual content.

Integral Ad Science (IAS) and DoubleVerify (DV) are two companies which provide advertisers with digital ad measurement and monitoring tools. The vendors also provide “brand safety” products.

According to Amazon, “Brand safety in digital advertising is about protecting your brand’s reputation when you advertise. A primary brand safety risk in the context of online advertising is having your ad appear alongside inappropriate content, and audiences developing a negative opinion of your brand because of its association with that content. Brand safety risks are a possibility in programmatic advertising—since ads are bought and sold through automated processes, buyers can't predict exactly where your ad will appear. In a study conducted by Integral Ad Science, 56% of US digital media professionals surveyed consider programmatic advertising to be vulnerable to brand risk incidents.”

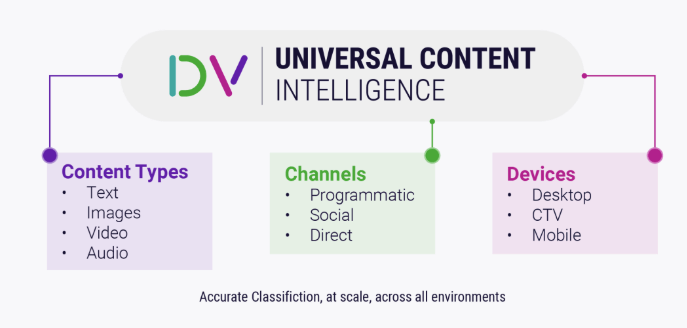

Some brand safety companies allege that some of their technology incorporates AI and/or analysis of visual media. For example, DoubleVerify asserts that: “DV Universal Content Intelligence (formerly known as DV Semantic Science), has evolved to include all key media types. To provide a holistic approach to content analysis and evaluation, DV Universal Content Intelligence looks at video, image, audio, speech, text and link elements. DV Universal Content Intelligence: DV’s AI-Powered Classification Engine. DV Universal Content Intelligence is an industry-leading classification engine that powers content categorization across various platforms. This sophisticated tool leverages AI and relies on DV’s robust and proprietary content policy to provide advertisers with accurate content evaluation, broad coverage and brand suitability protection at scale. DV Universal Content Intelligence takes a comprehensive approach to classify visual elements, audio and speech and text [...] Visual Elements: Leveraging Computer Vision (CV) models and Optical Character Recognition (OCR), the solution identifies objects and people within content. This meticulous visual analysis helps ensure that the visual components surrounding your ads are both suitable and consistent with your brand’s message and resonate with your audience.”

The ads of several brands whose ads were observed on imgbb.com or ibb.co appear to include references to or source code from Integral Ad Science (IAS) and DoubleVerify (DV).

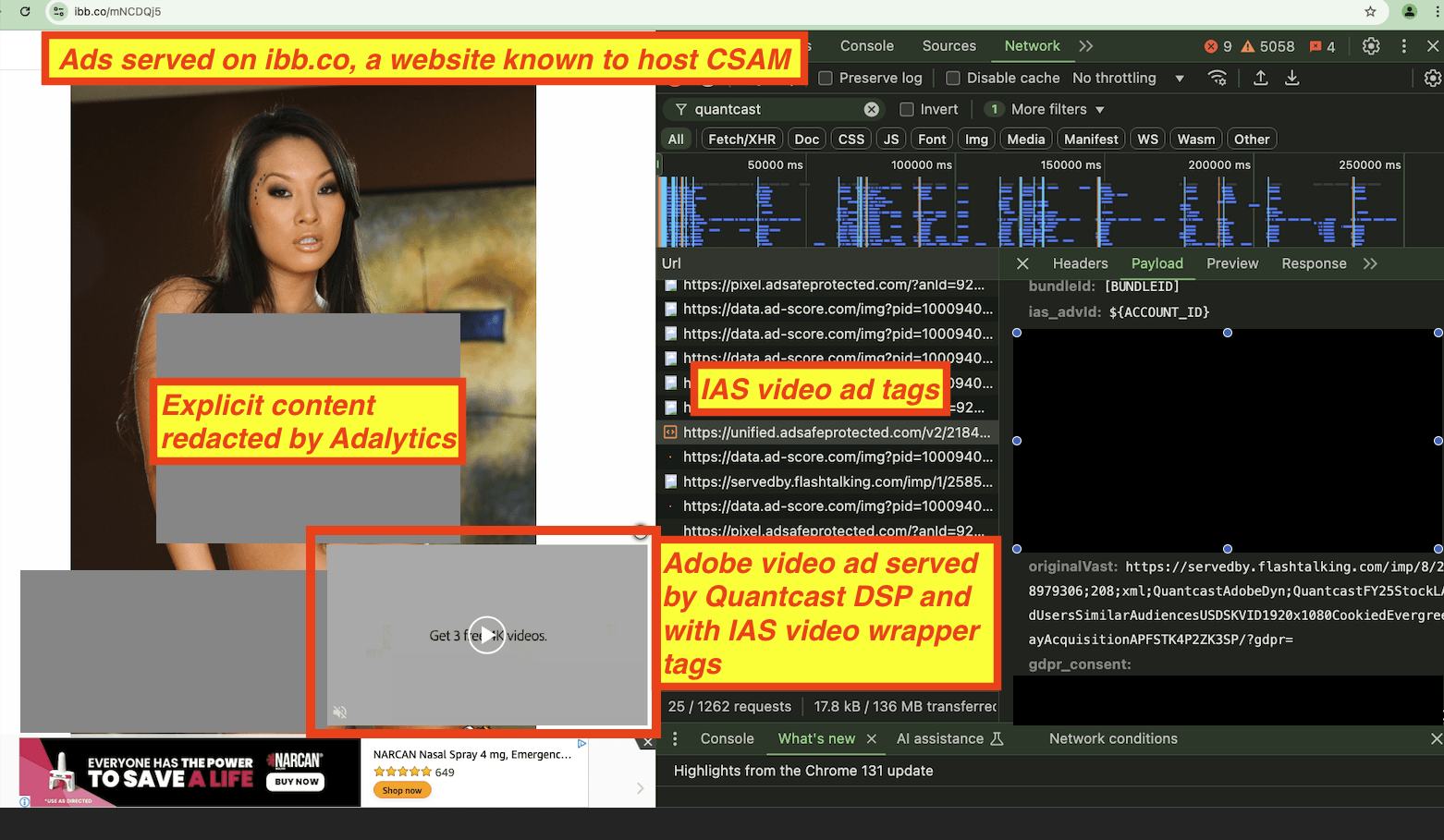

For example, in the screenshot below, one can see a video ad for Adobe that had IAS video ad related tags. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

The Adobe ad was served adjacent to an explicit image of an adult woman.

Screenshot showing an Adobe video ad served with IAS video tags in the Chrome developer tools panel.

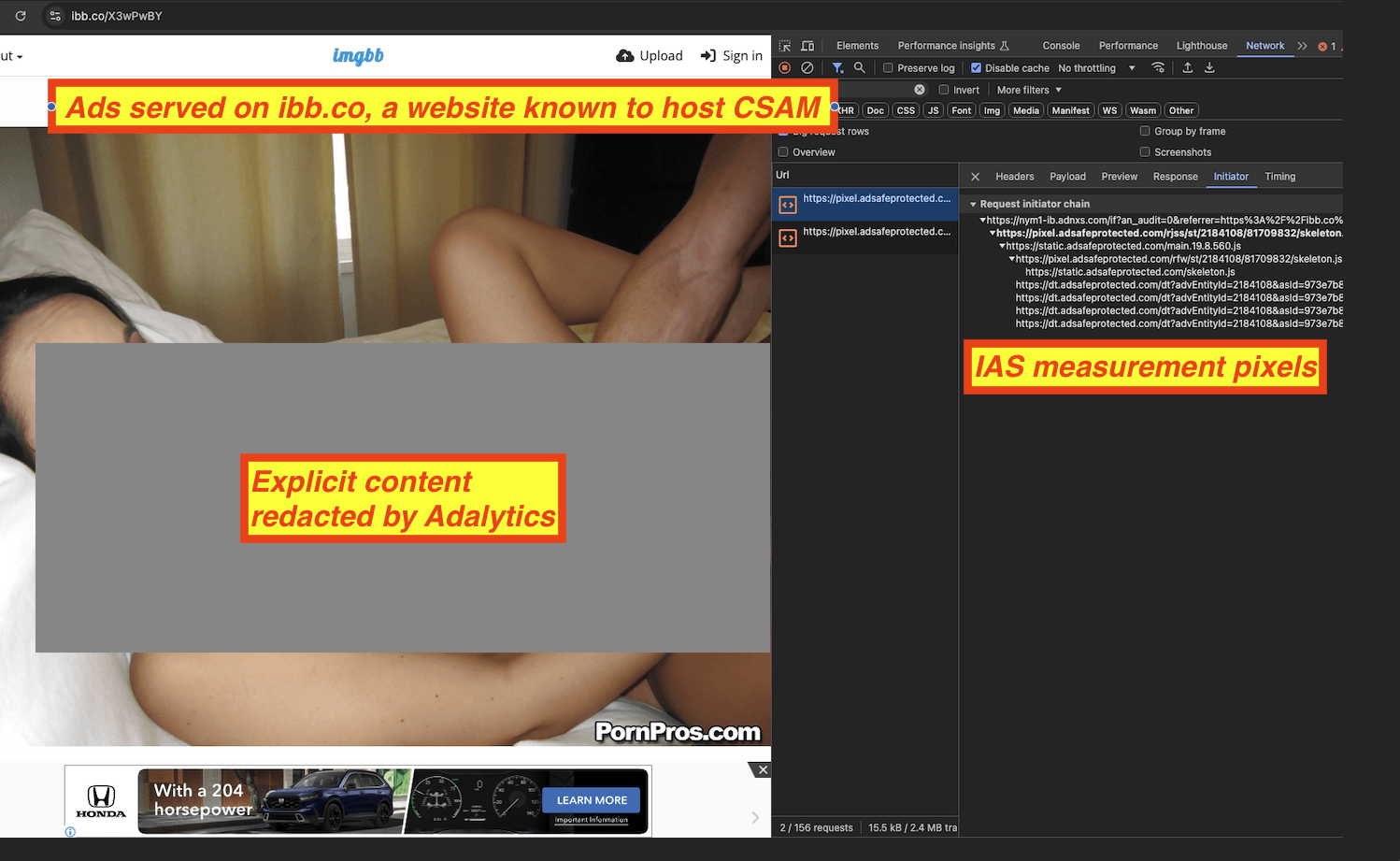

As a second example, in the screenshot below, one can see that several ads were served on an ibb.co page URL showing a screenshot from “pornpros.com”. IAS’ measurement pixels can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

Screenshot showing a Honda ad and IAS measurement pixels in the Chrome developer tools panel. Note that the IAS pixels may be unrelated to the specific Honda ad visible in the screenshot.

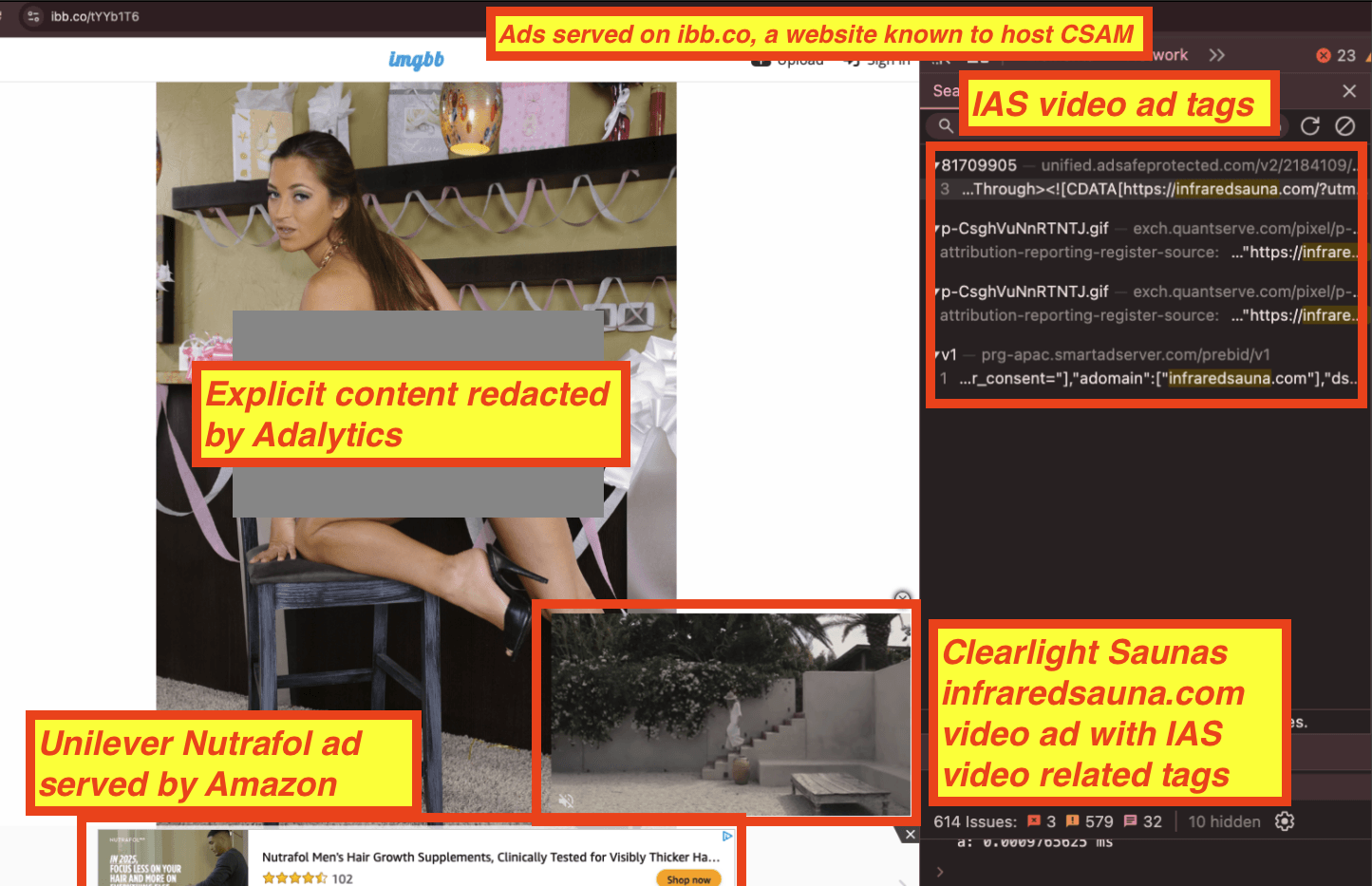

As a third example, in the screenshot below, one can see that several ads from Unilever and from Clearlight Saunas were served on an ibb.co page URL showing a screenshot of a nude woman from Naughty America, a pornographic film studio. The Clearlight Saunas video ad includes tags from Integral Ad Science (IAS), and was transacted by Quantcast DSP and Equativ SSP.

IAS’ video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

Screenshot showing a Clearlight Saunas video ad served with IAS video tags in the Chrome developer tools panel.

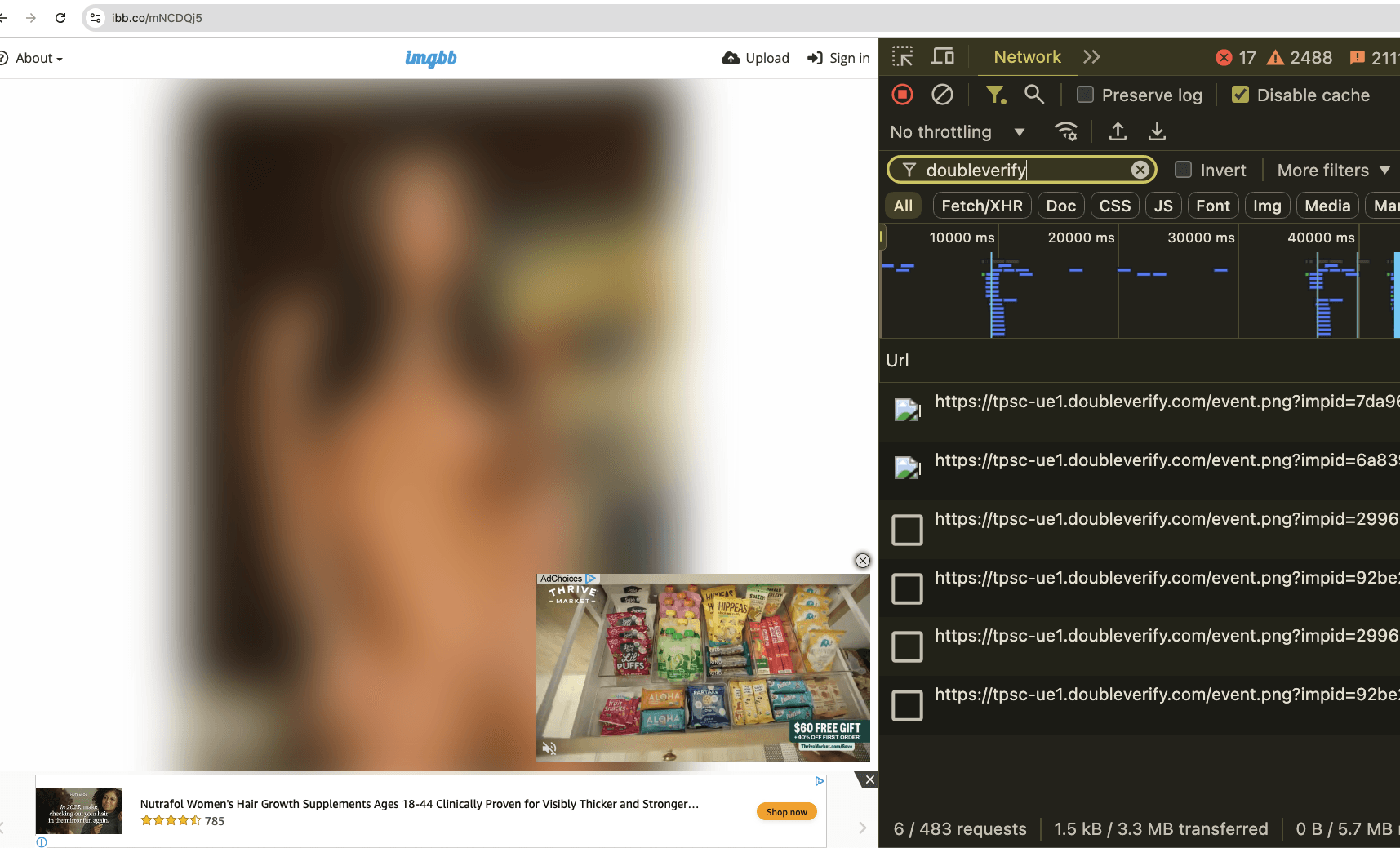

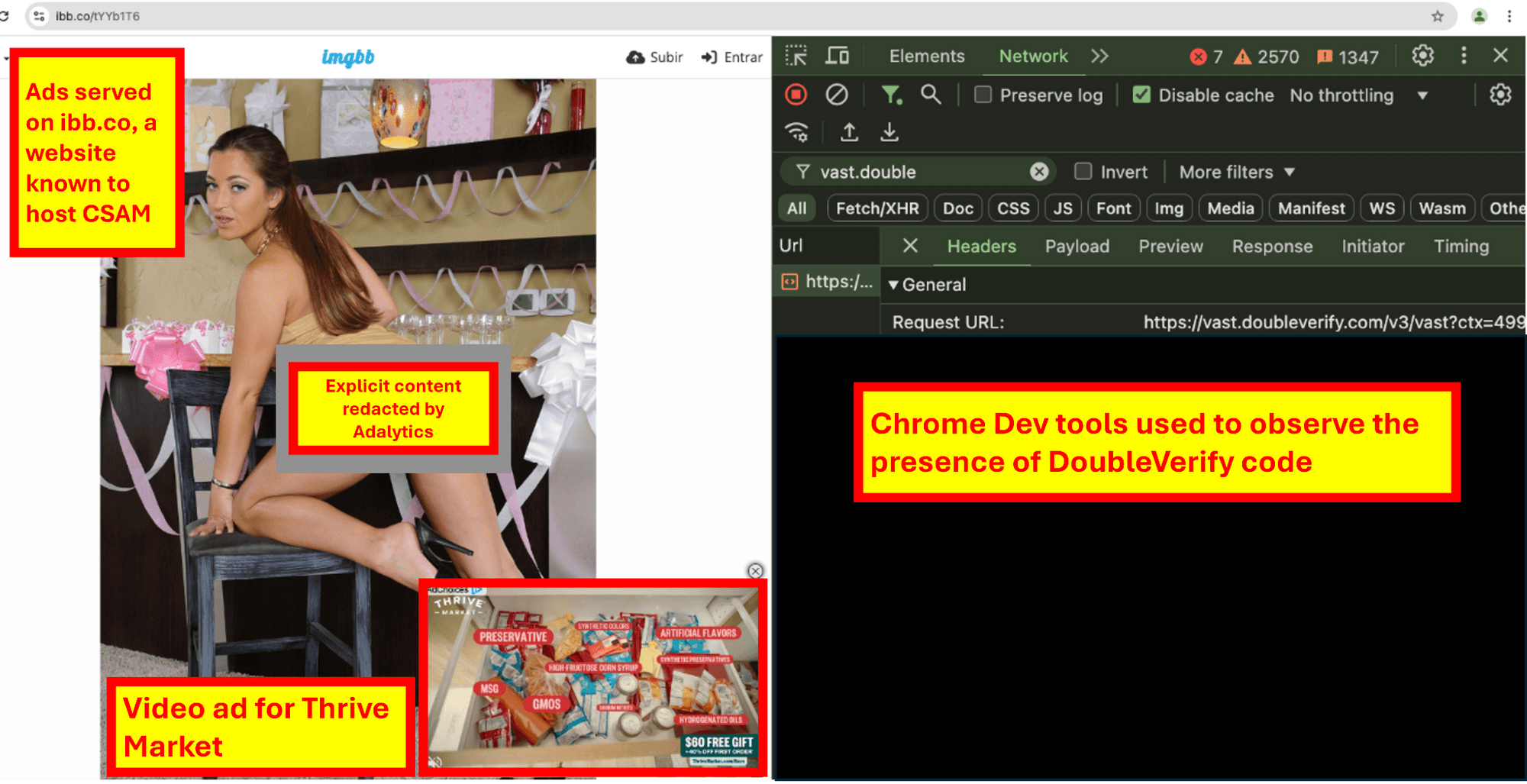

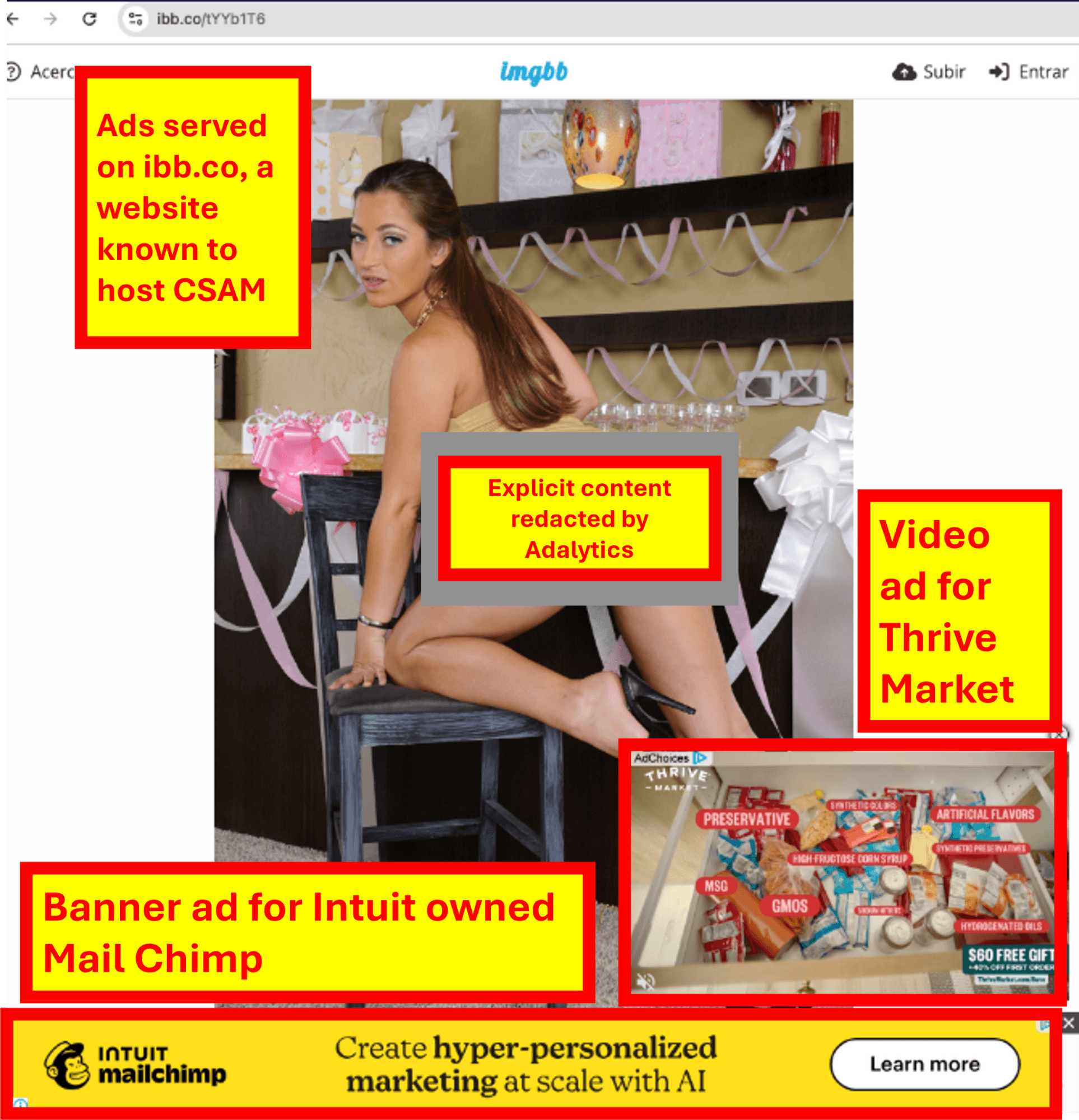

As a fourth example, in the screenshot below, one can see that a video ad for Thrive Market was served on an ibb.co page URL showing a screenshot of a man ejaculating on a woman’s face during oral sex. The screenshot description indicates that the nude woman in the photo is Dillion Harper, a 33-year-old adult film actor. The image appears to have been sourced from passion-hd.com.

The source code of the Thrive Market ad mentions ad agency “Tinuiti” and “CTV”. The Thrive Market ad’s source code appears to include tags from DoubleVerify.

DoubleVerify video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

It is also unclear if the Thrive Market ad placements or DoubleVerify video ad tags utilized “DV Universal Content Intelligence”, which “looks at [...] image and brand suitability at scale [...] takes a comprehensive approach to classify visual elements” and uses “Computer Vision (CV) models”.

Screenshot showing a Thrive Market video ad served with DoubleVerify video tags in the Chrome developer tools panel. The source code of the Thrive Market ad mentions “CTV” and “Tinuiti”.

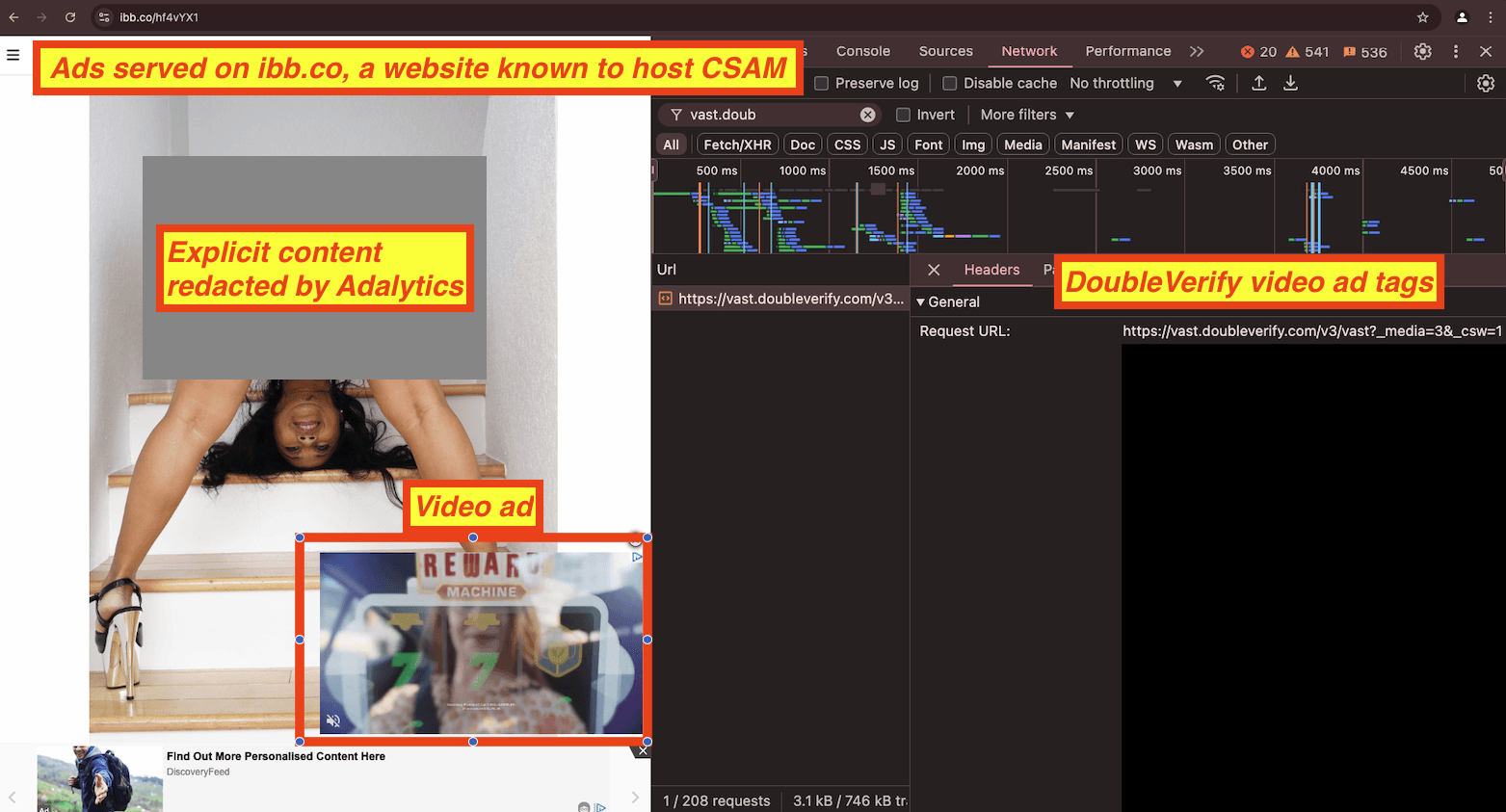

As a fifth example, in the screenshot below, one can see that a FanDuel and NFL co-branded video ad was served on an ibb.co page URL showing a screenshot of a naked woman engaged in sexual intercourse.

The source code of the FanDuel and NFL co-branded video ad appears to include tags from DoubleVerify.

DoubleVerify video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

It is also unclear if the FanDuel and NFL co-branded ad placements or DoubleVerify video ad tags utilized “DV Universal Content Intelligence”, which “looks at [...] image and brand suitability at scale [...] takes a comprehensive approach to classify visual elements” and uses “Computer Vision (CV) models”.

Screenshot showing a FanDuel video ad served with DoubleVerify video tags in the Chrome developer tools panel.

As a sixth example, in the screenshot below, one can see that a video ad for FanDuel was served on an ibb.co page URL showing a screenshot of an adult entertainment video. The screenshot includes a watermark from “blacked.com”, which describes itself as a purveyor of “Exclusive BBC HD Erotica Porn Videos”. The ibb.co screenshot appears to show a man engaging in anal sex with a woman. The ibb.co screenshot description says that the woman in the photo is Anissa Kate, a 37-year-old French adult entertainment industry actor.

The source code of the FanDuel video ad appears to include tags from DoubleVerify.

DoubleVerify video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

It is also unclear if the FanDuel ad placements or DoubleVerify video ad tags utilized “DV Universal Content Intelligence”, which “looks at [...] image and brand suitability at scale [...] takes a comprehensive approach to classify visual elements” and uses “Computer Vision (CV) models”.

Screenshot showing a FanDuel video ad served with DoubleVerify video tags in the Chrome developer tools panel.

As a seventh example, in the screenshot below, one can see that a video ad for Thrive Market was served on an ibb.co page URL showing a screenshot of a nude adult entertainment actor. The source code of the Thrive Market video ad appears to include tags from DoubleVerify.

DoubleVerify video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

It is also unclear if the Thrive Market video ad placements or DoubleVerify video ad tags utilized “DV Universal Content Intelligence”, which “looks at [...] image and brand suitability at scale [...] takes a comprehensive approach to classify visual elements” and uses “Computer Vision (CV) models”.

Screenshot showing a Thrive Market video ad served with DoubleVerify video tags in the Chrome developer tools panel. The source code of the Thrive Market ad mentions “CTV” and “Tinuiti”.

As an eighth example, in the screenshot below, one can see that a video ad was served on an ibb.co page URL showing a screenshot of a nude adult entertainment actor. The woman in the photo appears to be stretching and protruding her genitals. The watermark of the screenshot indicates the photo is from “ButtsMagics”.

The source code of the video ad appears to include tags from DoubleVerify.

DoubleVerify video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

Screenshot showing a video ad served with DoubleVerify video tags in the Chrome developer tools panel.

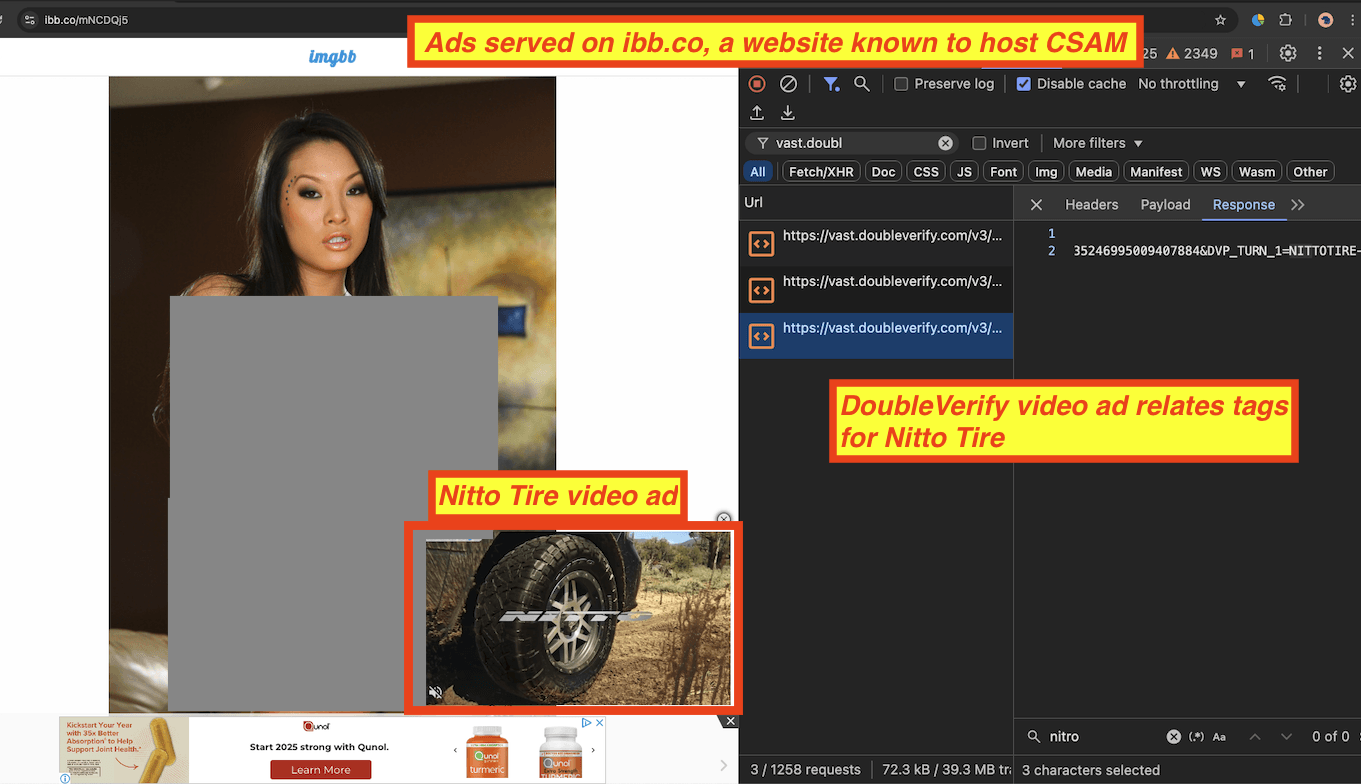

As a ninth example, in the screenshot below, one can see that a video ad for Nitto Tire was served on an ibb.co page URL showing a screenshot of a nude adult entertainment actor. The source code of the Nitto Tire video ad appears to include tags from DoubleVerify.

DoubleVerify video tags can be seen on the right side of the screenshot, in the Chrome developer tools panel. Note that the tags may not necessarily be used for brand safety blocking. They could just be used for ad delivery and/or ad measurement or monitoring.

It is also unclear if the Nitto Tire ad placements or DoubleVerify video ad tags utilized “DV Universal Content Intelligence”, which “looks at [...] image and brand suitability at scale [...] takes a comprehensive approach to classify visual elements” and uses “Computer Vision (CV) models”.

Screenshot showing a Nitto Tire video ad served with DoubleVerify video tags in the Chrome developer tools panel.

In one instance, 197 video ads showing the National Football League (NFL) and FanDuel logos were served on an ibb.co page showing a photo from an “online multiplayer sex game”. The 197 NFL and FanDuel co-branded video ads appeared to use DoubleVerify video tags. A screen recording of the 197 NFL co-branded ads can be seen here: https://www.loom.com/share/4a8de1732df14ac295e115abc520b815

Ad tech vendors observed not serving any ads on imgbb.com and ibb.co in this sample of data

In this sample of data, several prominent ad tech vendors were not observed serving any ad impressions on imgbb.com or ibb.co.

For example, the following ad tech vendors were not observed transacting a single ad impression on imgbb.com and ibb.co in the last three years of available data from URLScan.io’s bot archive:

the demand side platforms (DSPs) Trade Desk and Basis Technologies (f/k/a Centro), and the buy side platform of Epsilon

the retail media networks Target Roundel, Walmart Connect, and Dollar General

the supply side platforms (SSPs) Kargo and TrustX

This does not necessarily mean that these vendors have never transacted an ad impression on imgbb.com or ibb.co. It means only that they were not observed transacting a single ad impression in the 3+ year sample of page crawls generated by URLScan.io’s bot.

This contrasts with the numerous examples of ads shown to URLScan.io’s bot whilst crawling these pages by vendors such as Amazon DSP, Google Ad Manager (GAM), Criteo, or Outbrain Zemanta.

Several major brand marketing executives report that ad tech vendors are not providing page URL level transparency or reporting

A major international brand was seen having its ads served on an explicit ibb.co page URL. However, the brand was unable to directly identify that ad delivery in its ad buying platform, because the platform only provided domain level reporting. The brand could see it had ads served on ibb.co, but it could not see how many of those ads were served on specific page URLs hosting explicit or illegal Child Sexual Abuse Material.
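The difference between the two reporting levels can be illustrated with a short Python sketch. The impression log, hostnames, and function names below are hypothetical placeholders and do not represent any vendor's actual report format. The sketch shows only that a domain level roll-up discards the page path, so every page on the same domain collapses into a single row.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical impression log: one page URL per served ad impression.
impressions = [
    "https://images.example.com/page-a",
    "https://images.example.com/page-a",
    "https://images.example.com/page-b",
    "https://news.example.org/story-1",
]


def domain_level_report(urls):
    """Count impressions per hostname, discarding the page path."""
    return Counter(urlparse(u).hostname for u in urls)


def url_level_report(urls):
    """Count impressions per full page URL."""
    return Counter(urls)


# Domain level: images.example.com appears as one row with 3 impressions.
print(domain_level_report(impressions))
# URL level: page-a (2 impressions) and page-b (1) are reported separately.
print(url_level_report(impressions))
```

With only the first report, an advertiser in this hypothetical could not tell which of the pages on images.example.com carried its ads, which is the limitation the brand described.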

Adalytics reached out to several advertising experts to ask for their professional opinions on page URL rather than domain level transparency.

Several media agencies and Fortune 500 brand marketing executives reported that page URL level reporting transparency is critical for them, yet their ad tech vendors do not provide such level of reporting granularity easily or readily.

One Fortune 500 brand marketing executive said:

“URL level reporting is absolutely necessary in order for Brands to understand exactly where they are running. While domain reporting is helpful it’s not the full story. URL level detail is needed in order to validate that our brand safety settings are functioning properly.

Unfortunately, multiple market leading DSPs have informed us that they can not share URL level reporting.”

Another Fortune 500 brand marketing executive said:

"We care deeply about URL-level reporting and these kinds of reports bely our concern that the reason it is not made available to advertisers has nothing to do with technical limitations and everything to do with reputational - and subsequent business - concerns. Since we have a zero tolerance policy for monetizing the illegal distribution of child abuse materials, we must take such allegations very seriously and will explore all possible remedies for this situation."

A third advertising executive commented:

"We have a zero tolerance policy for funding or advertising adjacent to explicit child materials. This is non negotiable.

Most marketers are unaware of the exploits that happen at the URL level, and lack access to the people or capability needed to analyze the data. This is not helped by many adtech providers being unwilling or unprepared to share data at this level falsely asserting privacy or other spurious reasons.

If we learned about our ads serving on a site hosting explicit child materials, we would be horrified and appalled. This would most likely result in an immediate pause of ad spend across all clients and a request for refunds.

There needs to be a bigger industry-wide conversation about the failure of policy enforcement by platforms and whether this is something that requires government oversight and intervention as child abuse is only part of the problem. We have also read reports of ads serving on Treasury sanctioned websites and adjacent to videos showing animal abuse - none of which should be acceptable to any Administration.”

An ad tech industry expert said:

“The full URL is vital for understanding if ads are hitting the mark. Especially with a contextual level solution, contextual level is a page level solution and domain even Subpath level reporting simply isn’t enough to ensure you’re hitting the right content. Without full URL reporting you’re on a “trust me” level understanding with the DSPs which shouldn’t be the case when we are talking about millions in ad spend.”

Another media buyer commented:

“As an advertiser, I am deeply disturbed by the findings of this report. While there may be debates about the nuances of brand safety, there is no debate—among advertisers, ad tech vendors, or any decent human being—that funding CSAM or any other form of extreme pornographic material is absolutely unacceptable. One cent or one impression is one too many.

The justification provided by major ad tech vendors for withholding URL-level transparency from advertisers has always been tenuous, but this report underscores the severe consequences of such opacity. Without URL-level transparency, brands are left vulnerable to funding reprehensible content unknowingly. This is an urgent call to action for every advertiser to demand URL-level transparency from their ad tech partners. The industry must prioritize accountability and ensure this never happens again.”

Another advertising executive commented:

"Advertisers big and small appearing next to pornographic images is entirely unacceptable and in violation of our partnership agreements with major advertising platforms. Any advertising supporting an anonymous website known to host CSAM is a moral failure I did not think was possible for the Advertising Technology community to achieve, but here we are. Every ad technology vendor should be on a Defcon 5 notice that if they have failed to vet their inventory supply until now in the pursuit of profit, they are now on the radar of law enforcement in a brand new way. This and other sources of anonymous content should be expunged from every ad server, SSP, and DSP yesterday. It's a sad day when we get a report showing the ad tech industry taking money from an organization called "Save The Children" to hand over to a website furthering the most heinous abuse of children. Every advertiser and agency should demand fully transparent, visible, full URL path log data NOW and not take any excuses for an answer."

Another advertising executive commented:

“In an industry where the bar for accountability is already scraping the floor, the inability—or unwillingness—of ad tech vendors to provide URL-level transparency is a masterclass in limbo dancing with disaster. If you’re funding platforms hosting CSAM, even inadvertently, you’re not just complicit in moral bankruptcy; you’re building a bridge to it plank by plank.

The truth is, brands deserve better than domain-level guesswork. Without URL-level insight, you’re blindfolded. Verification vendors and ad tech giants must enforce their policies, AND give brands the tools to do it themselves.”

A media agency executive commented:

“1. The digital advertising industry is facing a moral and operational crisis that goes far beyond traditional brand safety concerns. When it comes to CSAM content, there should be zero compromise. This report shows our current systems are fundamentally broken. Major brands are potentially funding criminal exploitation of children through their ad spend, largely because they're denied the basic transparency needed to prevent it.

2. These reports have exposed a devastating truth: domain-level reporting is a dangerous and insufficient industry standard that creates perfect cover for bad actors. Without URL-level visibility, brands are essentially writing blank checks and hoping for the best.

3. Vendors have the technical capability to provide URL-level transparency - they simply choose not to. They're selling brands an illusion of safety while withholding the data needed to ensure it. This isn't just a technical oversight - it's starting to feel like willful blindness. At what point does negligence become criminal?

4. If you are now discovering your brand's ads have been appearing alongside exploitation content for months or years, but you were never able to detect it because you only had domain-level reporting, while your agencies and ad tech vendors were assuring you you were protected - you should be furious. The legal and reputational damage is hard to calculate.

5. The verification vendors' failure to enforce their own supply policies is inexcusable, but their refusal to provide URL-level transparency is even worse. They're essentially saying: "We can't stop bad actors, and we won't let you stop them either." This isn't just a failure of technology or policy - it's a failure of ethics. The industry needs immediate, radical transparency. Brands must demand access to every URL where their ads appear, real-time reporting on ad placements, and immediate alerts for any suspicious content. Anything less is willfully enabling exploitation in a broken system.”

Conclusion

Caveats & limitations

Interpreting the results of this observational study requires nuance and caution.

This study is predicated on publicly available data.

Whilst the study did not directly incorporate data from advertisers within the body of the report, it was noted that data provided by advertisers and their ad tech platforms confirmed that the advertisers were in fact invoiced for ads served on imgbb.com or ibb.co. The advertisers were unable to confirm how many of their ads were directly served adjacent to child sexual abuse materials (CSAM) due to a lack of page URL level transparency from their ad tech vendors.

This report makes no assertions about the intent behind certain media buying practices, and whether or not it was done with specific parties’ authorization. This report makes no assertions or claims with regards to the quantitative magnitude of some of the phenomena observed in this study. This observational research study does not make any assertions regarding causality, provenance, intent, quantitative impact, or relative abundance.

Furthermore, the study notes that various “brand safety” vendors had some level of visibility into the fact that ads were appearing next to explicit, sexual content. It is possible that those vendors clearly indicated to their advertiser clients any potential brand safety ramifications of such ad delivery, and the clients made an implicit or deliberate decision to continue to run ads on imgbb.com or ibb.co. One should not assume that the vendors did not correctly identify and/or flag potentially offensive content to their advertiser clients.

Discussion

Multiple major advertisers reported to Adalytics that their brand safety vendors had marked 100% of measured ad impressions served on ibb.co and imgbb.com as “brand safe” and/or “brand suitable”. Independent data obtained from URLScan.io confirmed that some of these media buyers' ads had served on explicit, sexual content.

Some brands, such as FanDuel and the National Football League (NFL), were observed to have their ads served hundreds of times to a single consumer on a website known to host child sexual abuse materials (video recording of 197 FanDuel and NFL co-branded ads: https://www.loom.com/share/4a8de1732df14ac295e115abc520b815). These ads appear to include code from third party verification vendors such as DoubleVerify, whilst some other ads included code from IAS.

It is unclear whether IAS and DoubleVerify’s technology identified any child sexual abuse materials on ibb.co specifically, and whether IAS or DoubleVerify reported any putative CSAM on ibb.co to the authorities such as NCMEC or C3P. Some of the explicit pages that were found in URLScan.io’s archive of ibb.co appear to have been online for months. Media Rating Council (MRC) guidelines suggest that accredited vendors should report potential illegal media content to authorities.

Some, though not all, ad tech platforms have specific inventory policies and/or terms of service that prohibit ad delivery on media environments that include illegal content, such as child sexual abuse materials. More importantly still–and notwithstanding any “policies” by ad tech vendors–the United States criminalizes the production, distribution, sale, and possession of materials depicting CSAM.

However, the results of this observational research study suggest that some of those ad tech vendors are not comprehensively enforcing their own policies, or are enforcing them only with some latency, after ads have been served and/or advertisers have been billed.

It is worth noting that Google AdX, Google Ad Manager, and DV360 do not appear to be actively transacting ads on imgbb.com or ibb.co as of January 2025, at least in the observational sample of data available in this research study, despite previously having served ads on explicit pages. This is possibly due to Google having removed imgbb.com and/or ibb.co from its inventory pool. Google’s Advertising Policies specifically prohibit “child sexual abuse materials” (https://support.google.com/admanager/answer/10502938).

Ad tech vendors such as Kargo, TrustX, Basis Technologies, Epsilon buy-side platform, Trade Desk, Walmart Connect, Dollar General retail media, and Target Roundel were never observed transacting ads on explicit pages on imgbb.com or ibb.co in the historical sample of data examined in this study. This may be due to observational or data sample limitations, or it could reflect these vendors having taken definitive actions to avoid serving client ads on these pages.

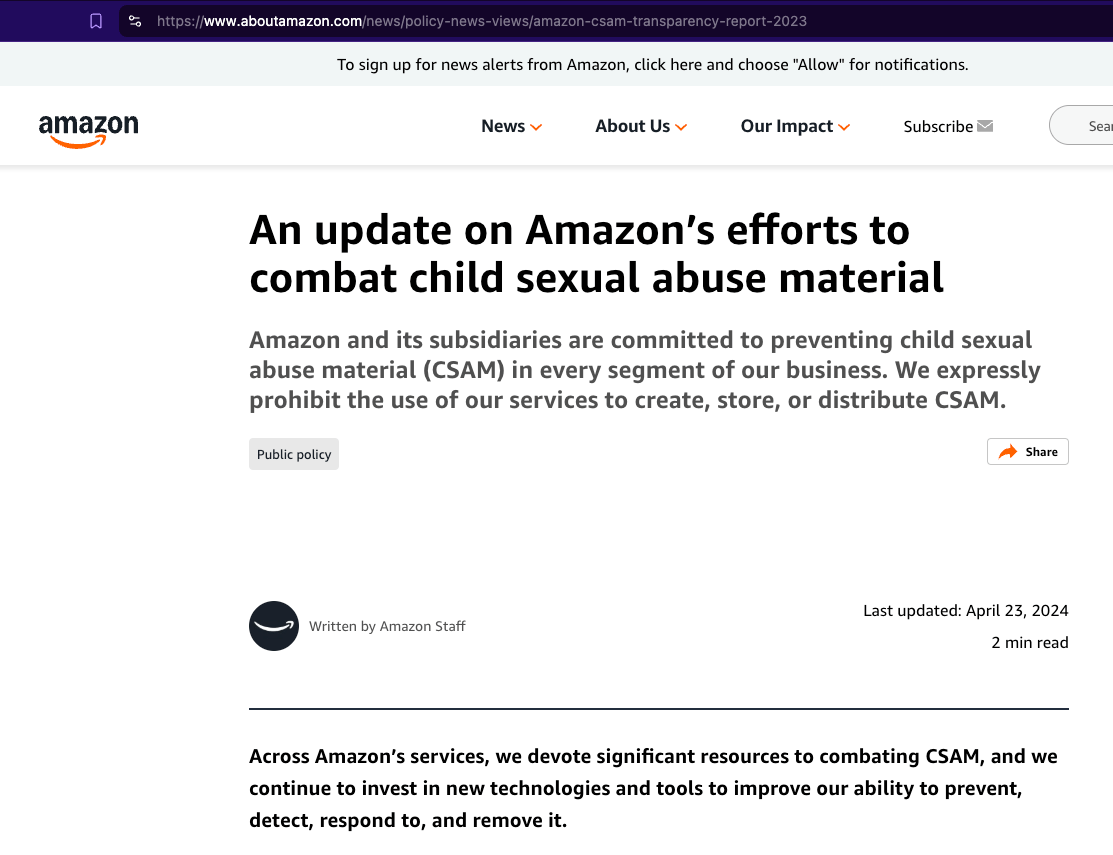

Amazon has publicly touted its “efforts to combat child sexual abuse material”. Amazon declares: “Amazon and its subsidiaries are committed to preventing child sexual abuse material (CSAM) in every segment of our business. We expressly prohibit the use of our services to create, store, or distribute CSAM.” Indeed, Amazon holds seats on the Boards of both NCMEC and the Tech Coalition (an industry organization devoted to eliminating CSAM online). Moreover, the company has spent significant resources to find, report and eliminate CSAM hosted on its own facilities.

Source: Amazon

Amazon’s “Content Adjacency Policies for Amazon DSP” state that: “We take a variety of measures to prevent your ads from appearing on webpages, apps, streaming TV, and podcasts containing sensitive content, products, and services. [...] We require ad exchanges and publishers to ensure compliance with content restrictions included in contractual arrangements. We also use signals from independent brand safety providers to filter out third-party webpages and apps containing content in the categories listed below. When these vendors return a signal that the page content includes these categories, we don’t serve your ad. We also provide the ability for you to block entire domains and mobile apps of your choosing. You can layer on filtering capabilities by DoubleVerify and Integral Ad Science to exclude additional content categories they provide.”

As noted, there is no reason to doubt Amazon’s commitment to eliminating any and all access to CSAM through its services. Yet, despite Amazon’s extensive efforts to achieve this goal, Amazon DSP was observed serving dozens of major brands’ ads on explicit pages, including directly adjacent to confirmed Child Sexual Abuse Material pages on ibb.co. Several major brand advertisers confirmed they were billed by Amazon DSP for ad impressions served on a website known to host CSAM.

Many of the ad tech vendors seen transacting ads on sexually explicit and/or potentially illegal CSAM containing pages in this study appear to be certified for “brand safety” by advertising industry trade groups such as the Trustworthy Accountability Group (TAG).

Multiple advertising executives expressed concern that their advertising vendors do not readily or easily provide access to page URL level transparency. This limited visibility into where exactly their ads are serving can make it difficult, if not impossible, for the advertisers to quickly detect and block ad delivery on potentially illegal pages.

Lastly, this report noted examples where the United States Government itself was seen advertising on explicit content on a website known to host Child Sexual Abuse Material. Policy makers may wish to inquire whether the funding of such websites, via the government’s digital advertising, is a prudent allocation of taxpayer dollars.

Appendix

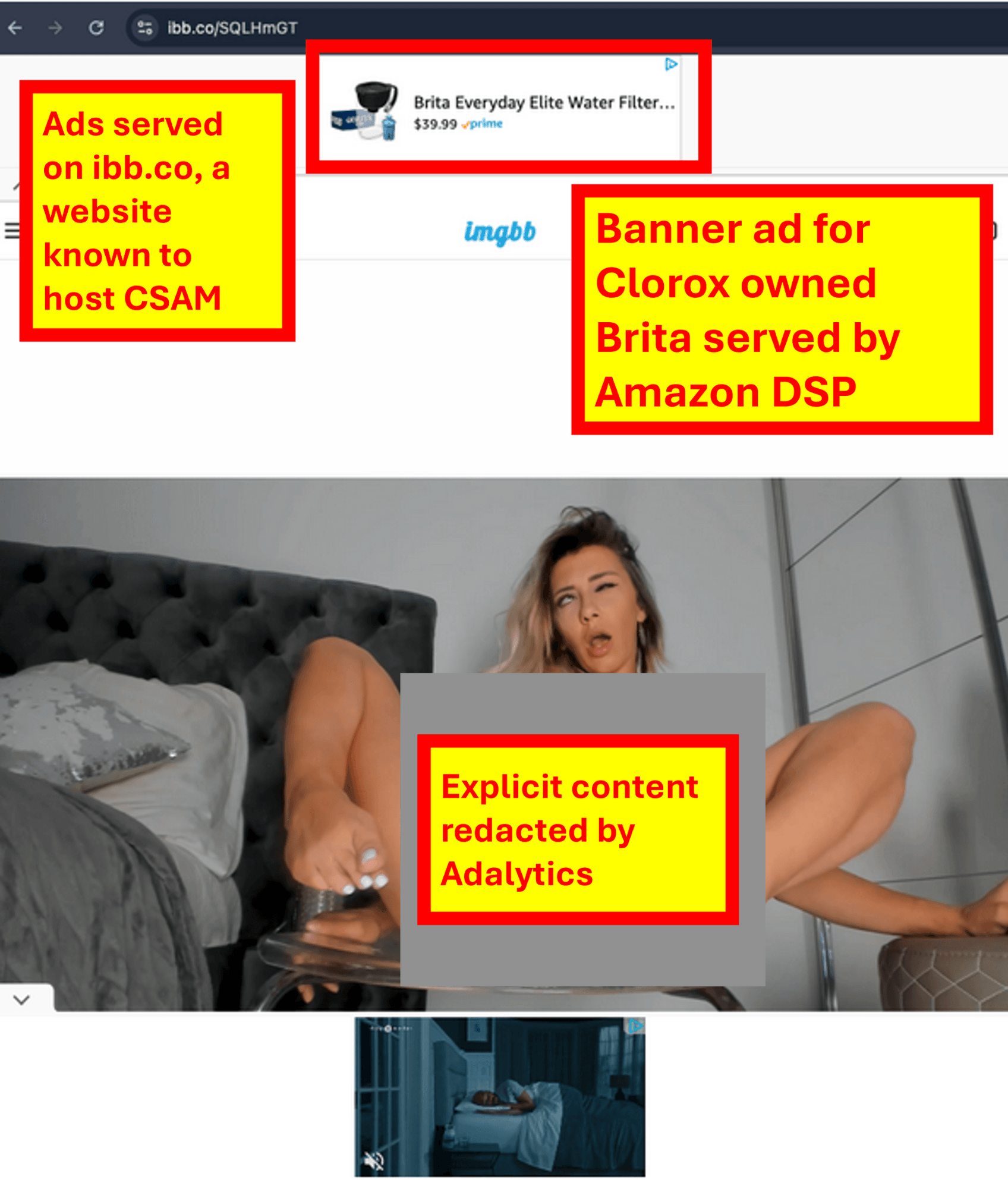

Screenshot of a Brita ad served by Amazon on ibb.co, a website known to host Child Sexual Abuse Materials

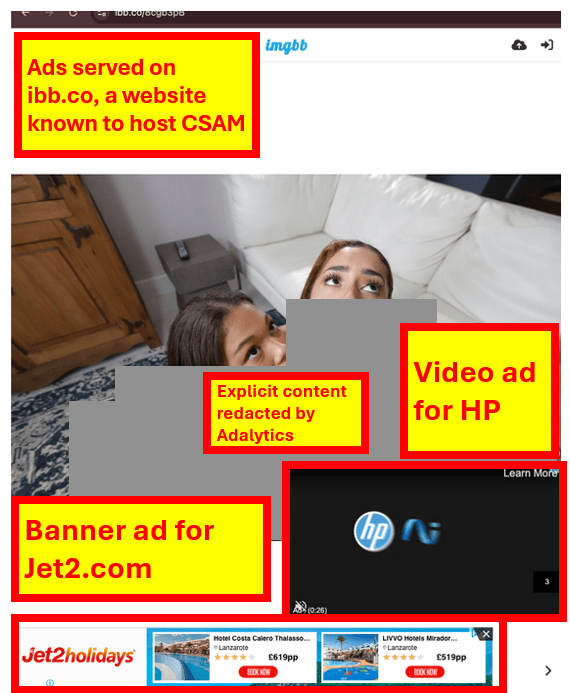

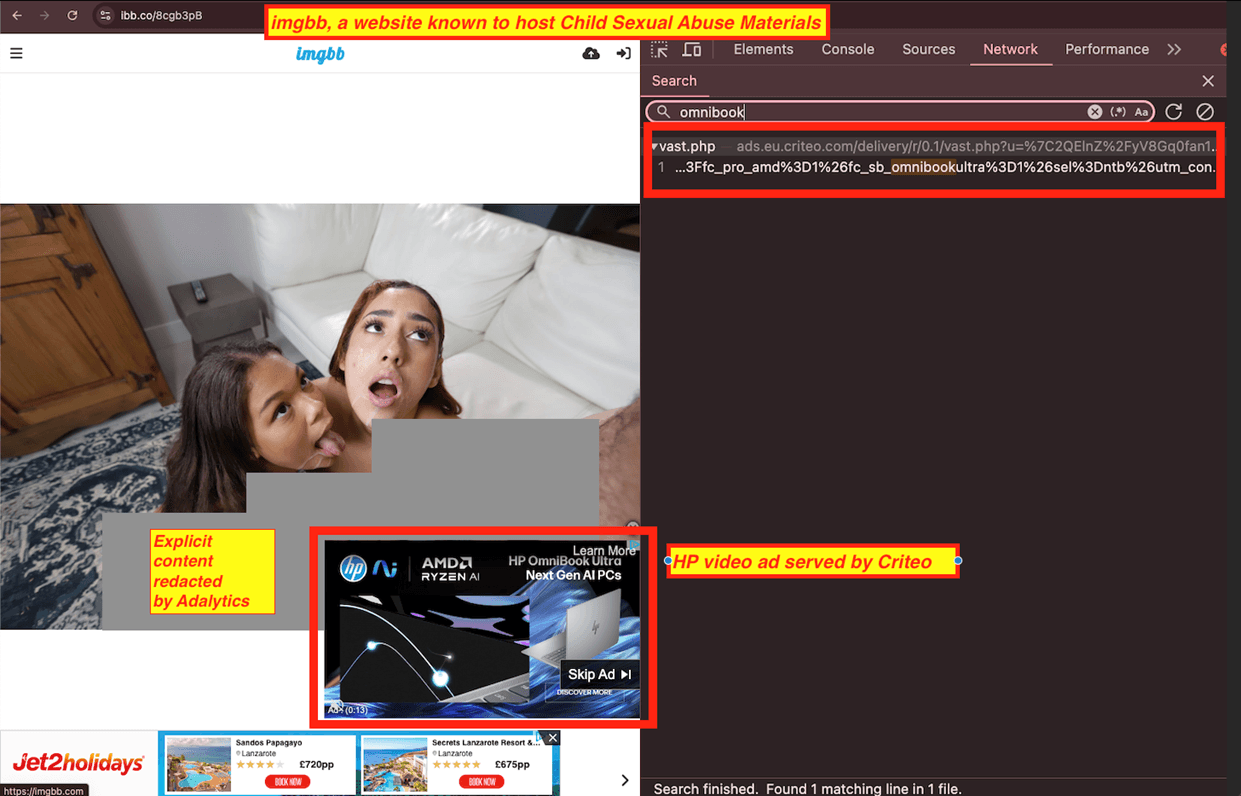

Screenshot of an HP ad served by Criteo on ibb.co, a website known to host Child Sexual Abuse Materials

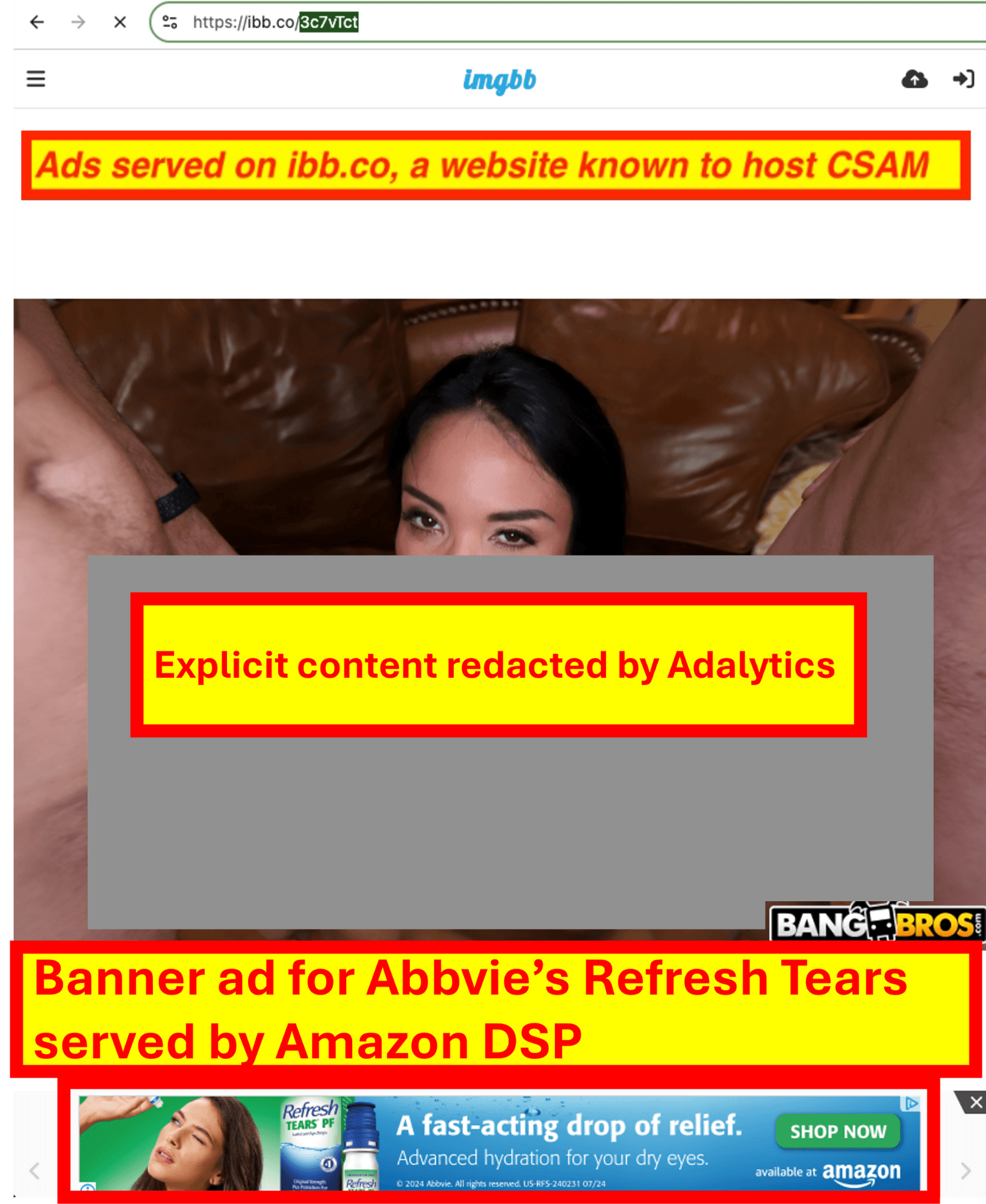

Screenshot of an AbbVie Refresh Tears ad served by Amazon DSP on ibb.co, a website known to host Child Sexual Abuse Materials

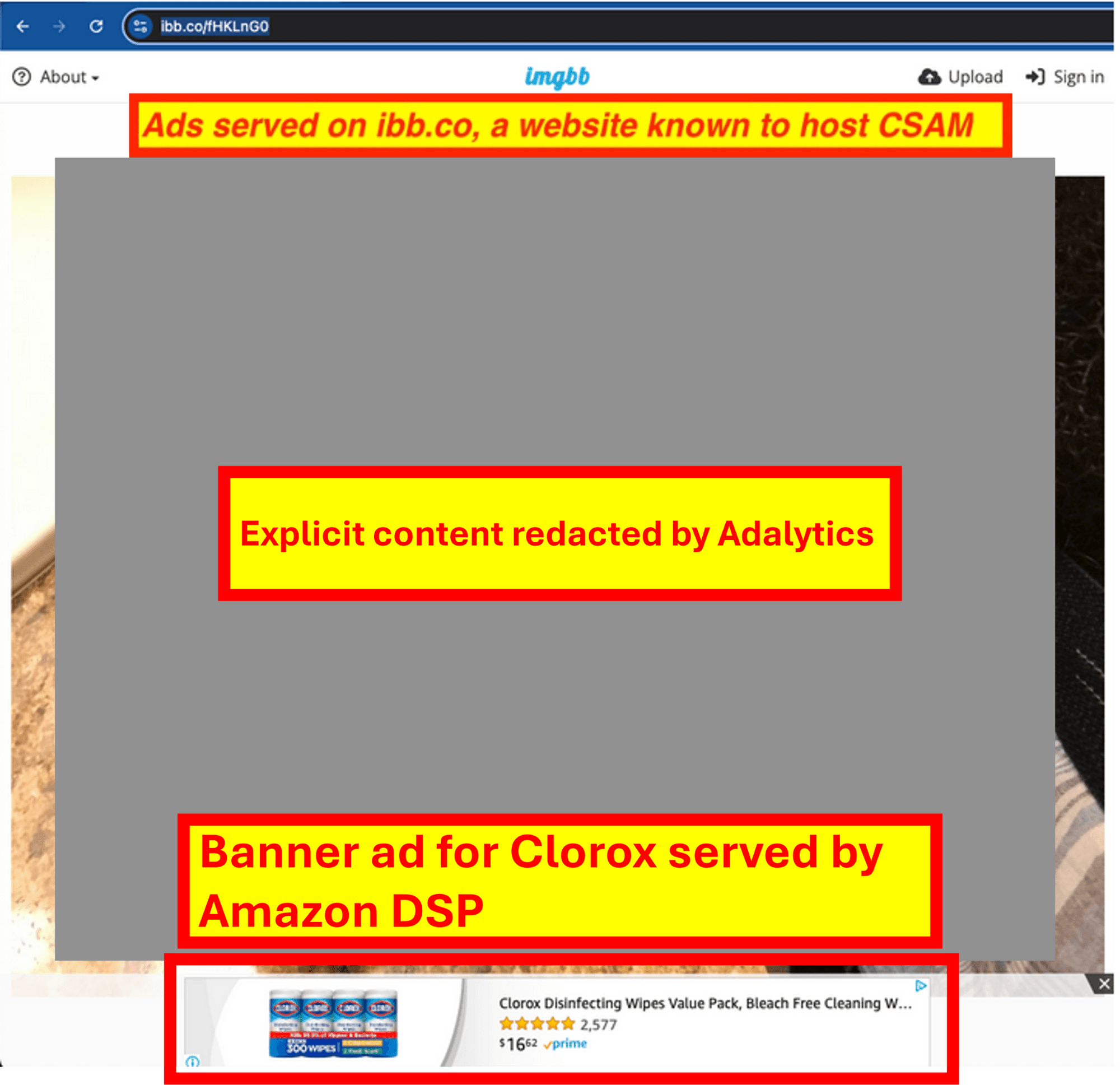

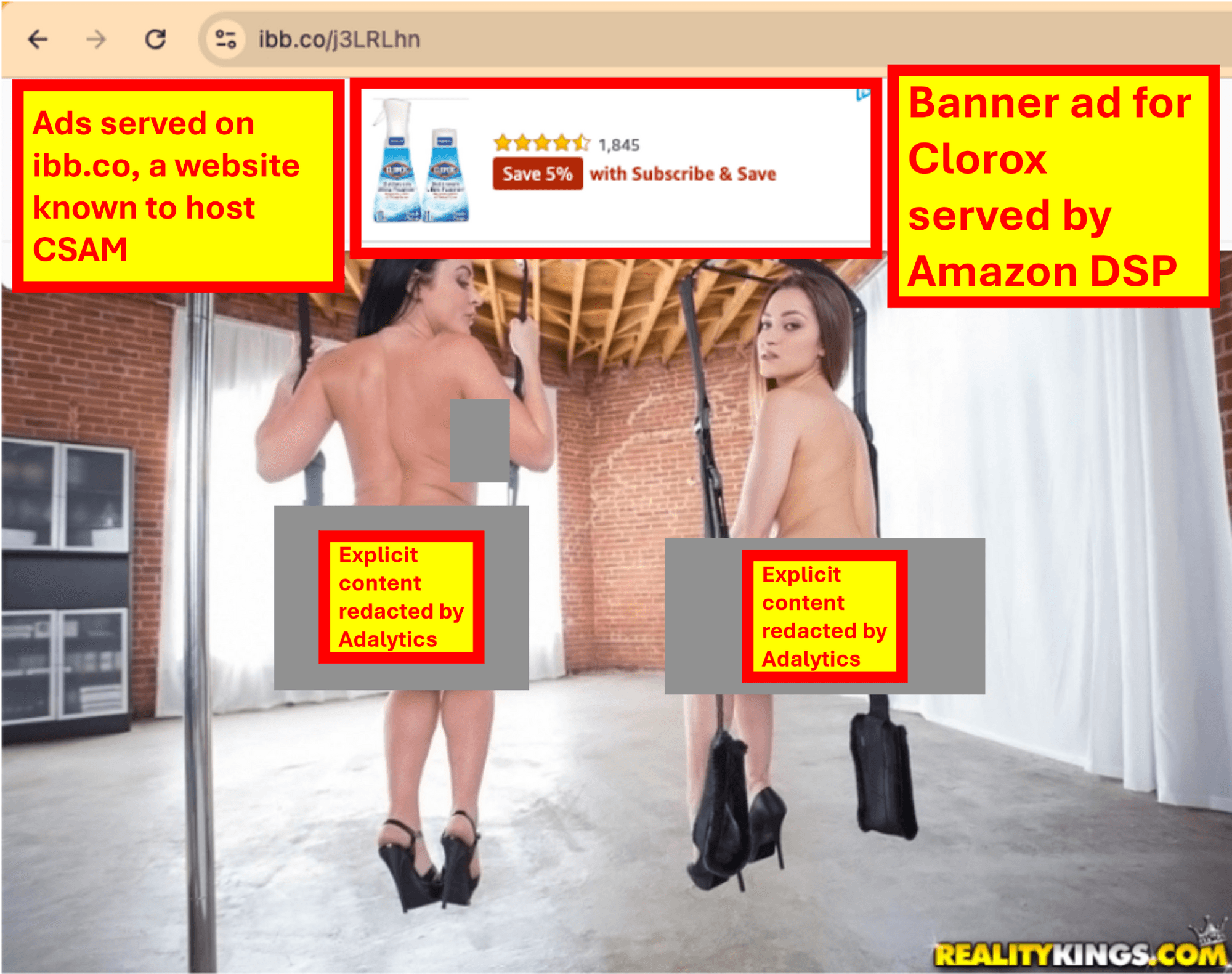

Screenshot of a Clorox disinfecting wipes ad served by Amazon DSP on ibb.co, a website known to host Child Sexual Abuse Materials

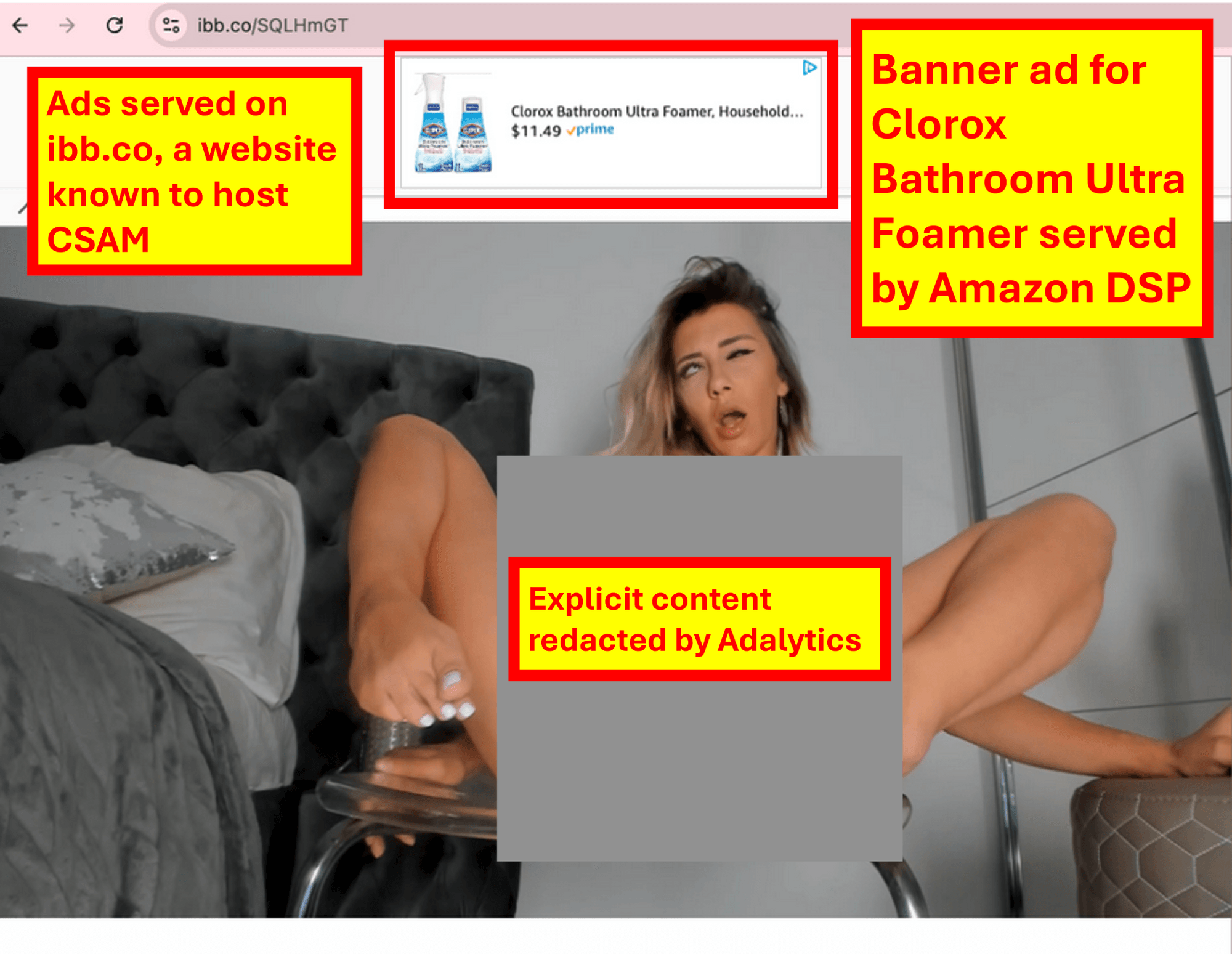

Screenshot of a Clorox Bathroom Ultra Foamer ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

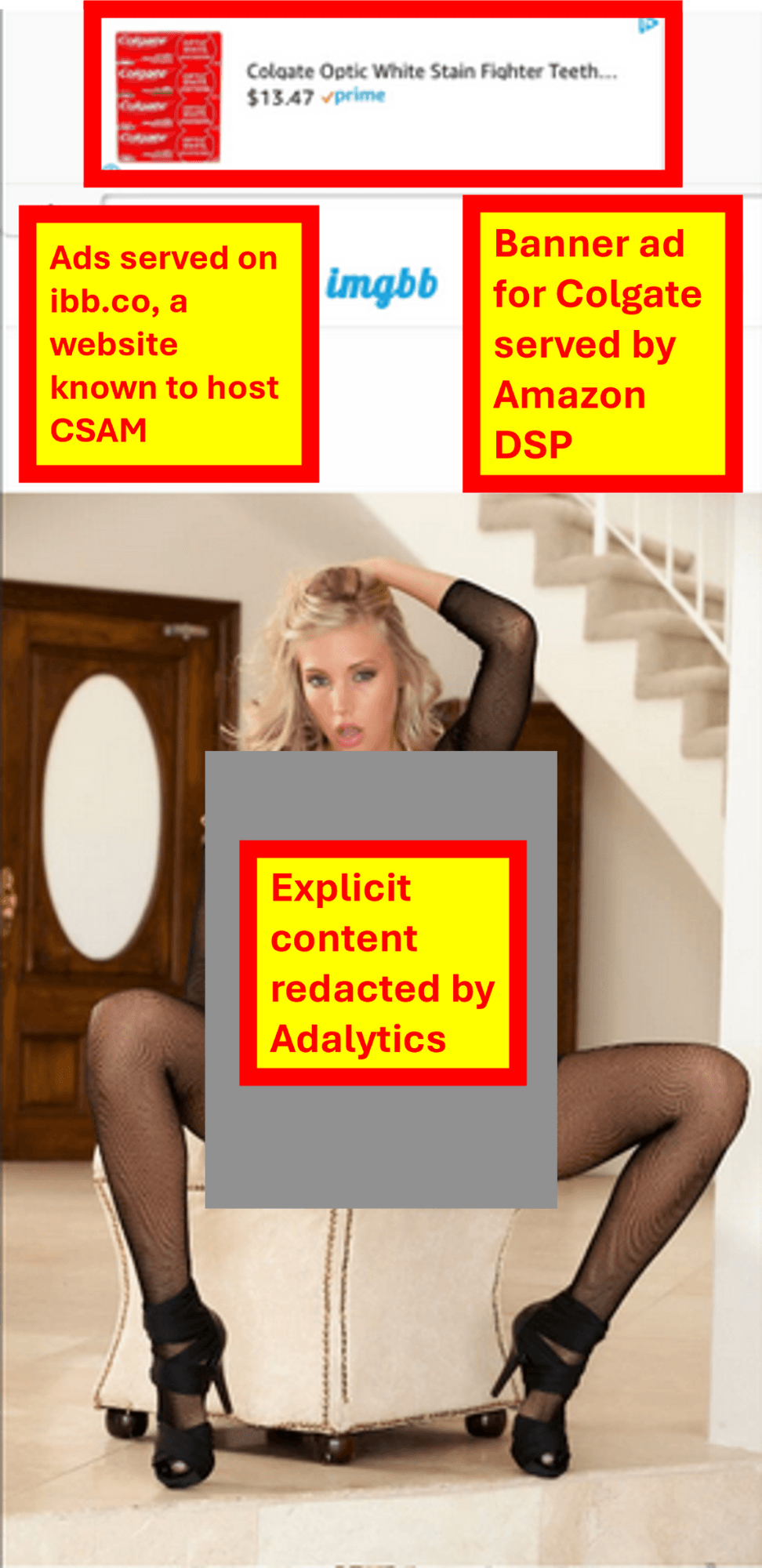

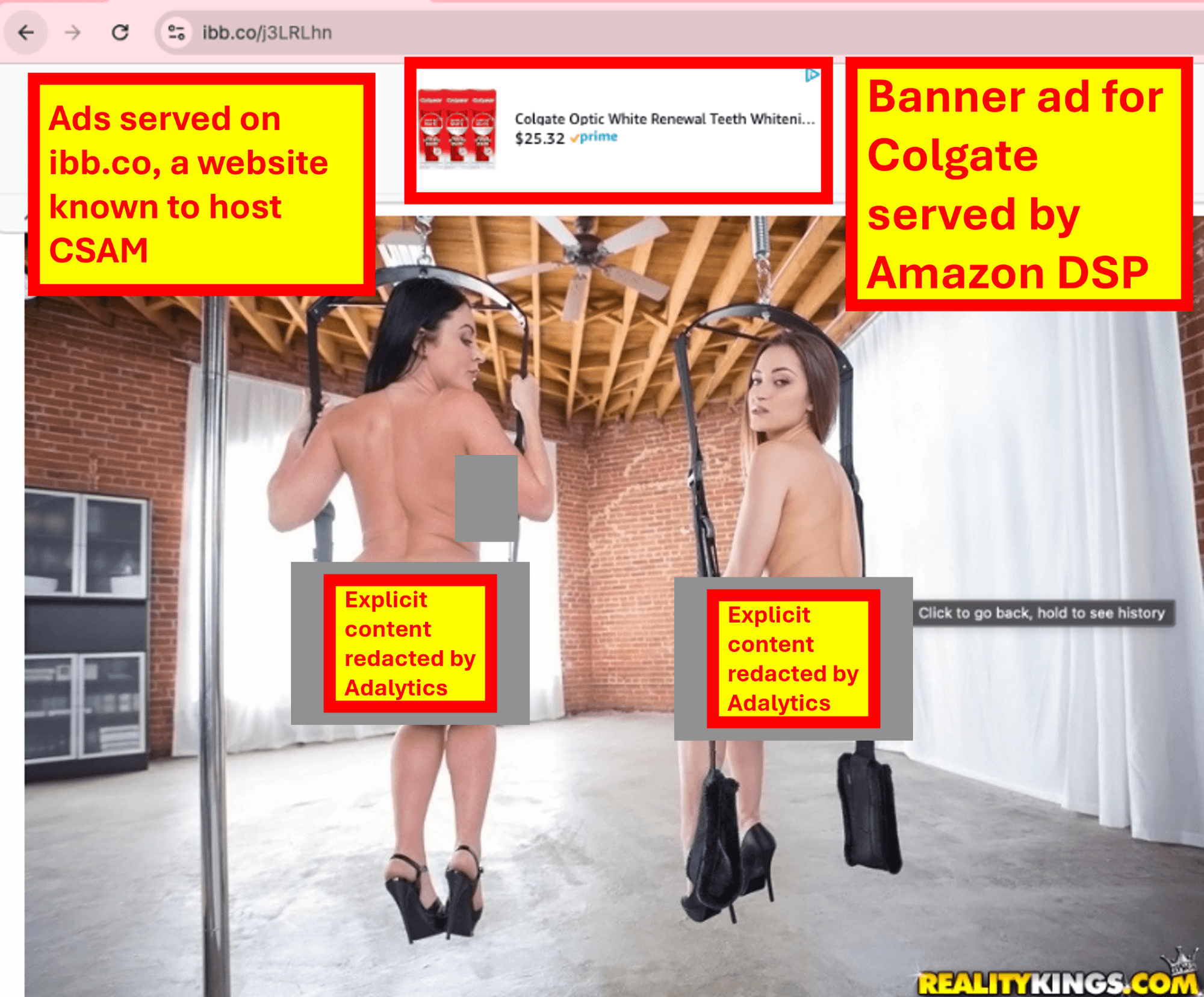

Screenshot of a Colgate ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

Screenshot of a Dentek Mouth Guard ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

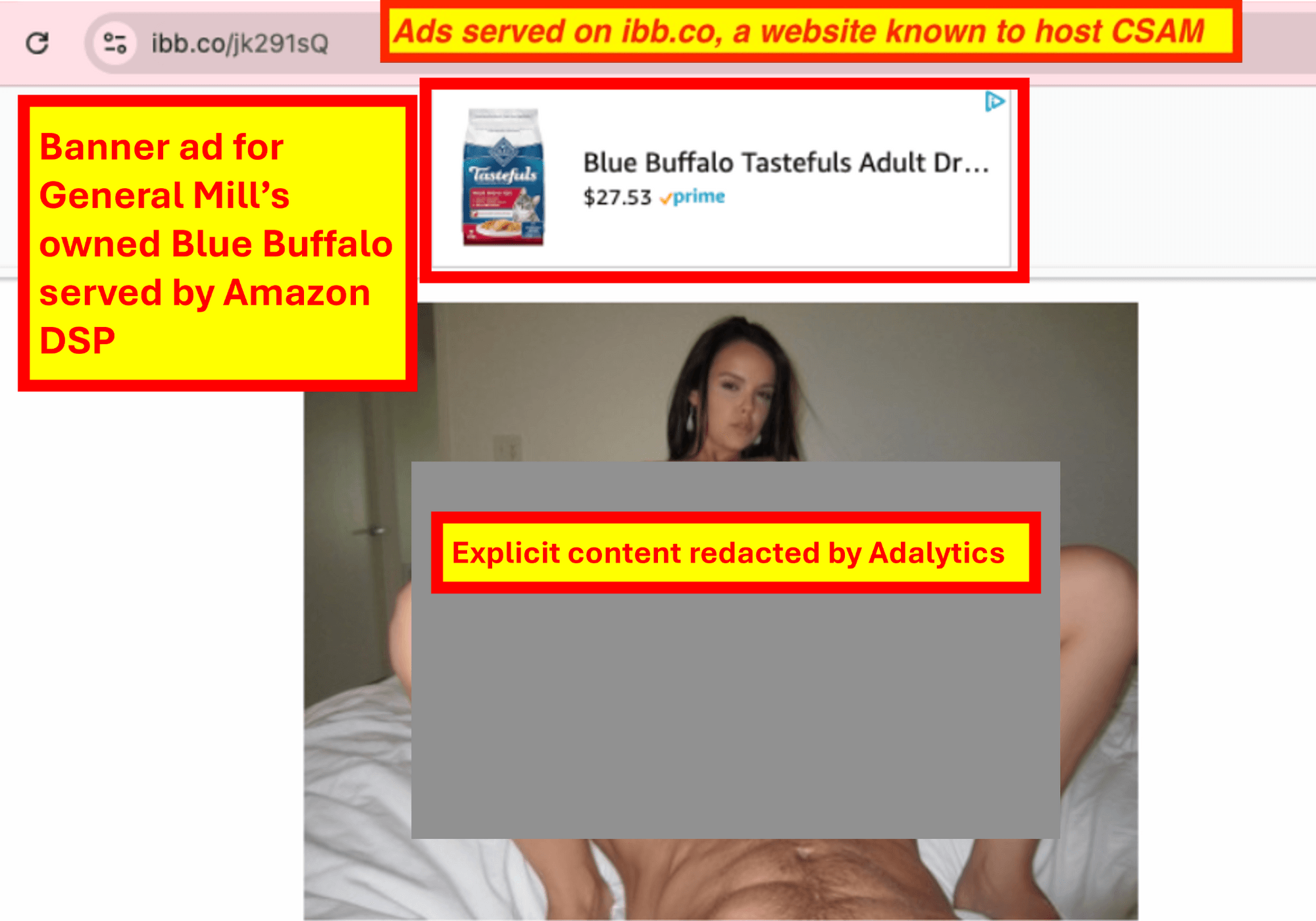

Screenshot of a General Mills Owned Blue Buffalo ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

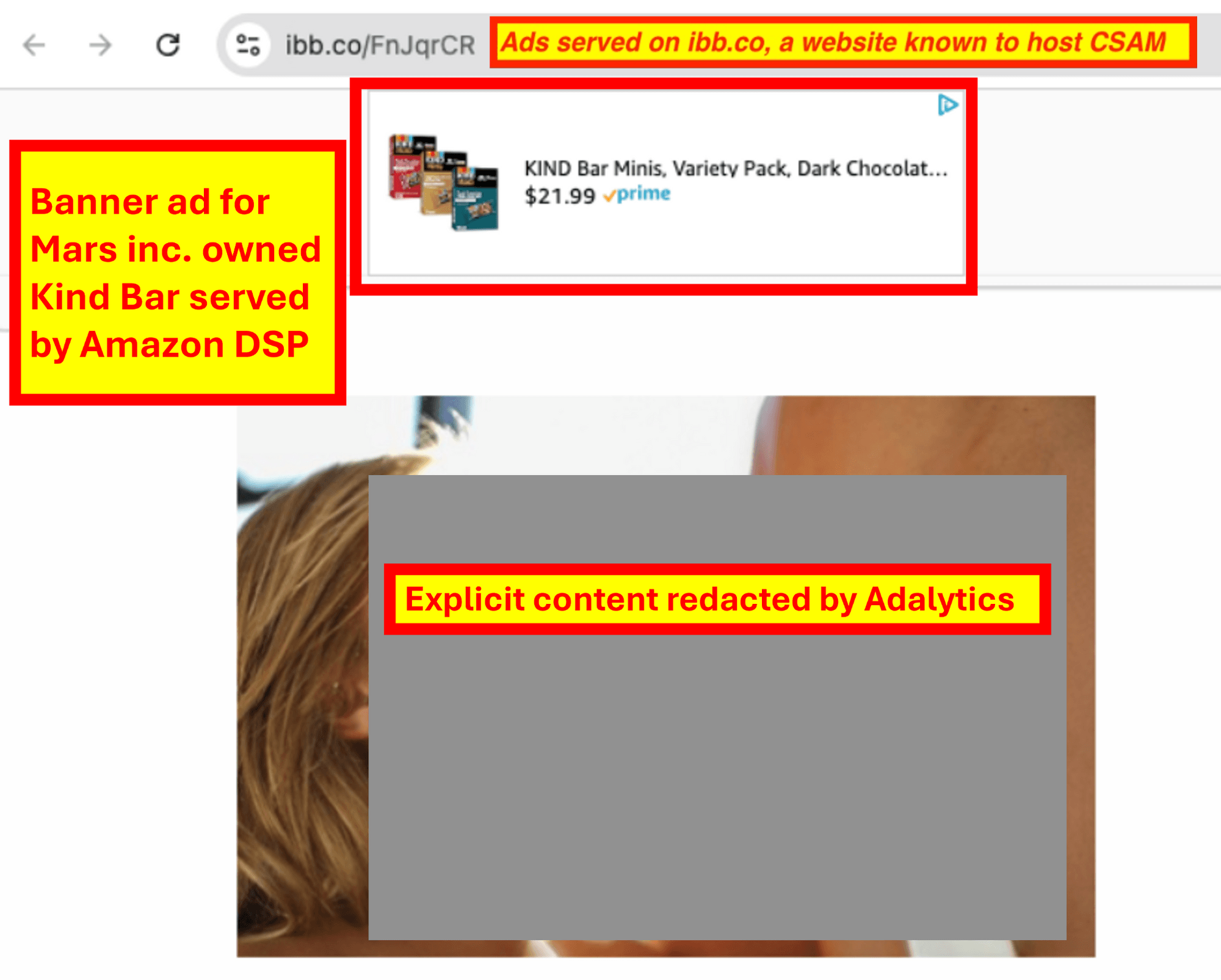

Screenshot of a Mars Kind bar ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

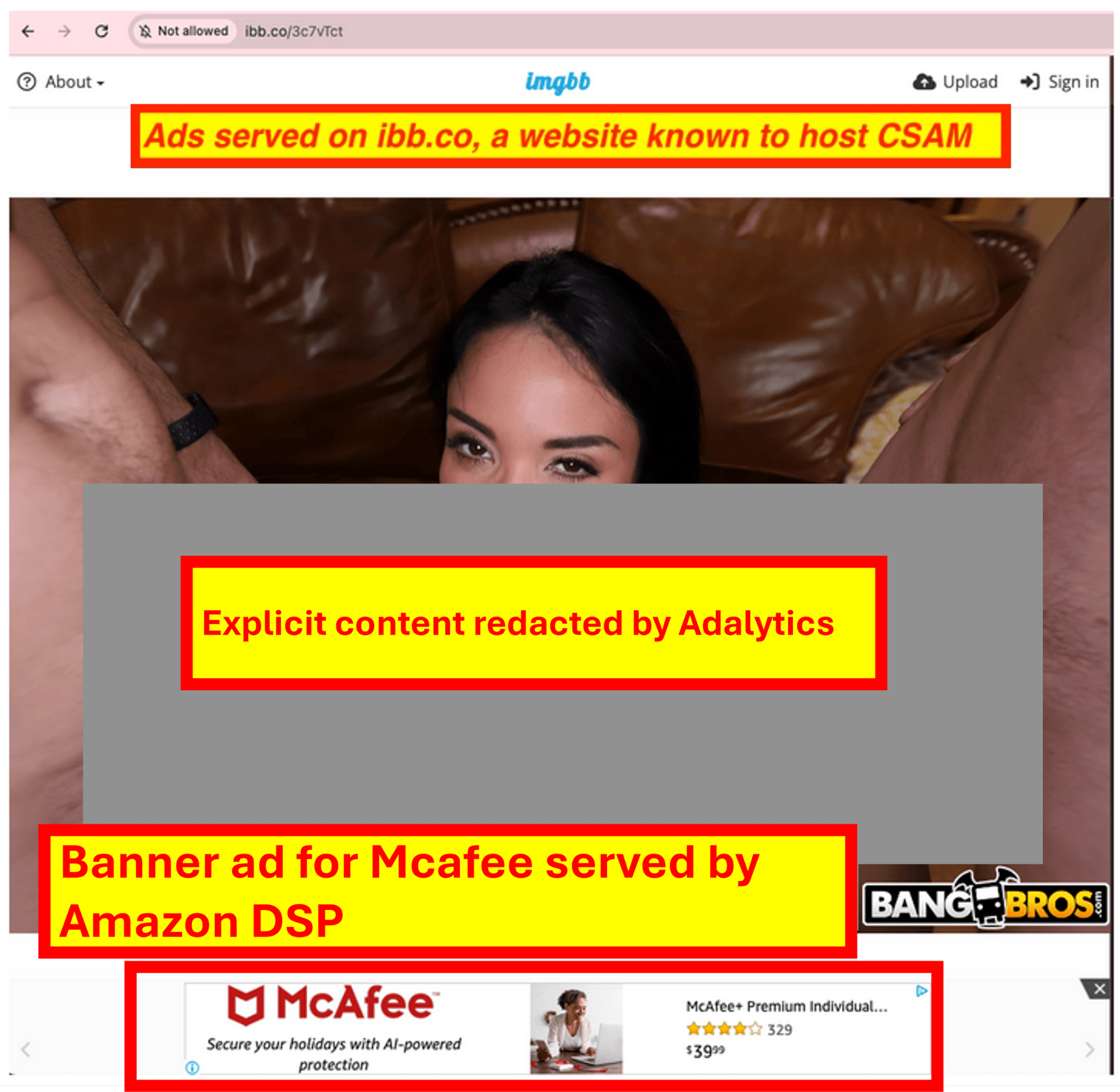

Screenshot of a McAfee ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

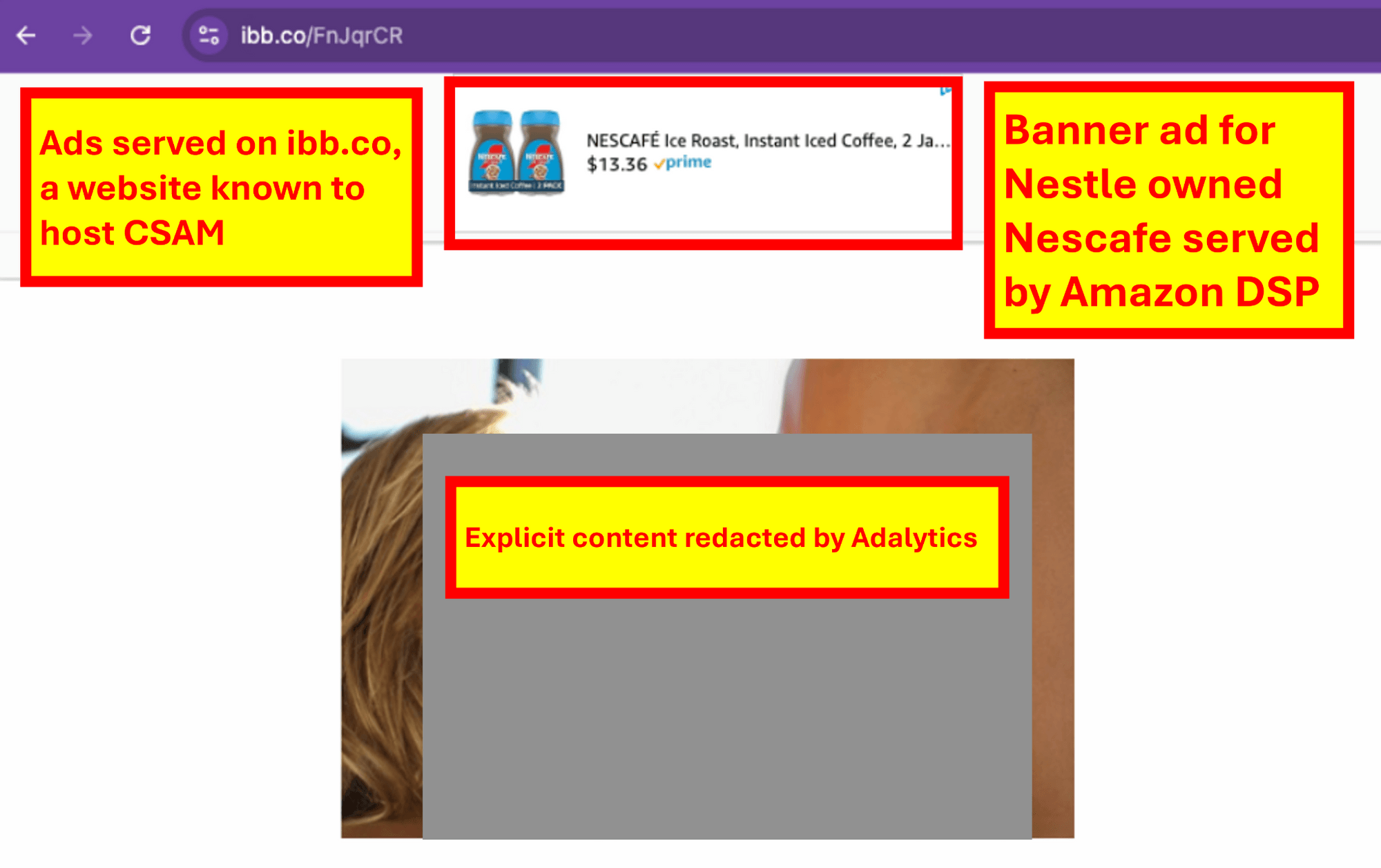

Screenshot of a Nestle Nescafe ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

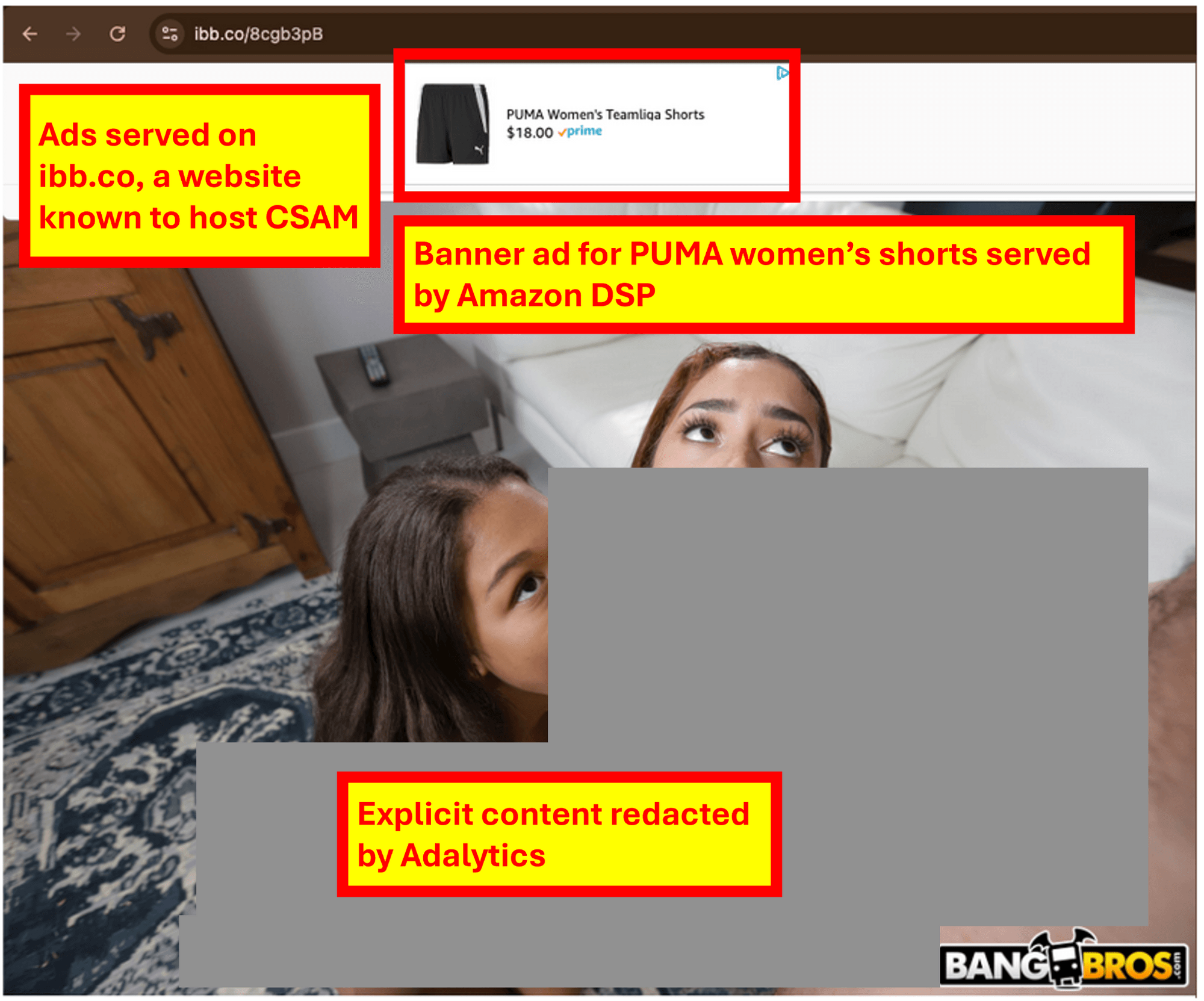

Screenshot of a Puma ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

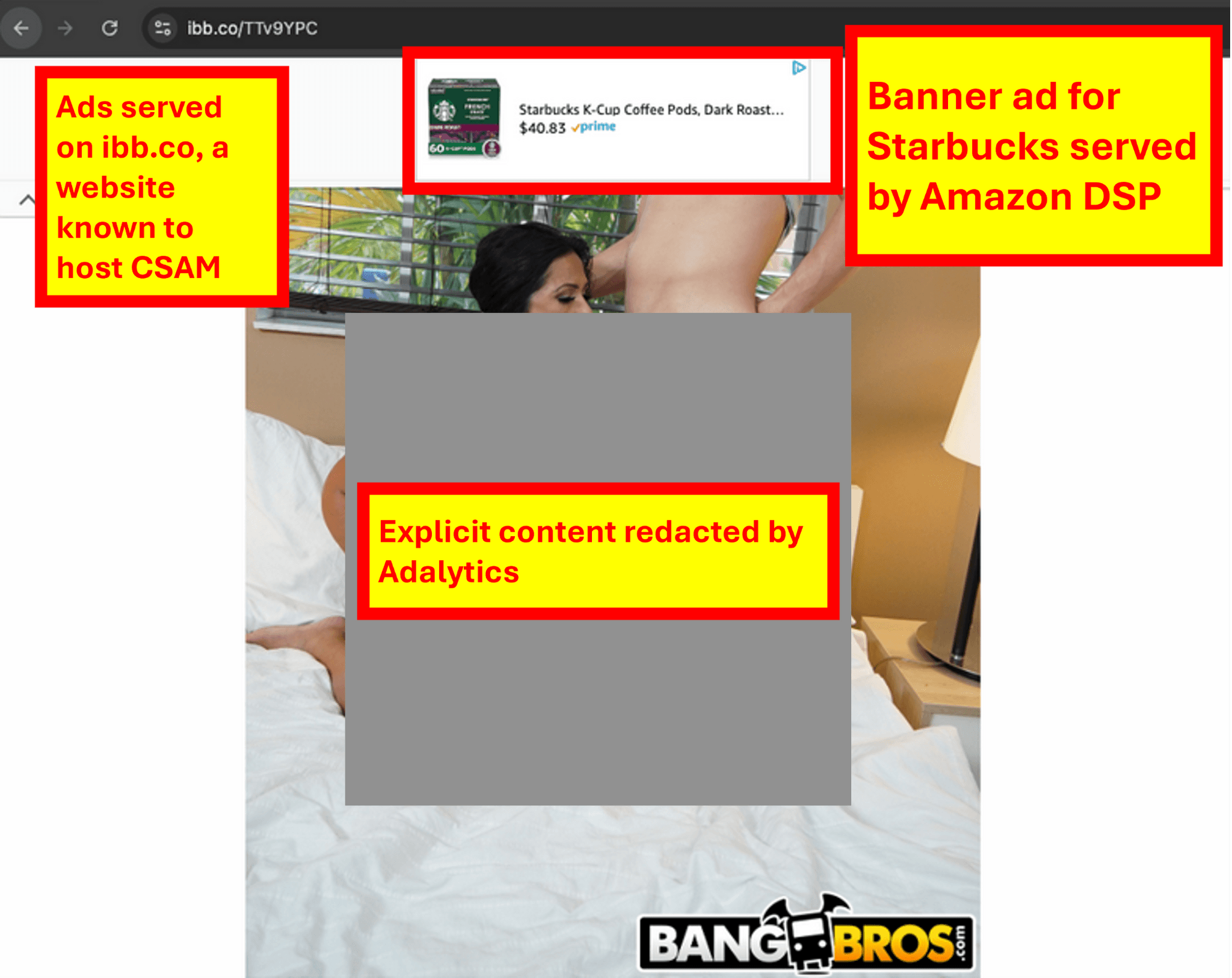

Screenshot of a Starbucks ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

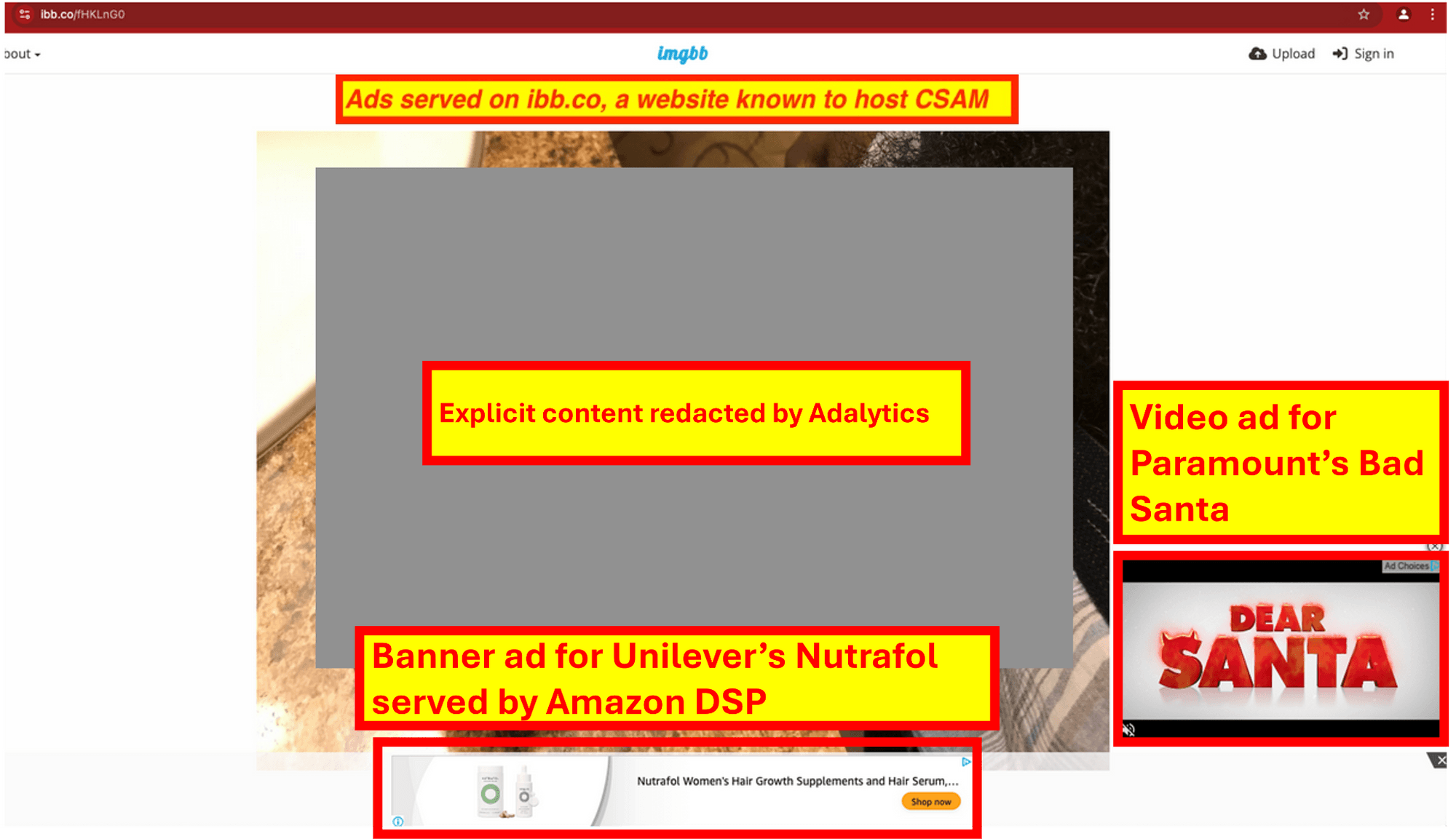

Screenshot of a Unilever Nutrafol ad served by Amazon DSP & an ad for Paramount’s Bad Santa on ibb.co, a website known to host child sexual abuse materials

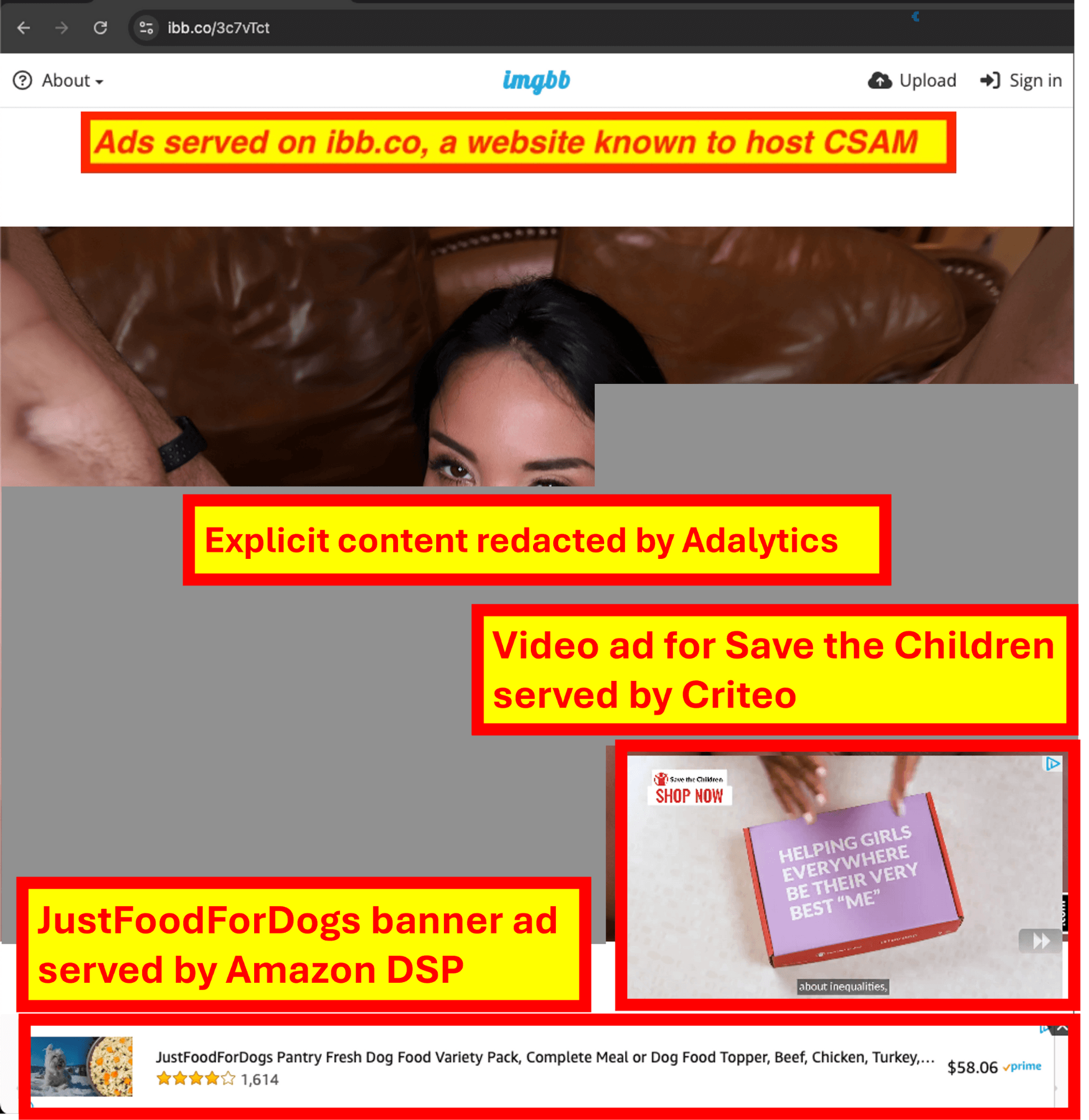

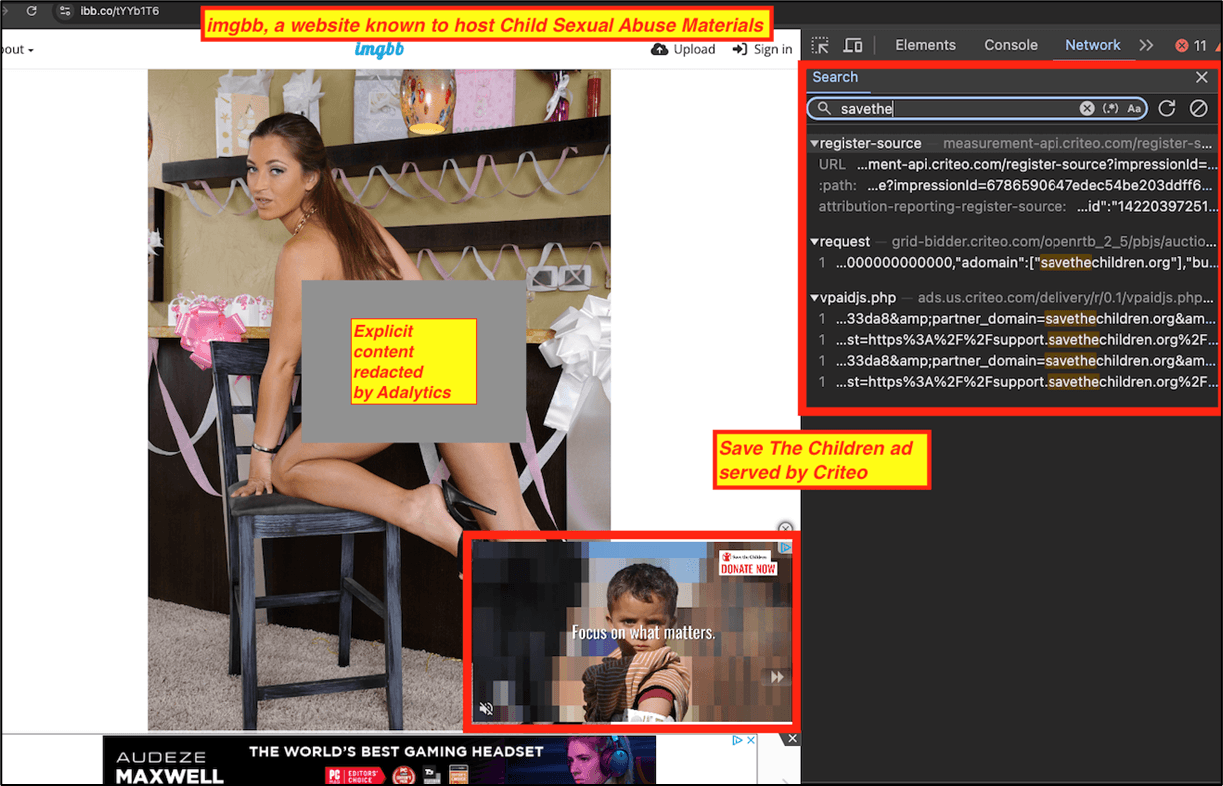

Screenshot of a JustFoodForDogs ad served by Amazon DSP & an ad for Save the Children served by Criteo on ibb.co, a website known to host child sexual abuse materials

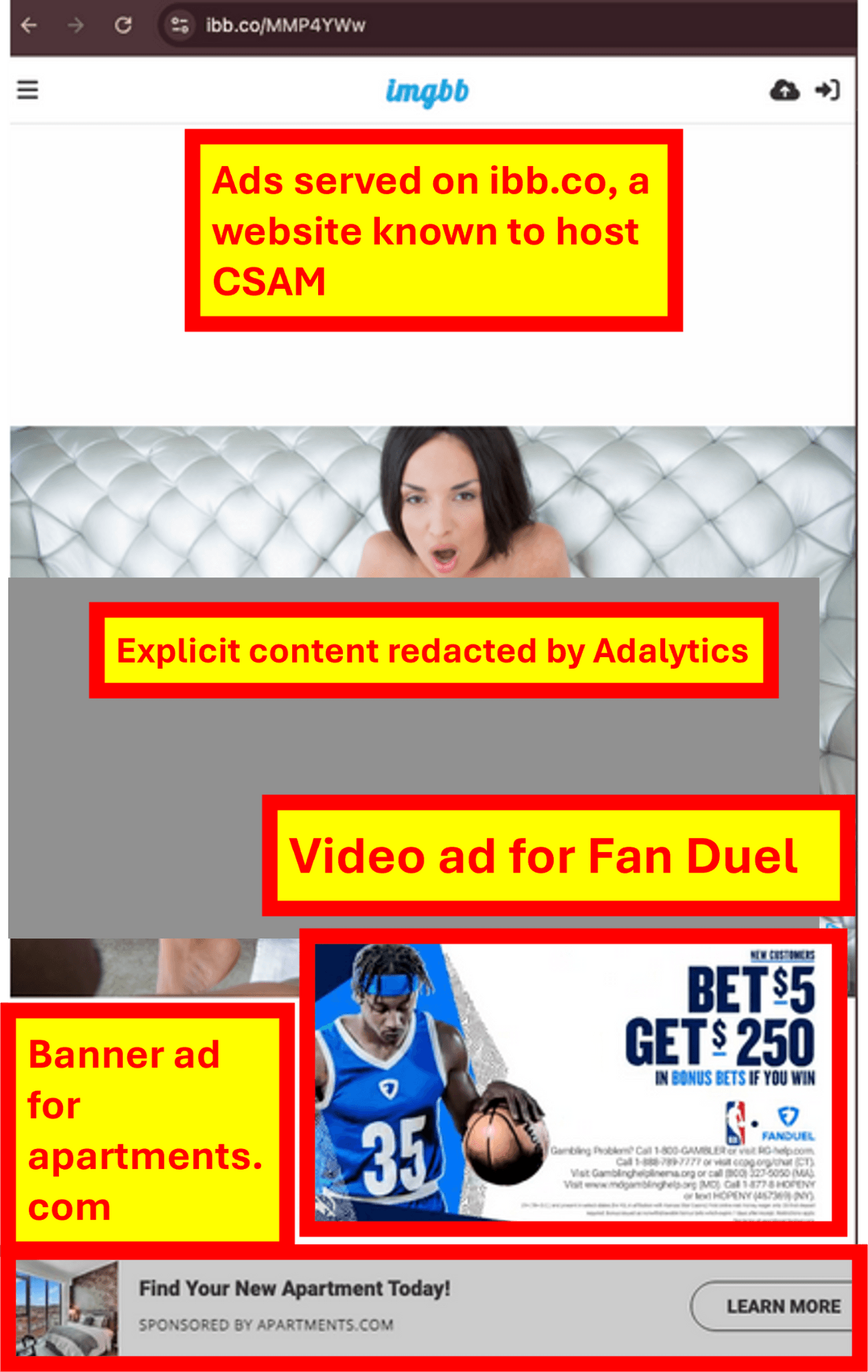

Screenshot of a FanDuel ad & an ad for apartments.com on ibb.co, a website known to host child sexual abuse materials

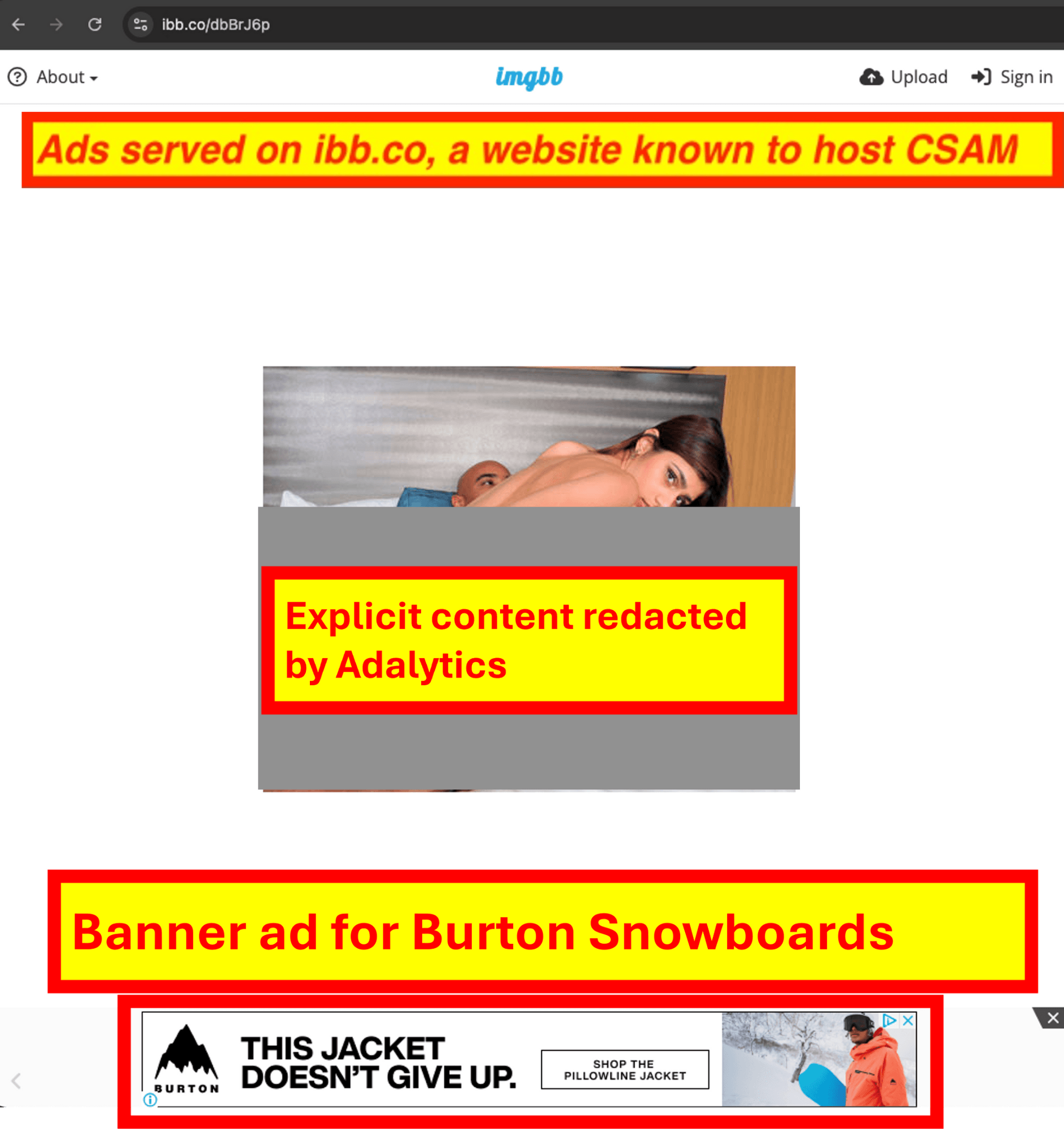

Screenshot of a Burton Snowboards ad on ibb.co, a website known to host child sexual abuse materials

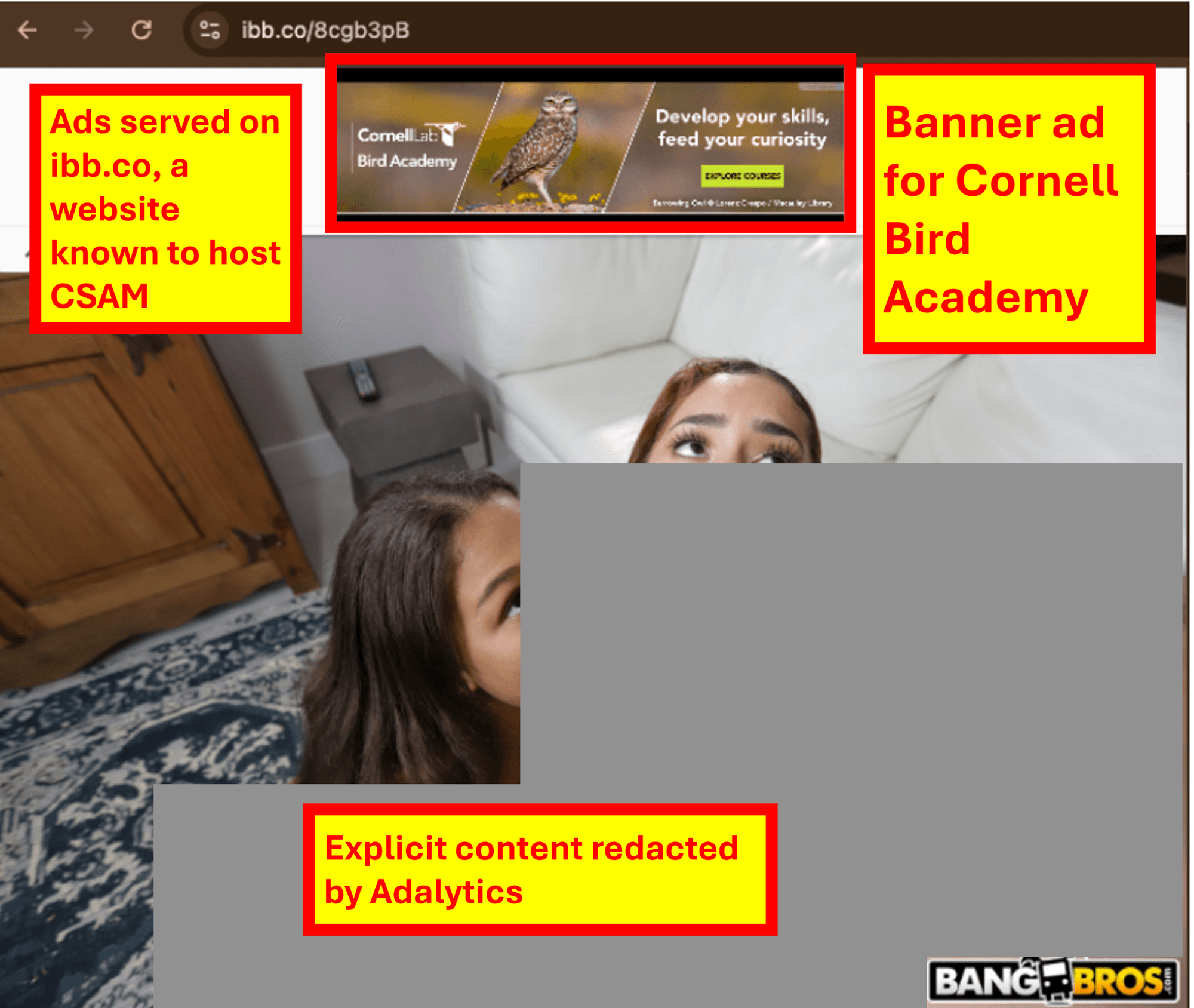

Screenshot of a Cornell Bird Academy ad on ibb.co, a website known to host child sexual abuse materials

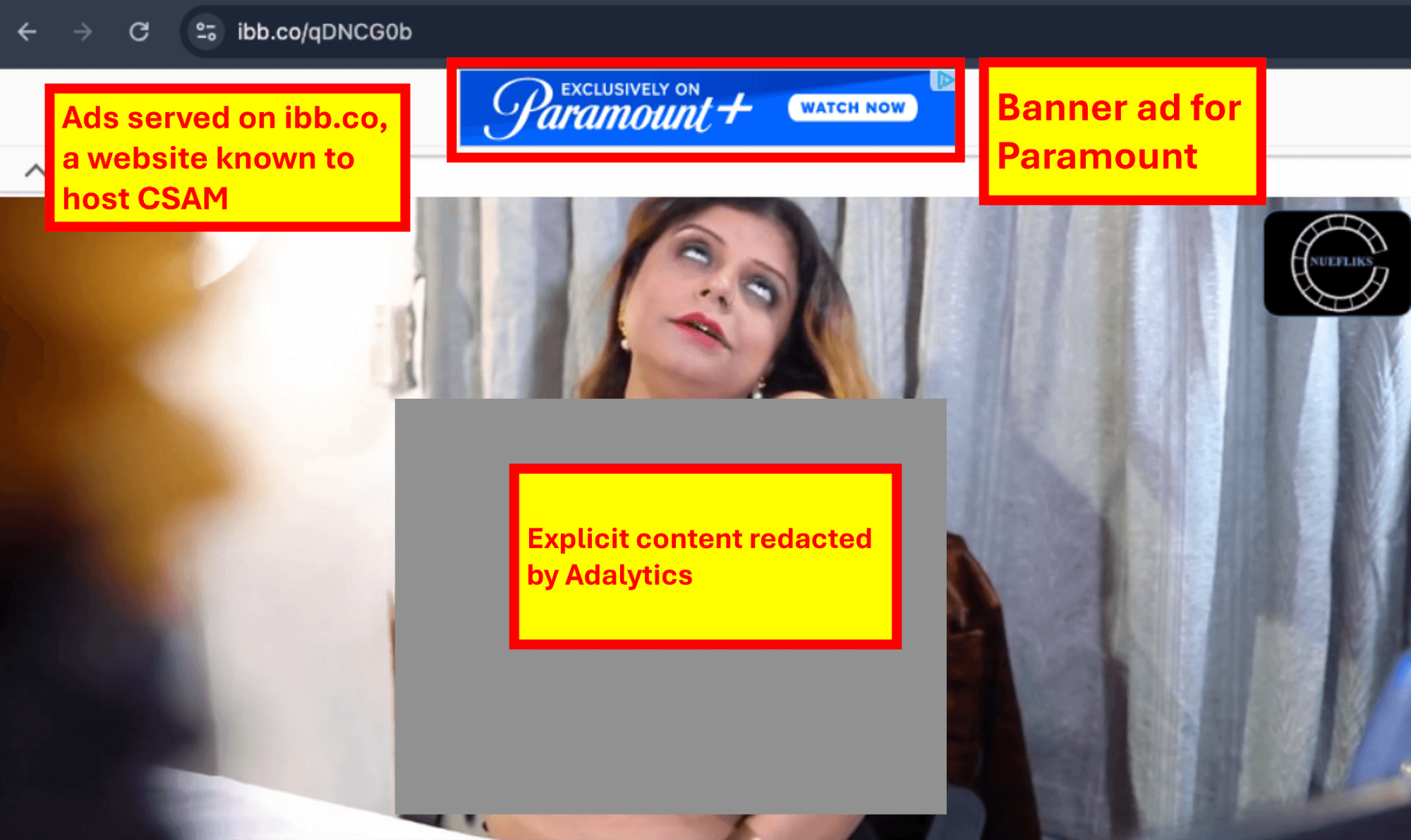

Screenshot of a Paramount+ ad on ibb.co, a website known to host child sexual abuse materials

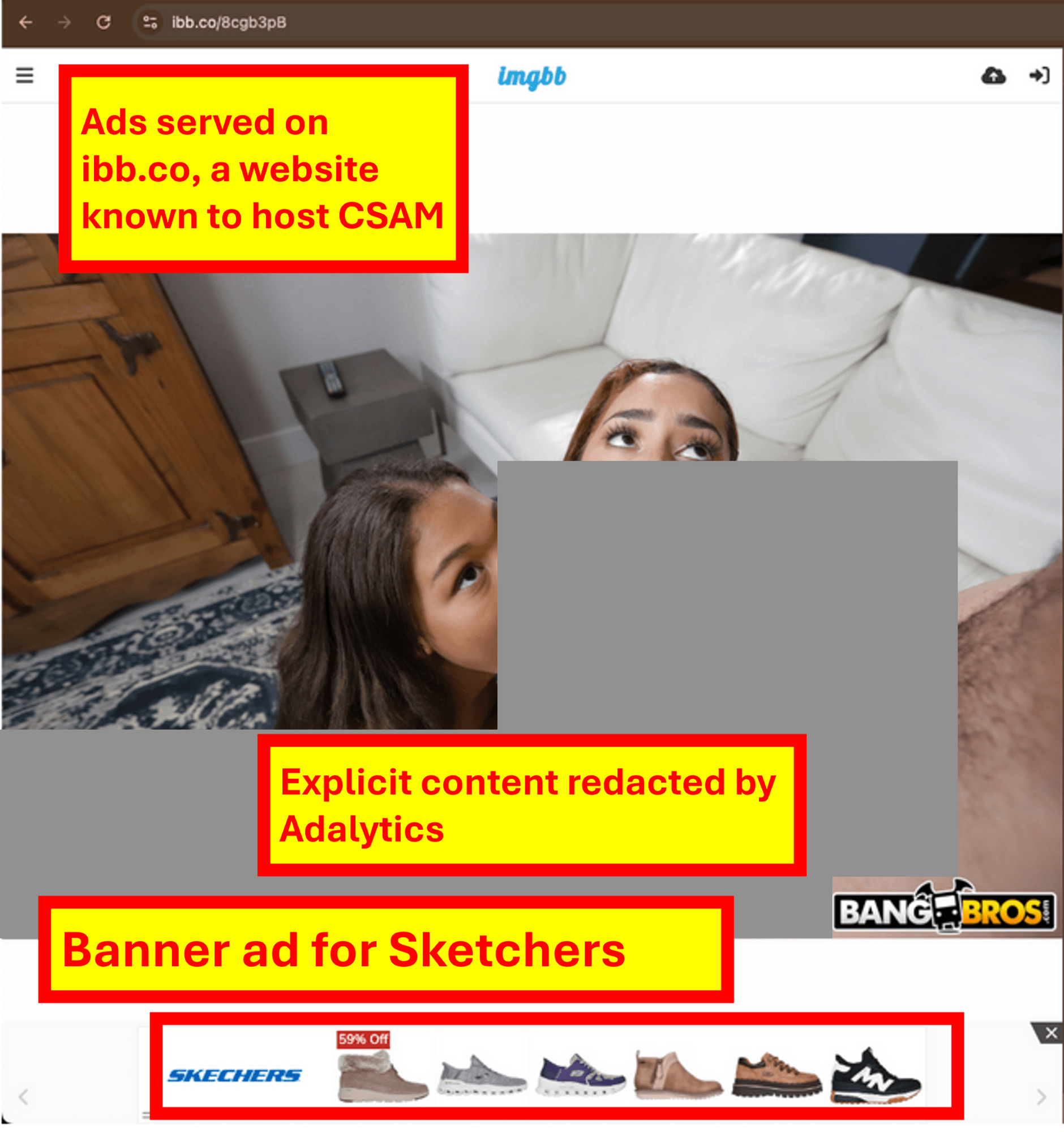

Screenshot of a Skechers ad on ibb.co, a website known to host child sexual abuse materials

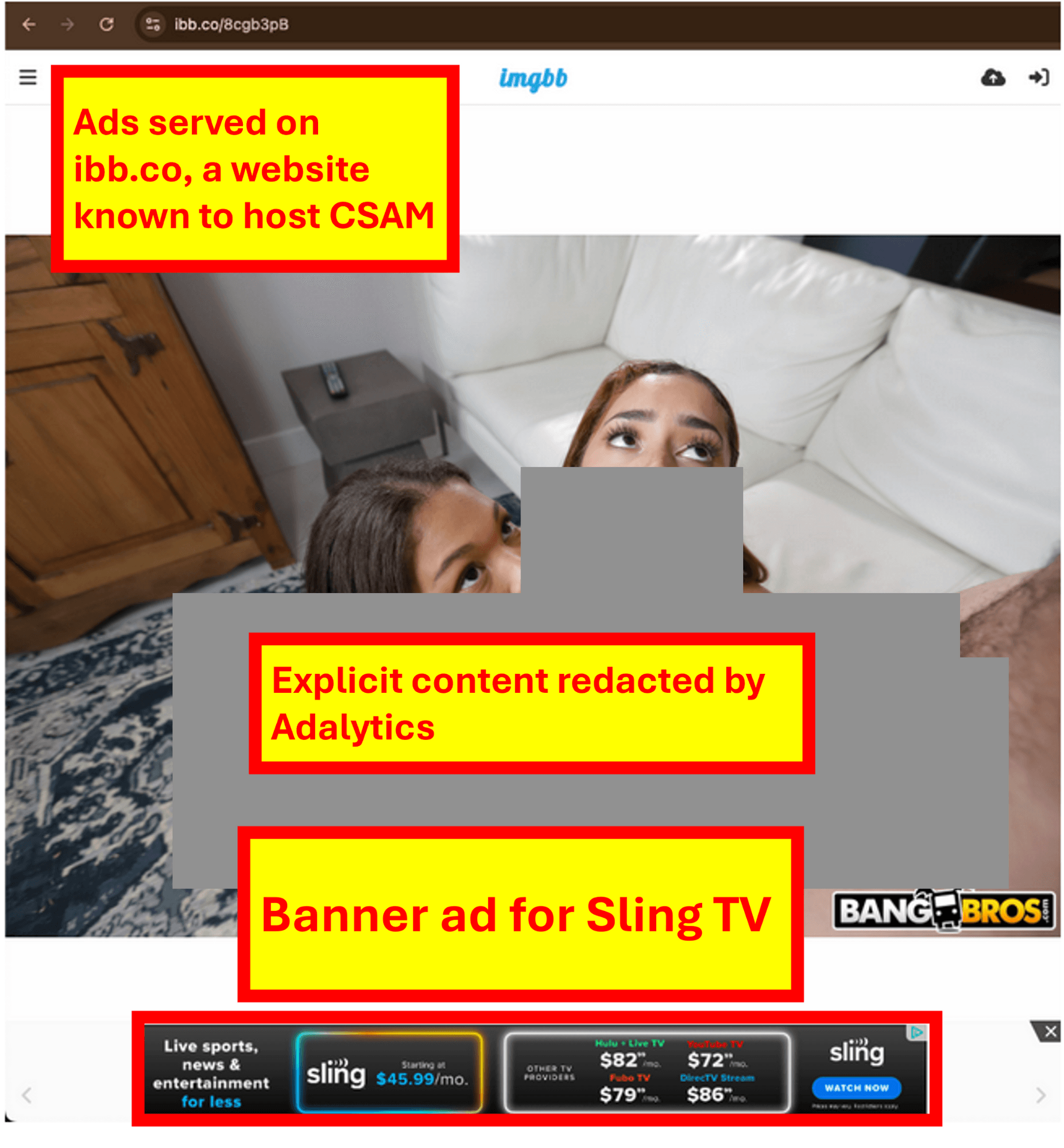

Screenshot of a Sling TV ad on ibb.co, a website known to host child sexual abuse materials

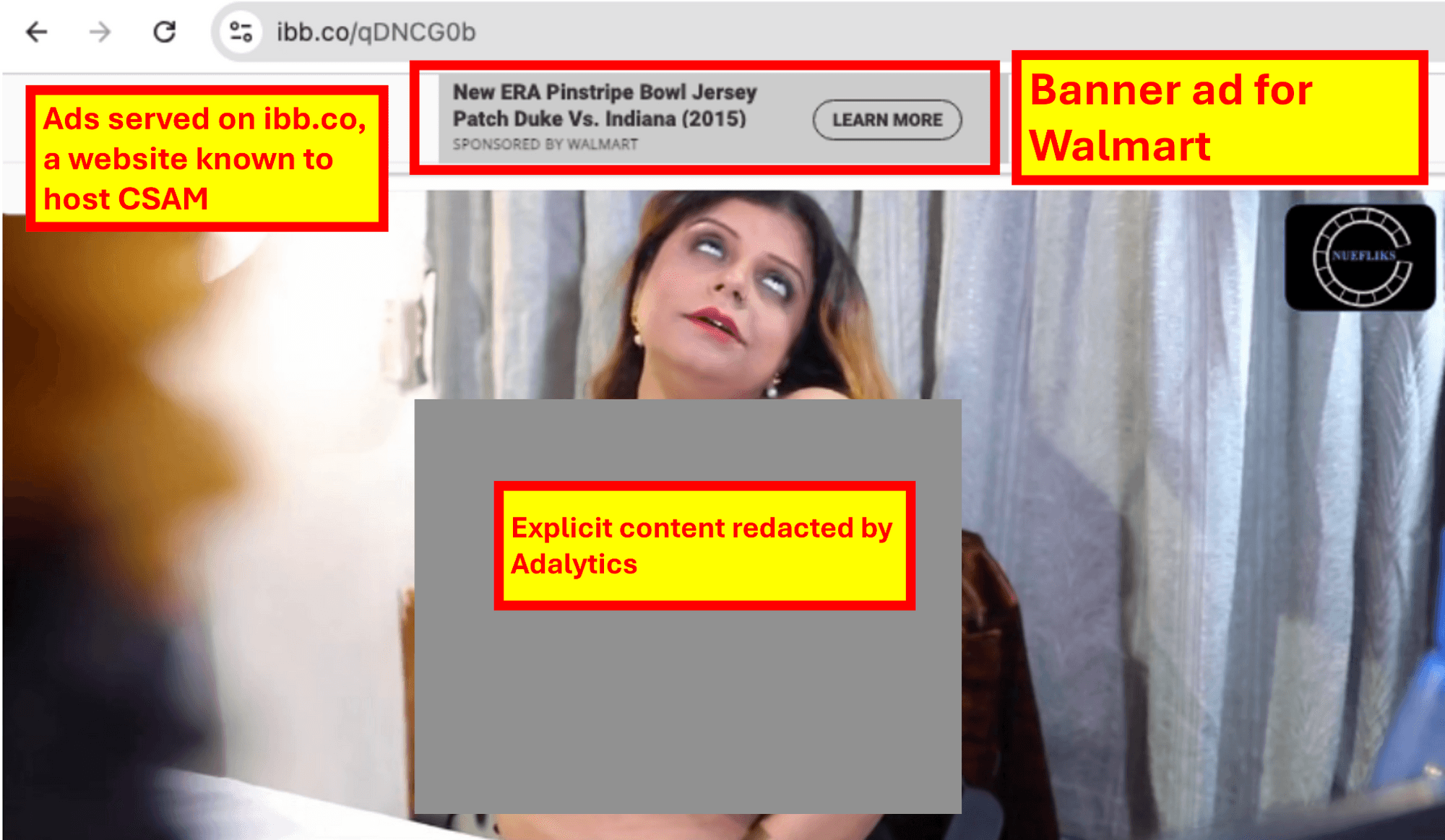

Screenshot of a Walmart ad on ibb.co, a website known to host child sexual abuse materials

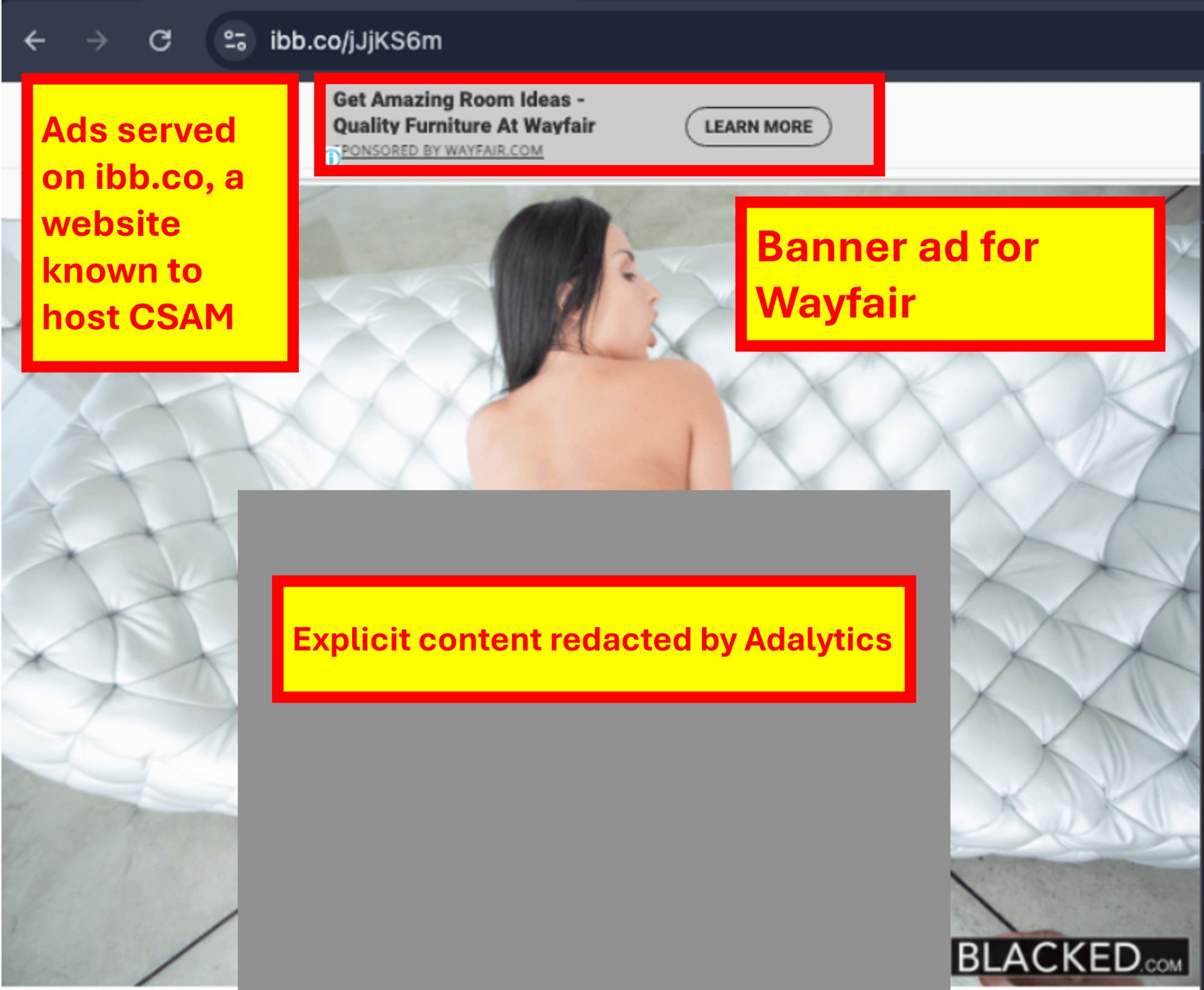

Screenshot of a Wayfair ad on ibb.co, a website known to host child sexual abuse materials

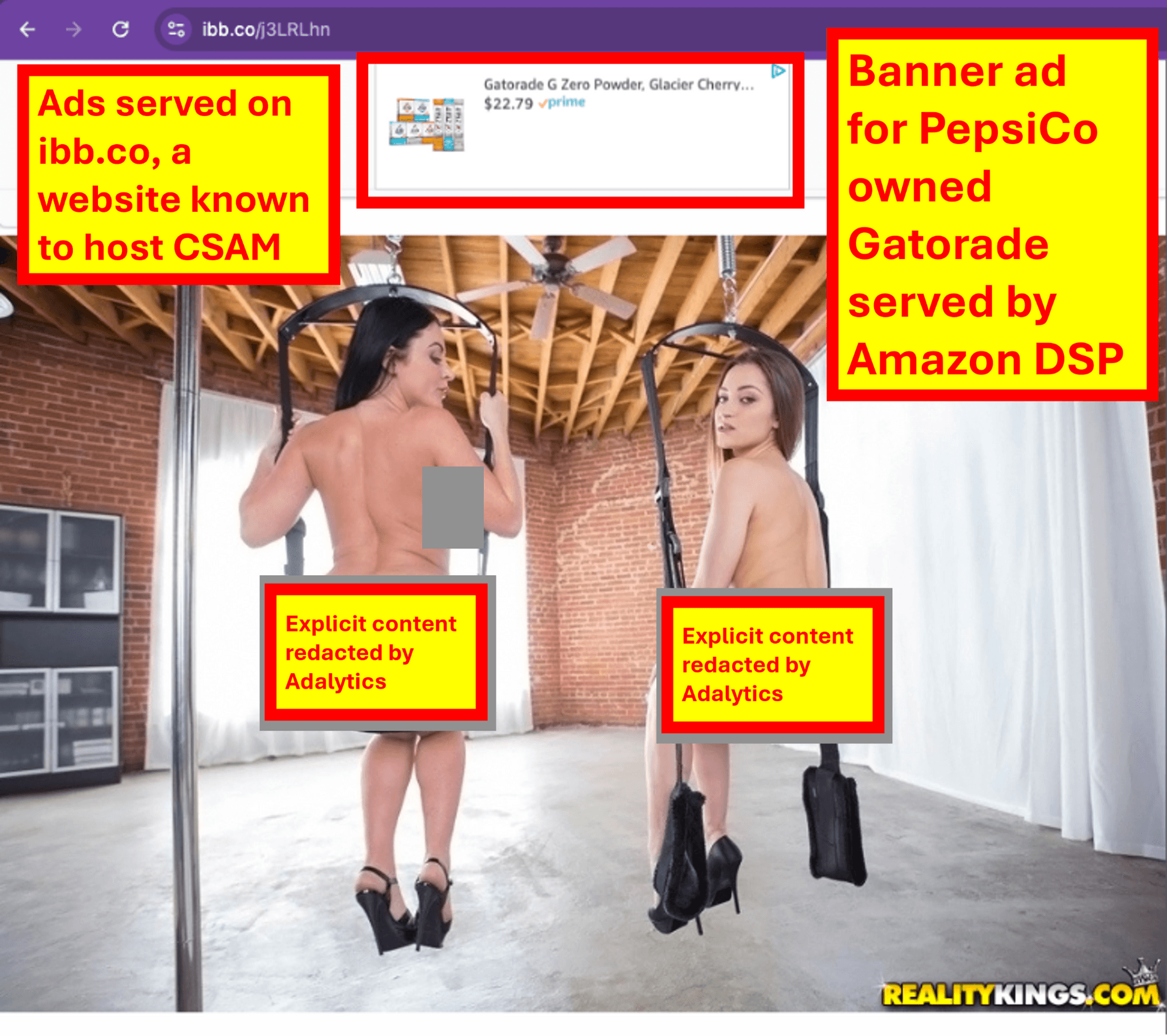

Screenshot of a PepsiCo owned Gatorade ad on ibb.co, a website known to host child sexual abuse materials

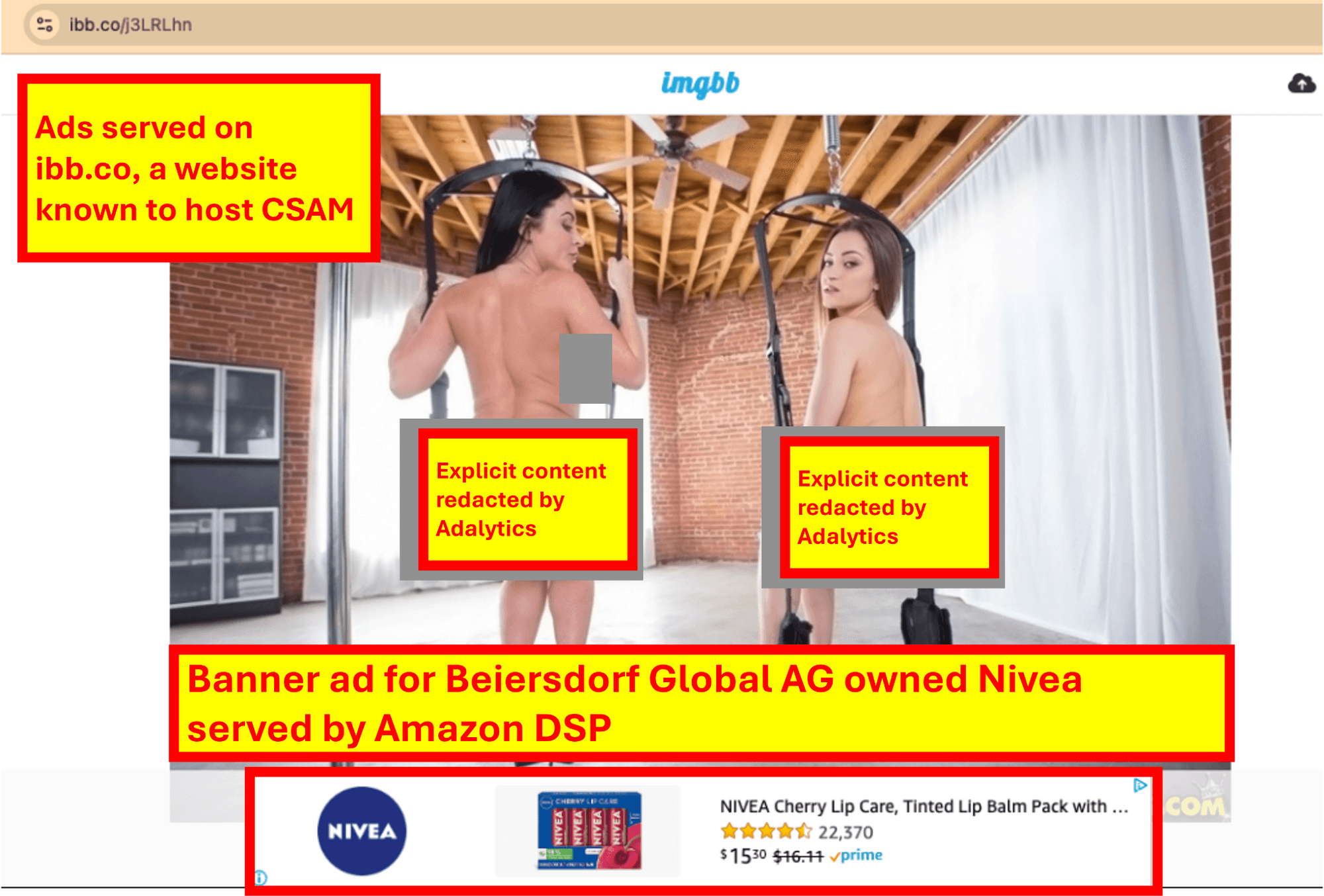

Screenshot of a Beiersdorf Global AG owned Nivea ad on ibb.co, a website known to host child sexual abuse materials

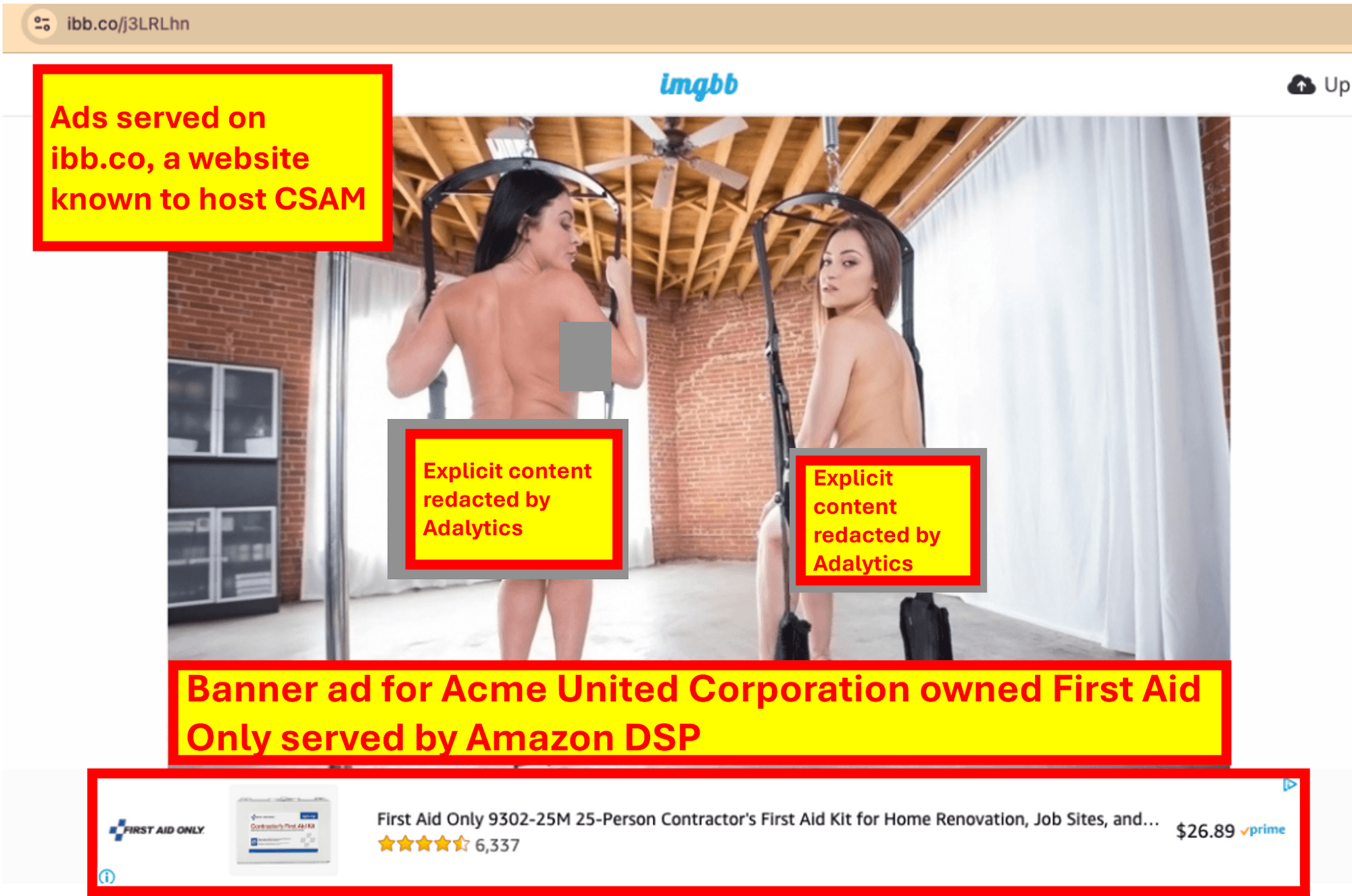

Screenshot of an Acme United Corporation owned First Aid Only ad on ibb.co, a website known to host child sexual abuse materials

Screenshot of an Amazon owned Audible ad on ibb.co, a website known to host child sexual abuse materials

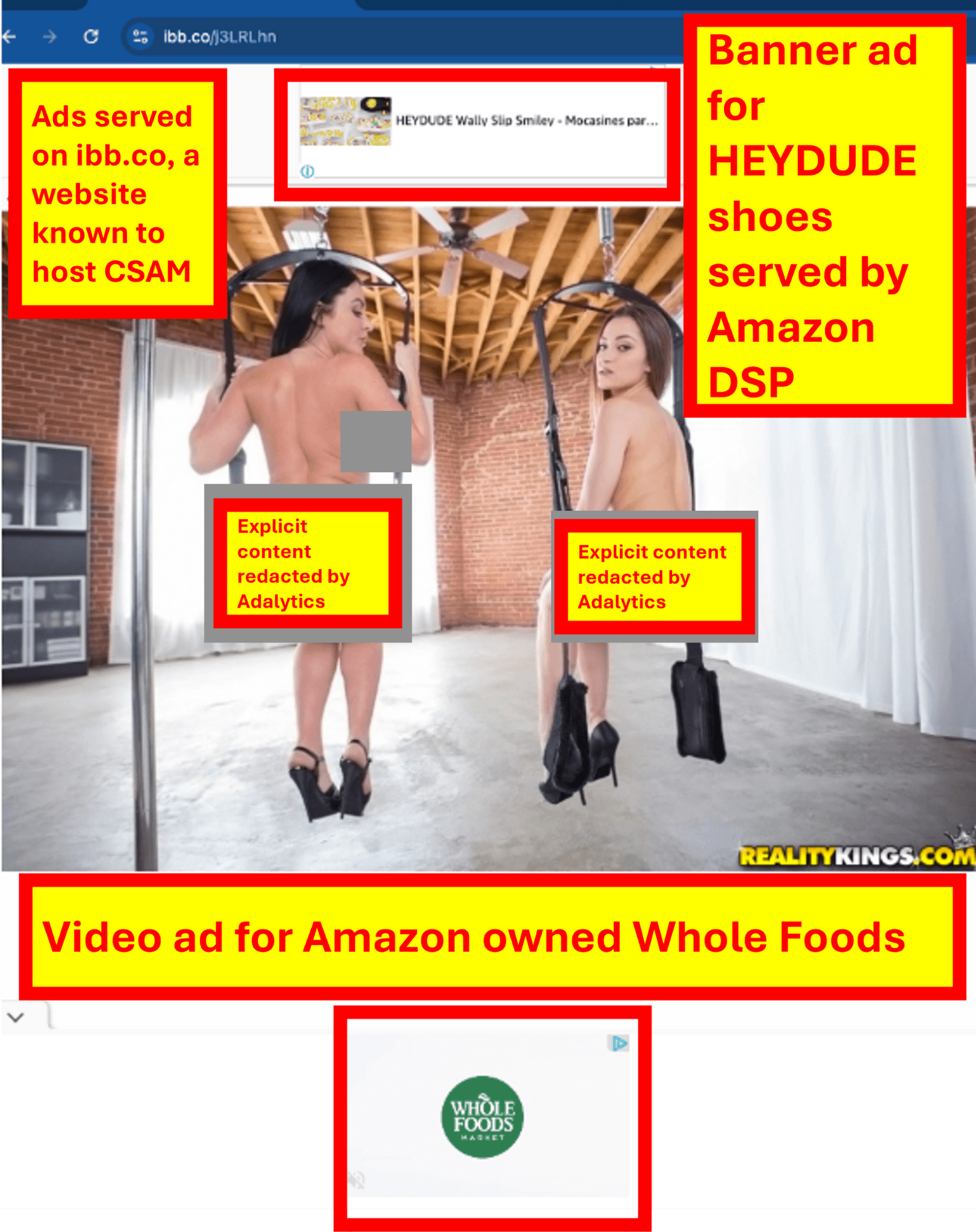

Screenshot of a HEYDUDE shoes ad & a Whole Foods ad on ibb.co, a website known to host child sexual abuse materials

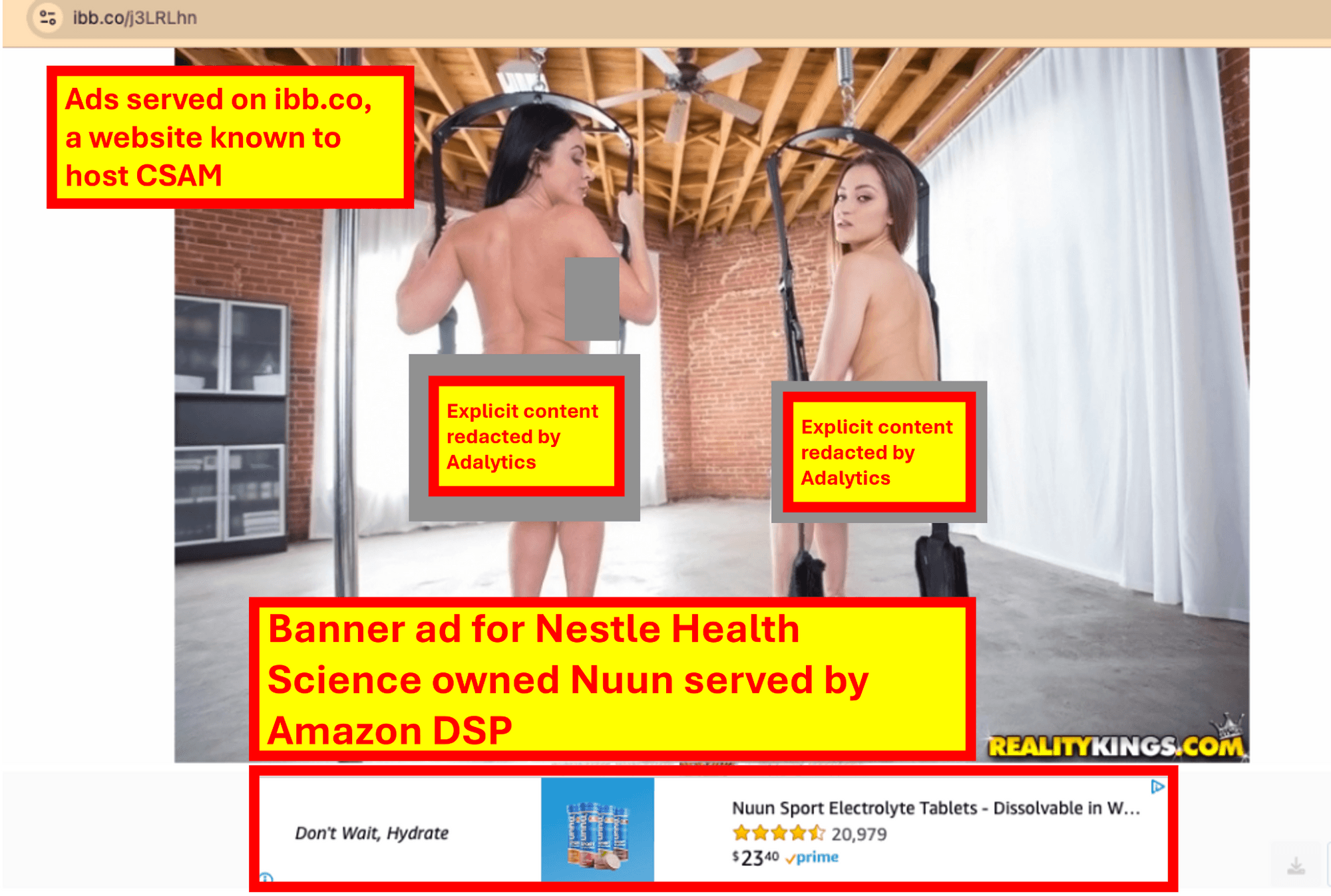

Screenshot of a Nestle Health Science owned Nuun ad on ibb.co, a website known to host child sexual abuse materials

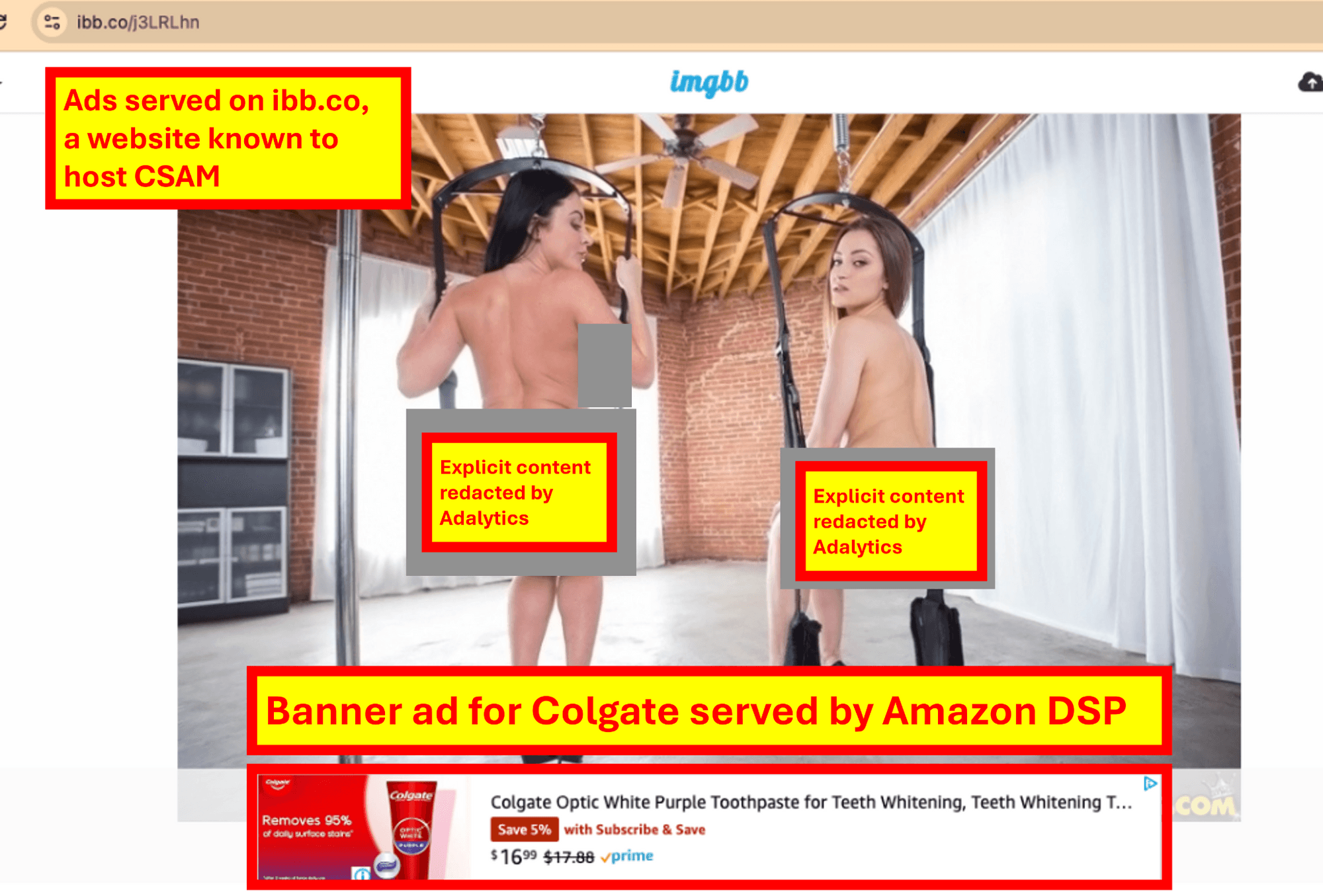

Screenshot of a Colgate ad on ibb.co, a website known to host child sexual abuse materials

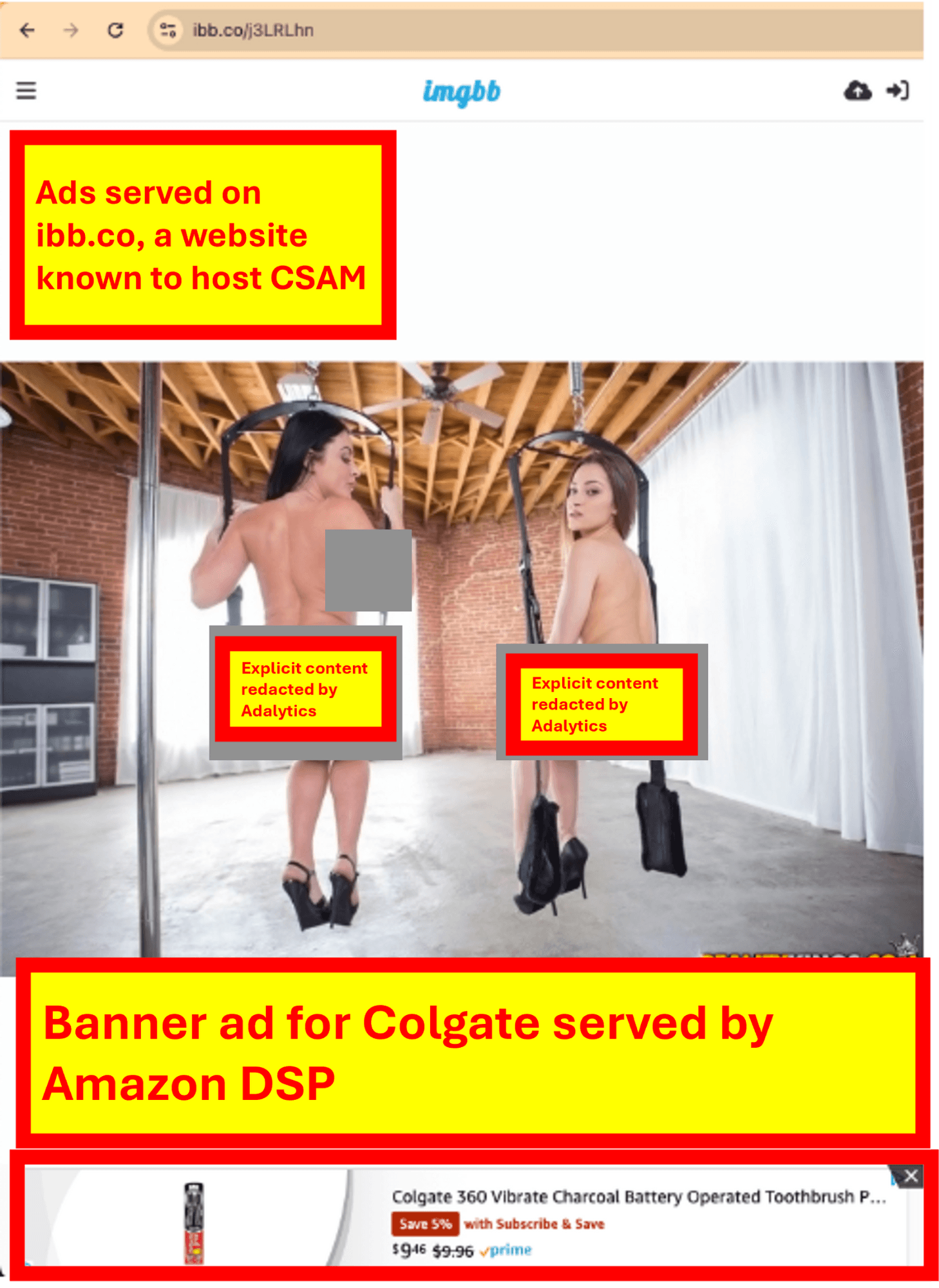

Screenshot of a Colgate ad on ibb.co, a website known to host child sexual abuse materials

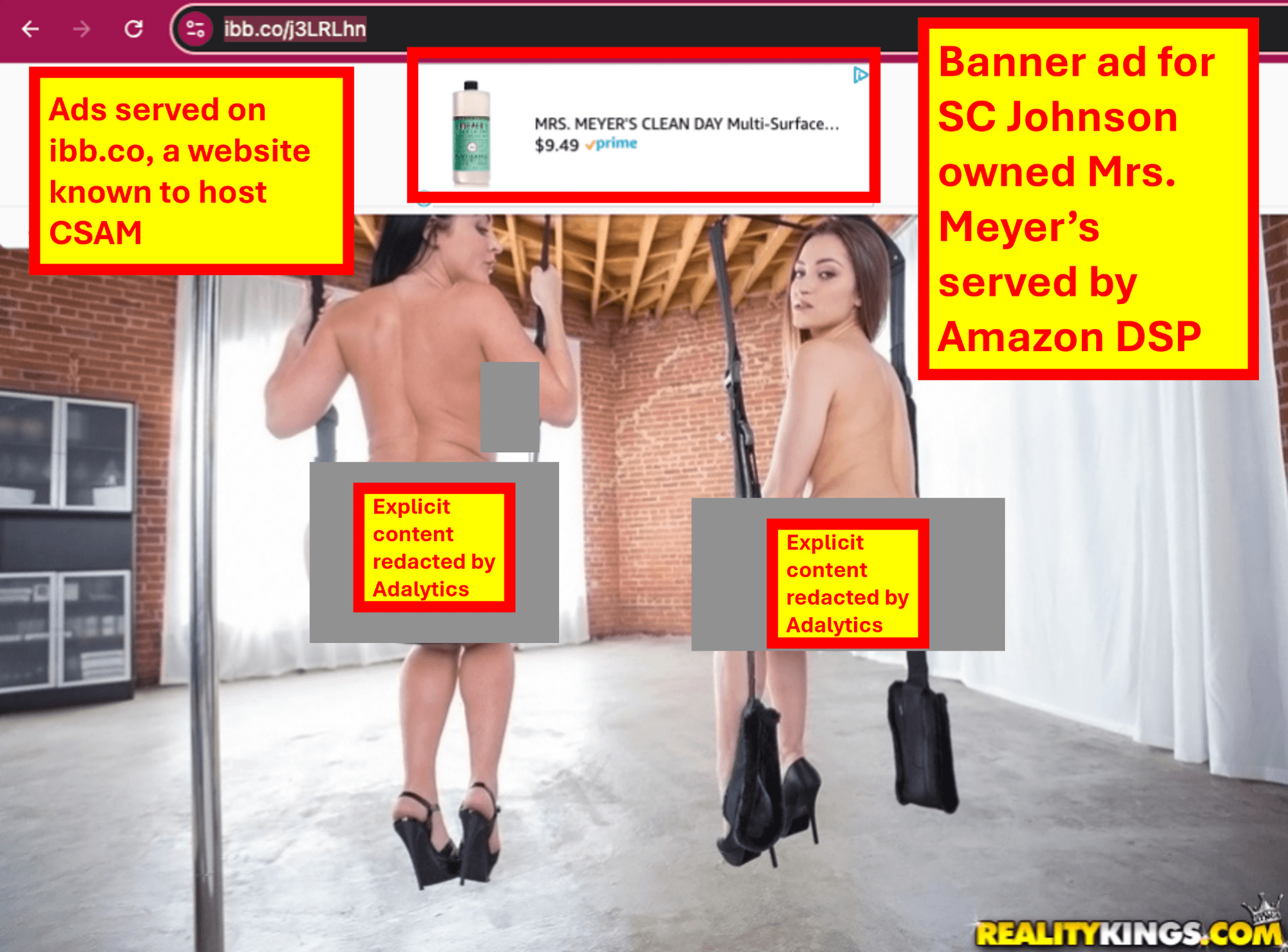

Screenshot of an SC Johnson owned Meyer’s ad on ibb.co, a website known to host child sexual abuse materials

Screenshot of a Clorox ad on ibb.co, a website known to host child sexual abuse materials

Screenshot of a Colgate ad on ibb.co, a website known to host child sexual abuse materials

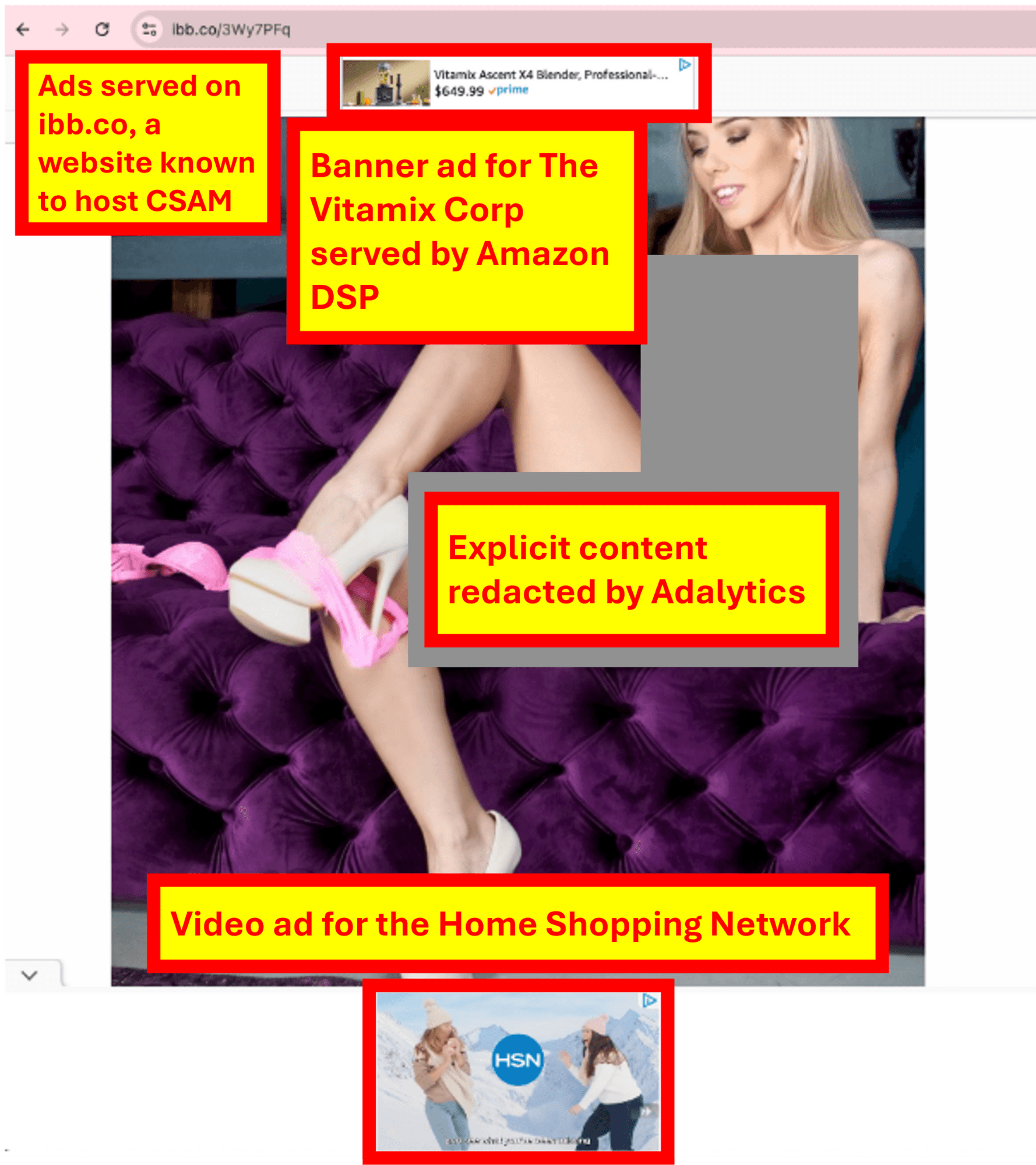

Screenshot of a Vitamix Corp ad & a Home Shopping Network ad on ibb.co, a website known to host child sexual abuse materials

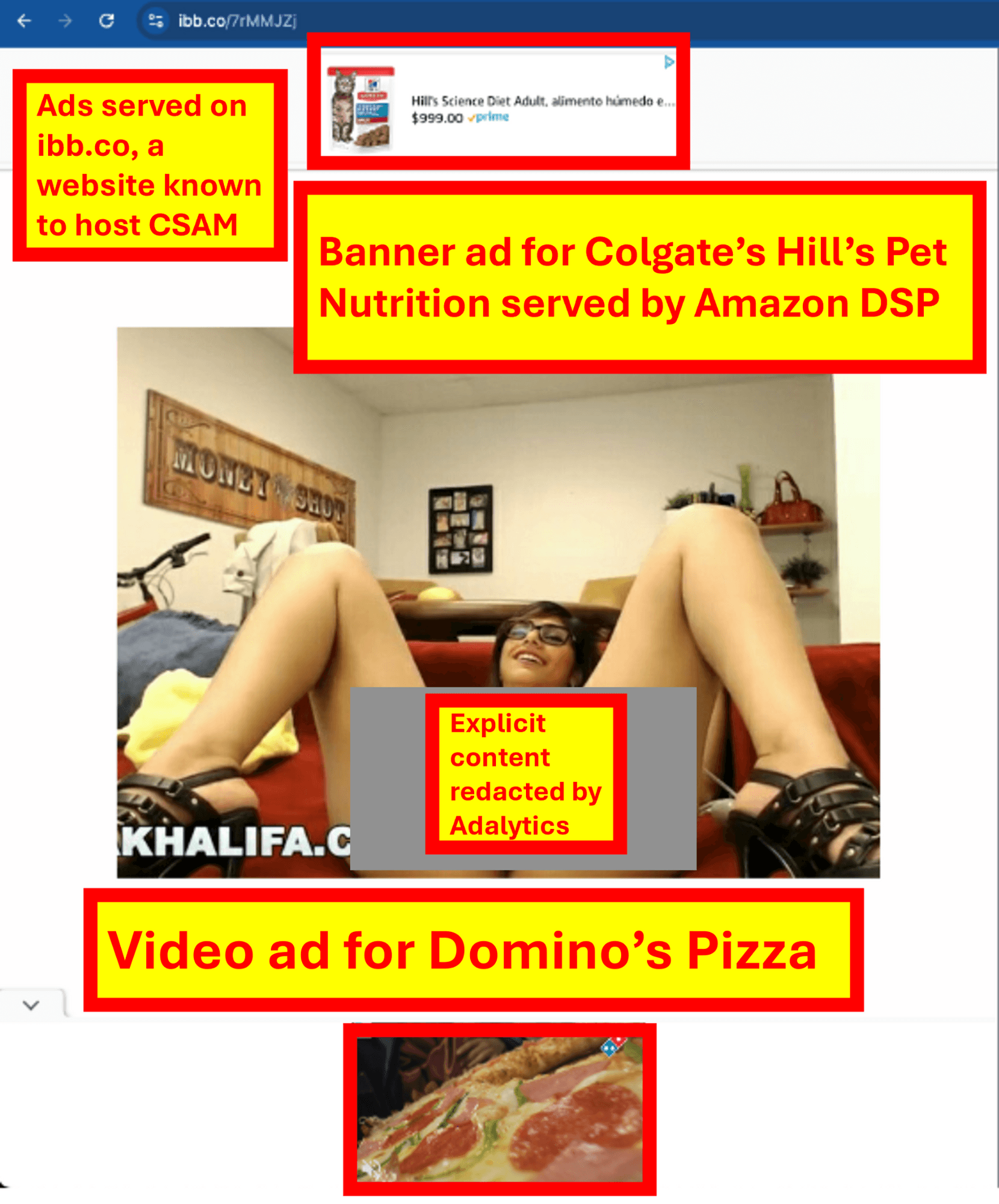

Screenshot of a Colgate owned Hill’s Pet Nutrition ad & a Domino’s Pizza ad on ibb.co, a website known to host child sexual abuse materials

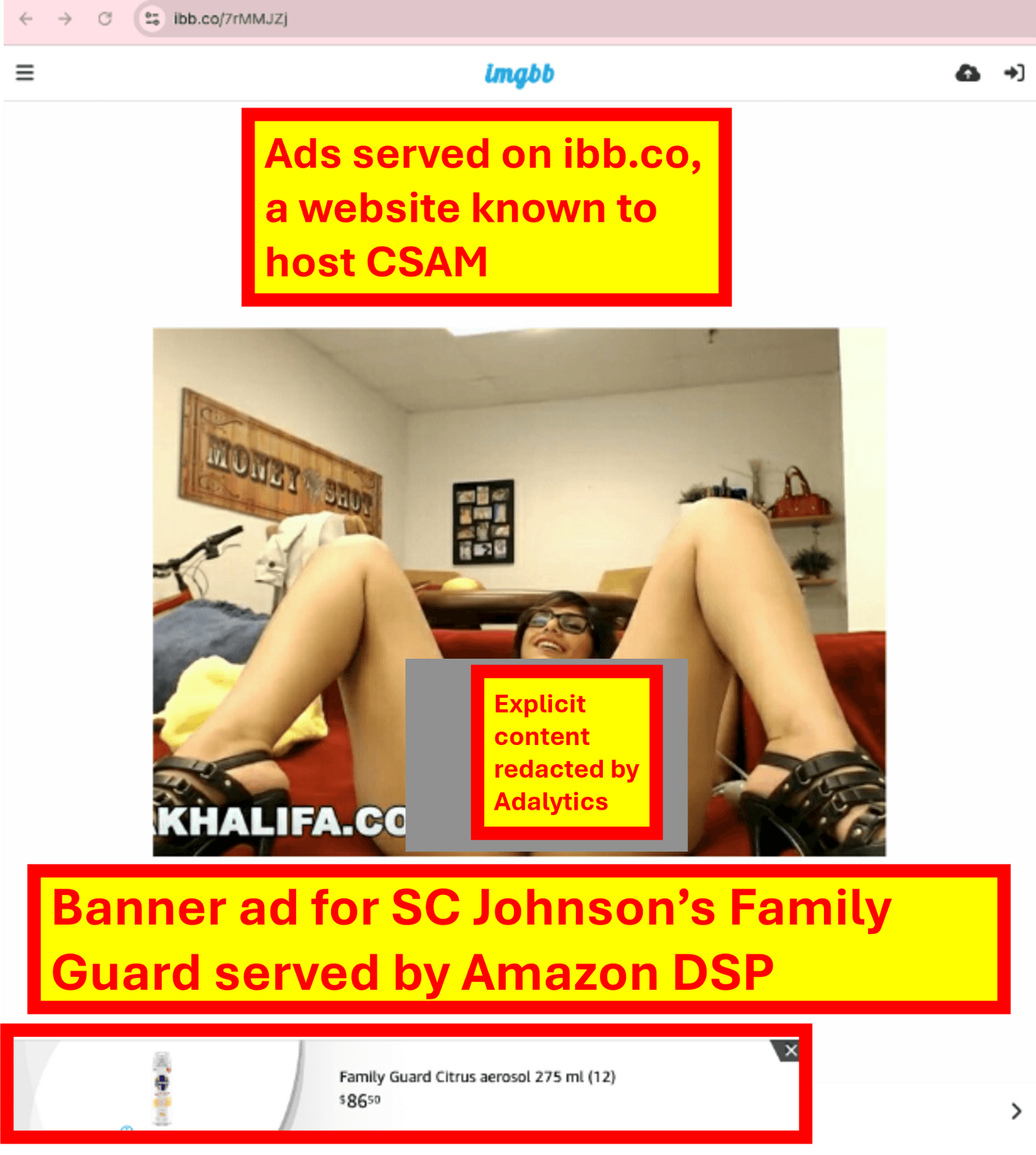

Screenshot of an SC Johnson owned Family Guard ad on ibb.co, a website known to host child sexual abuse materials

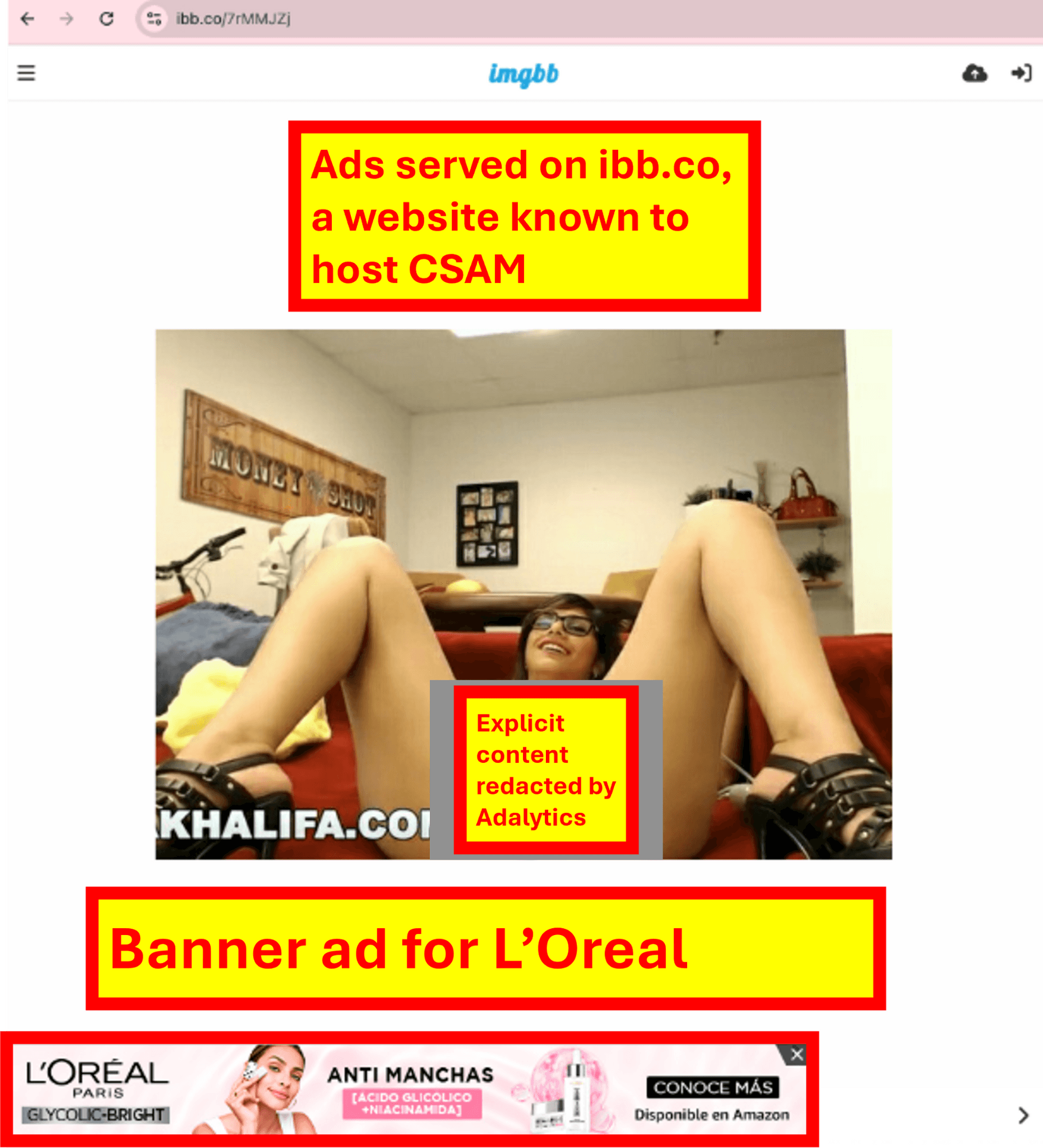

Screenshot of a L’Oreal ad on ibb.co, a website known to host child sexual abuse materials

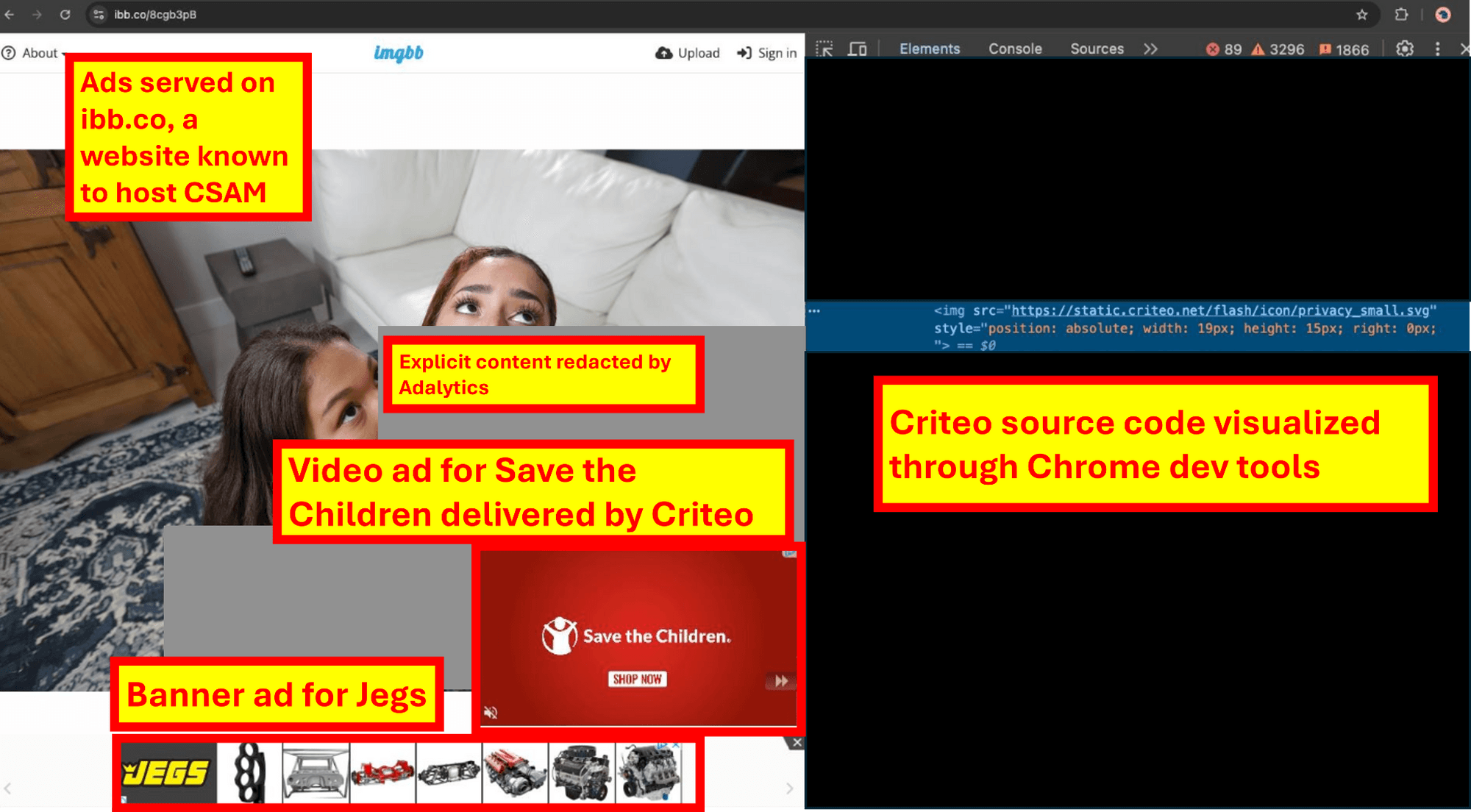

Screenshot of a Save the Children ad served by Criteo & Jegs ad on ibb.co, a website known to host child sexual abuse materials

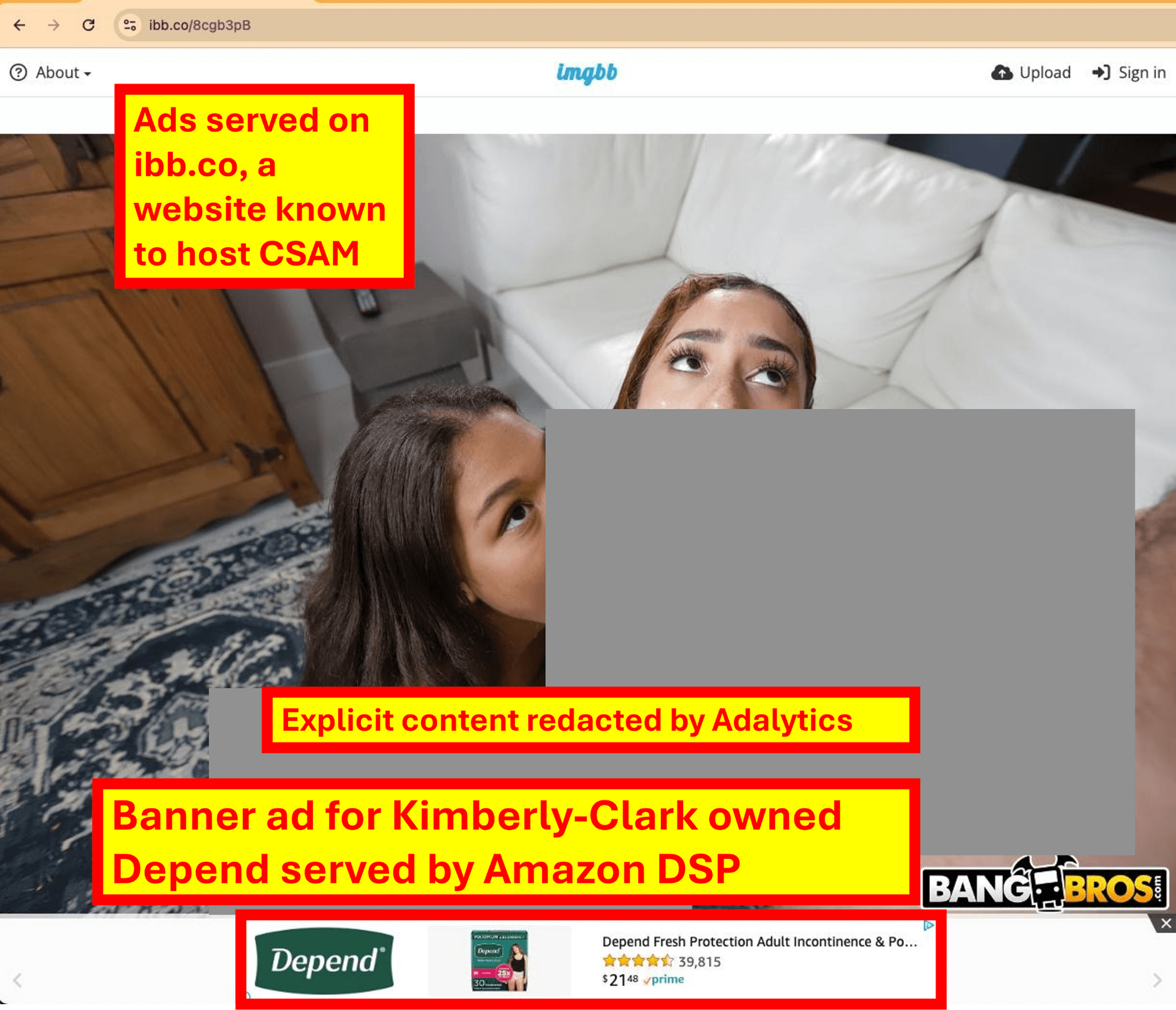

Screenshot of a Kimberly-Clark owned Depend ad on ibb.co, a website known to host child sexual abuse materials

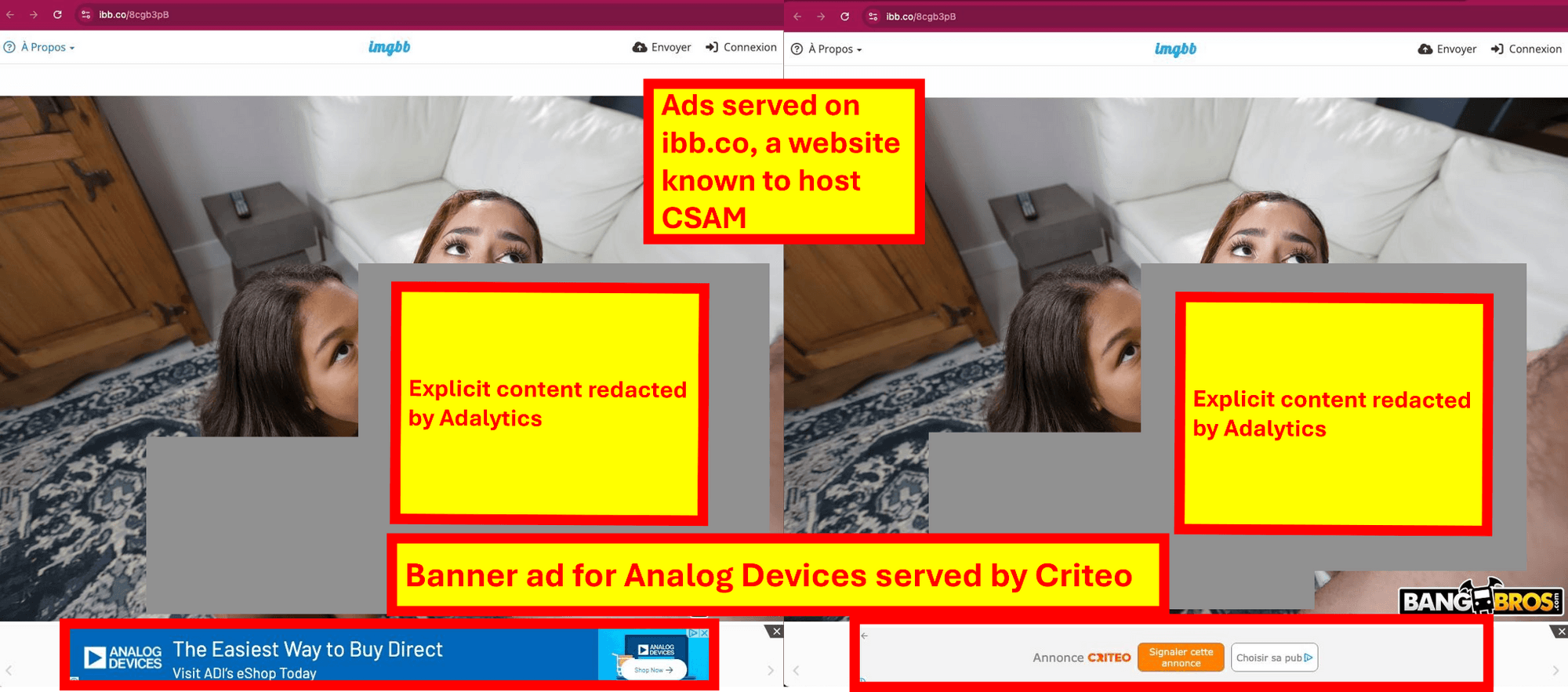

Screenshot of an Analog Devices ad on ibb.co, a website known to host child sexual abuse materials

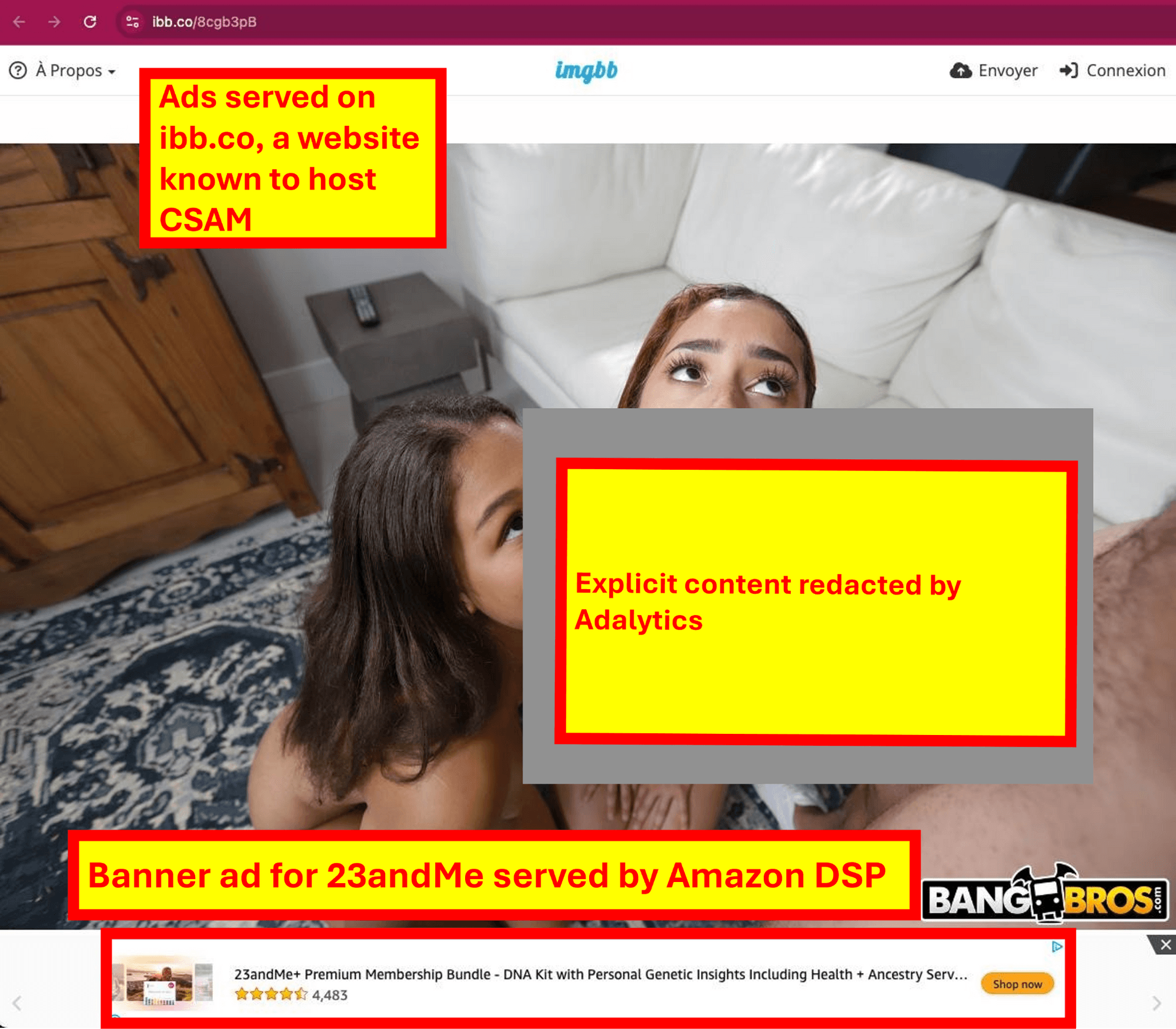

Screenshot of a 23andMe ad on ibb.co, a website known to host child sexual abuse materials

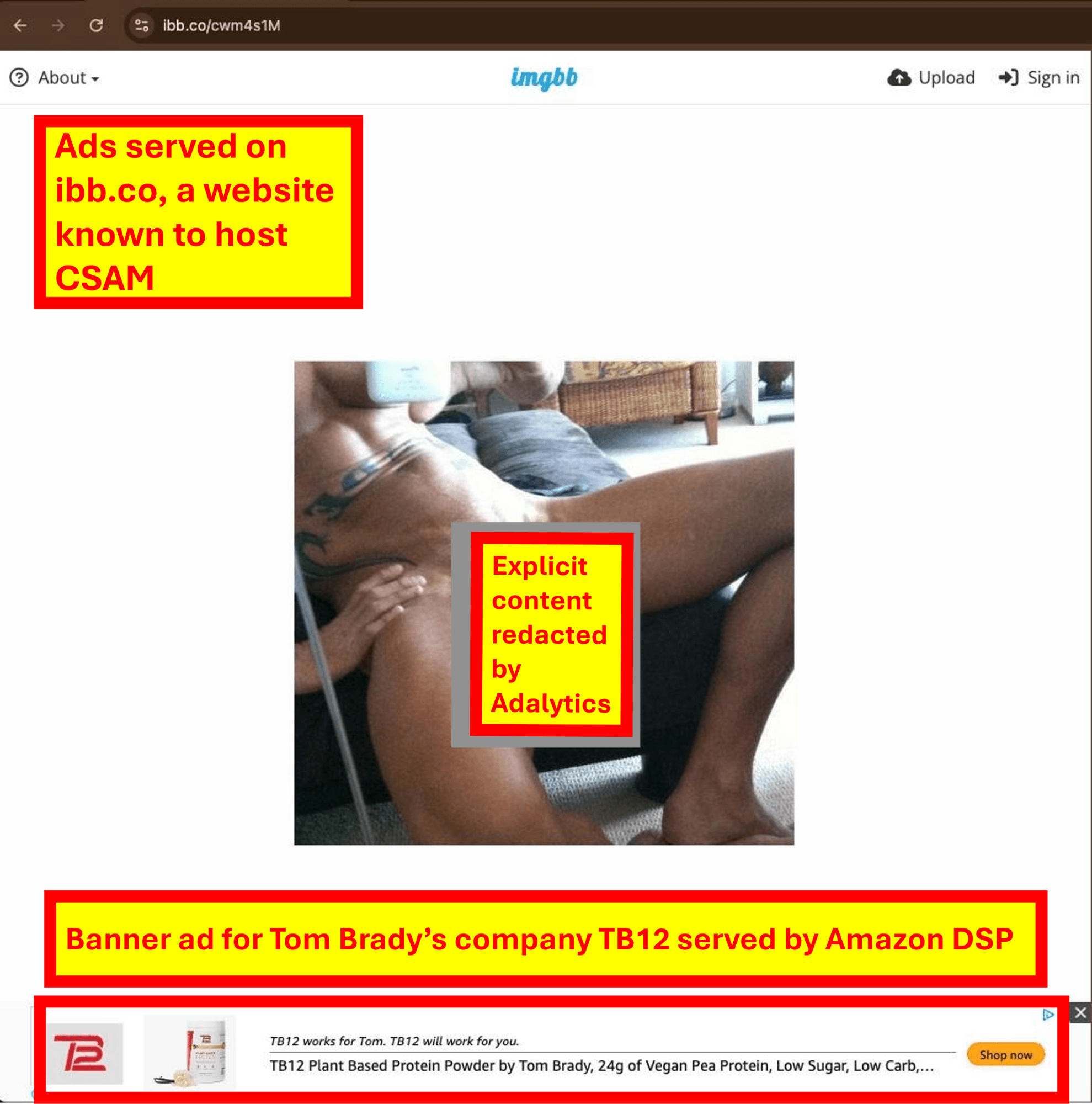

Screenshot of a Tom Brady owned TB12 ad on ibb.co, a website known to host child sexual abuse materials

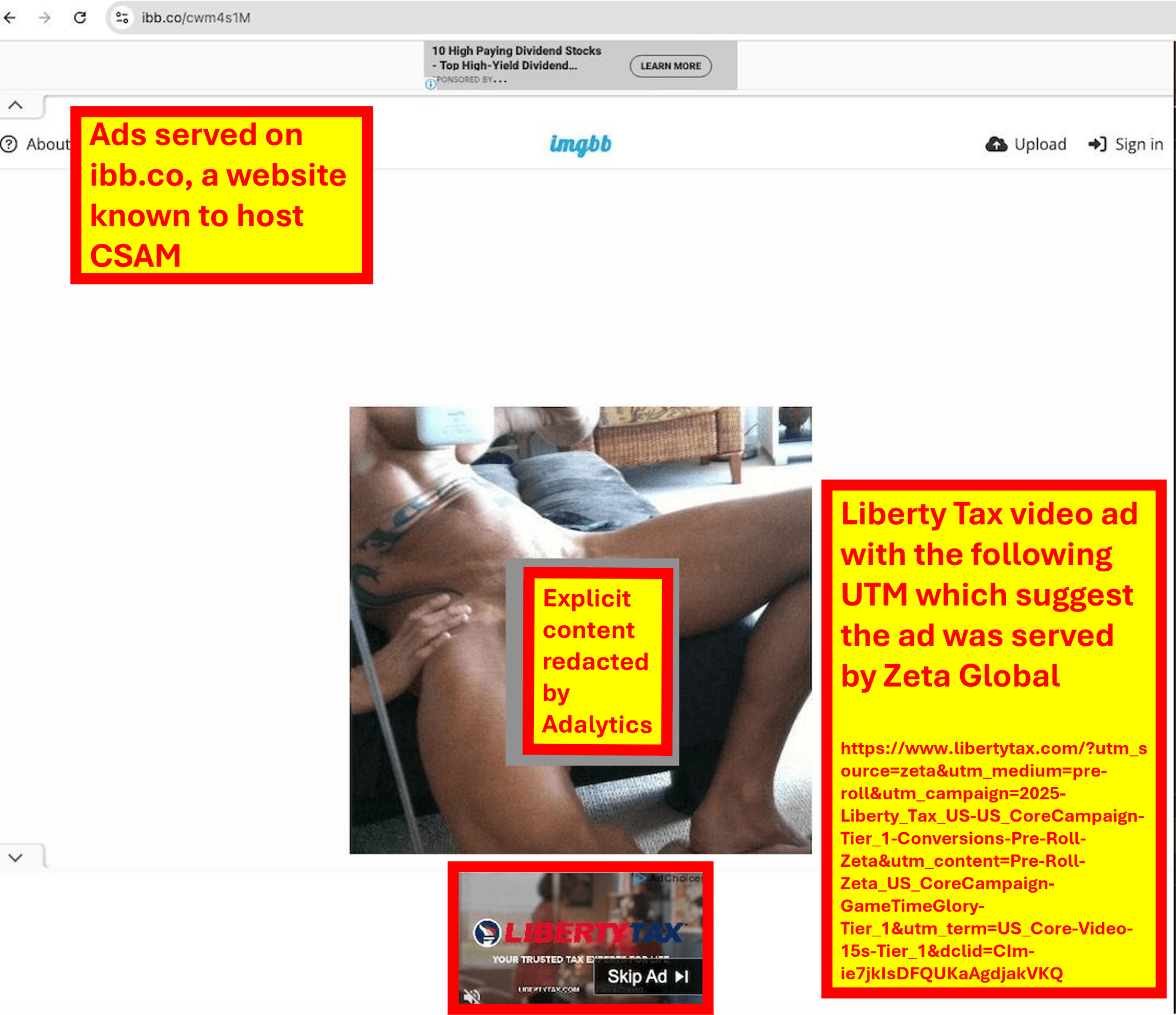

Screenshot of a Liberty Tax ad served by Zeta Global on ibb.co, a website known to host child sexual abuse materials

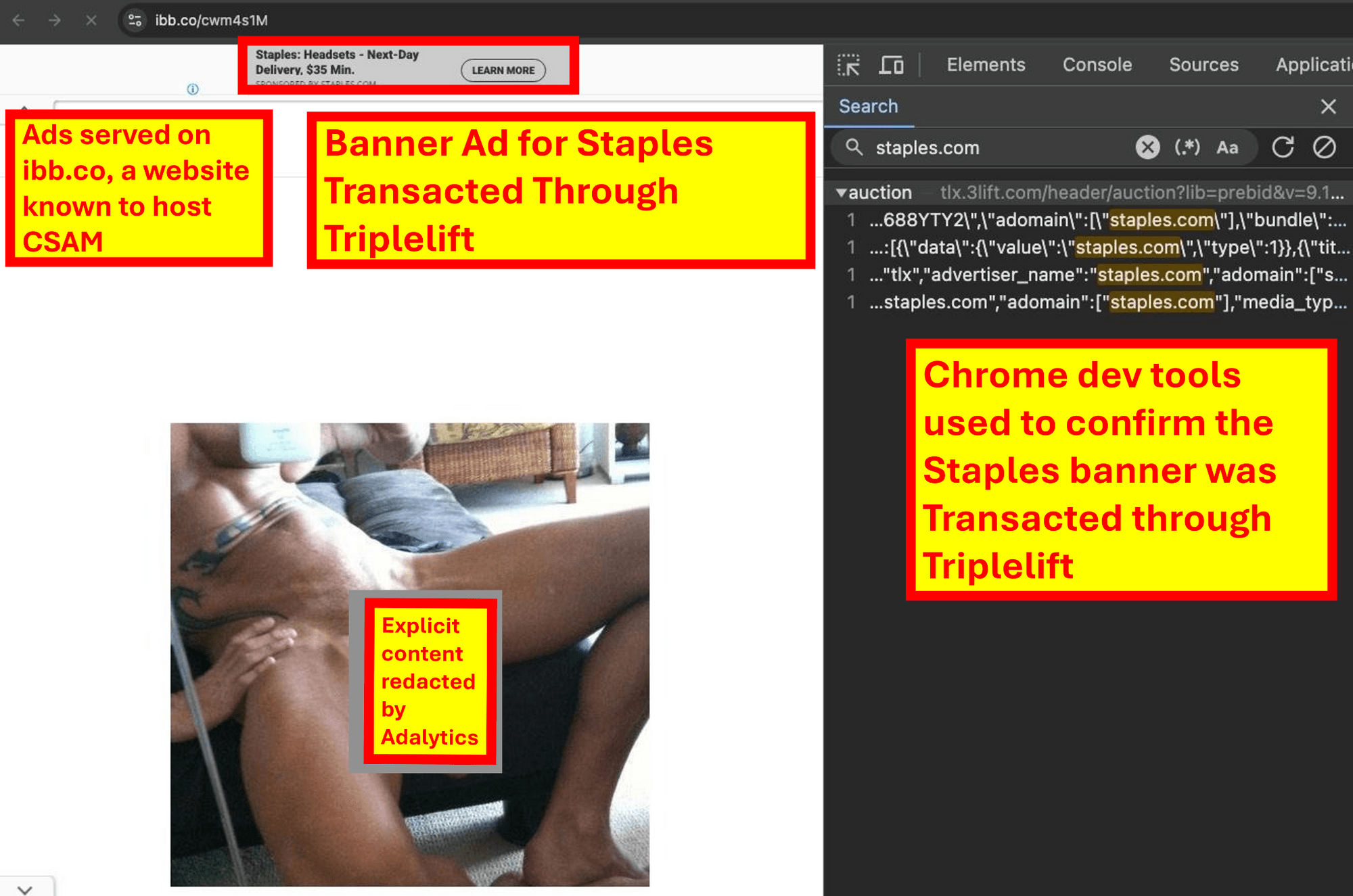

Screenshot of a Staples ad transacted through Triplelift on ibb.co, a website known to host child sexual abuse materials

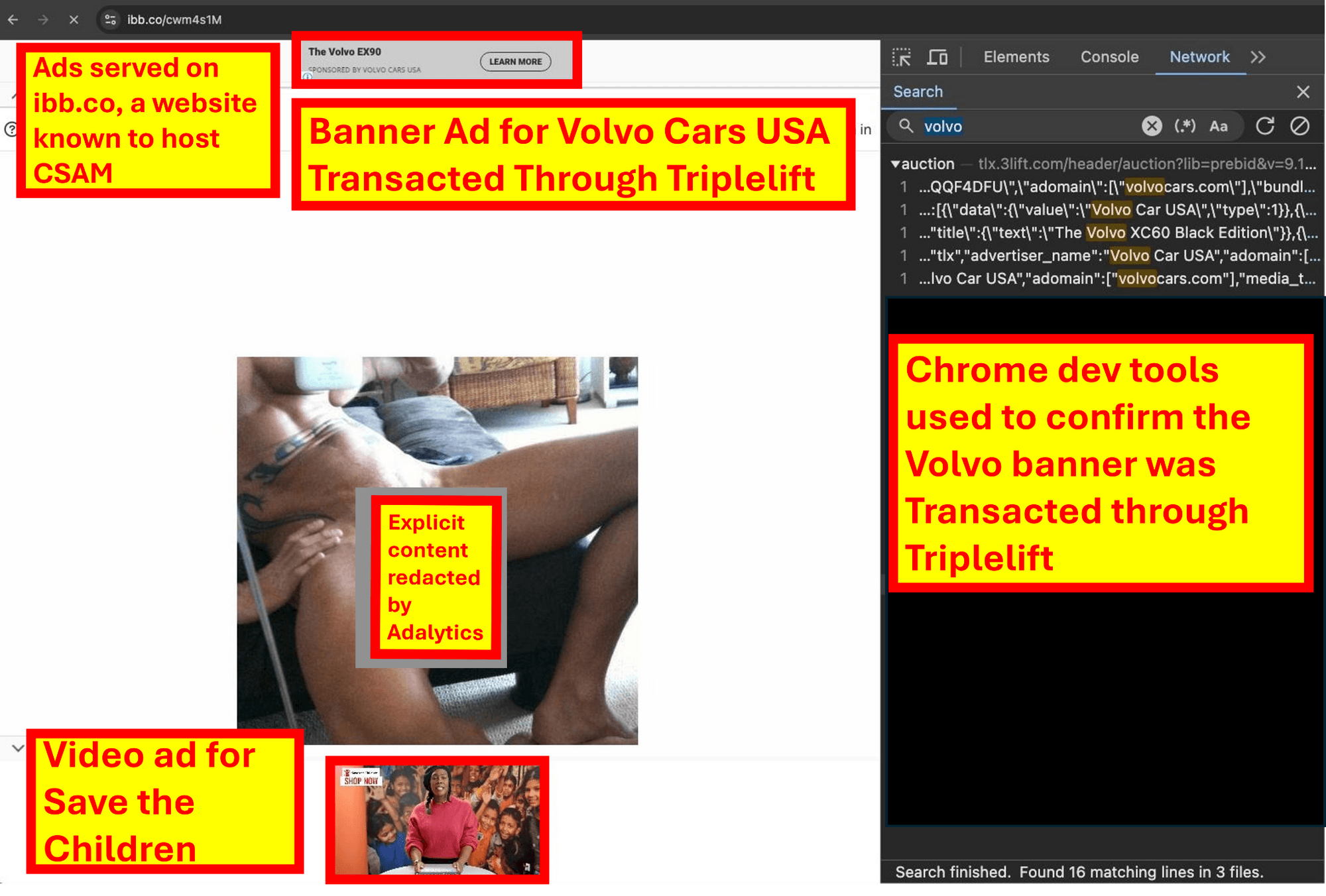

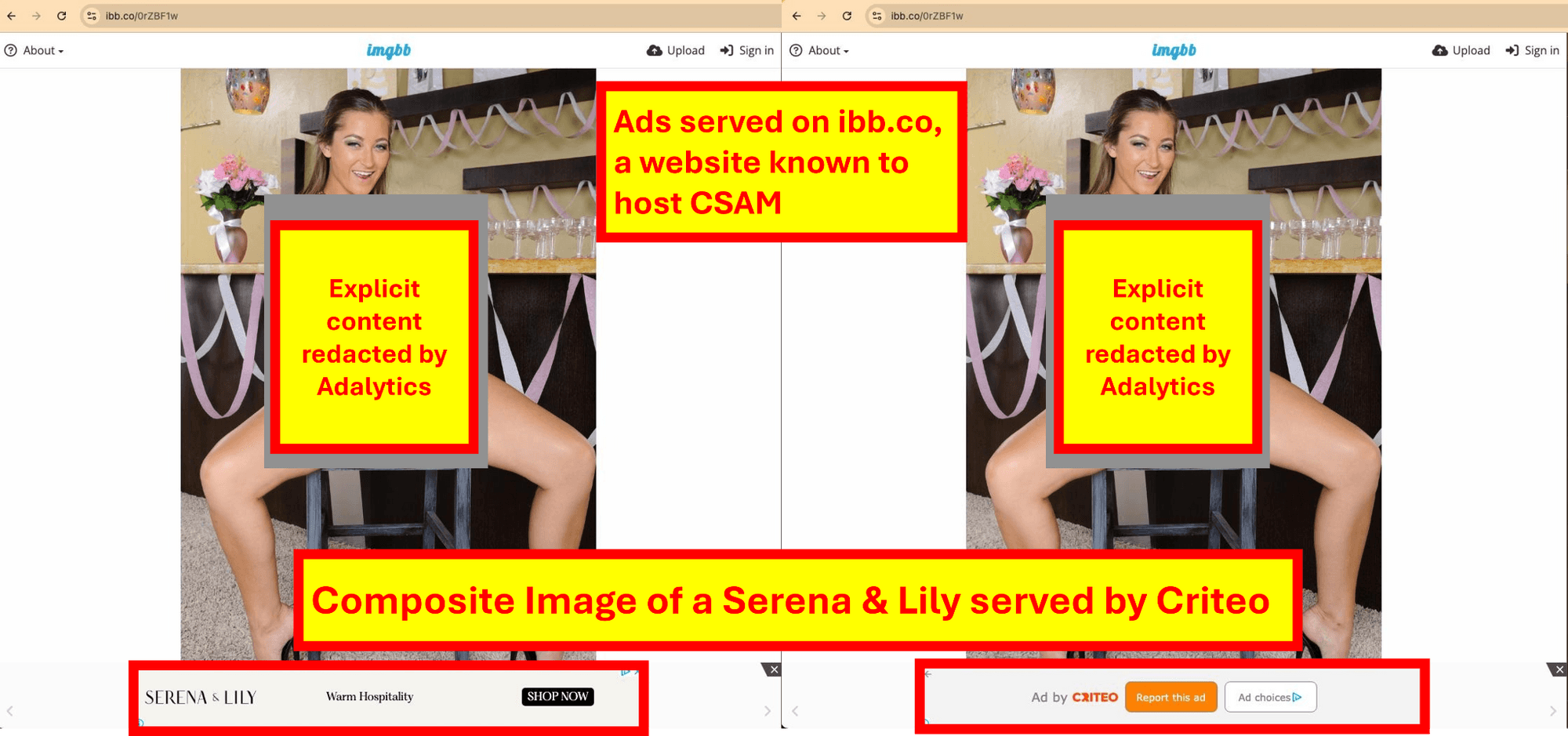

Screenshot of a Volvo ad transacted through Triplelift & a Save the Children ad on ibb.co, a website known to host child sexual abuse materials

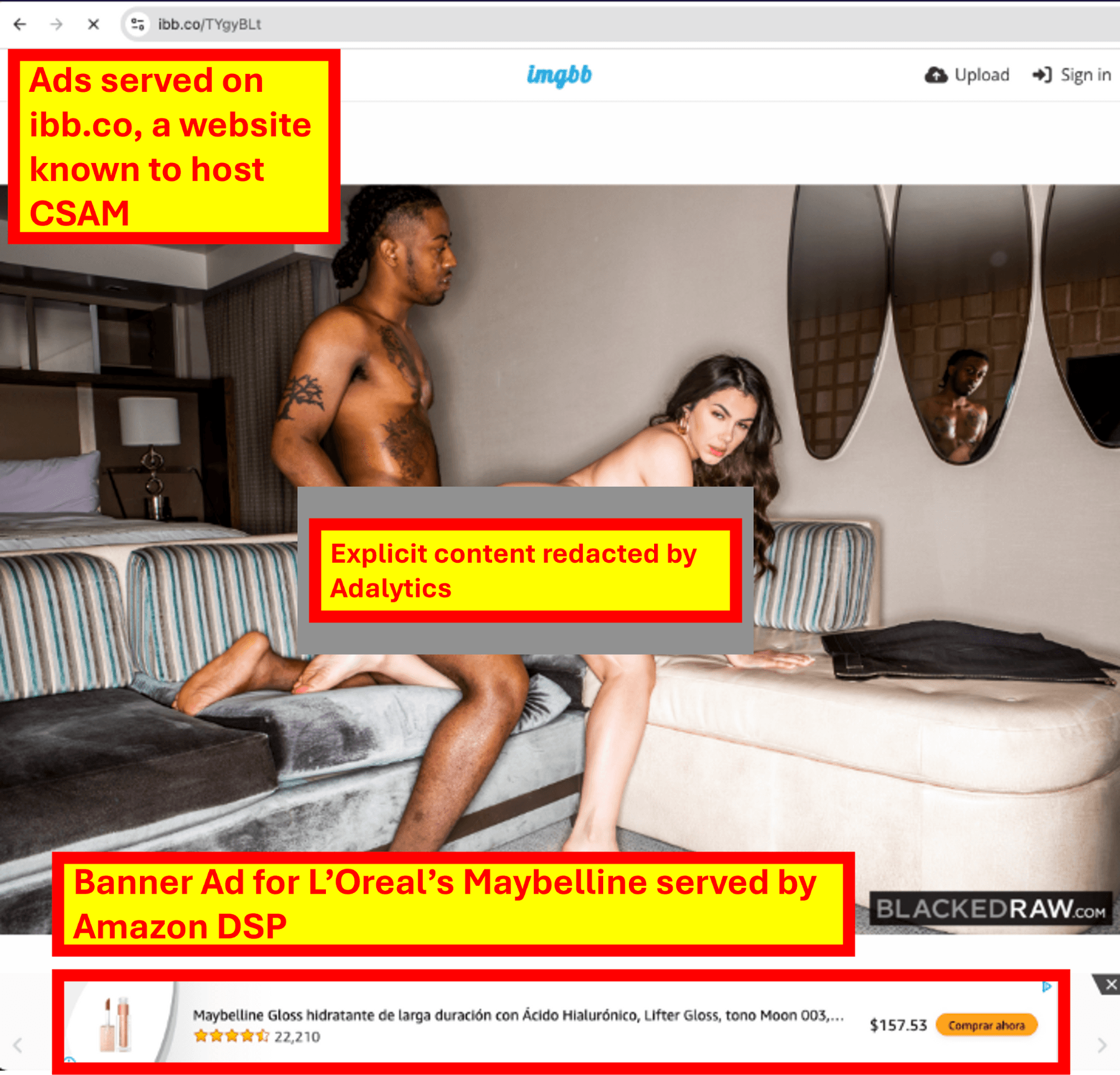

Screenshot of a L’Oreal owned Maybelline ad on ibb.co, a website known to host child sexual abuse materials

Screenshot of multiple Colgate owned Hill’s Pet Nutrition ads on ibb.co, a website known to host child sexual abuse materials

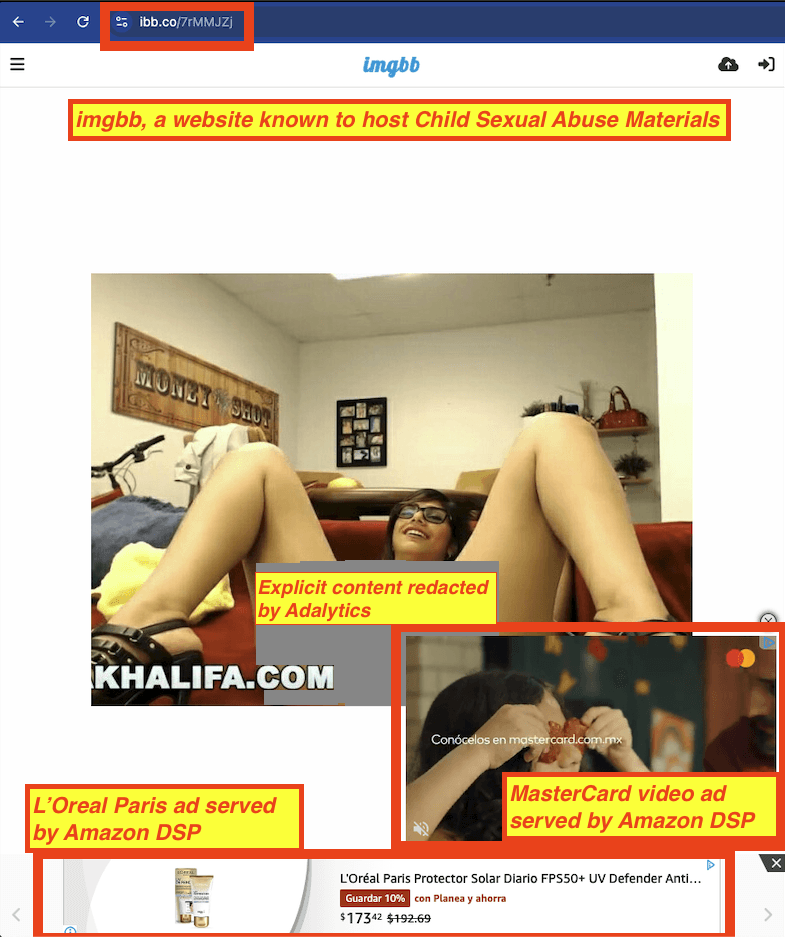

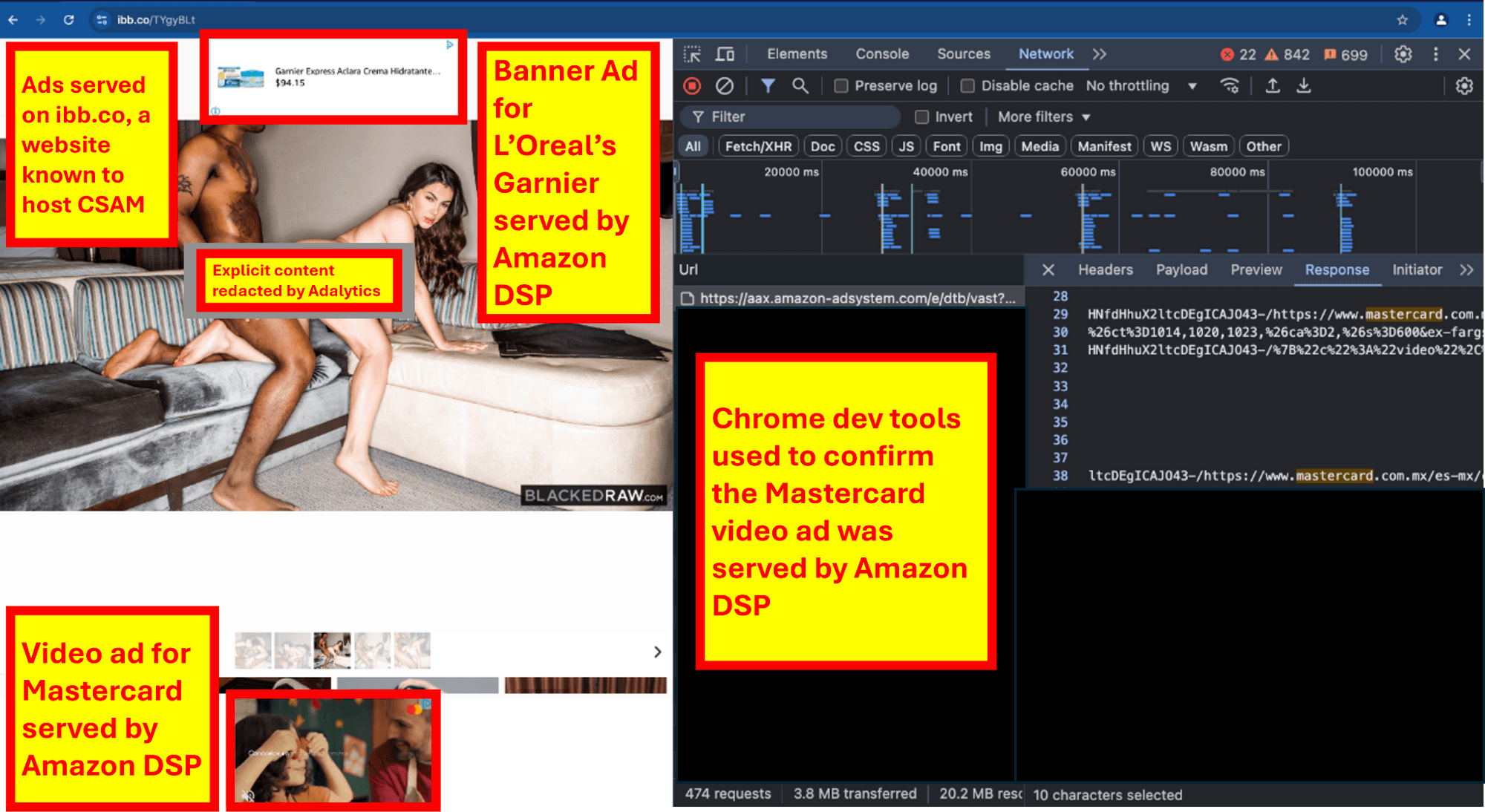

Screenshot of a L’Oreal owned Garnier ad & a Mastercard ad served by Amazon DSP on ibb.co, a website known to host child sexual abuse materials

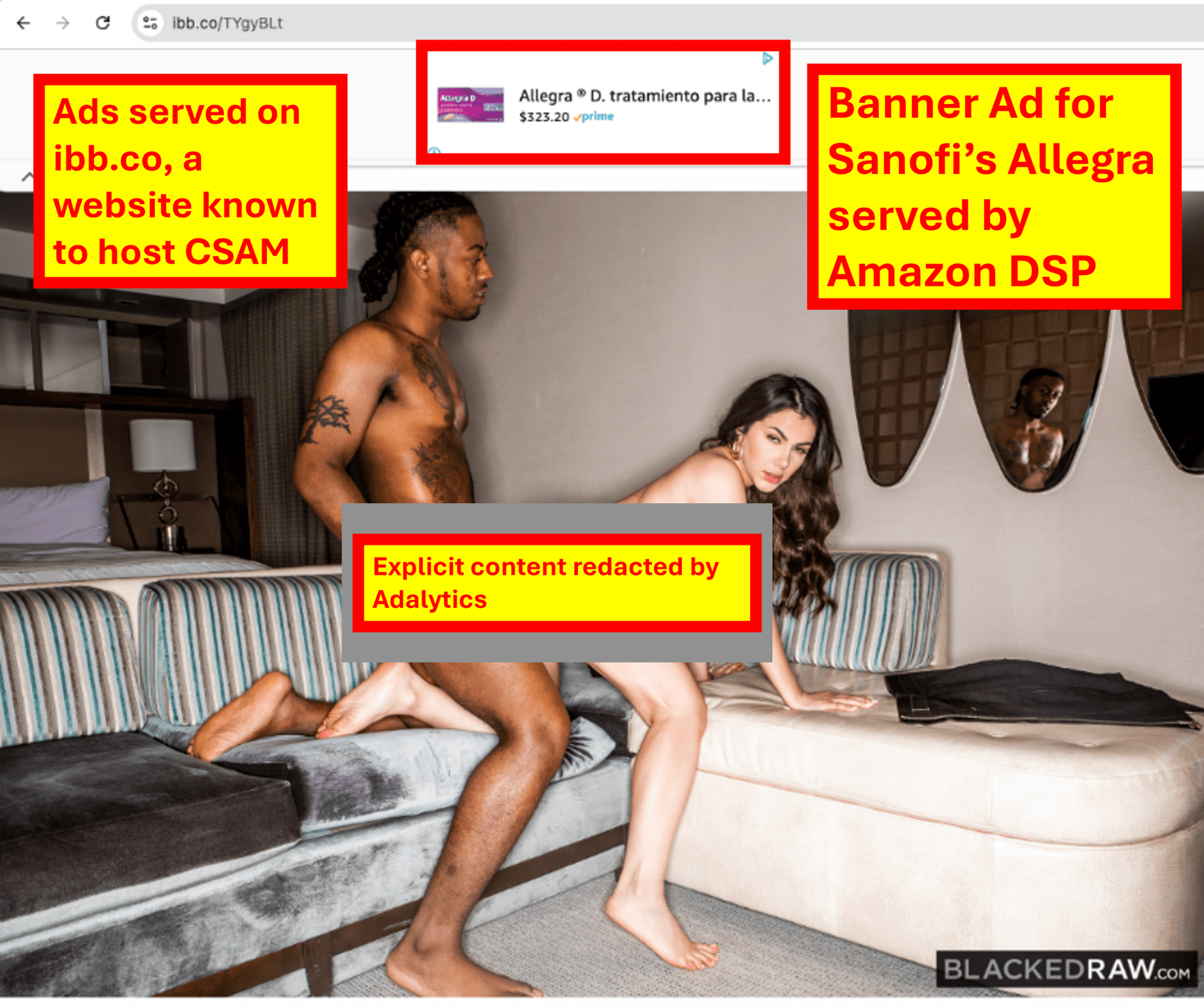

Screenshot of a Sanofi owned Allegra ad on ibb.co, a website known to host child sexual abuse materials

Screenshot of a L’Oreal ad & a Mastercard ad on ibb.co, a website known to host child sexual abuse materials

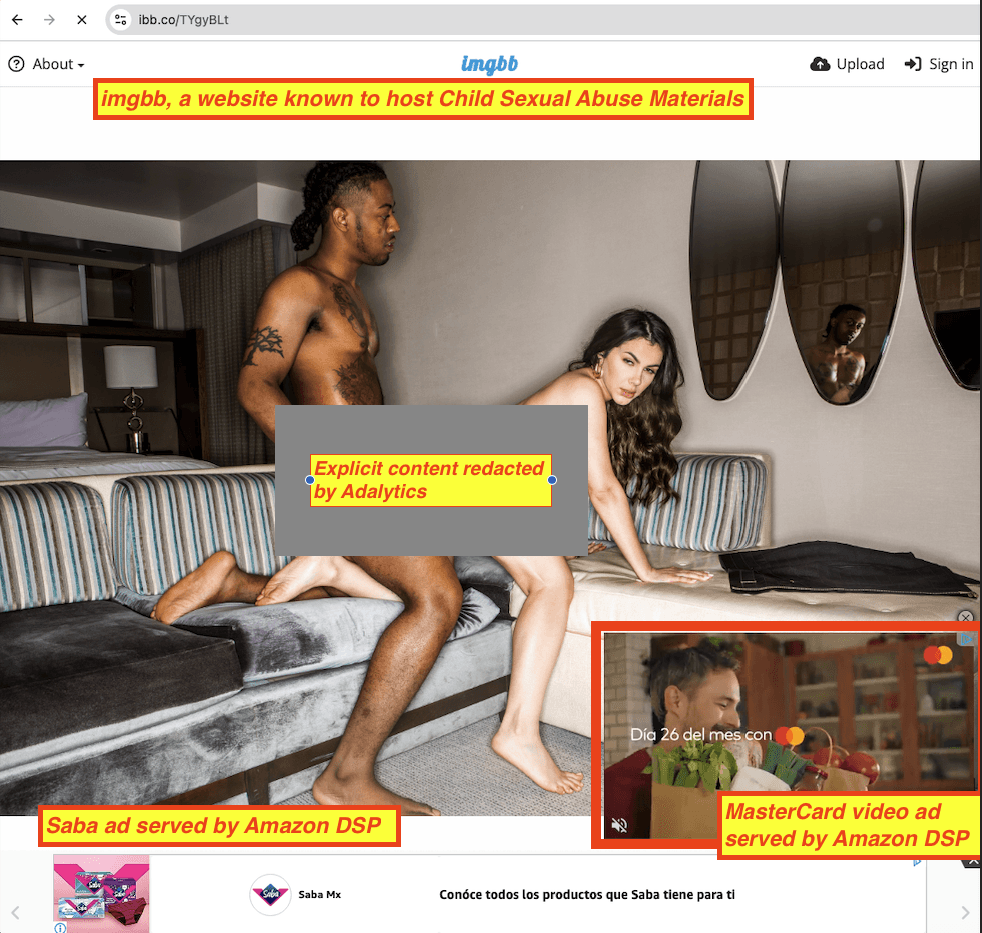

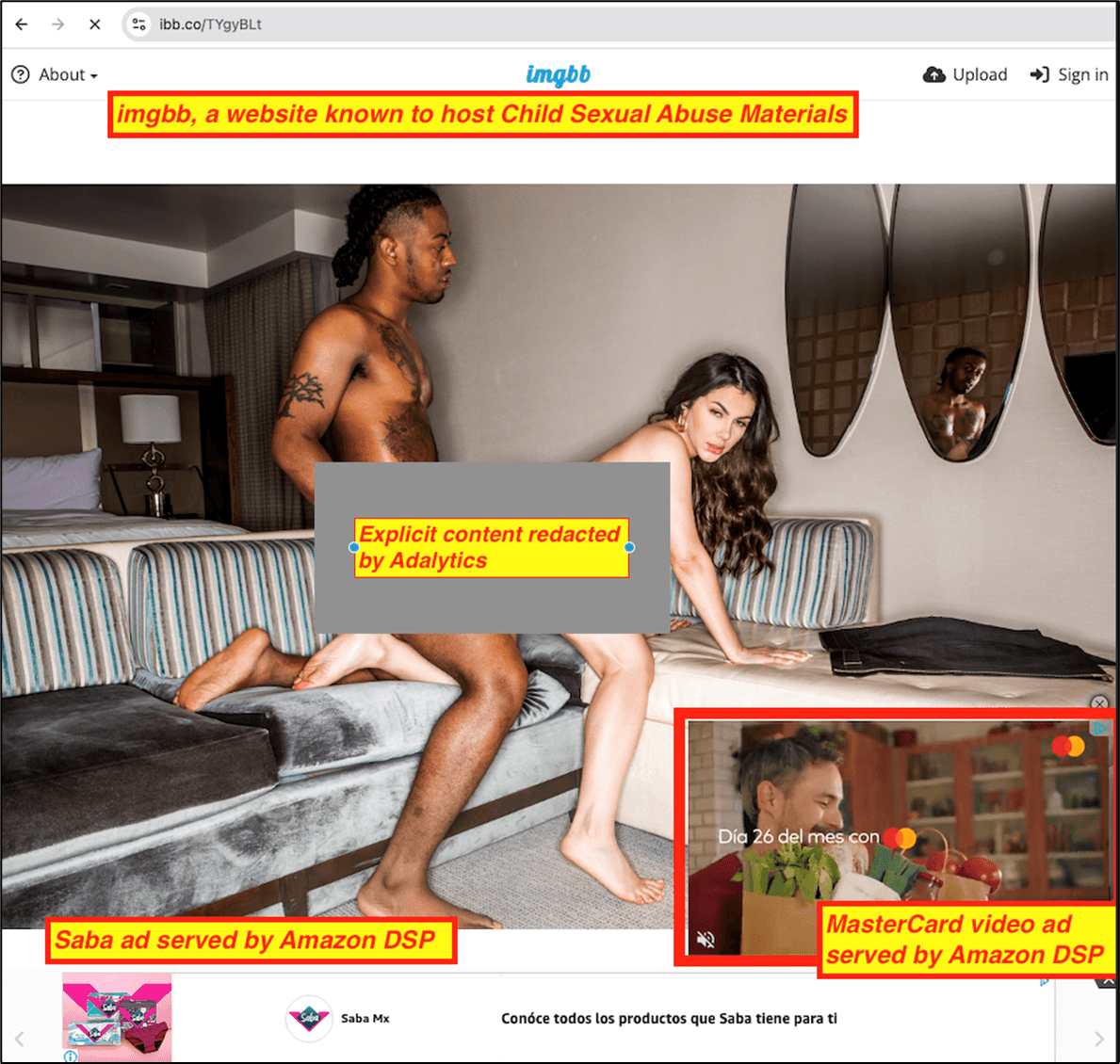

Screenshot of a Saba ad & a Mastercard ad on ibb.co, a website known to host child sexual abuse materials

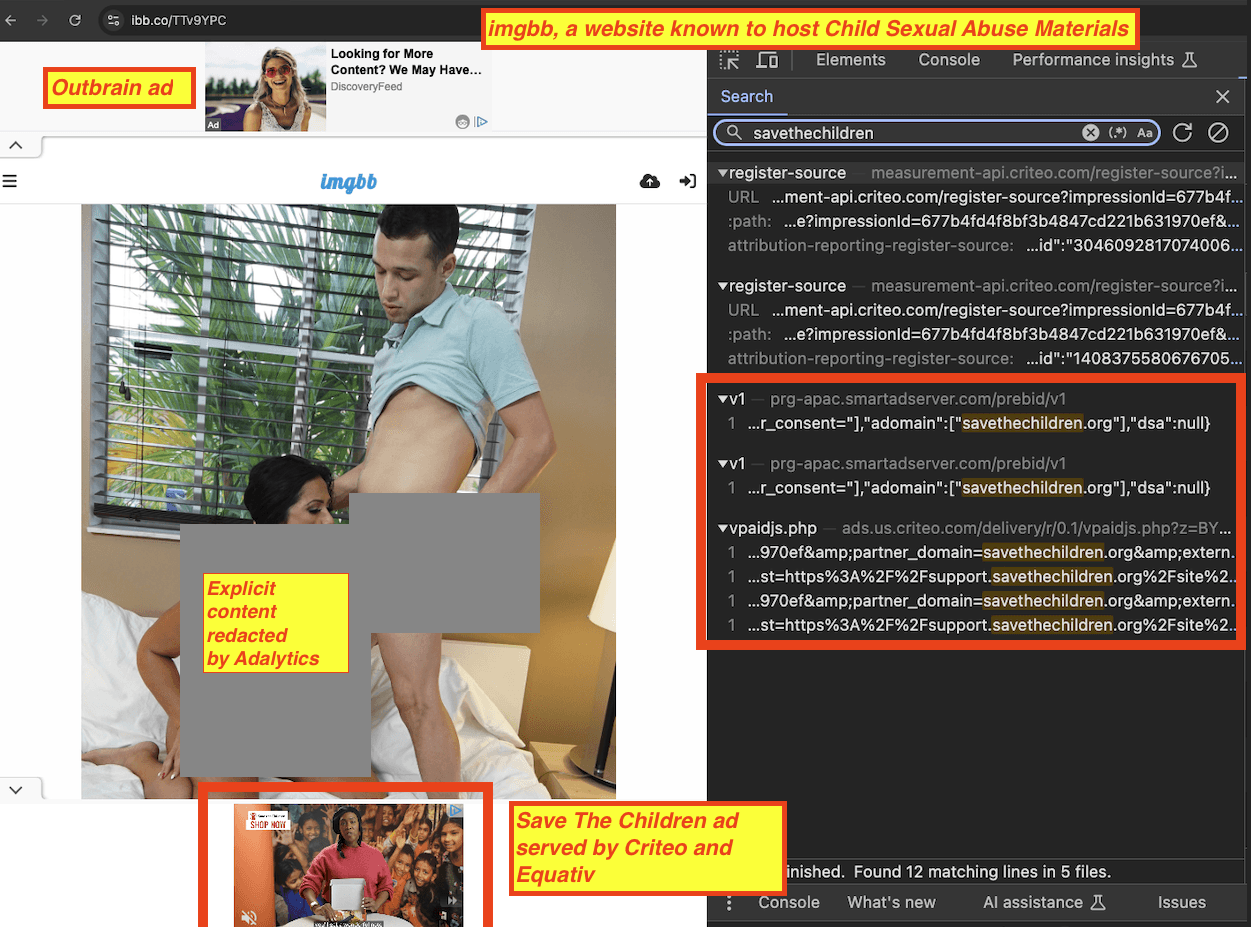

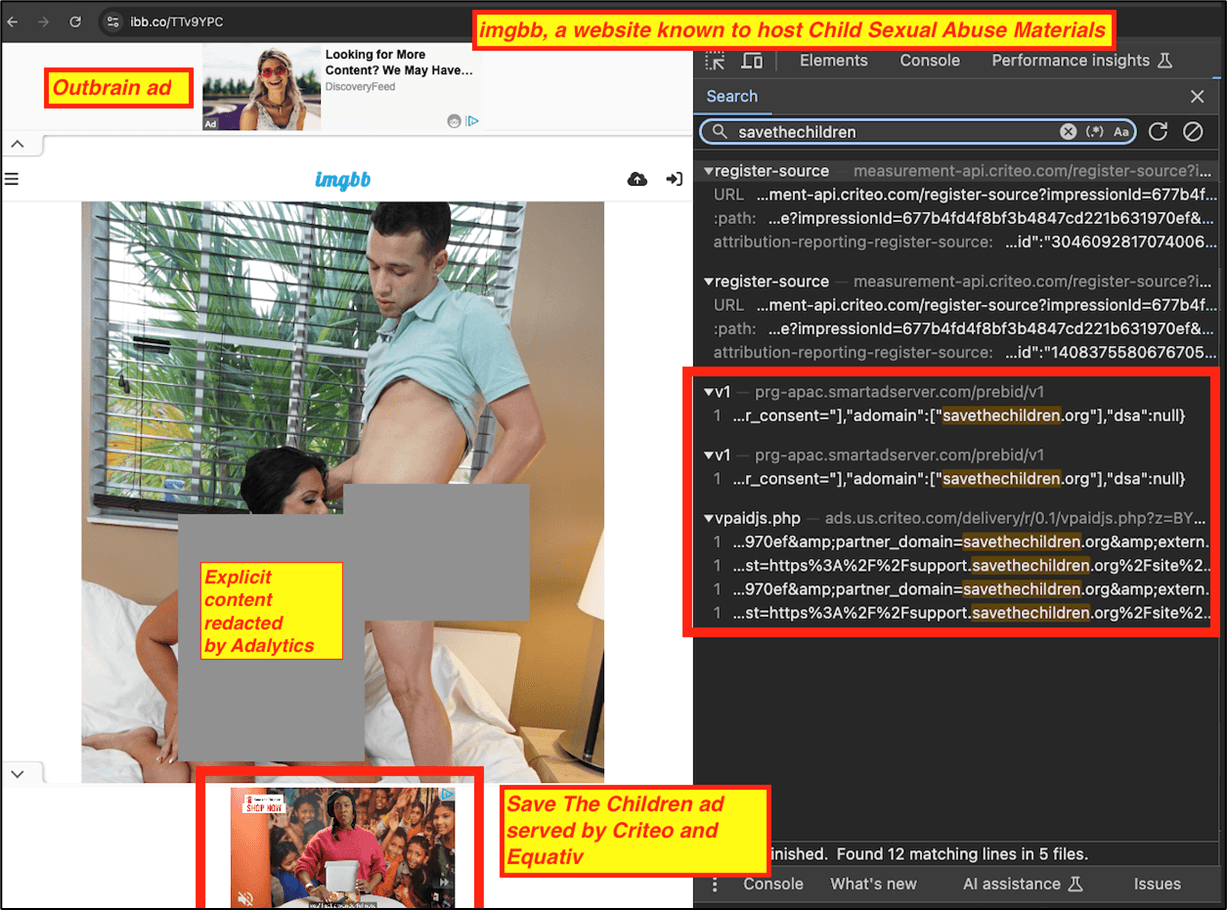

Screenshot of an Outbrain served ad & a Save the Children ad served by Criteo through Equativ on ibb.co, a website known to host child sexual abuse materials

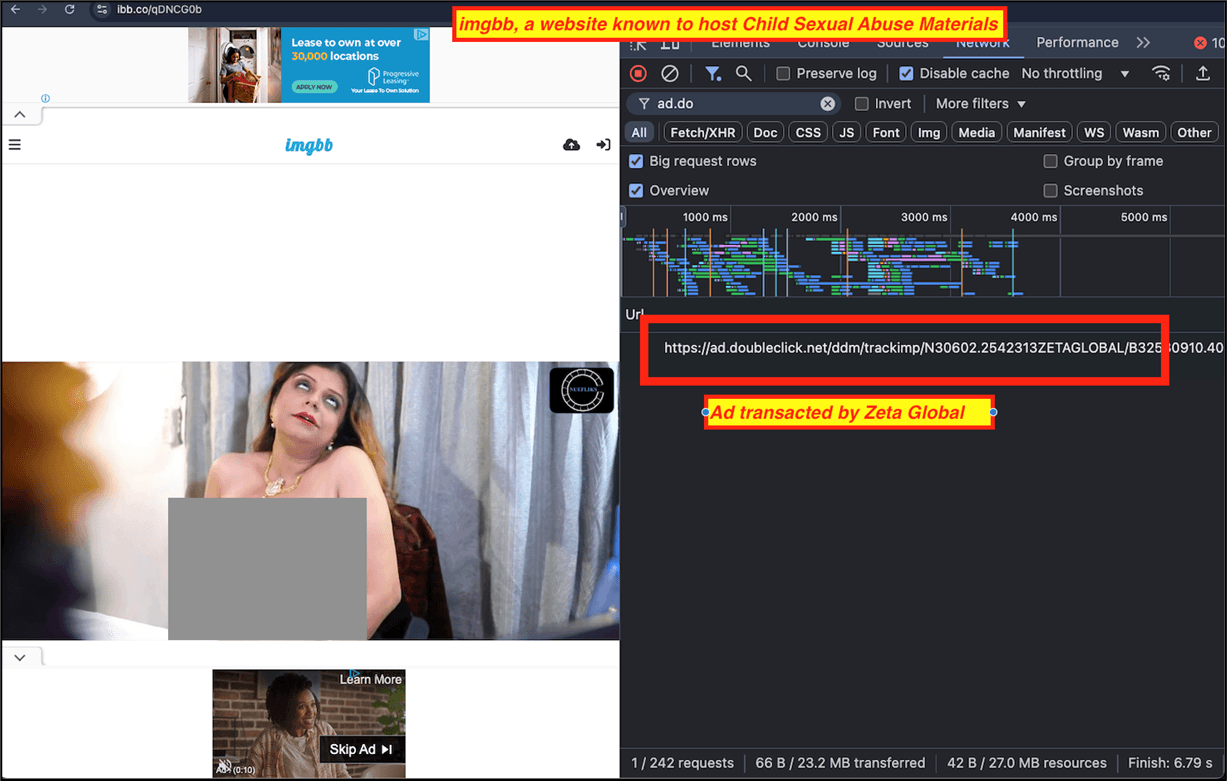

Screenshot of a Zeta Global served ad on ibb.co, a website known to host child sexual abuse materials

Screenshot of an HP ad served by Criteo & a Jet2 ad on ibb.co, a website known to host child sexual abuse materials

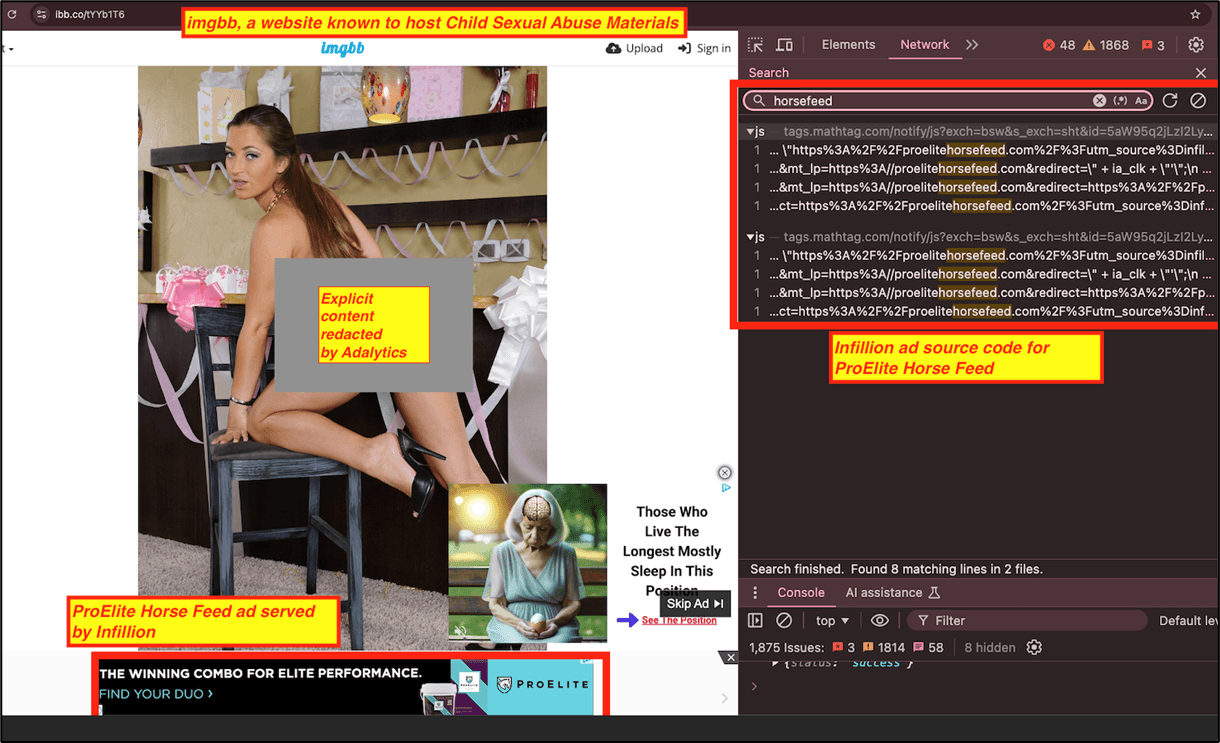

Screenshot of a ProElite Horse Feed ad served by Infillion on ibb.co, a website known to host child sexual abuse materials

Screenshot of a Save the Children ad served by Criteo on ibb.co, a website known to host child sexual abuse materials

Screenshot of a Thrive Market ad that was observed with DoubleVerify code through Chrome Dev tools on ibb.co, a website known to host child sexual abuse materials

Screenshot of an Intuit owned Mailchimp ad & a Thrive Market ad on ibb.co, a website known to host child sexual abuse materials

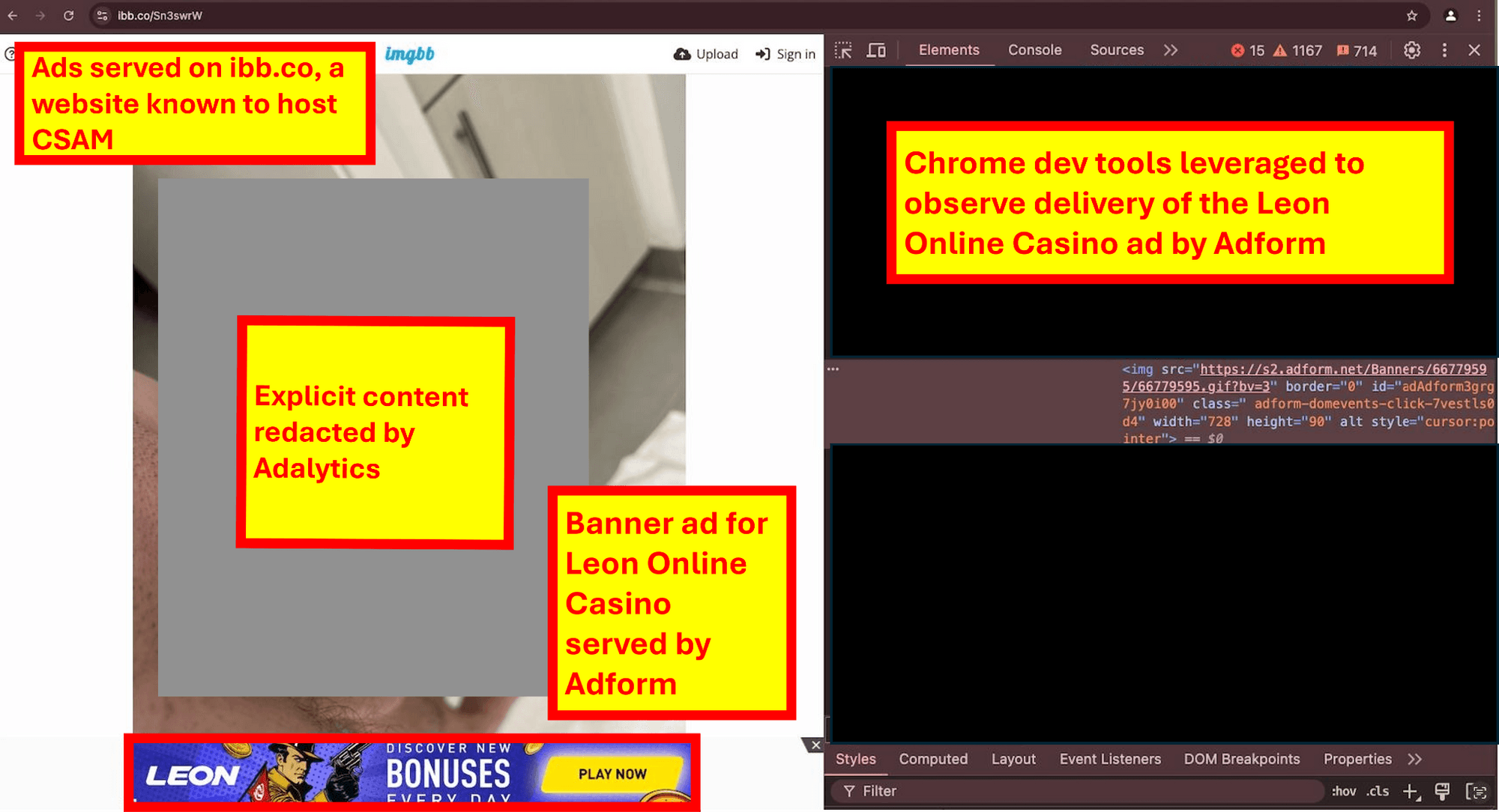

Screenshot of a Leon Online Casino ad served by Adform on ibb.co, a website known to host child sexual abuse materials

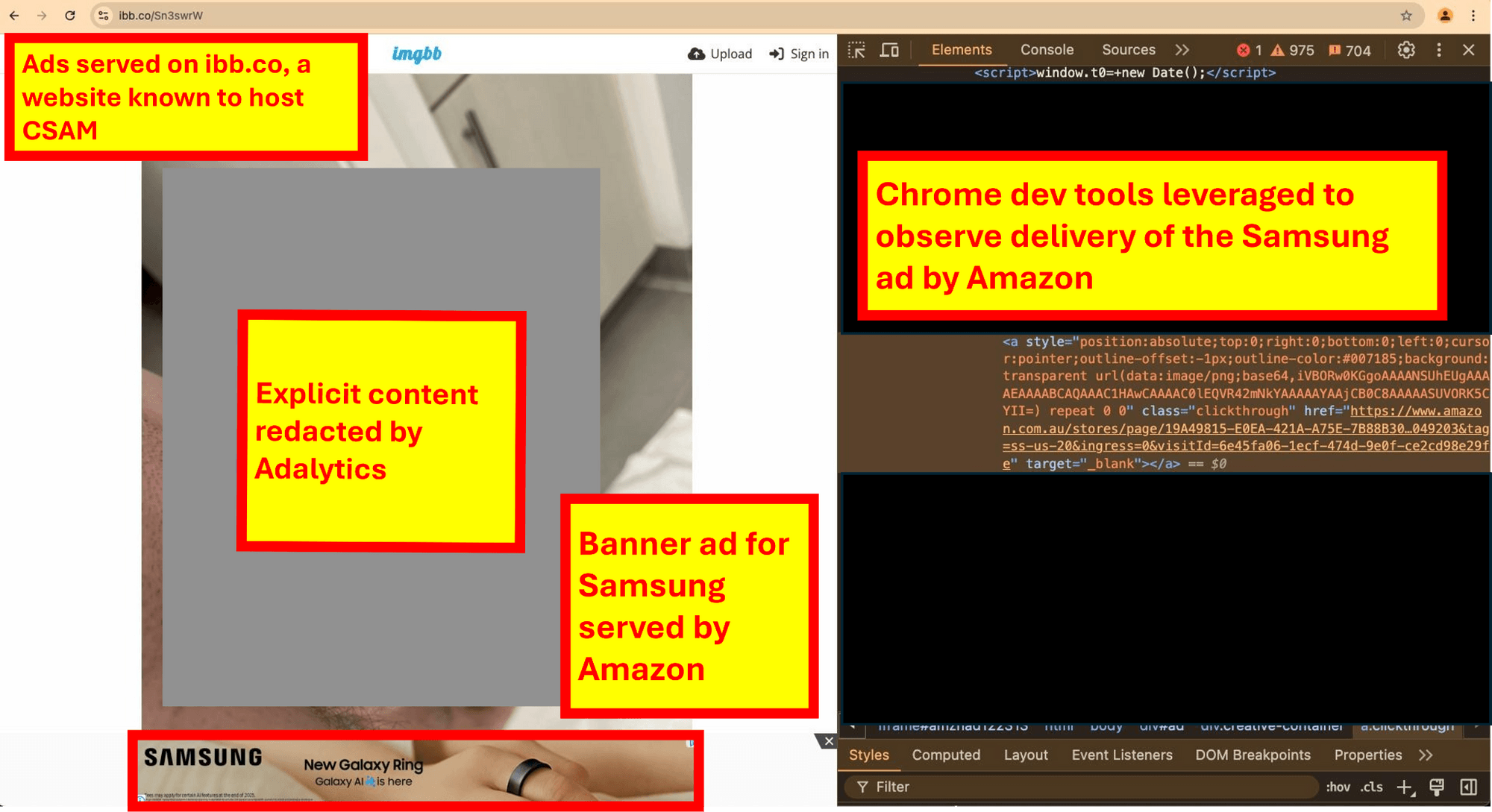

Screenshot of a Samsung ad served by Amazon on ibb.co, a website known to host child sexual abuse materials

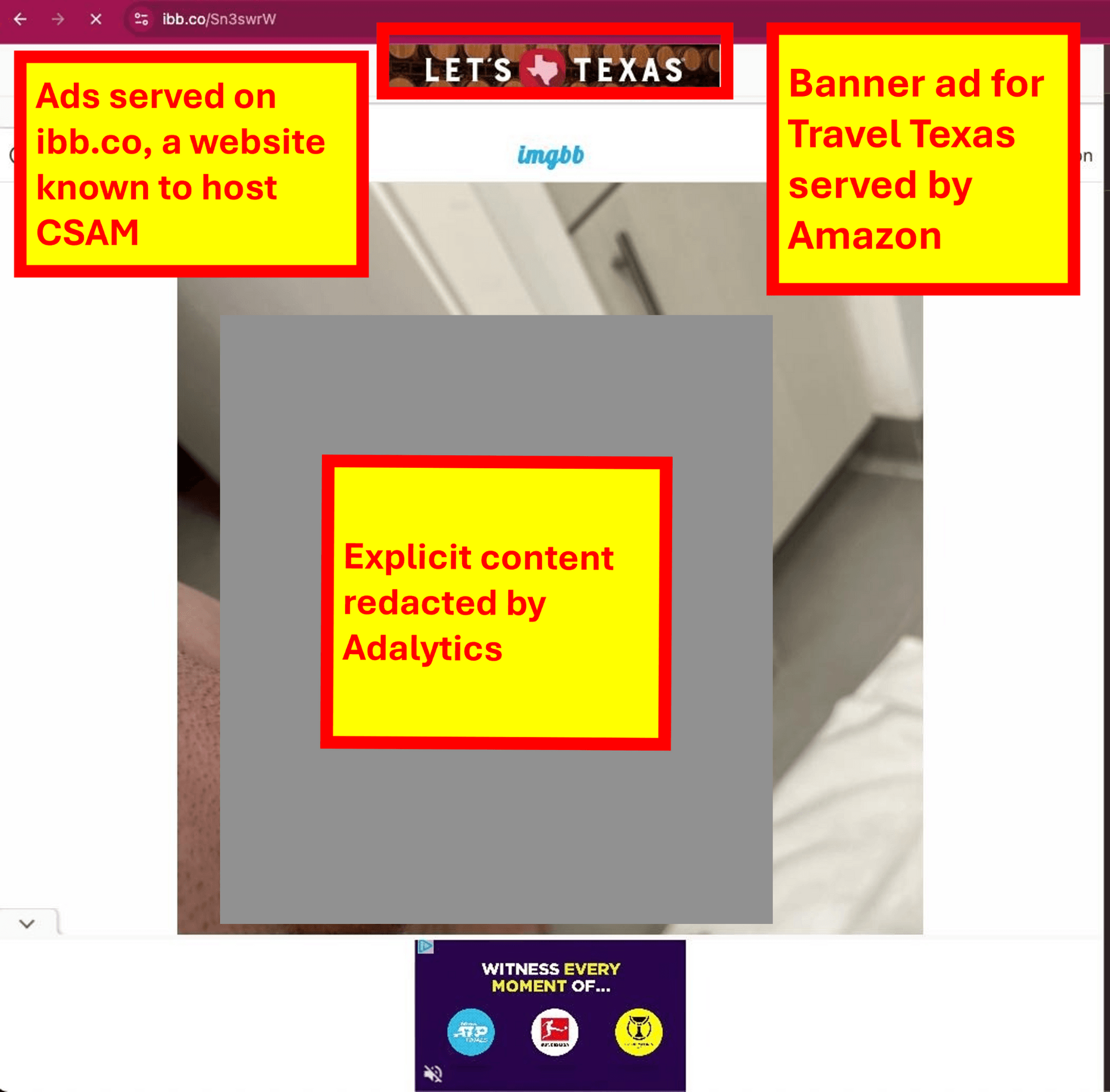

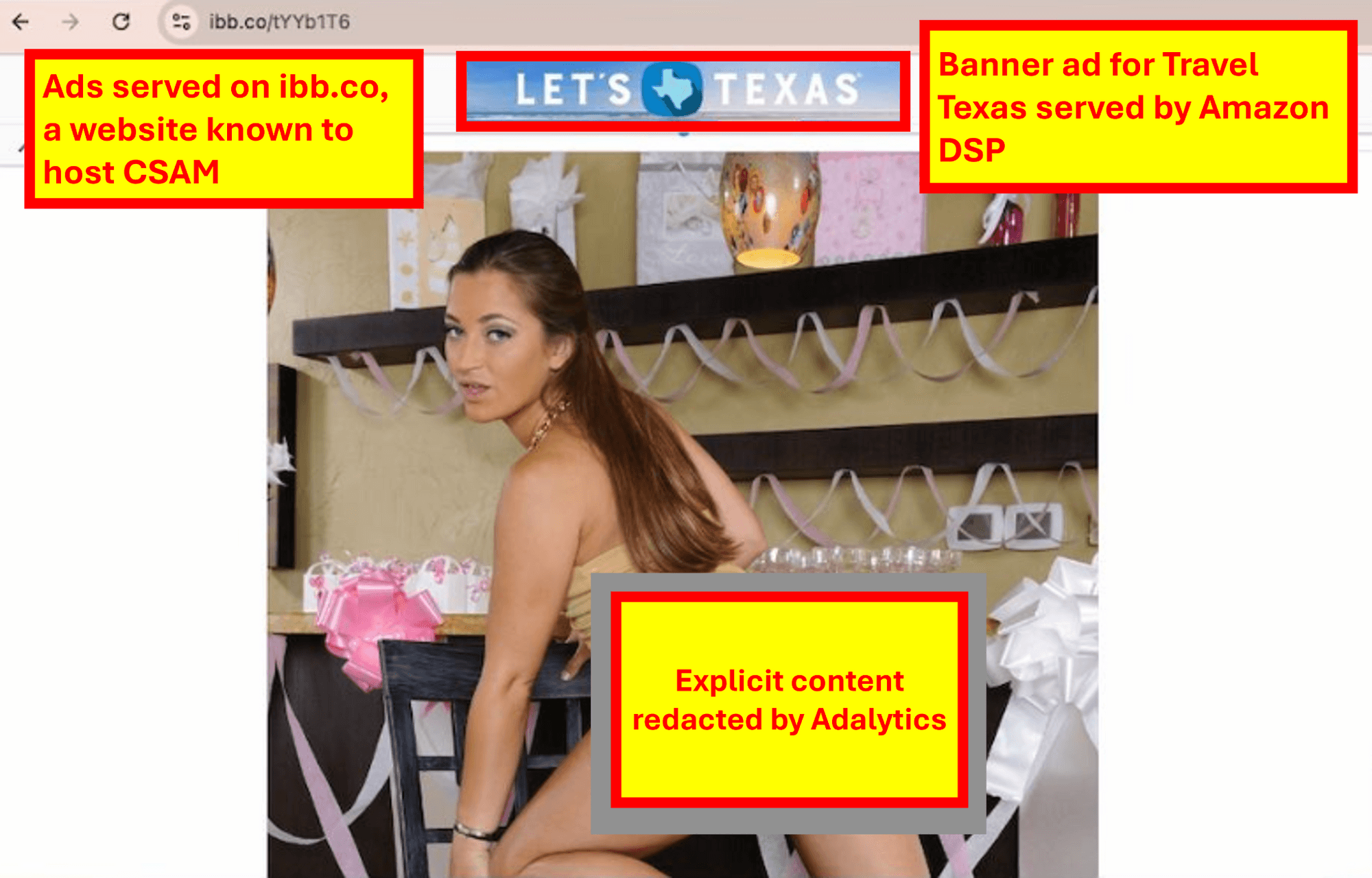

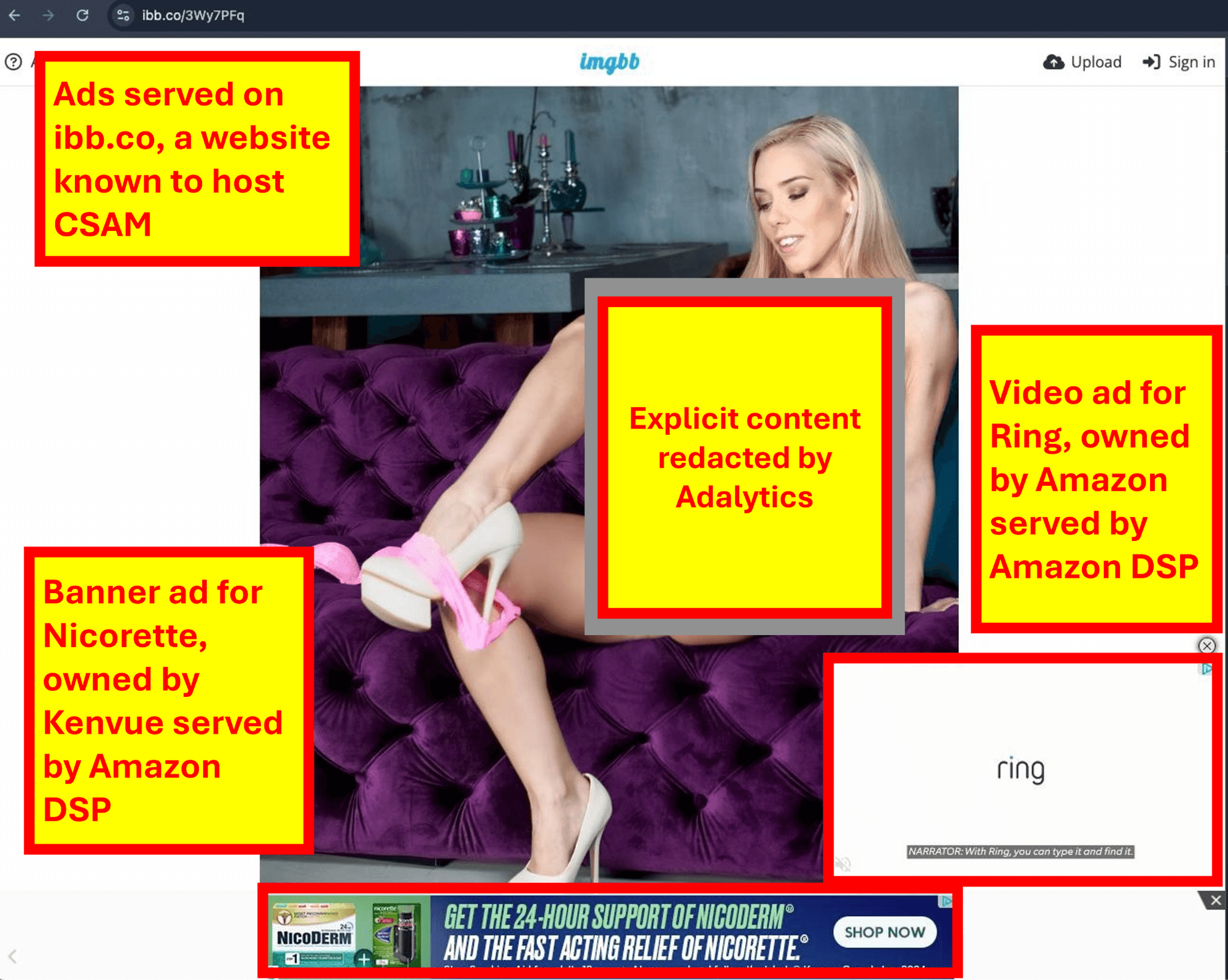

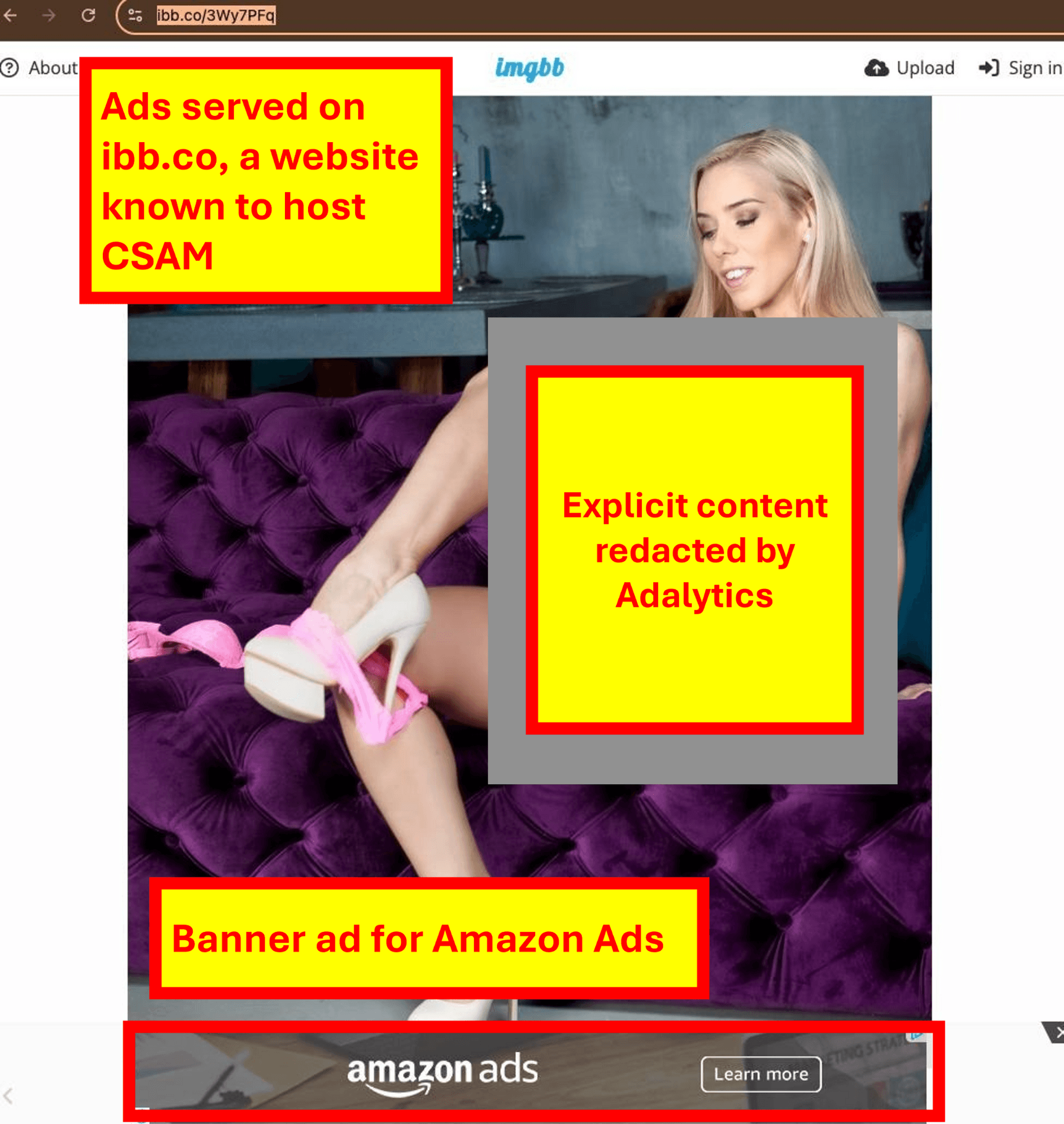

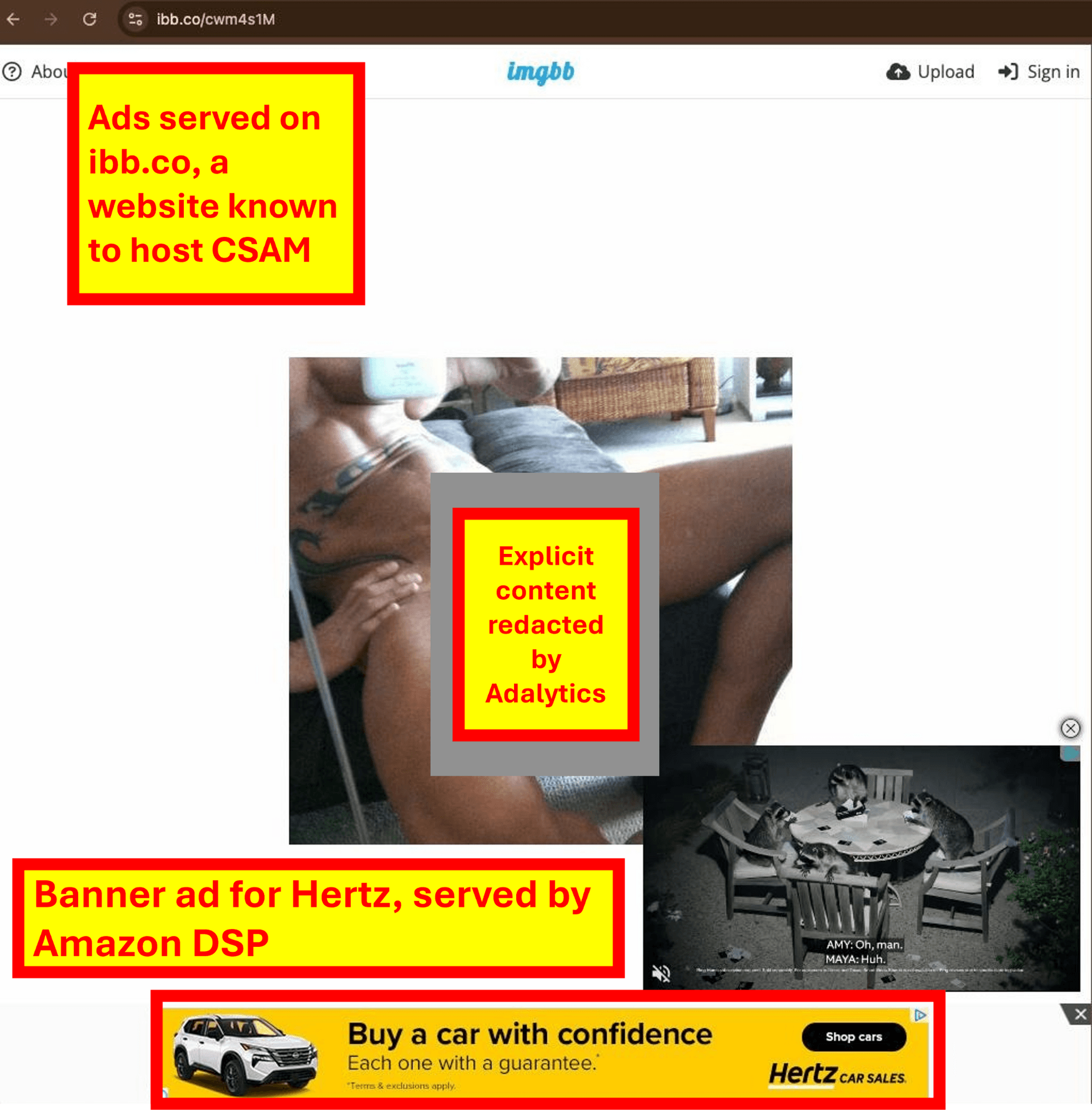

Screenshot of a Travel Texas ad served by Amazon on ibb.co, a website known to host child sexual abuse materials

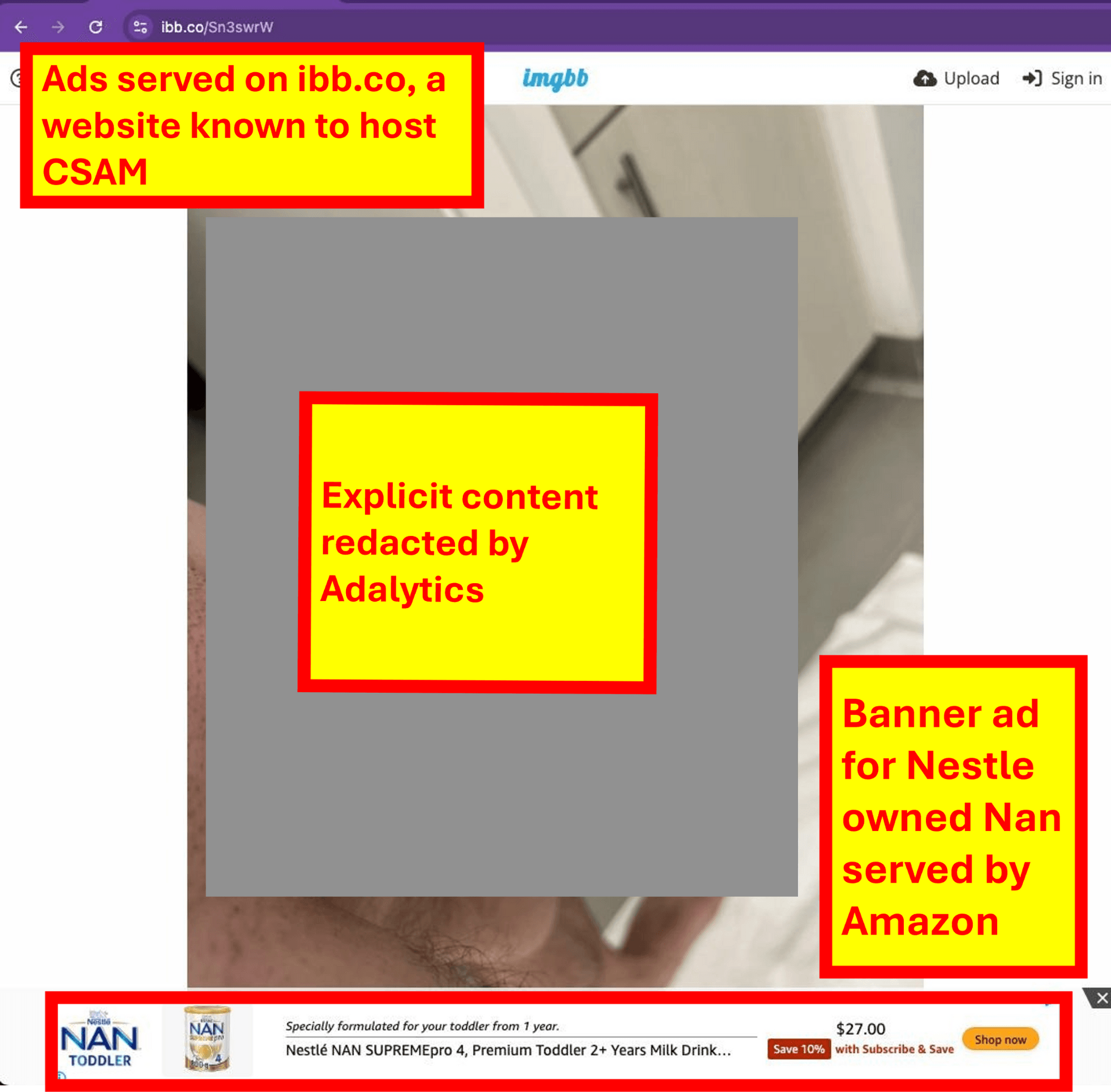

Screenshot of a Nestle owned Nan ad served by Amazon on ibb.co, a website known to host child sexual abuse materials

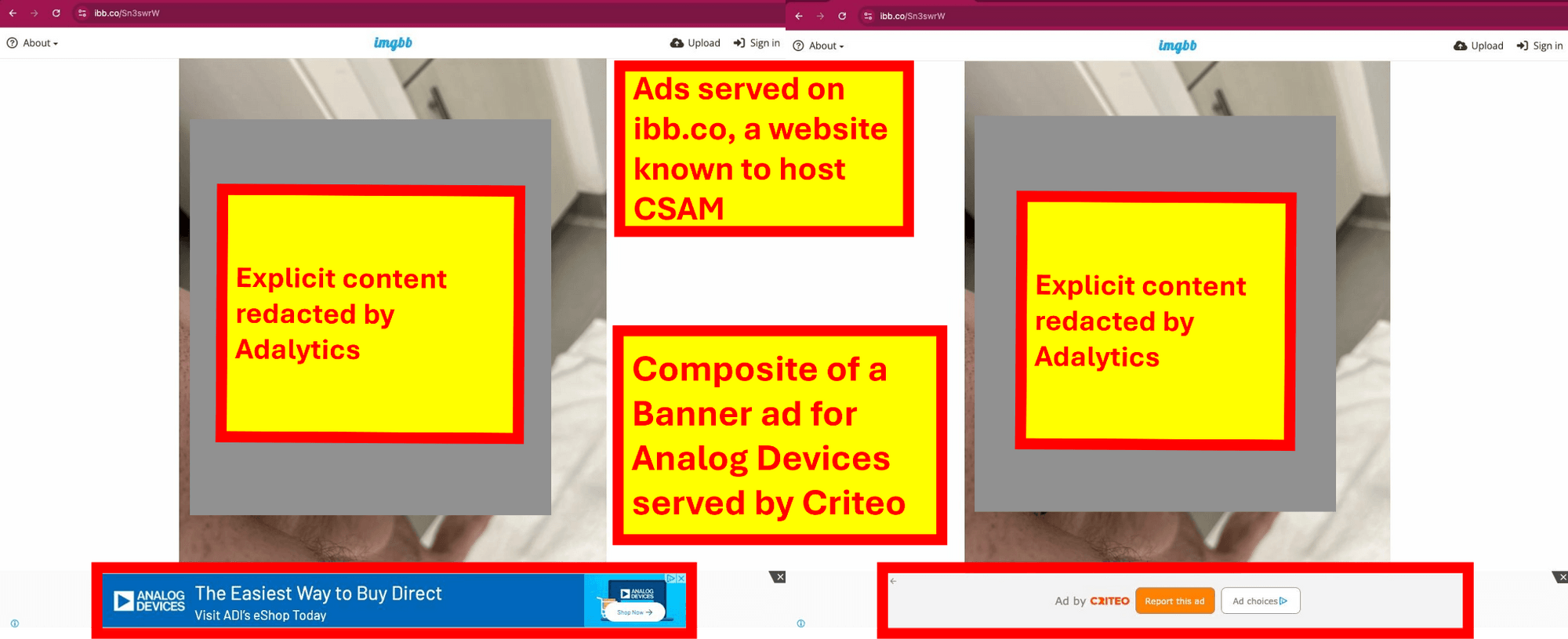

Composite screenshot of an Analog Devices ad served by Criteo on ibb.co, a website known to host child sexual abuse materials

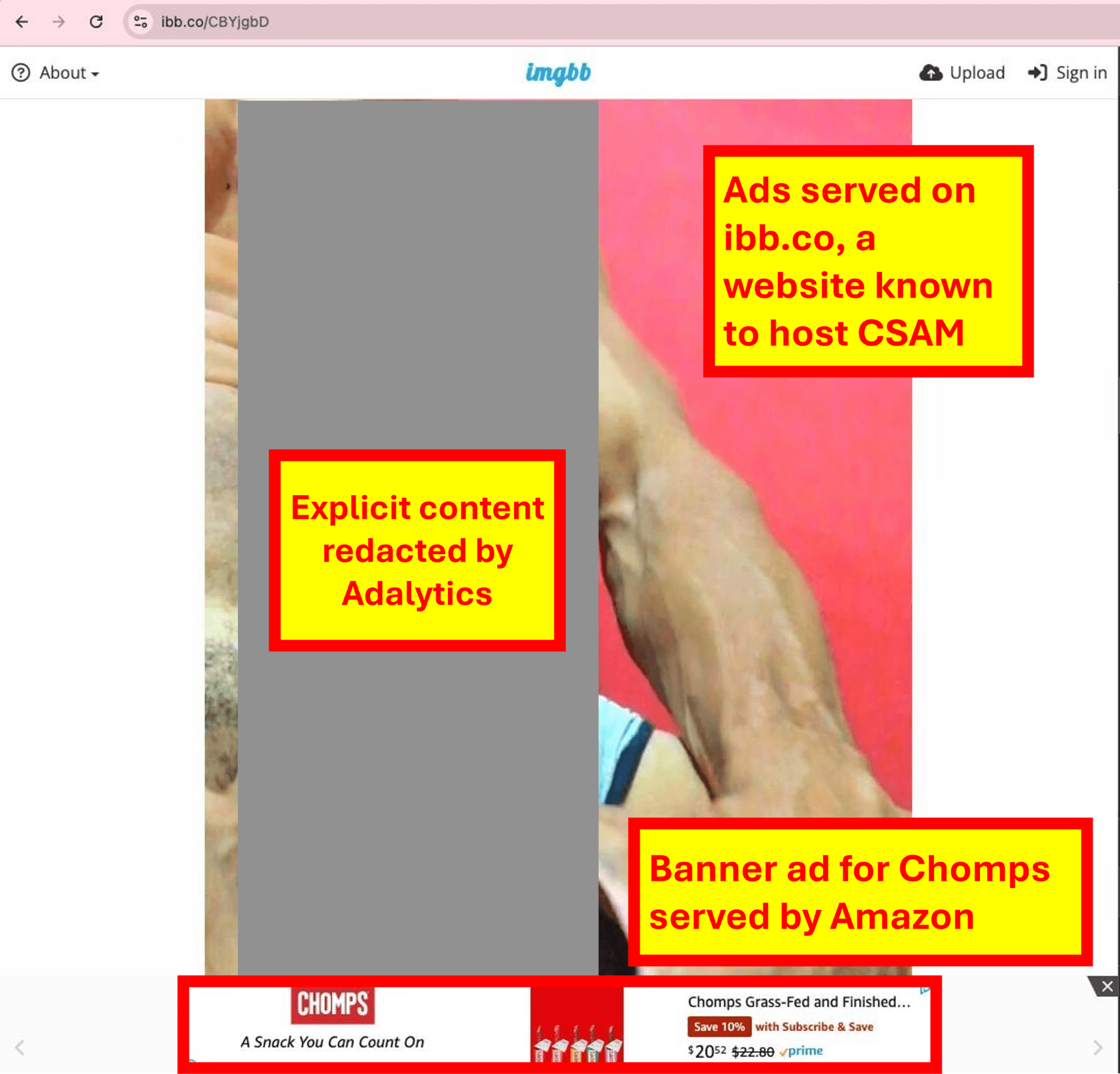

Screenshot of a Chomps ad served by Amazon on ibb.co, a website known to host child sexual abuse materials

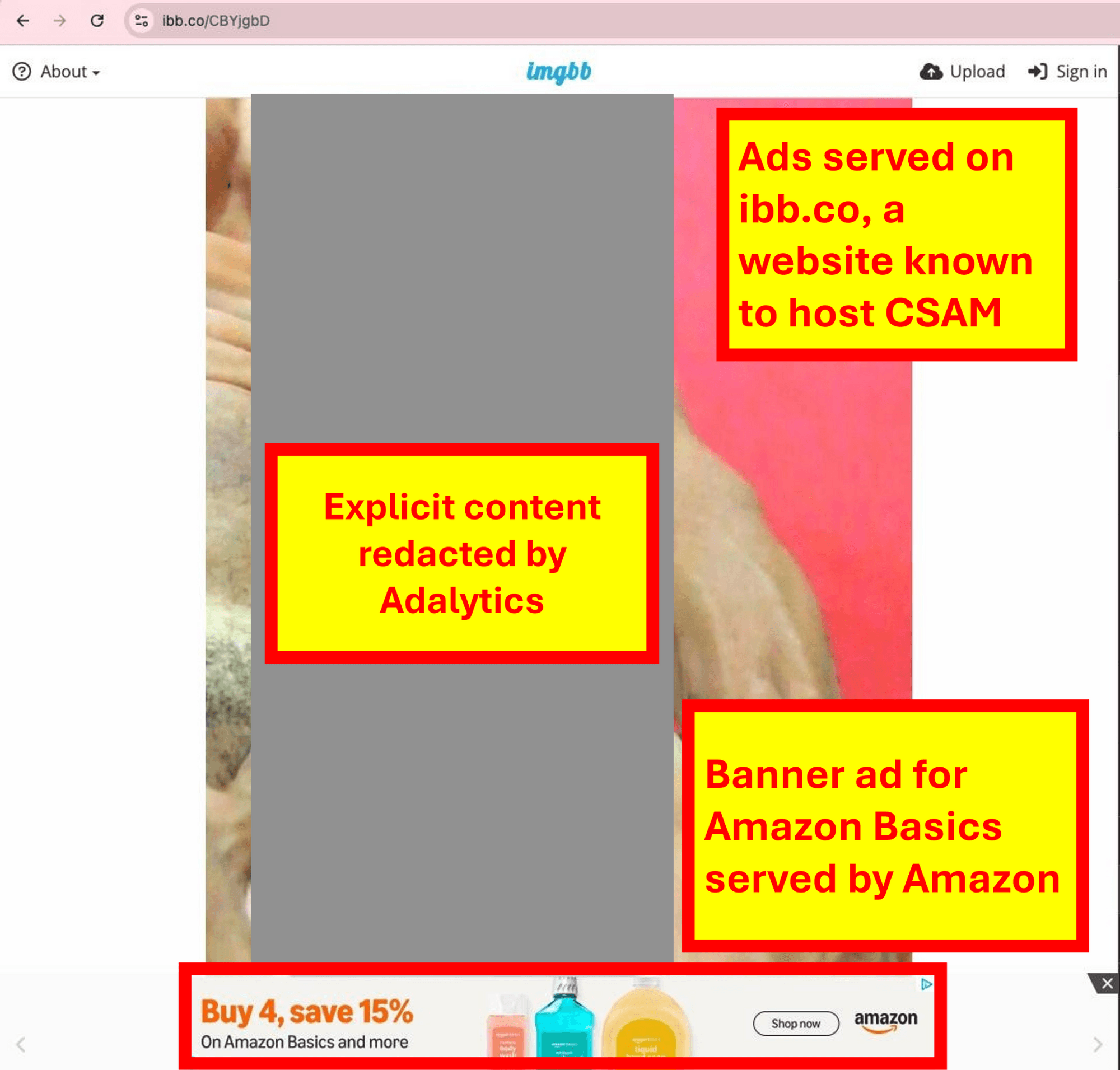

Screenshot of an Amazon owned Amazon Basics ad served by Amazon on ibb.co, a website known to host child sexual abuse materials

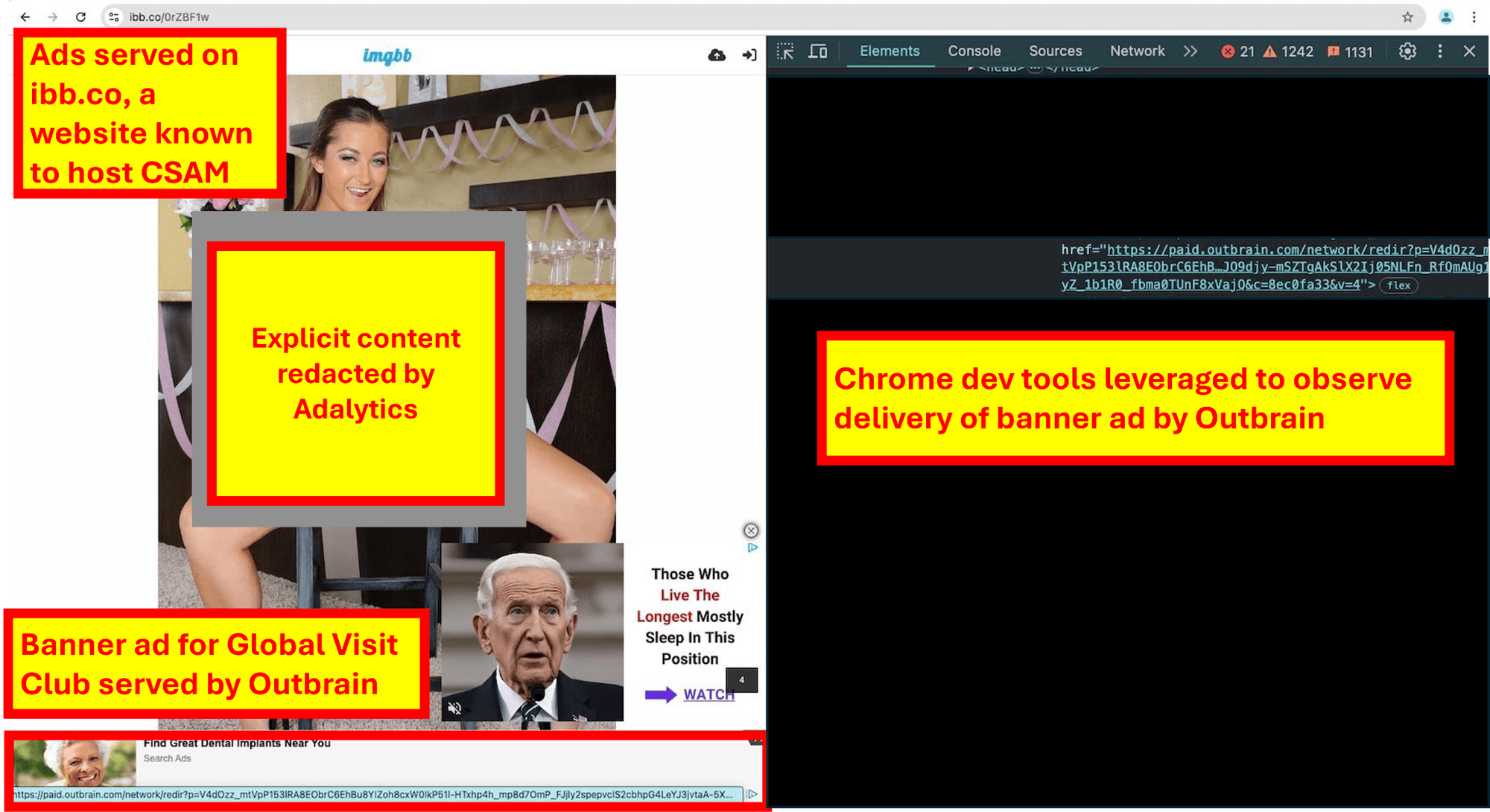

Screenshot of a Global Visit Club ad served by Outbrain on ibb.co, a website known to host child sexual abuse materials

Screenshot of an Airbnb ad transacted through Triplelift by Microsoft on ibb.co, a website known to host child sexual abuse materials

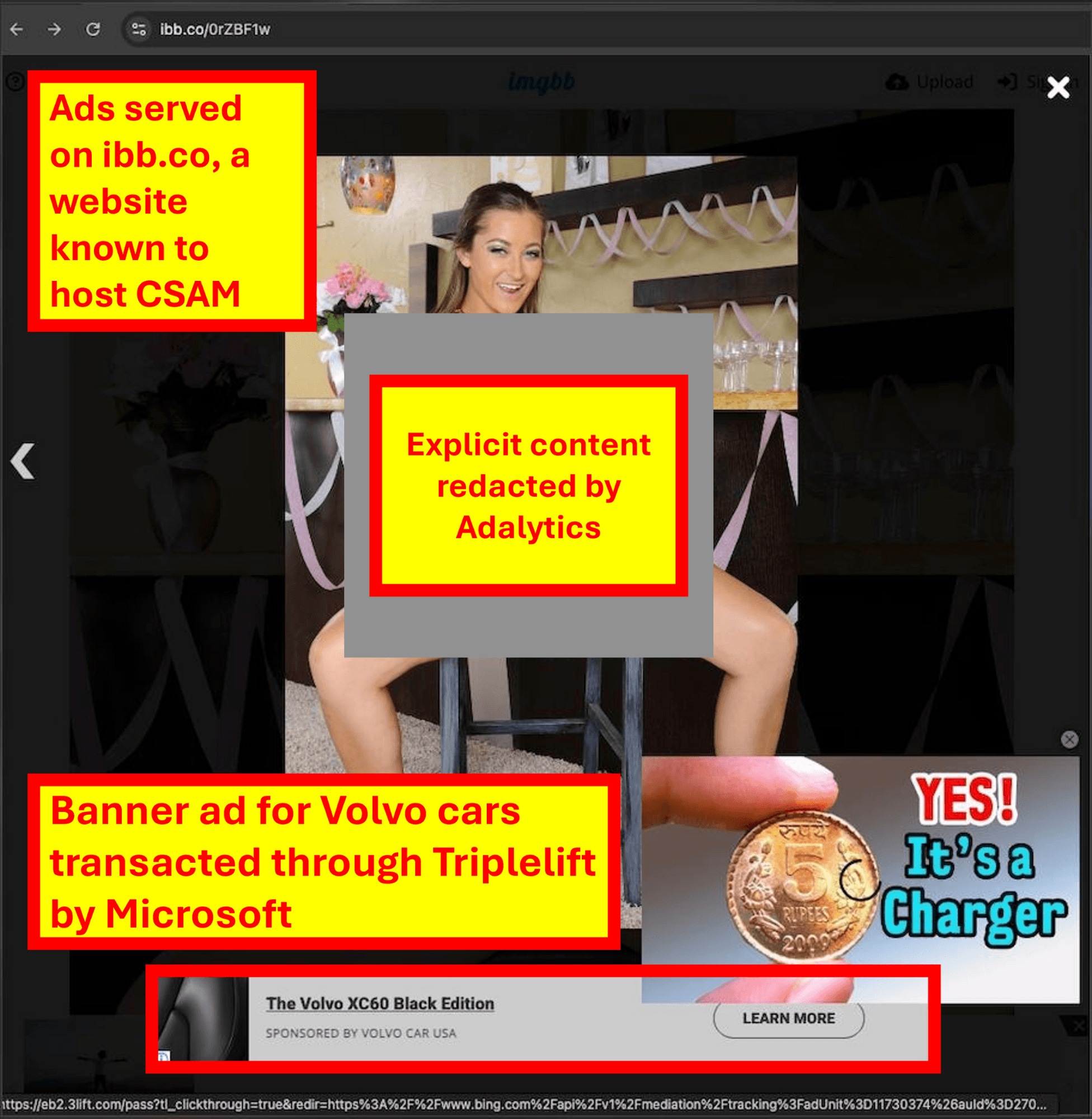

Screenshot of a Volvo ad transacted through Triplelift by Microsoft on ibb.co, a website known to host child sexual abuse materials

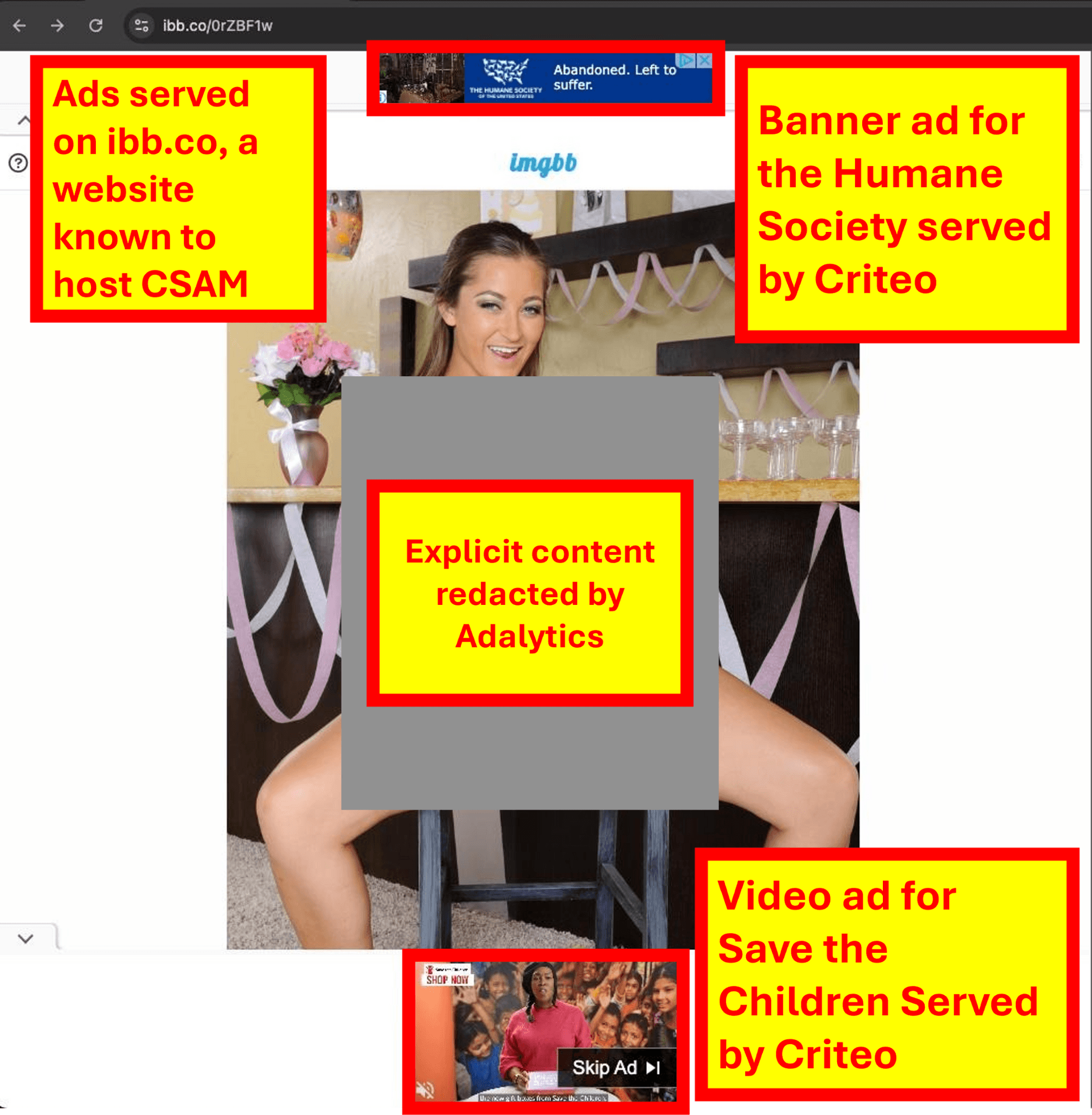

Screenshot of a Humane Society ad & a Save the Children ad served by Criteo on ibb.co, a website known to host child sexual abuse materials
