Executive Summary

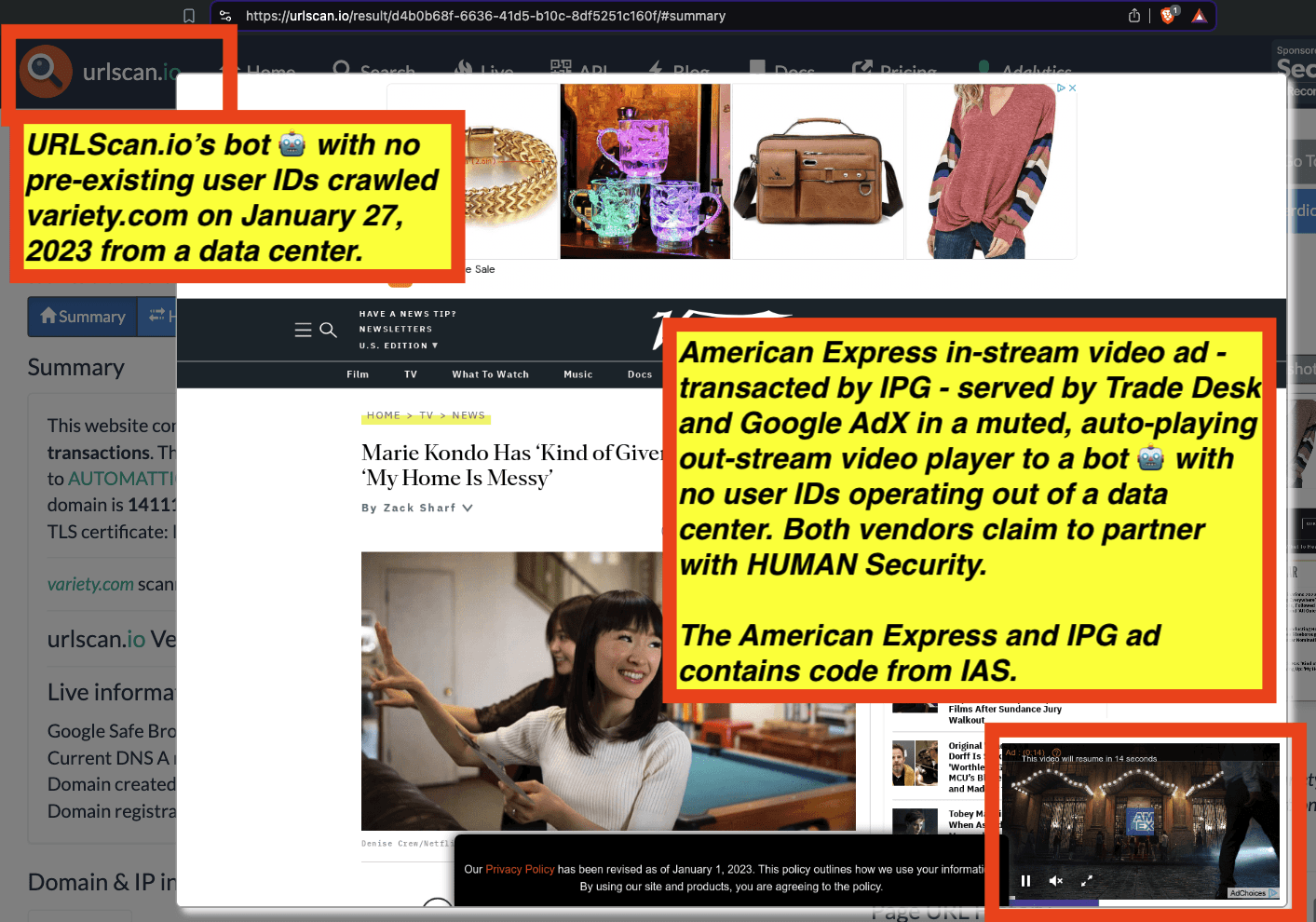

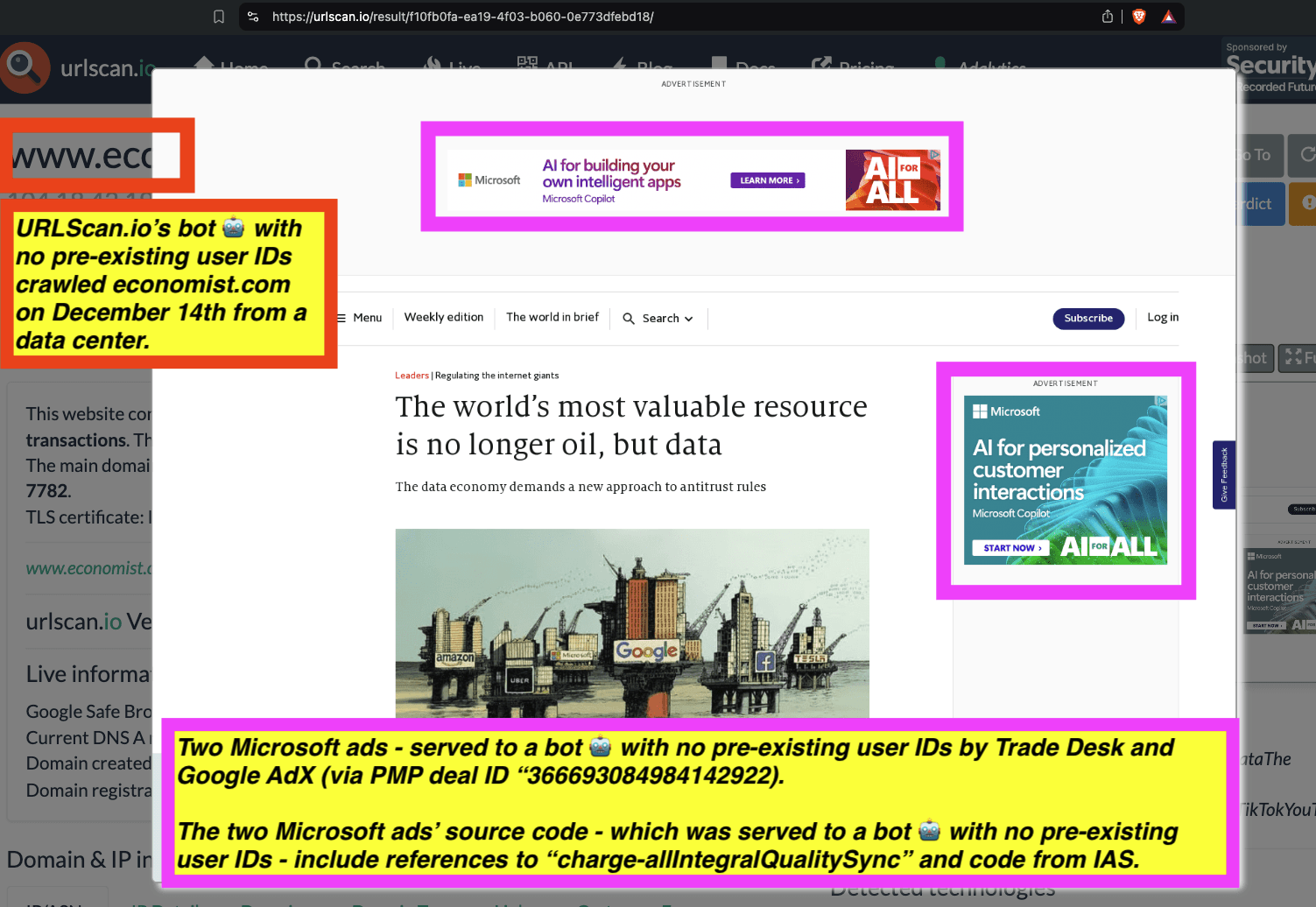

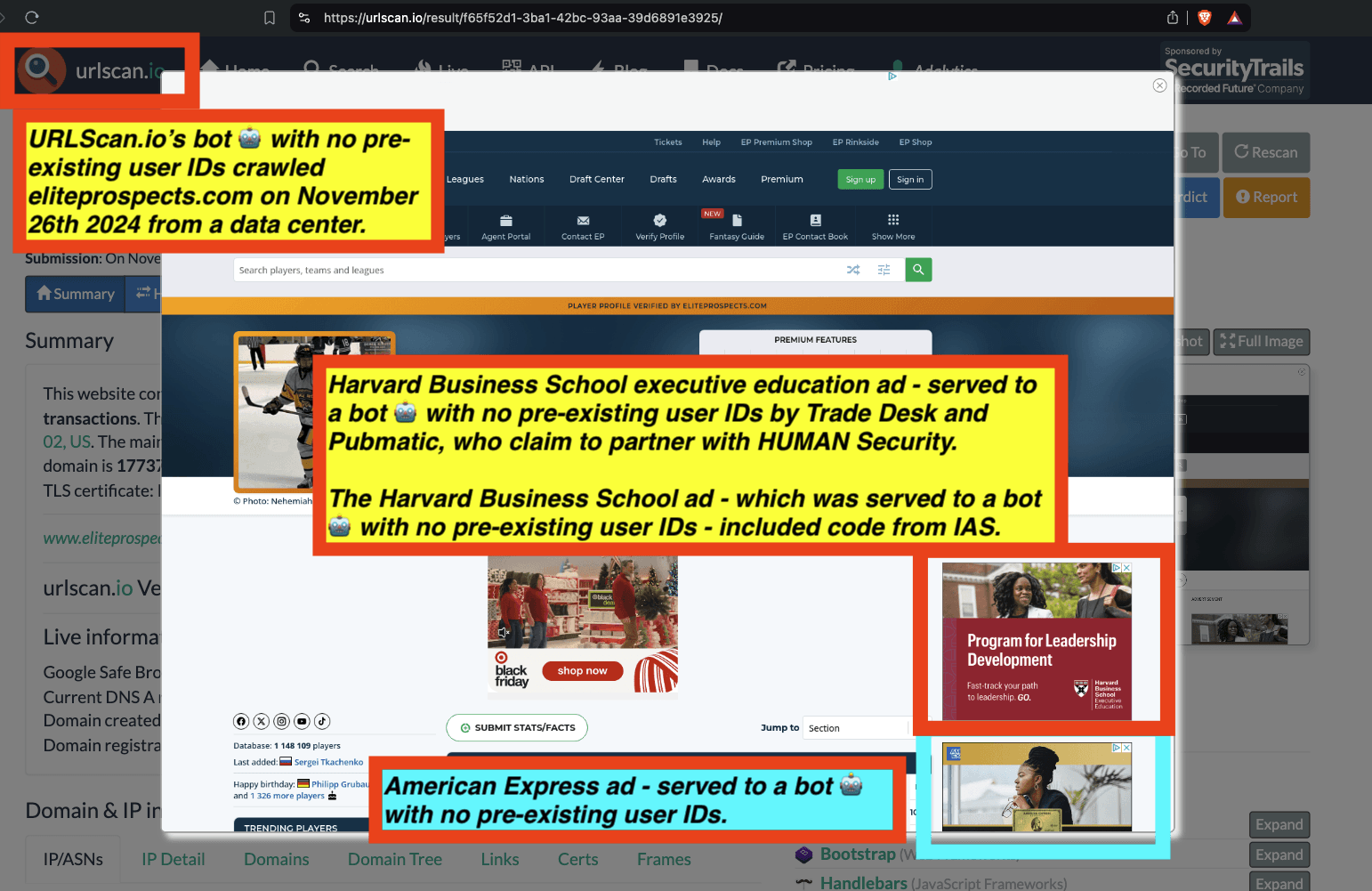

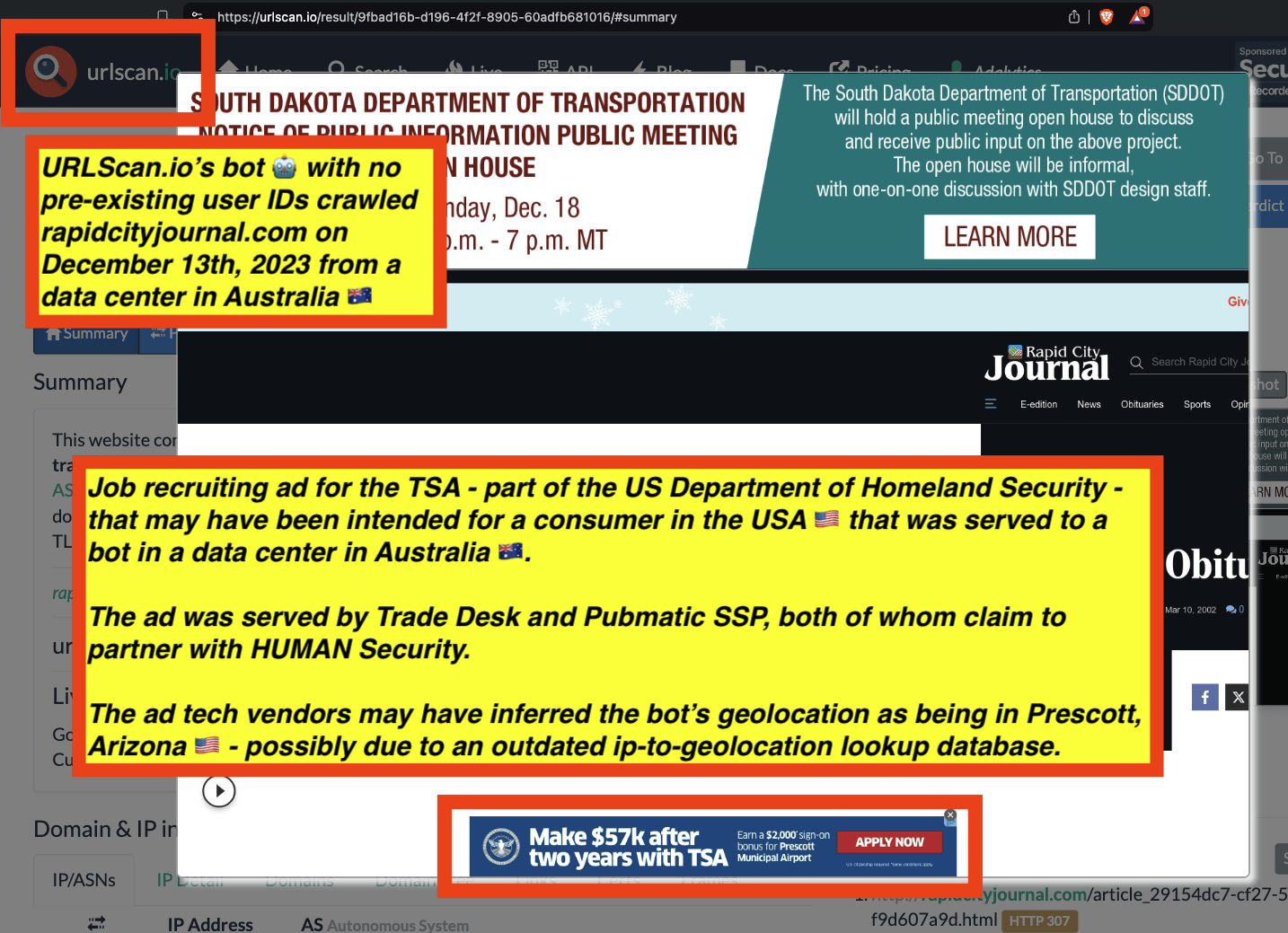

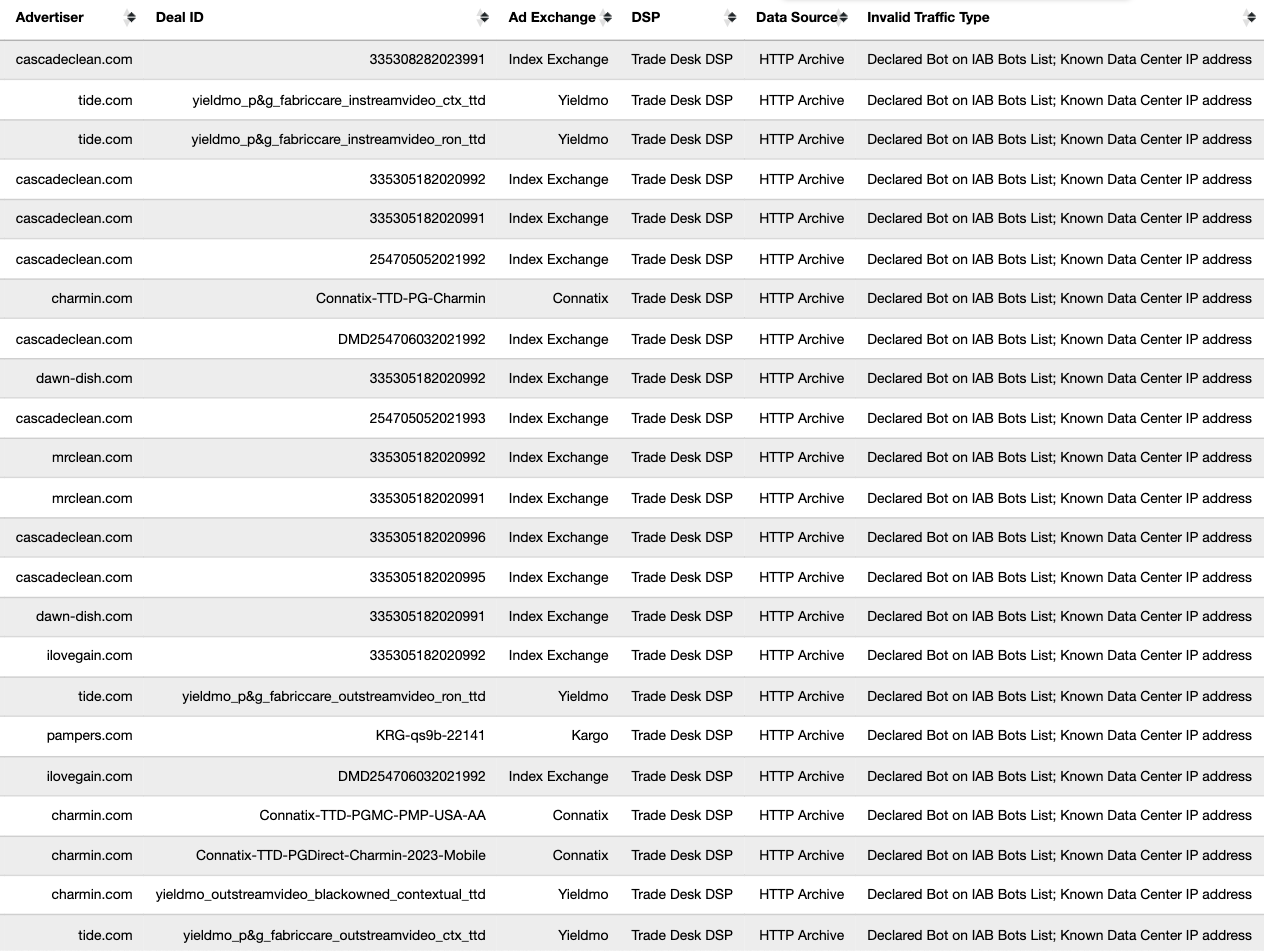

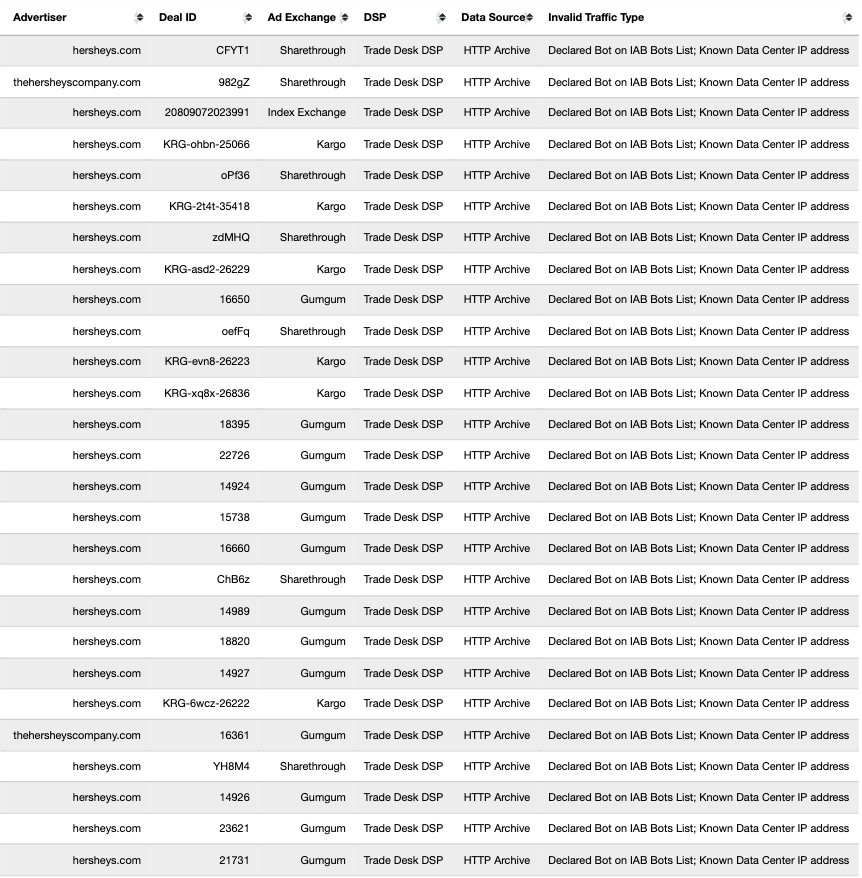

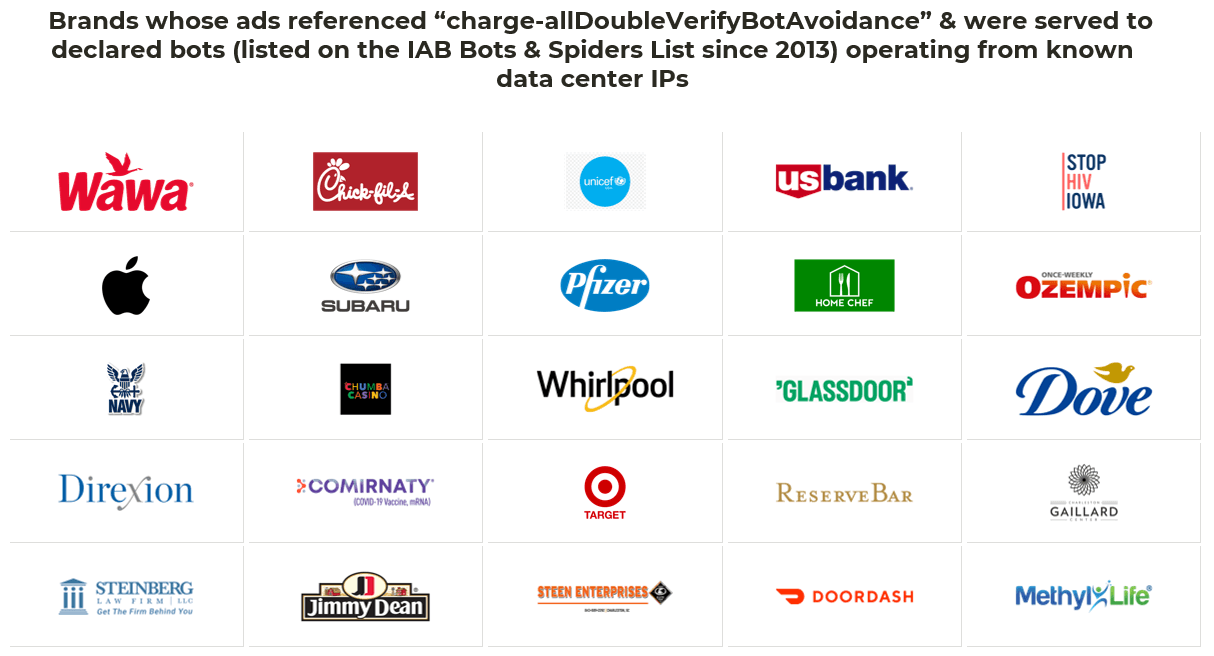

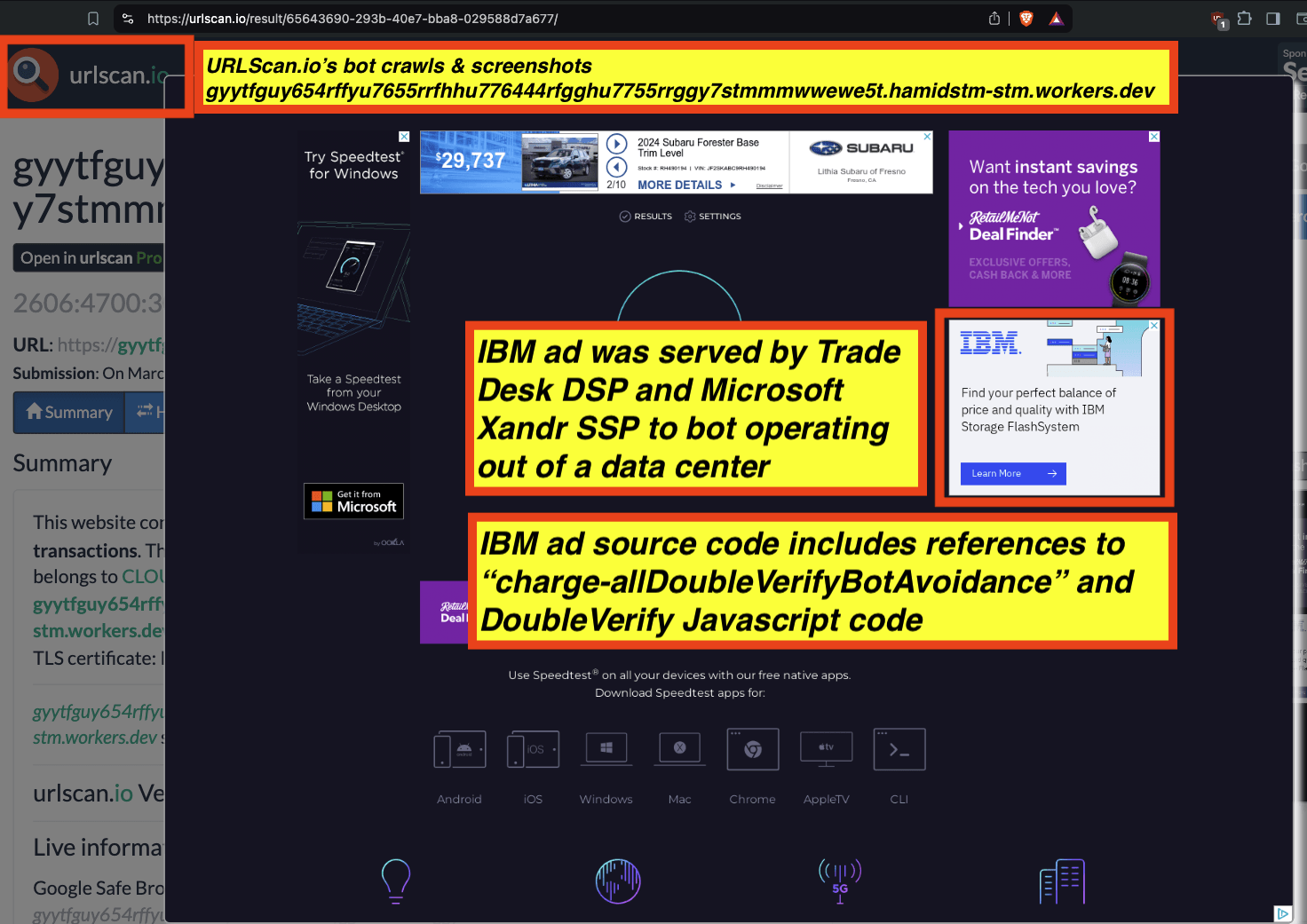

During the course of several analyses on behalf of Fortune 500 advertisers, impression level log file data and financial invoices suggested that advertisers were billed by ad tech vendors for ad impressions served to declared bots operating out of known data center server farms. Some of these bots are on the IAB Tech Lab Bots & Spider List, and some of the data center IP addresses are on an industry reference list of known datacenter IPs with non-human (bot) traffic.

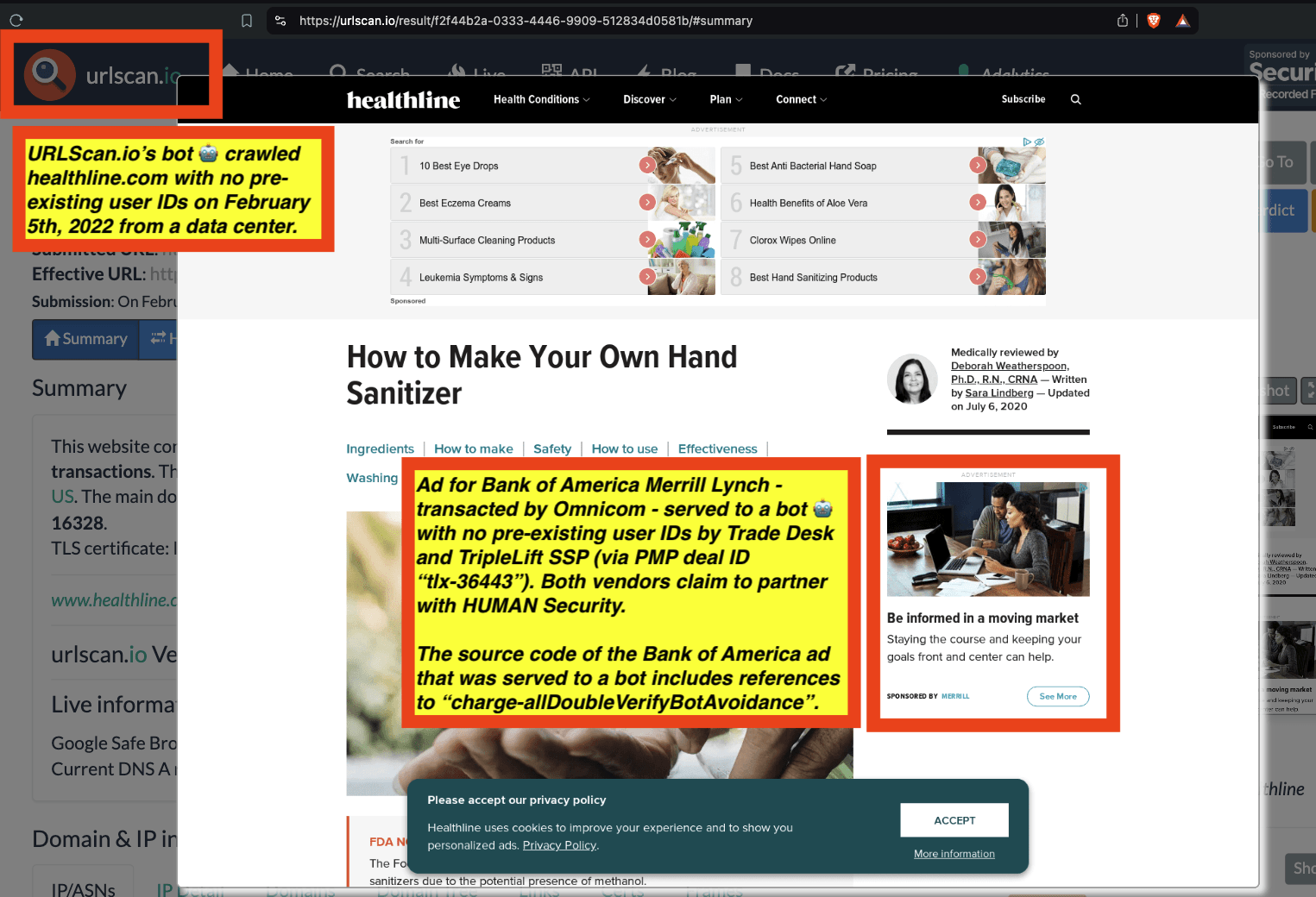

Some of these brands reported that they were spending millions of dollars each year specifically for “bot avoidance” pre-bid segments and technology from various ad verification vendors. This technology is allegedly intended to prevent advertisers’ ads from being served to bots or to other invalid or fraudulent traffic.

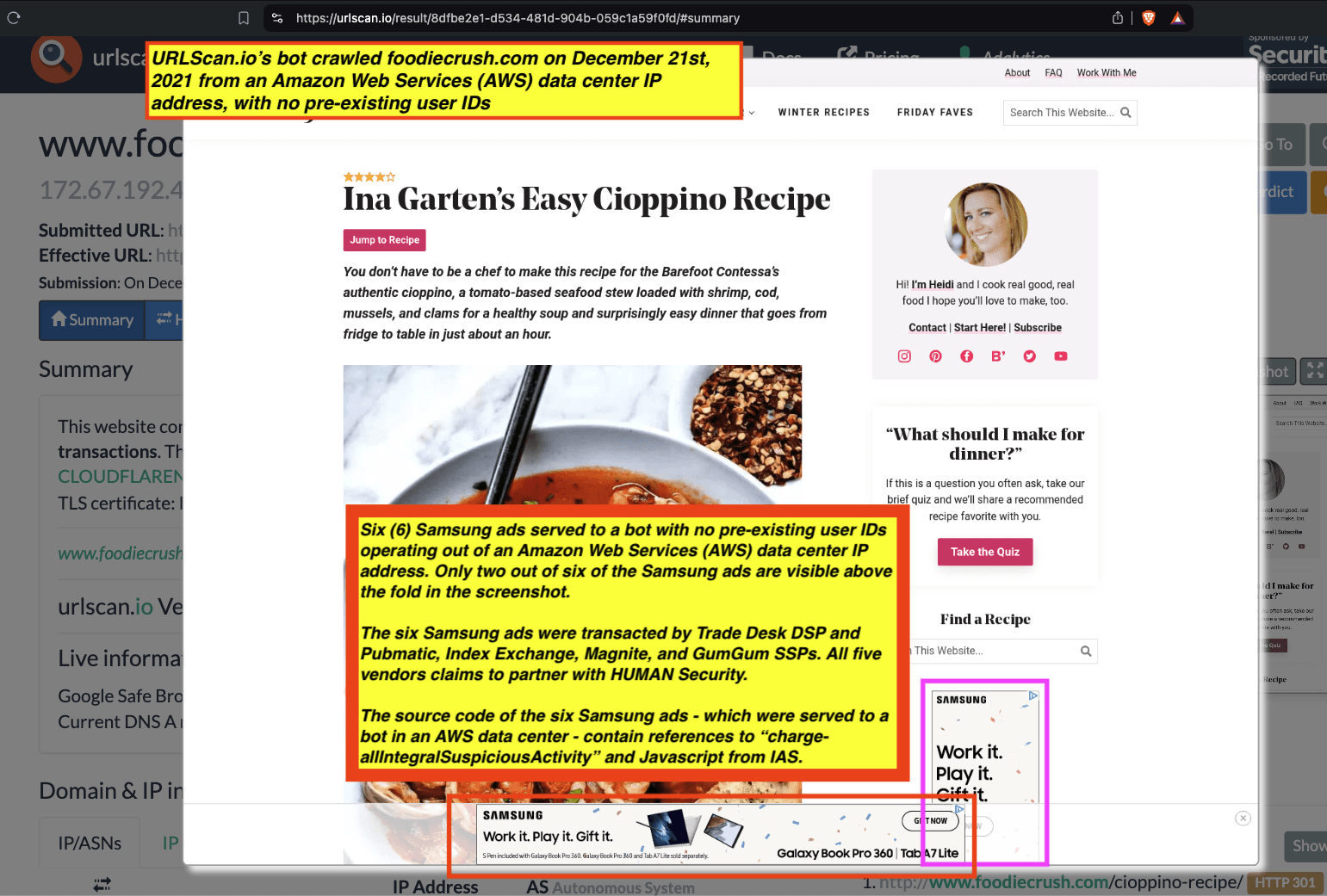

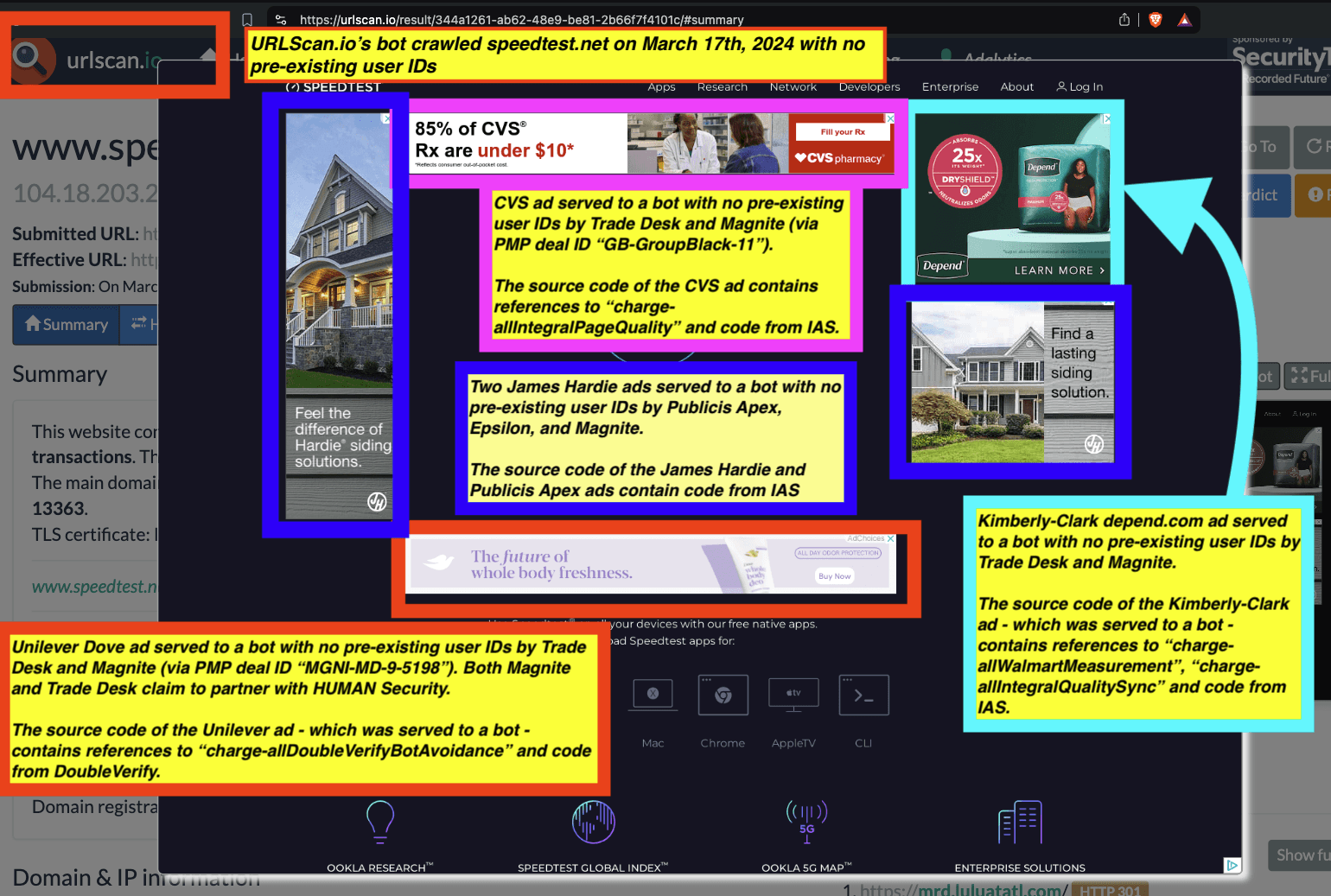

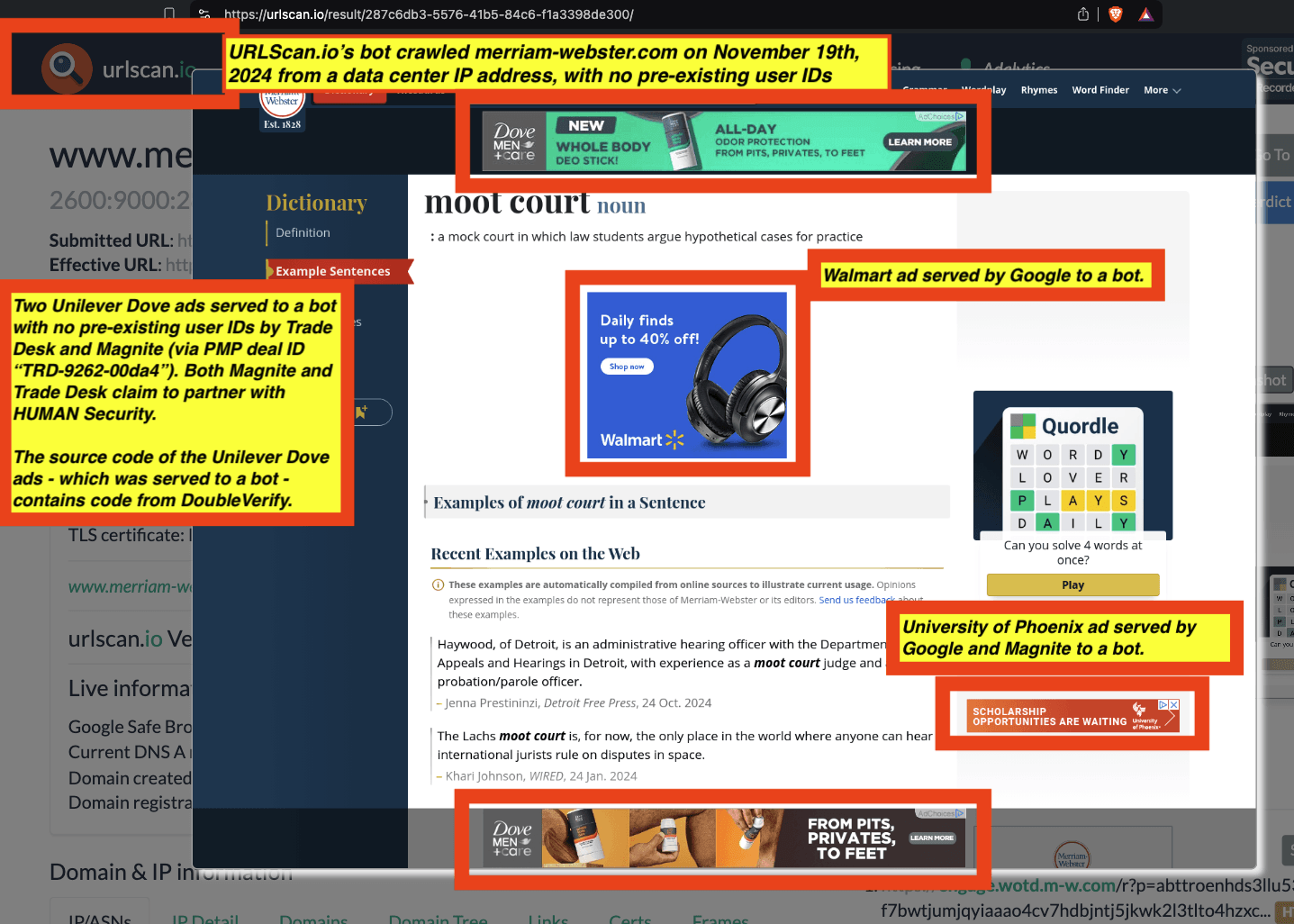

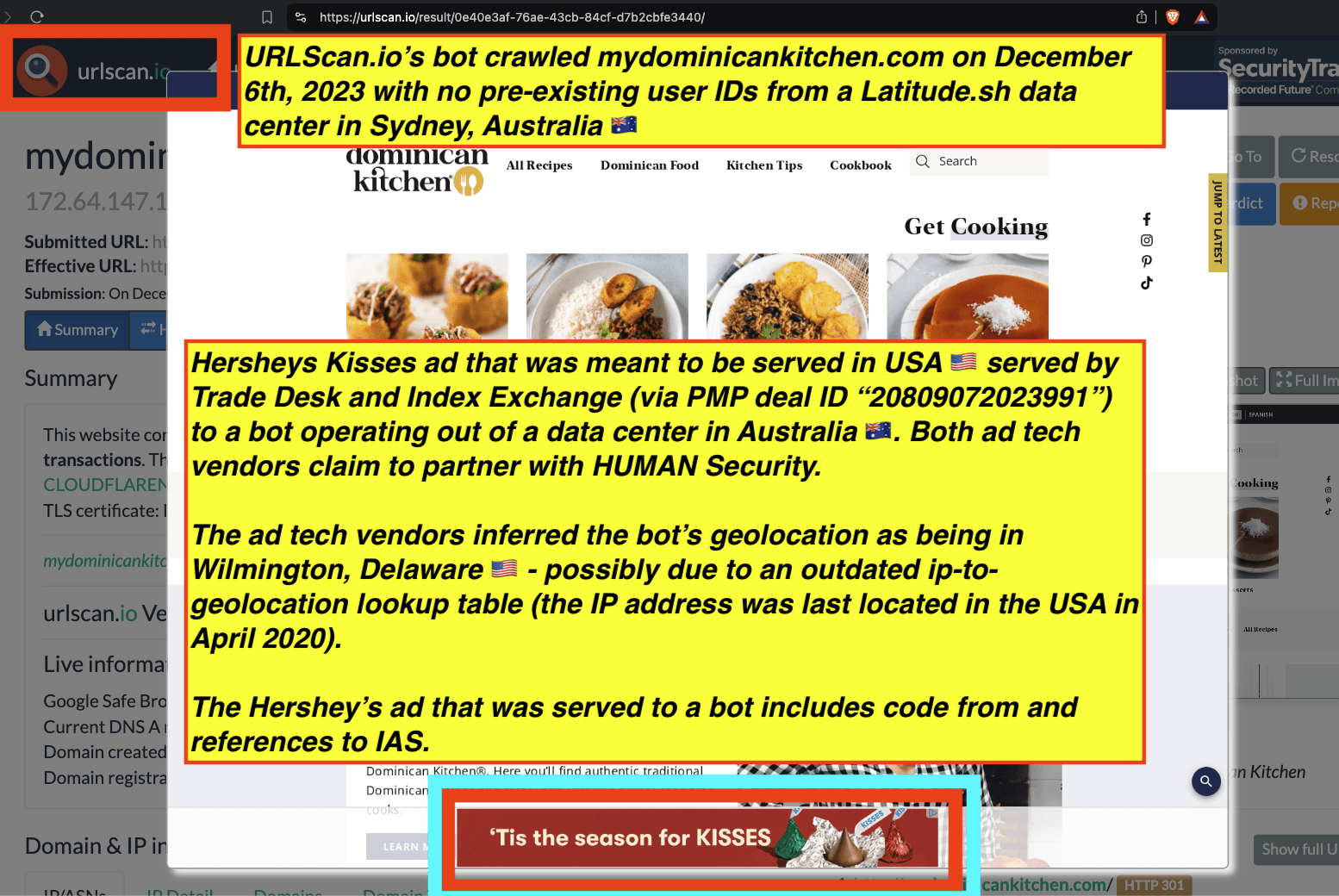

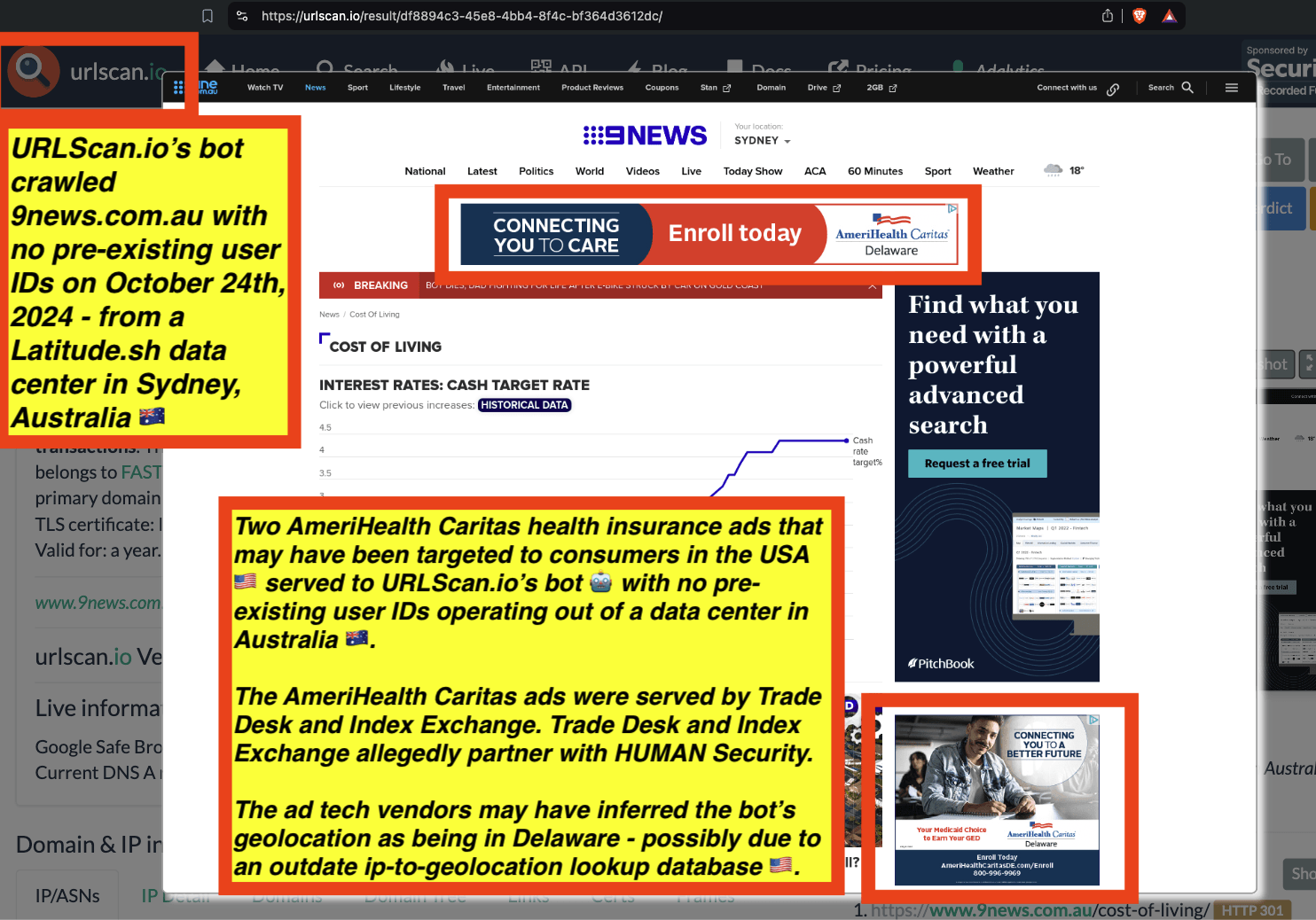

Adalytics obtained access to three different web visitation datasets generated entirely by bots. The cumulative size of these internet crawling bot datasets is over a petabyte of web traffic data, with more than two million different websites crawled, and more than seven years’ worth of crawling data. The data includes web crawling sessions from bots based in the USA, Canada, Latin America, Europe, Singapore, Australia, New Zealand, and Japan.

All three bot datasets come from bots that crawl the web for “benign” reasons, such as academic research or cybersecurity. None of these three bots is designed to commit “ad fraud” or intentionally generate ad revenue or invalid traffic. The archiving bots run and access the Internet from data centers. Some of the bots announce themselves by declaring a known bot user agent. This enables websites, ad tech vendors, and verification vendors to know upfront who or what is requesting their services and where the traffic originates.

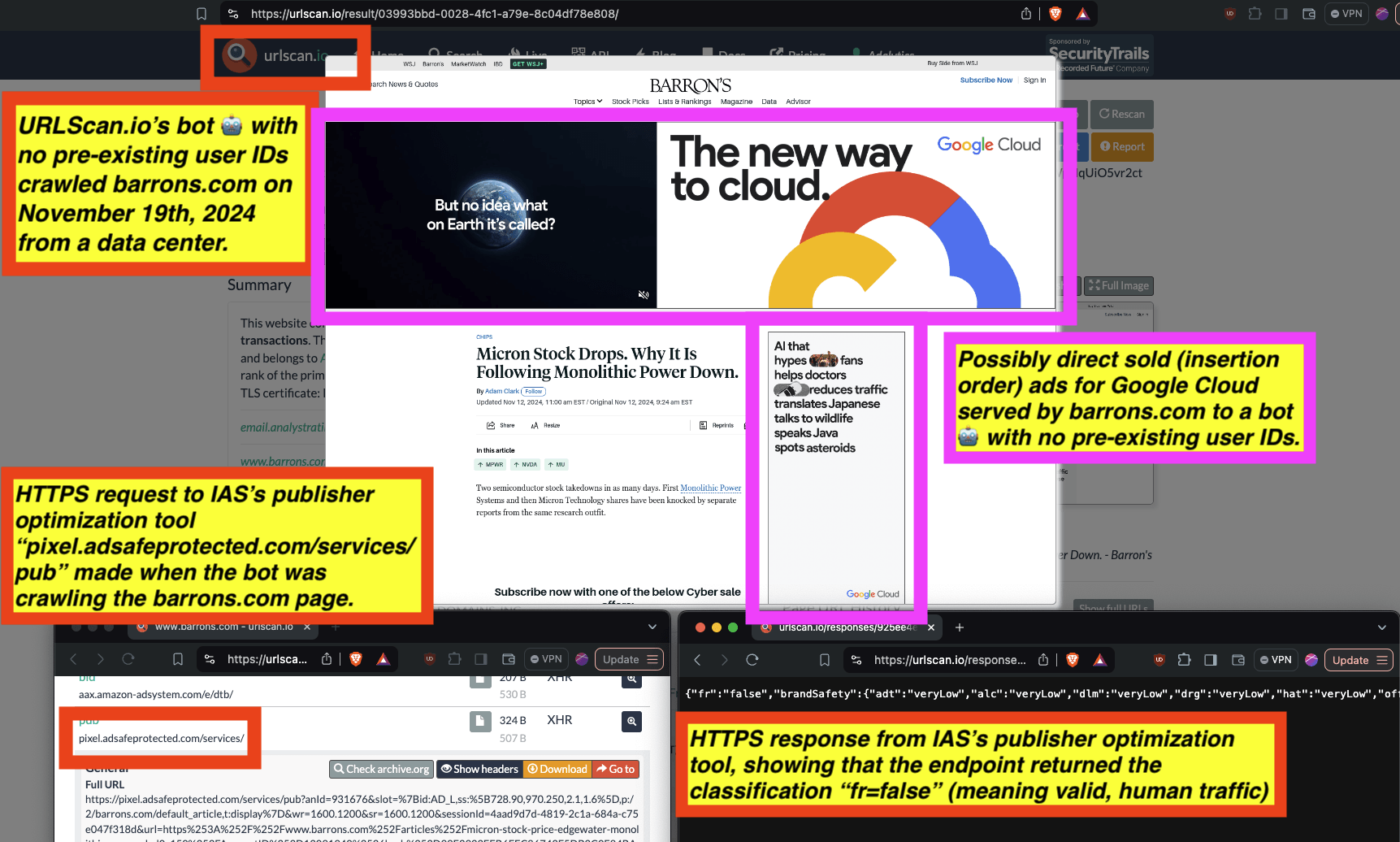

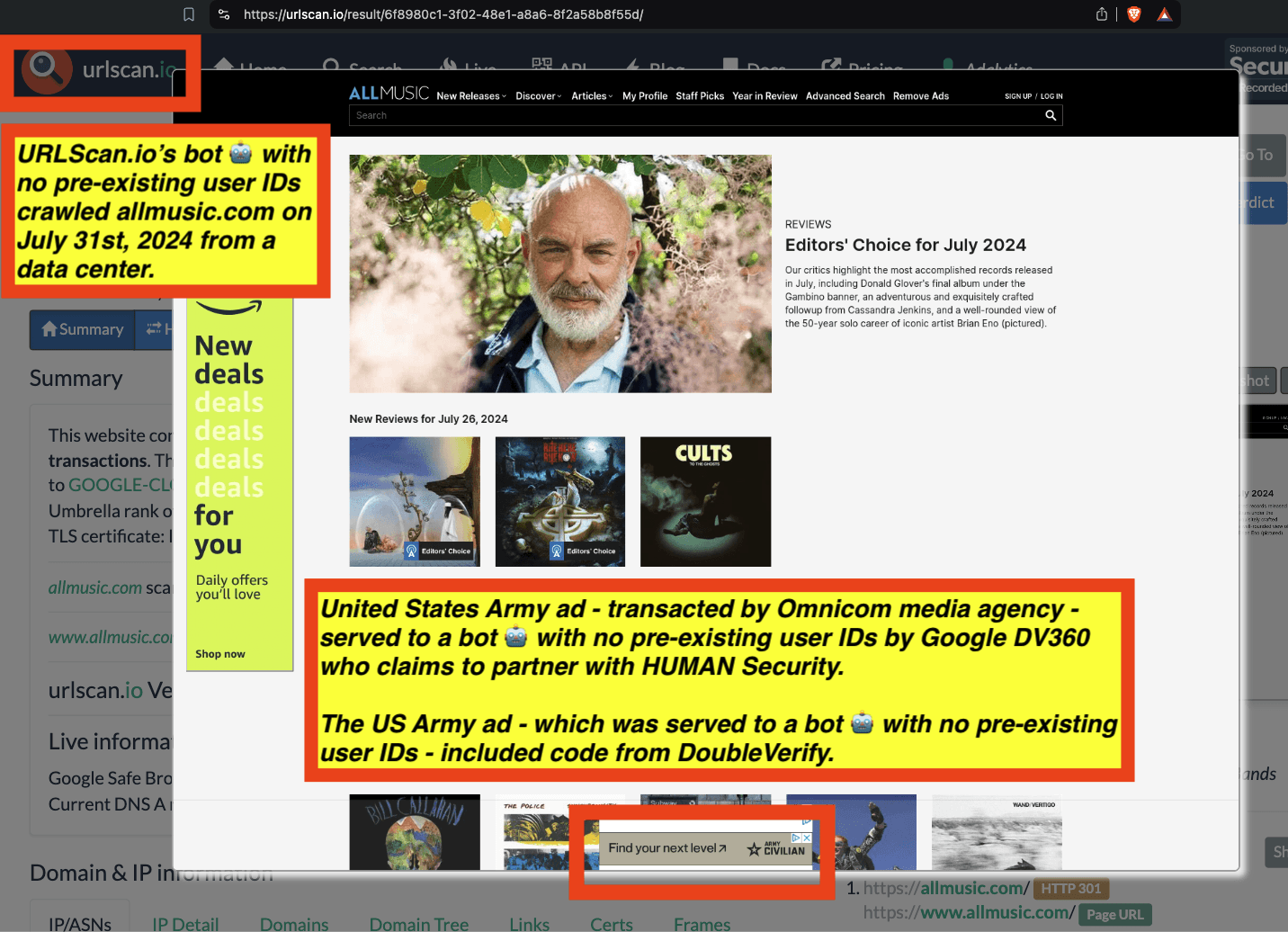

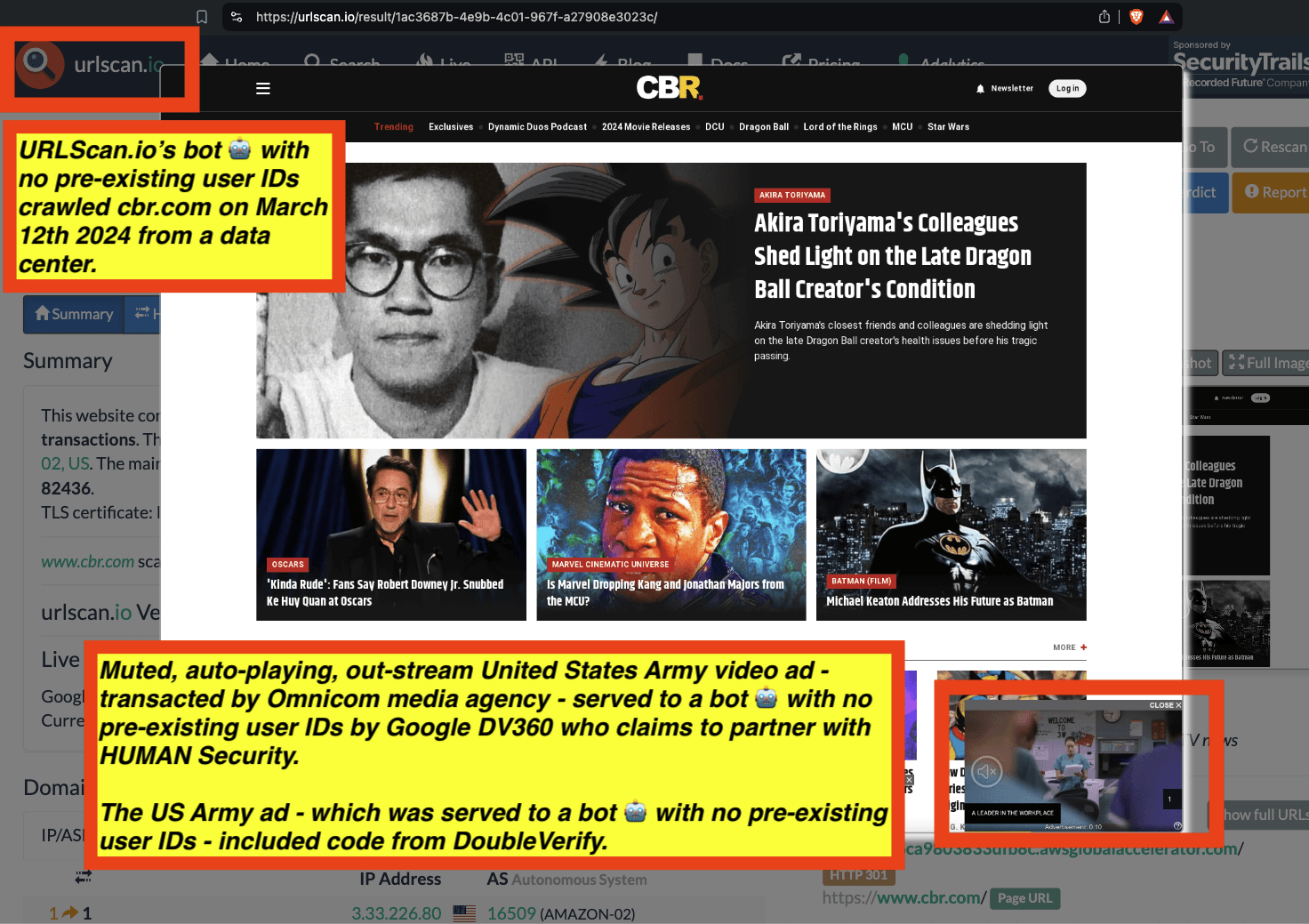

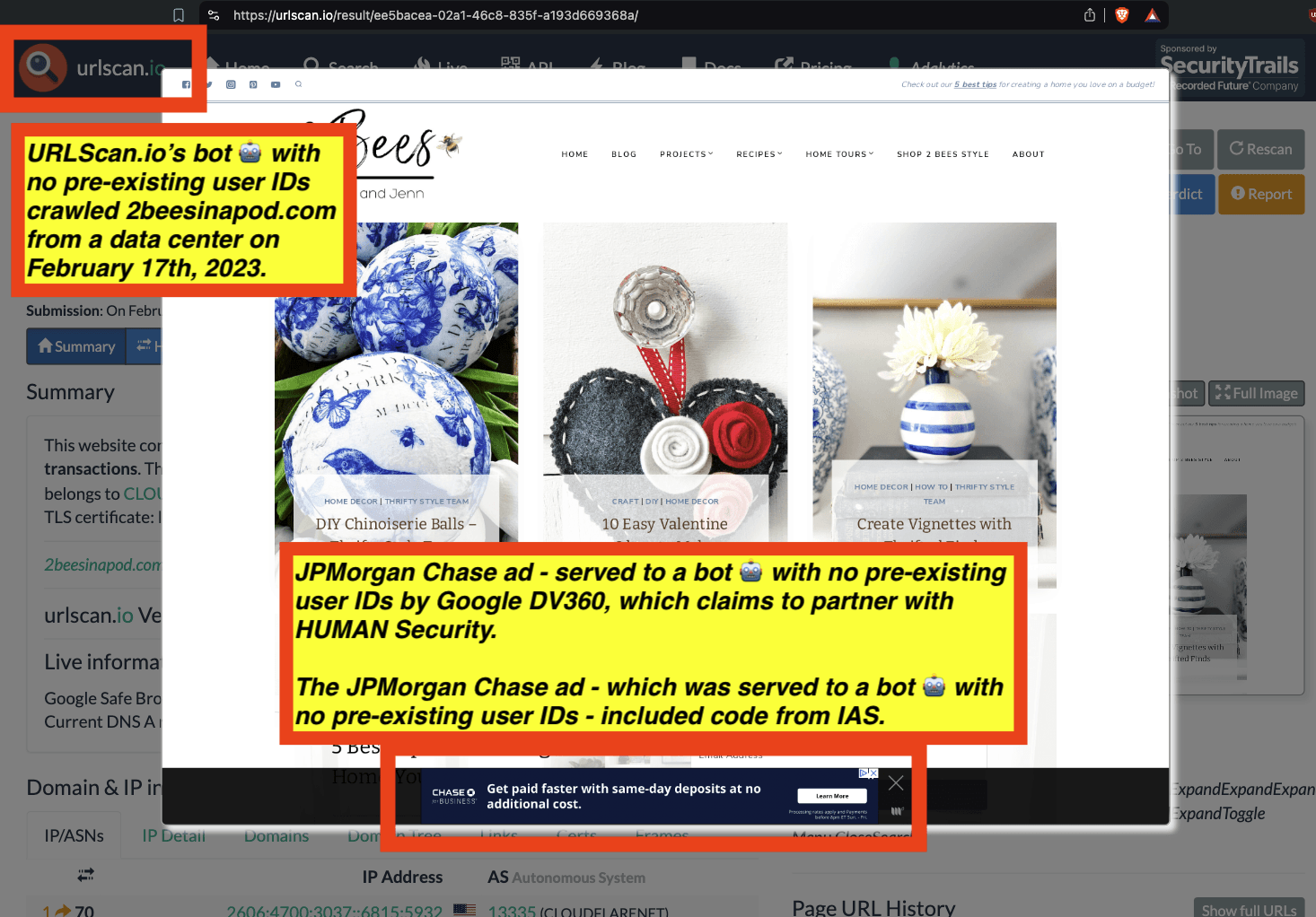

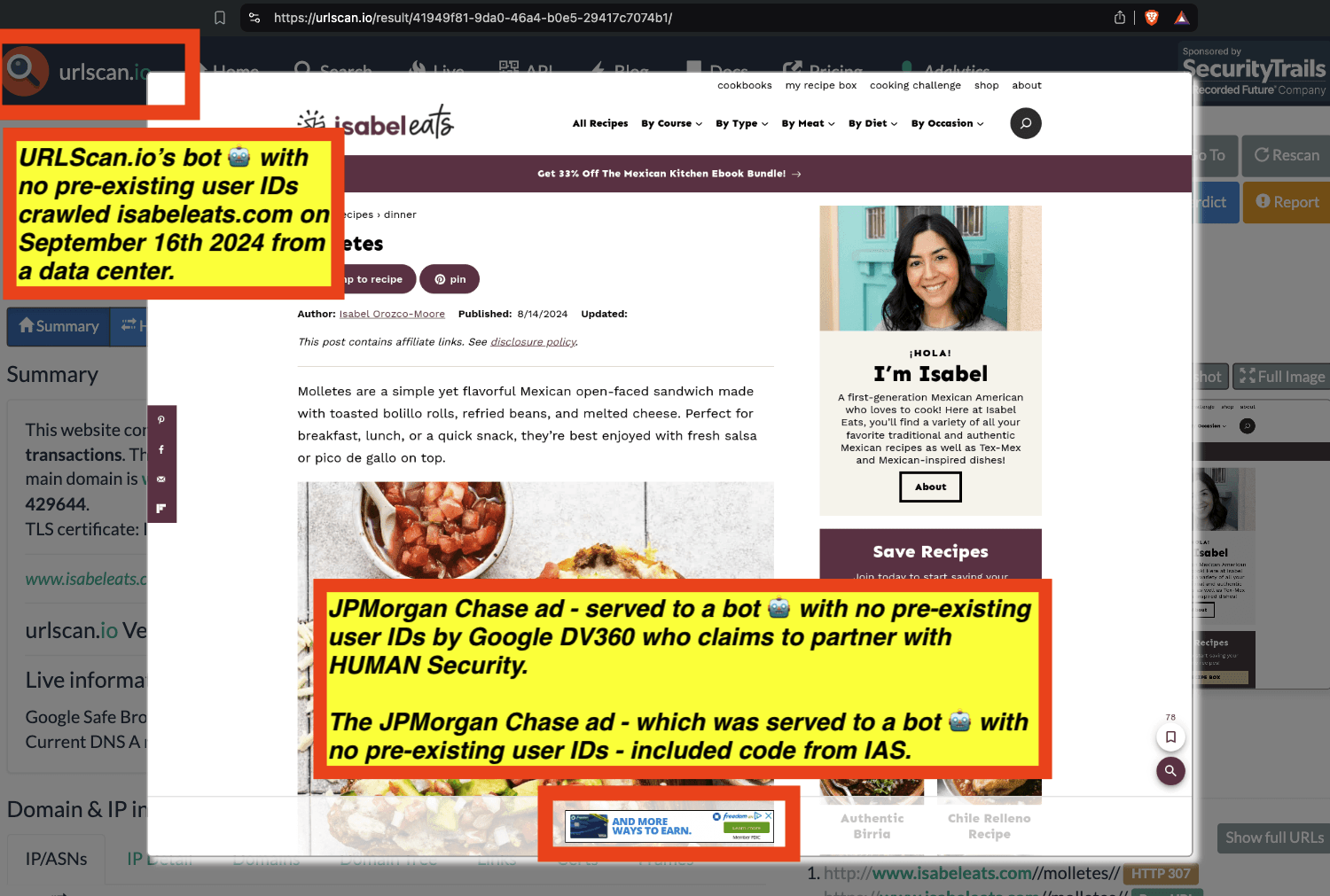

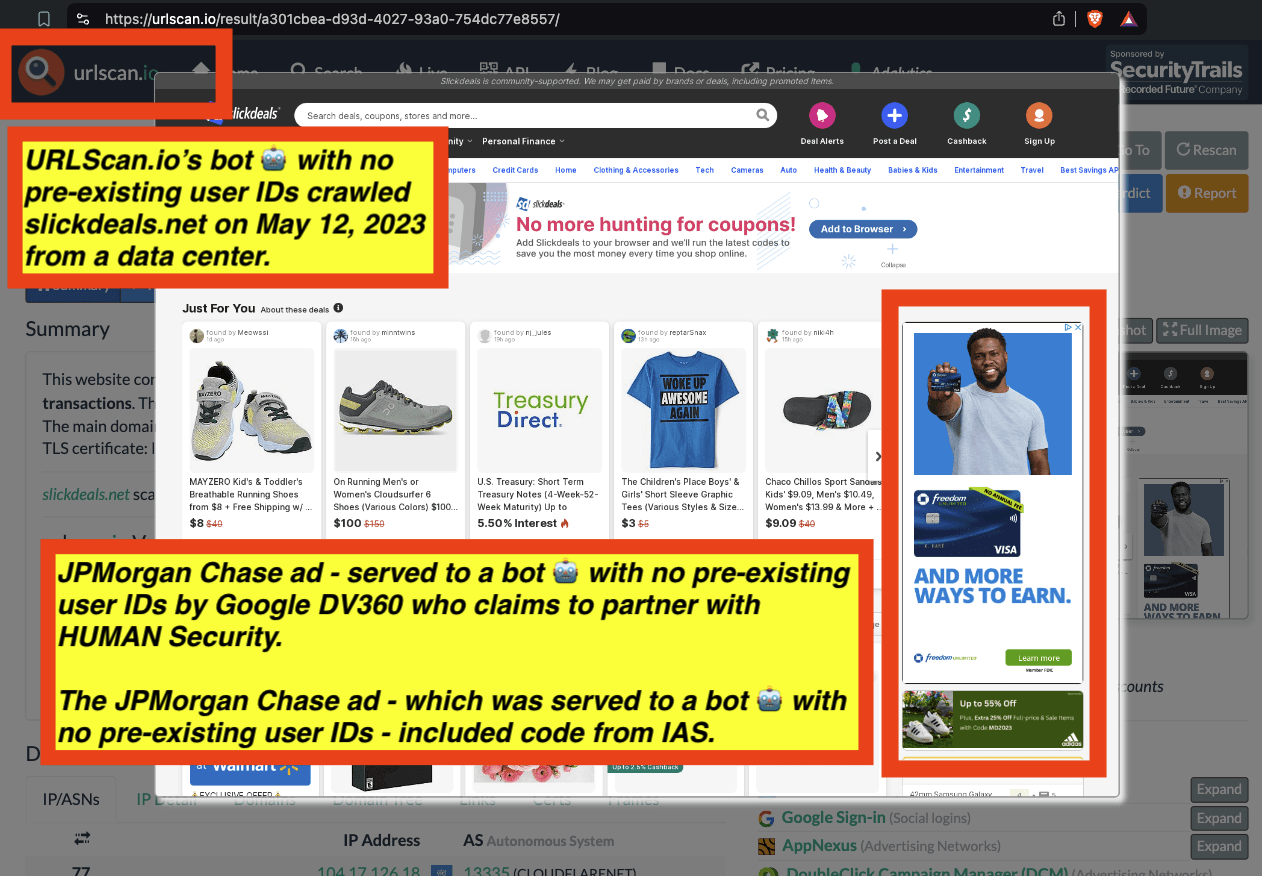

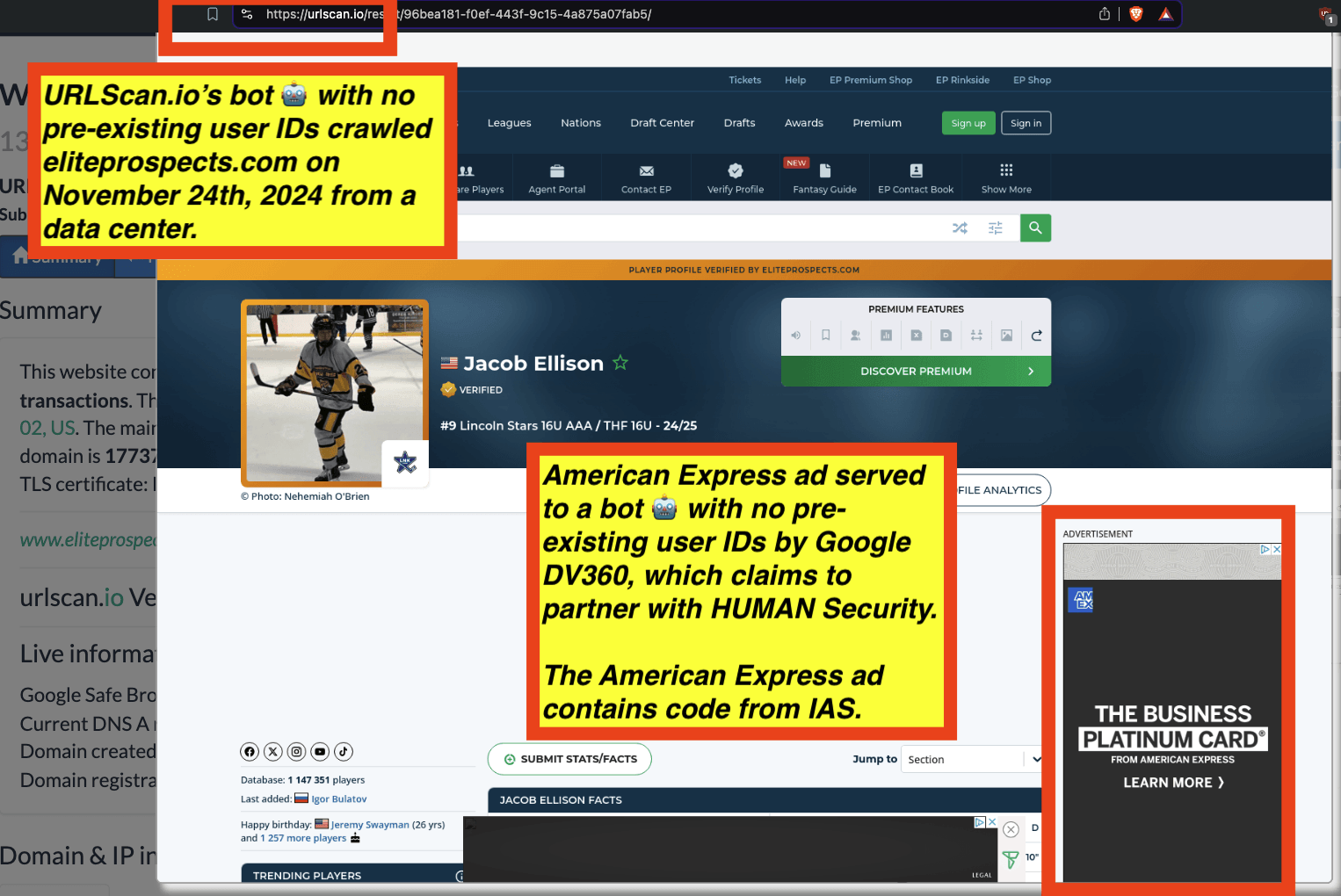

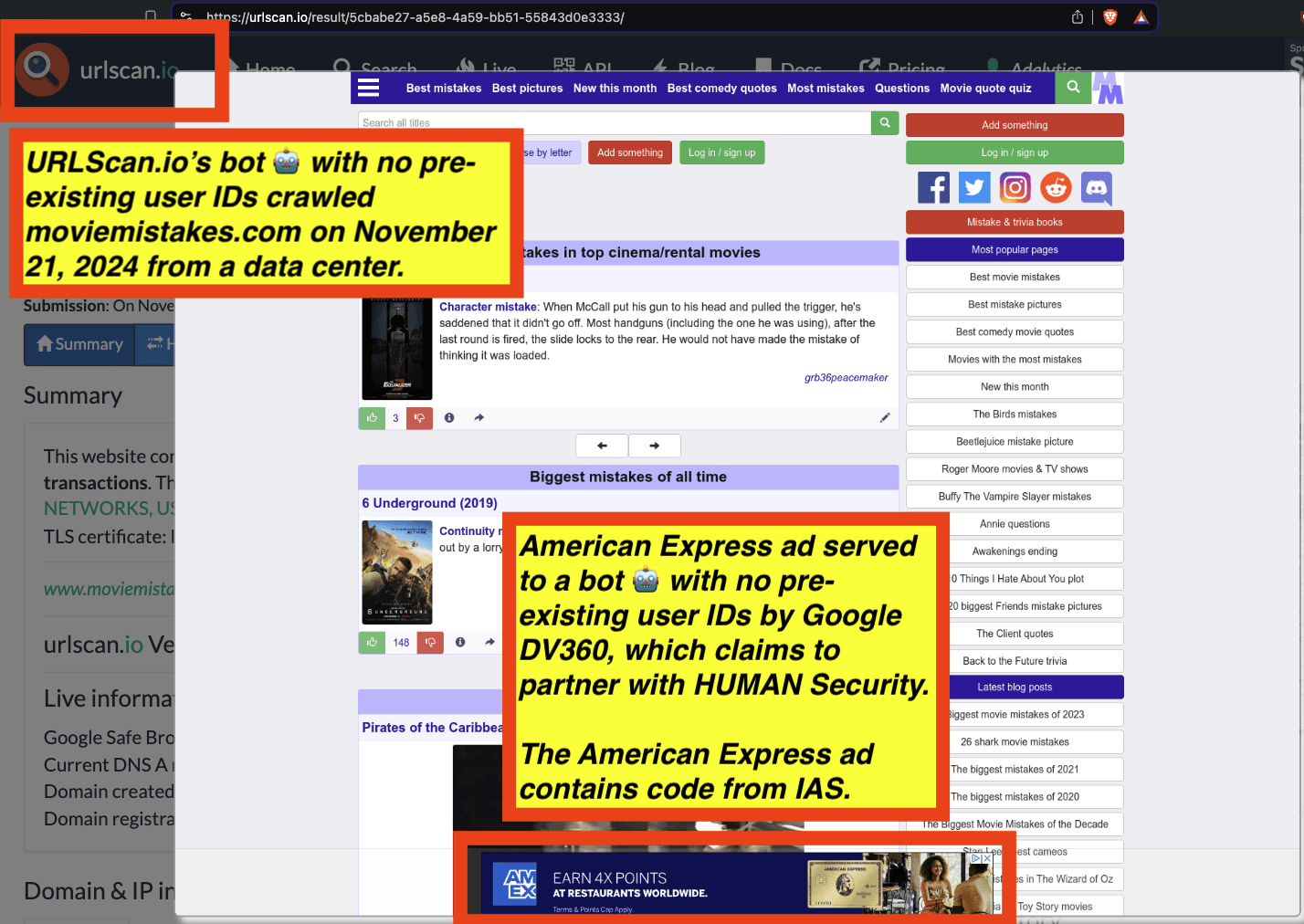

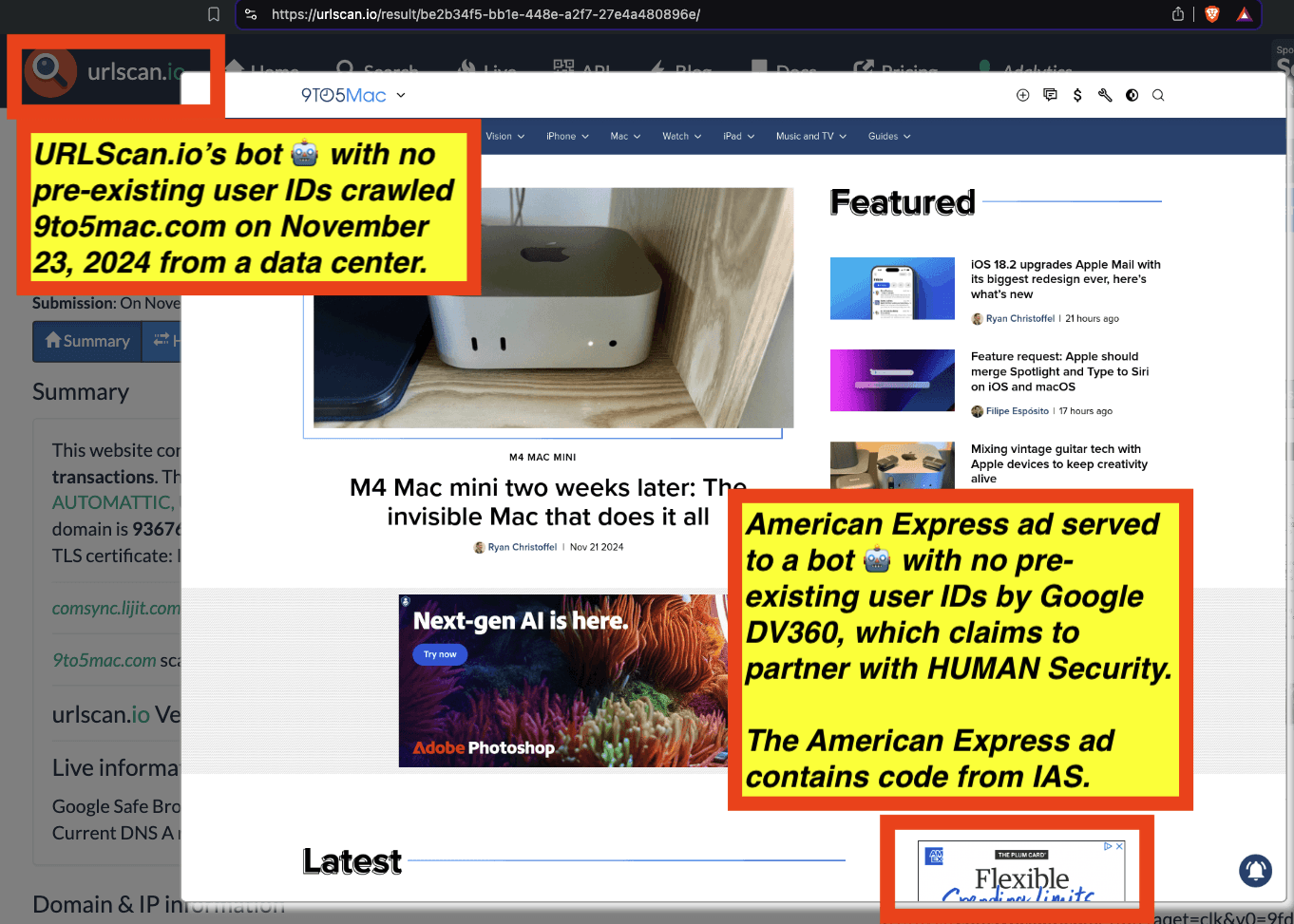

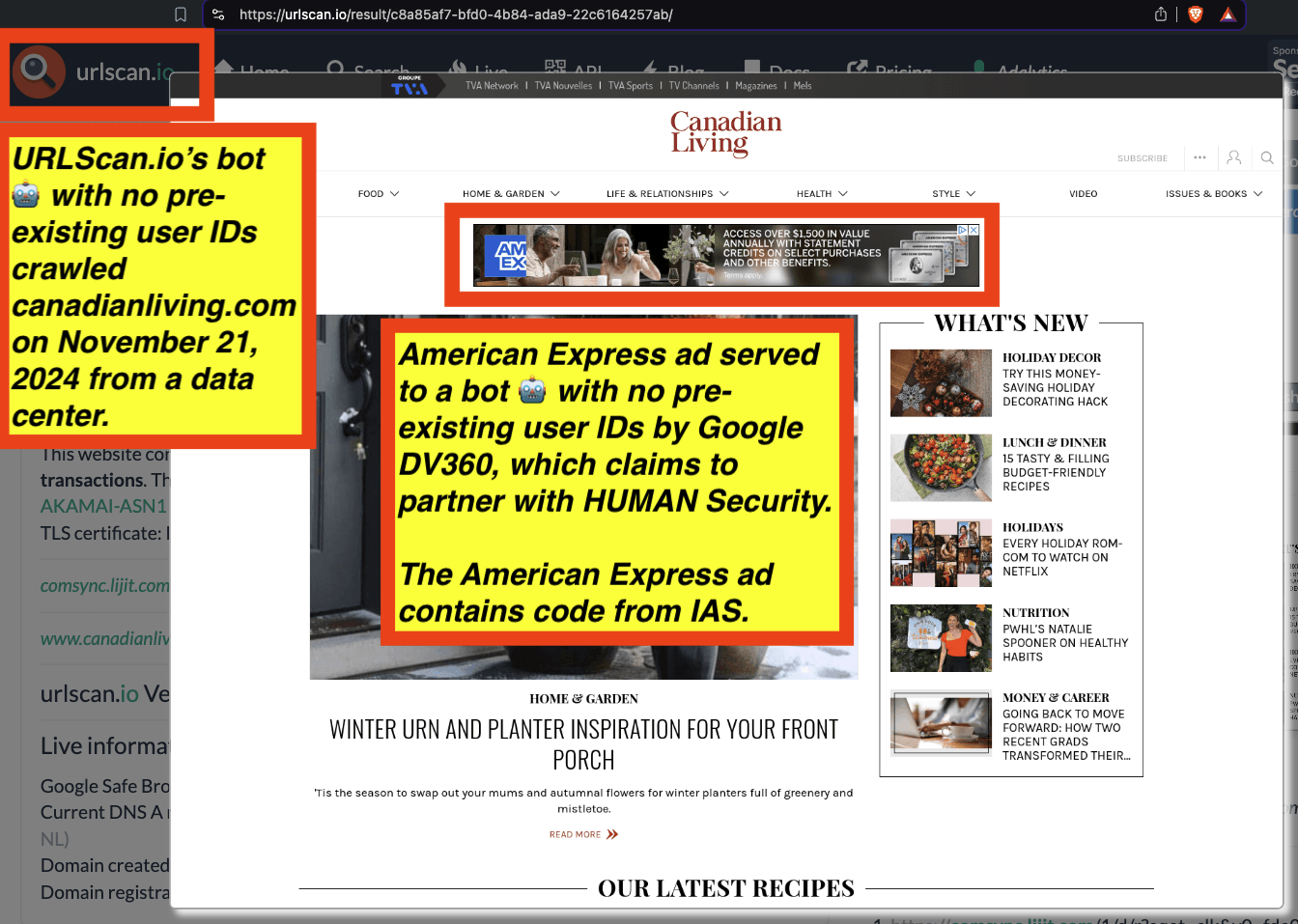

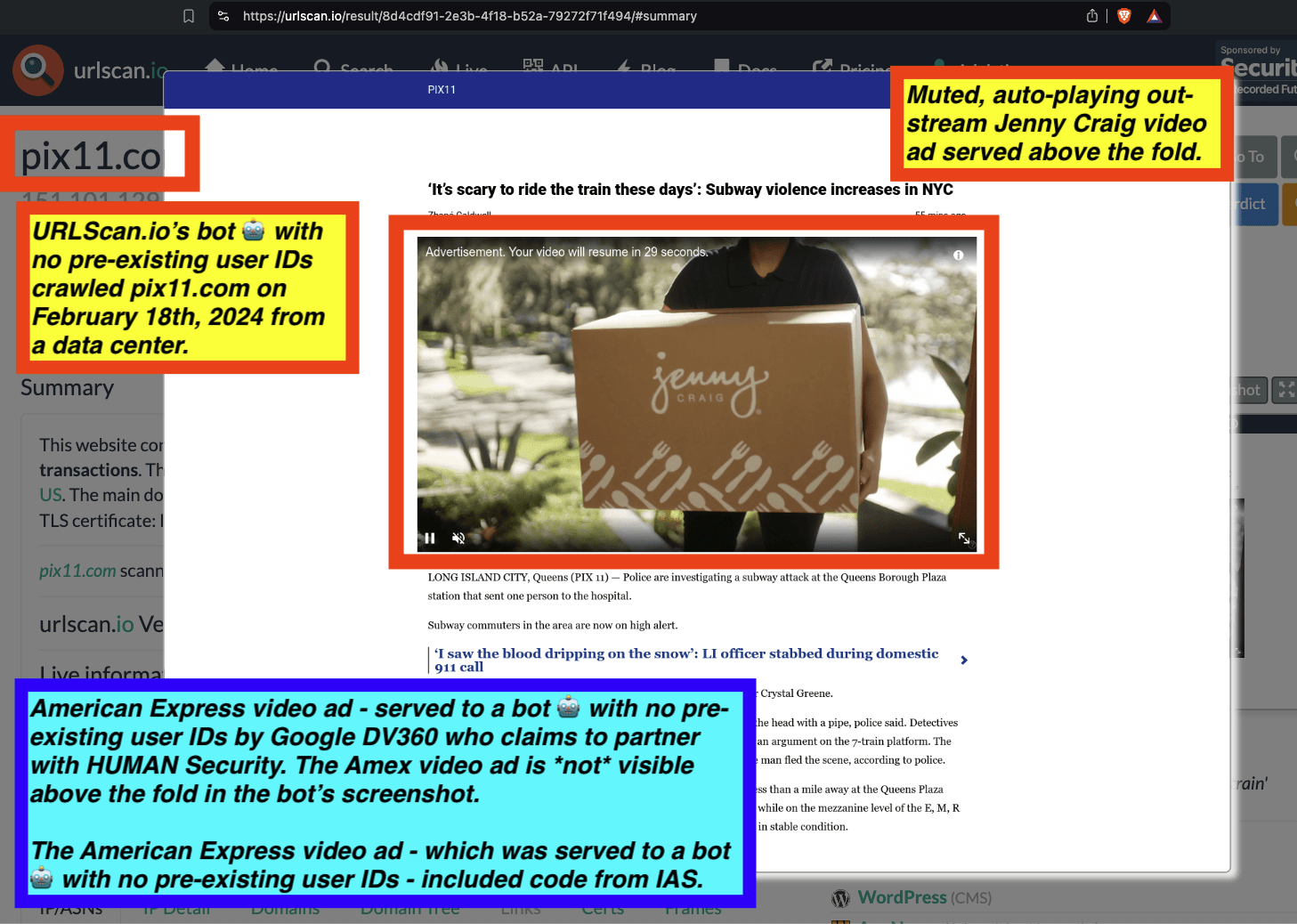

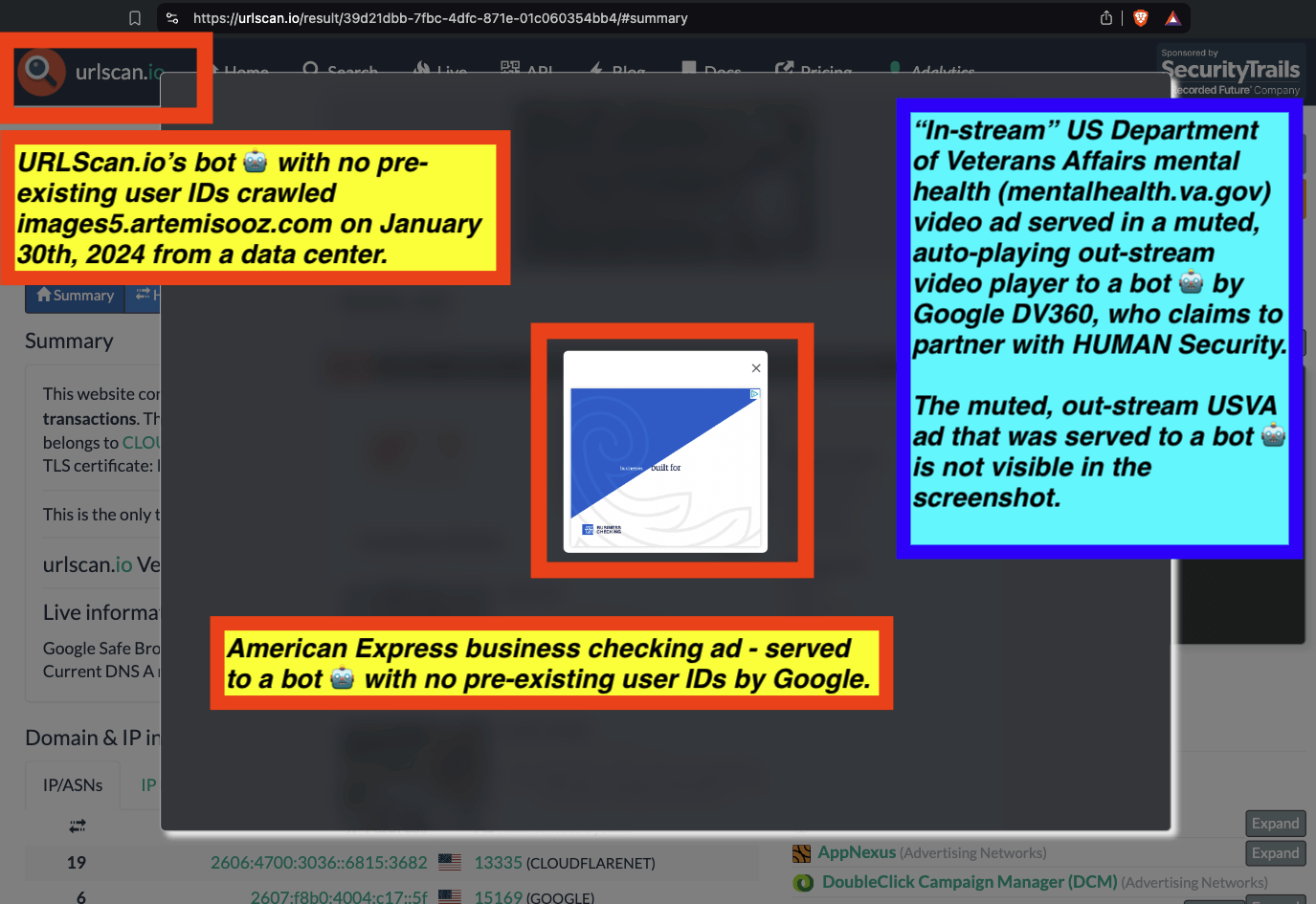

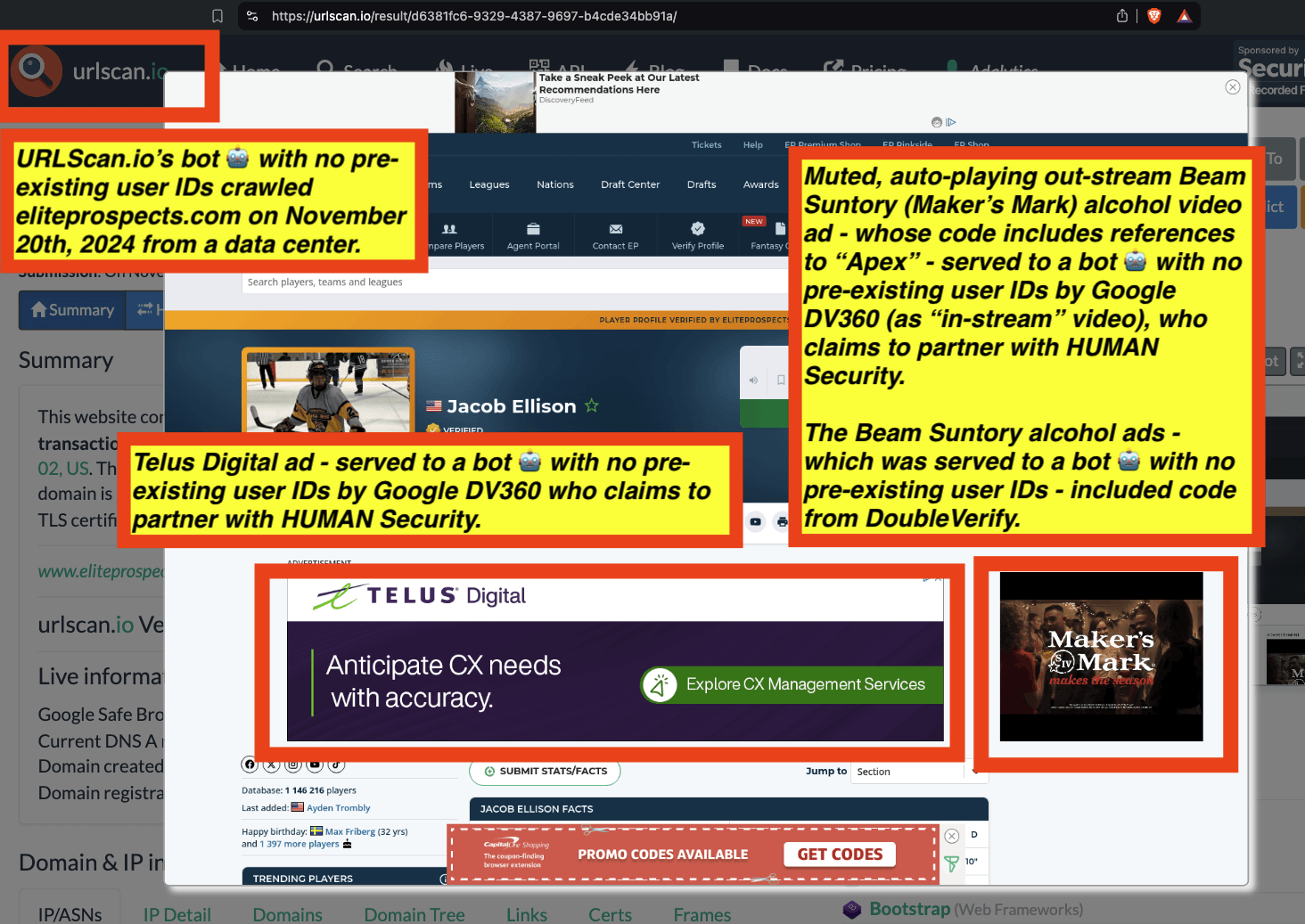

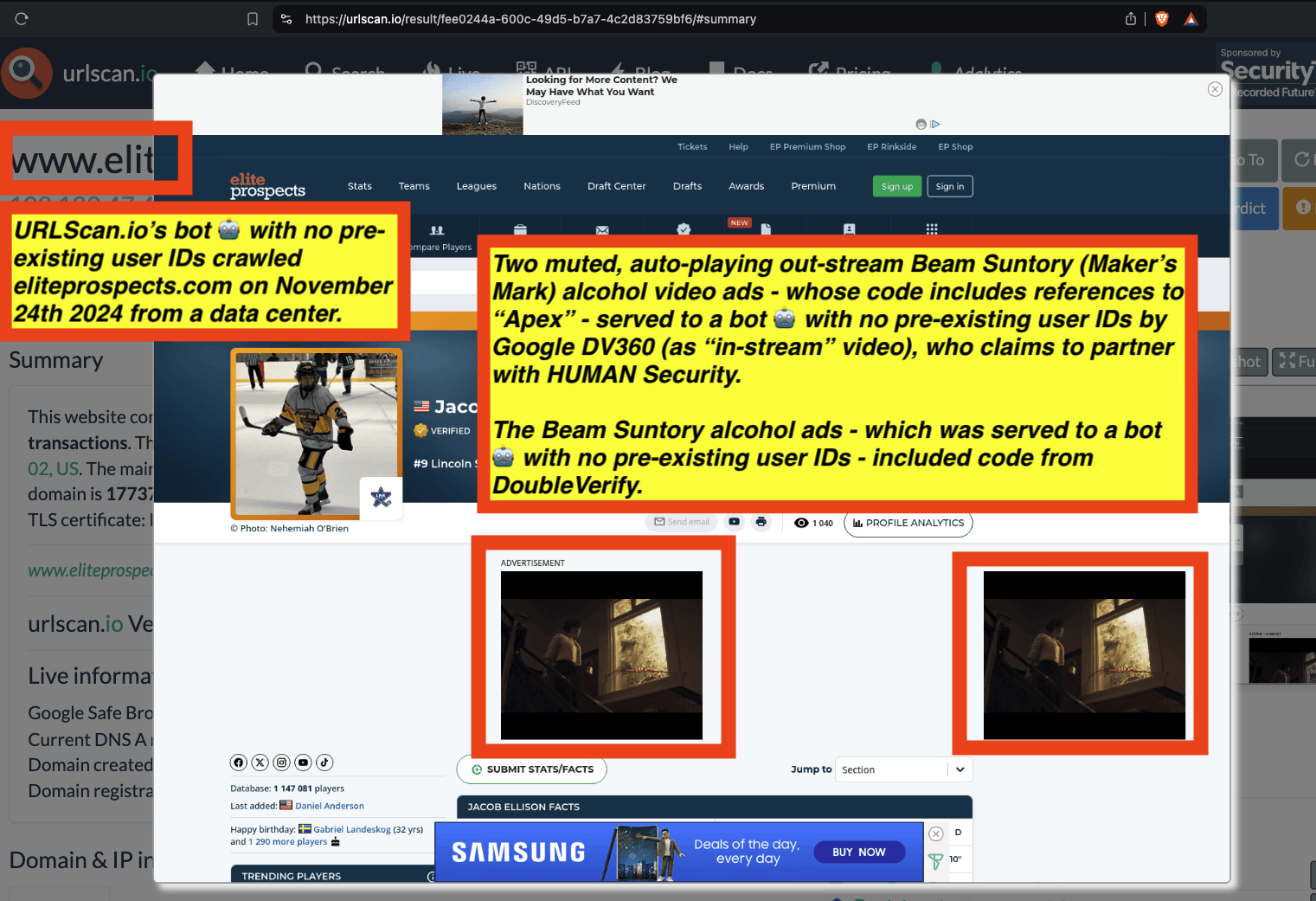

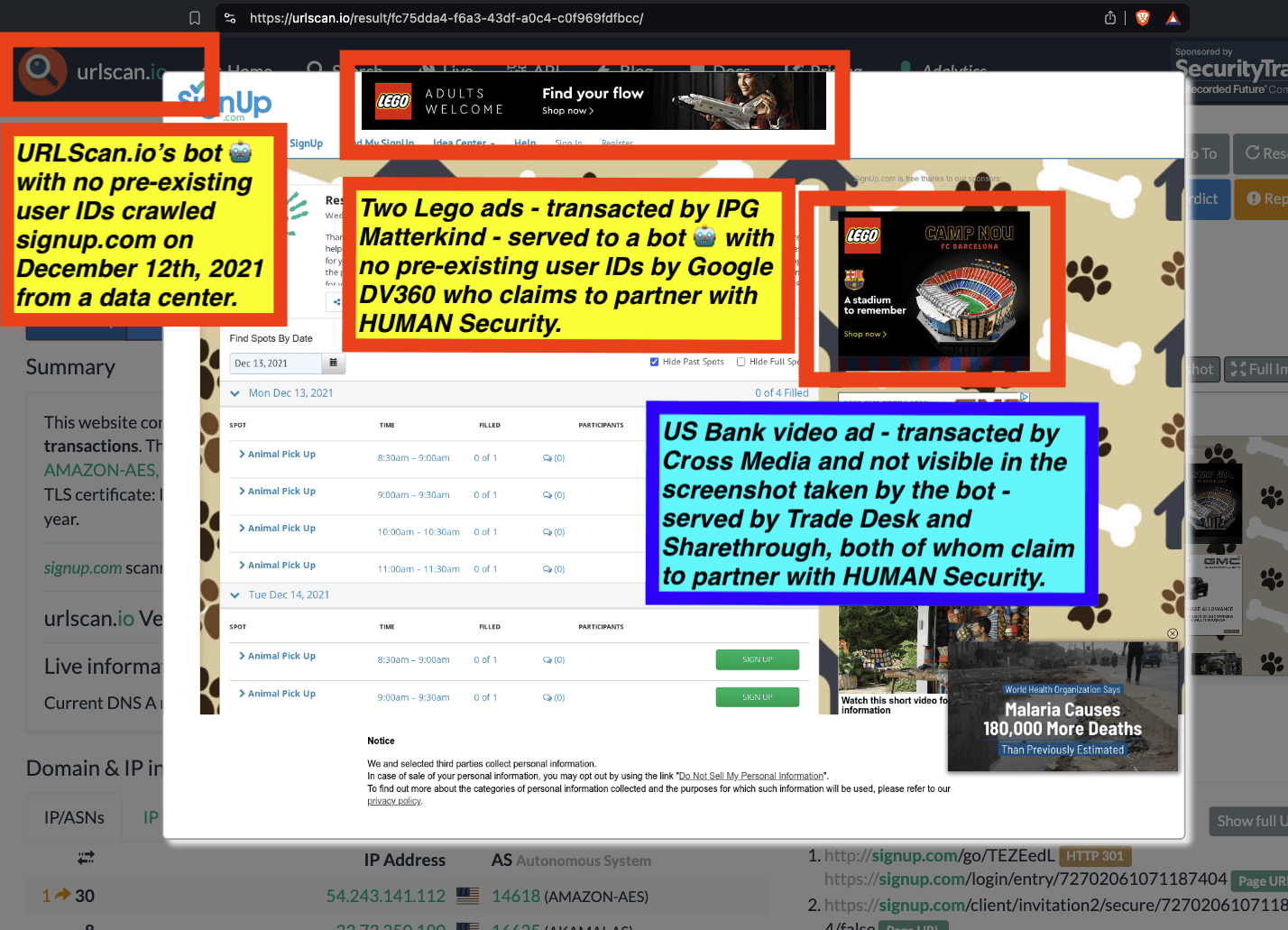

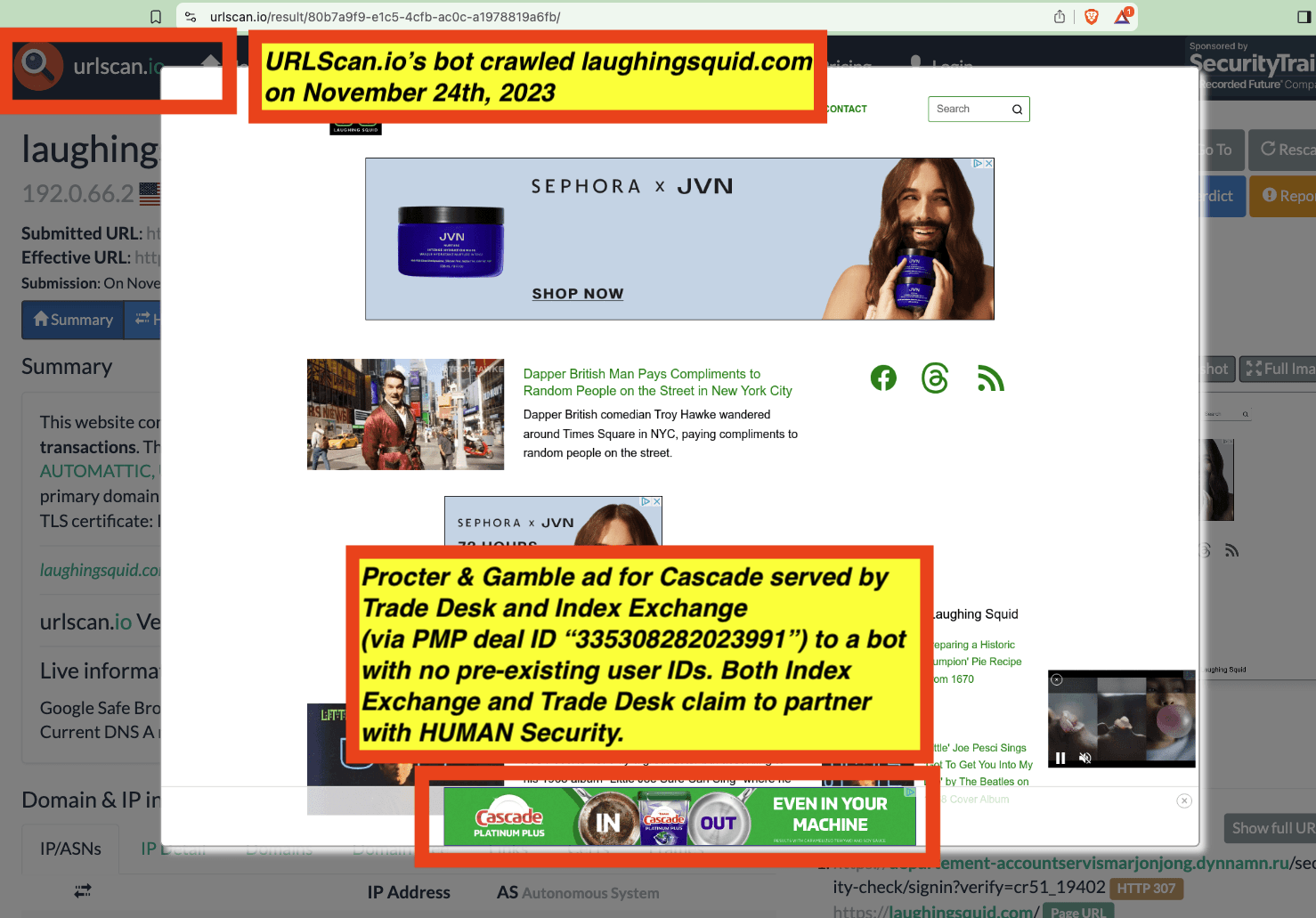

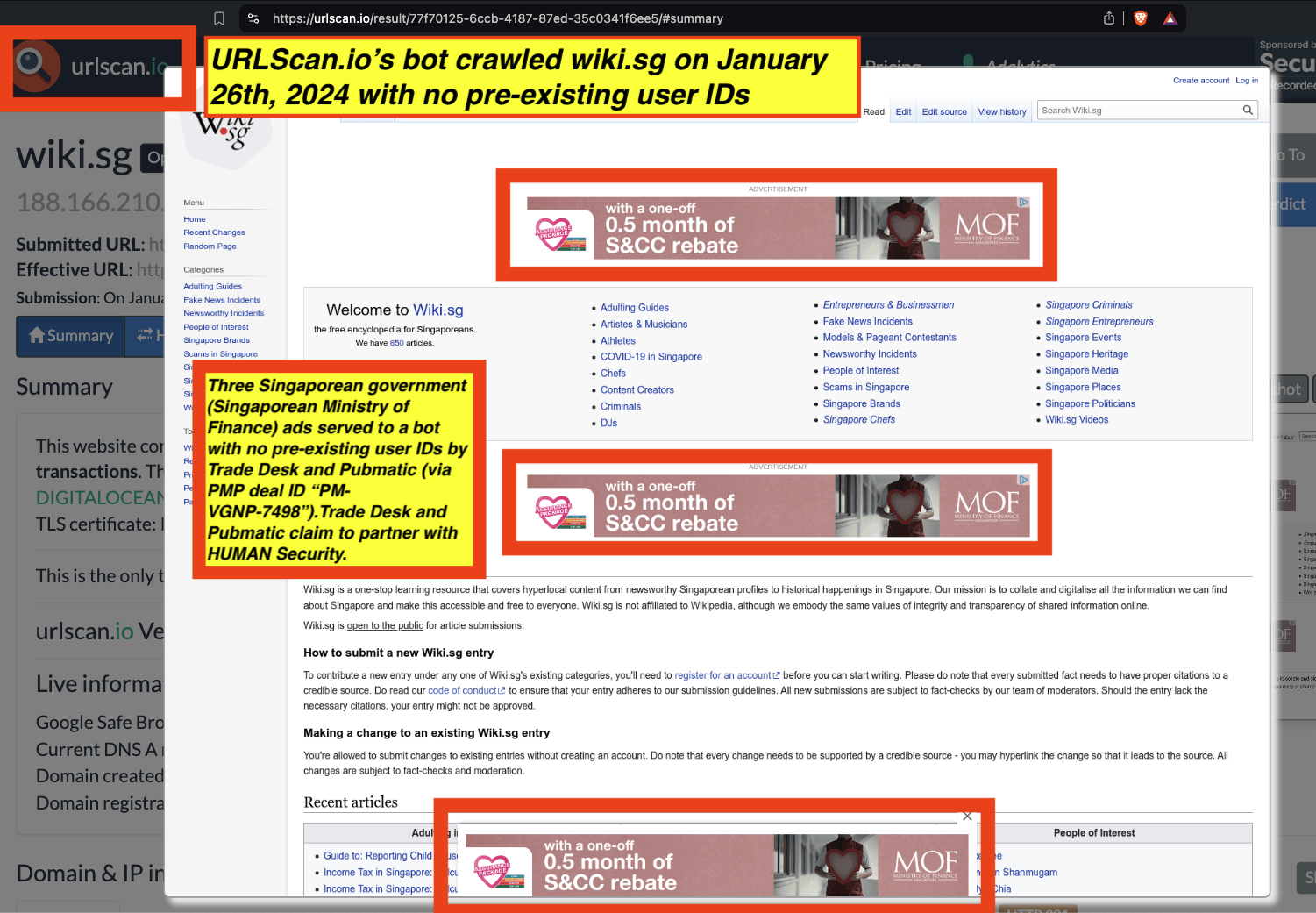

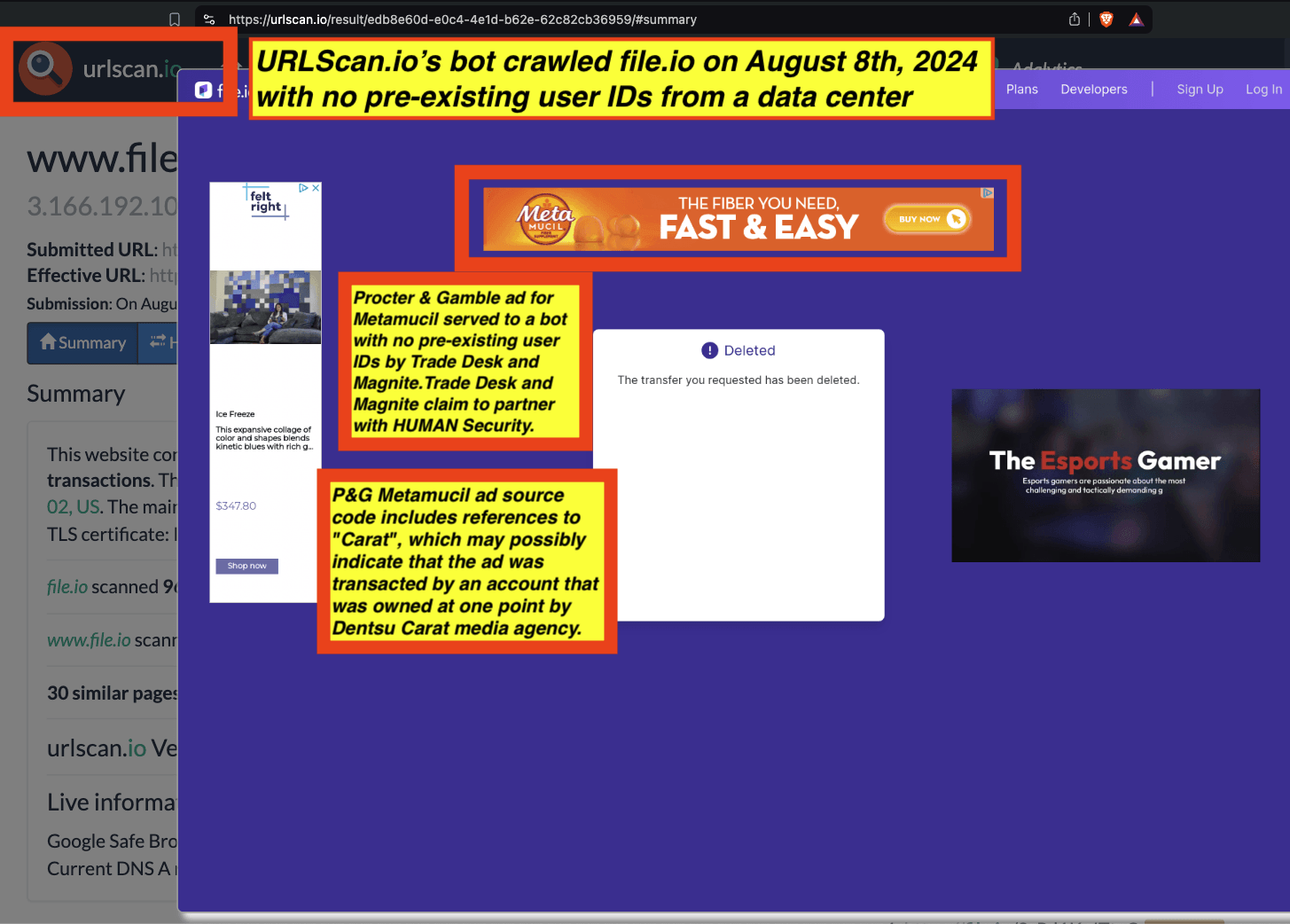

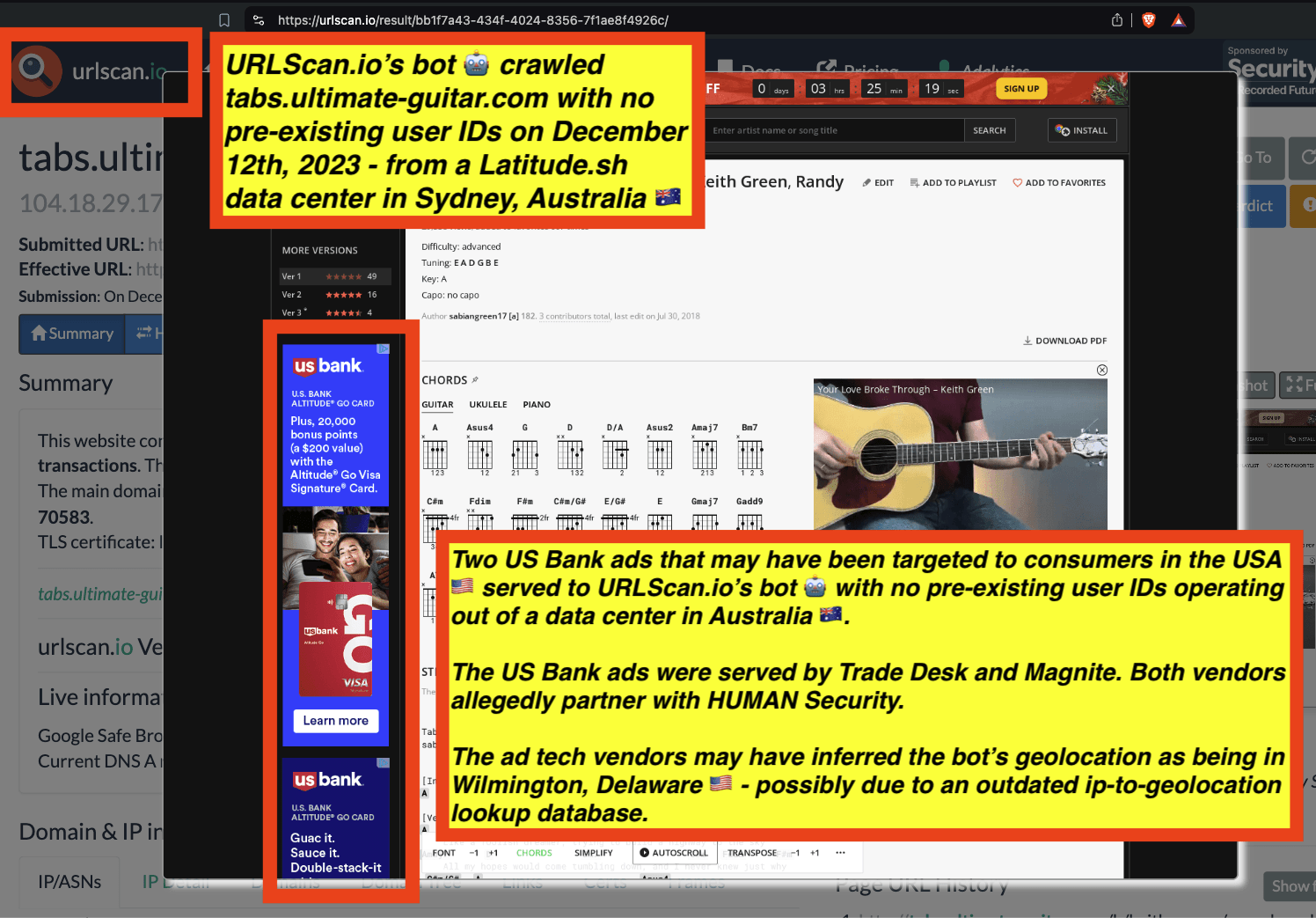

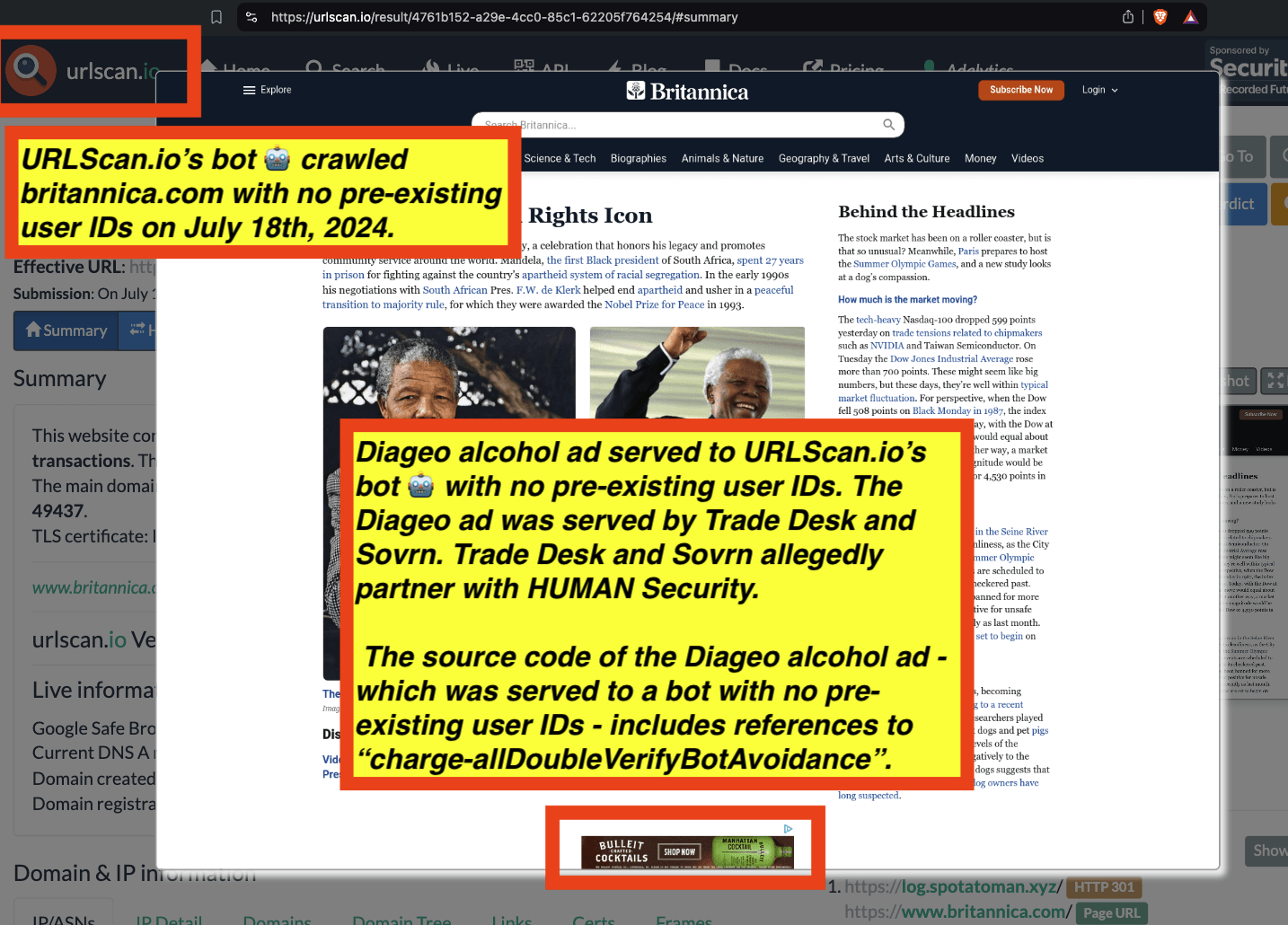

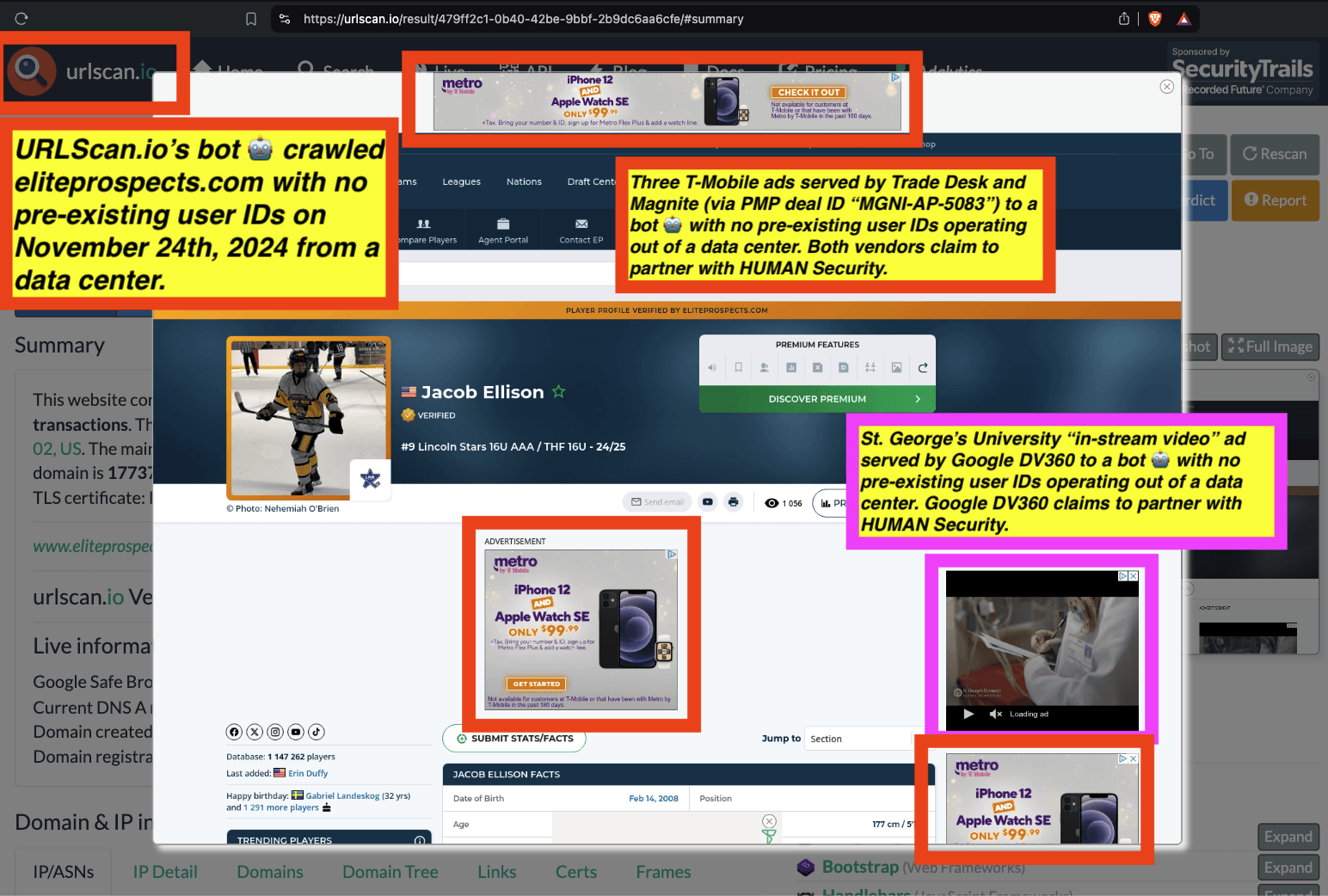

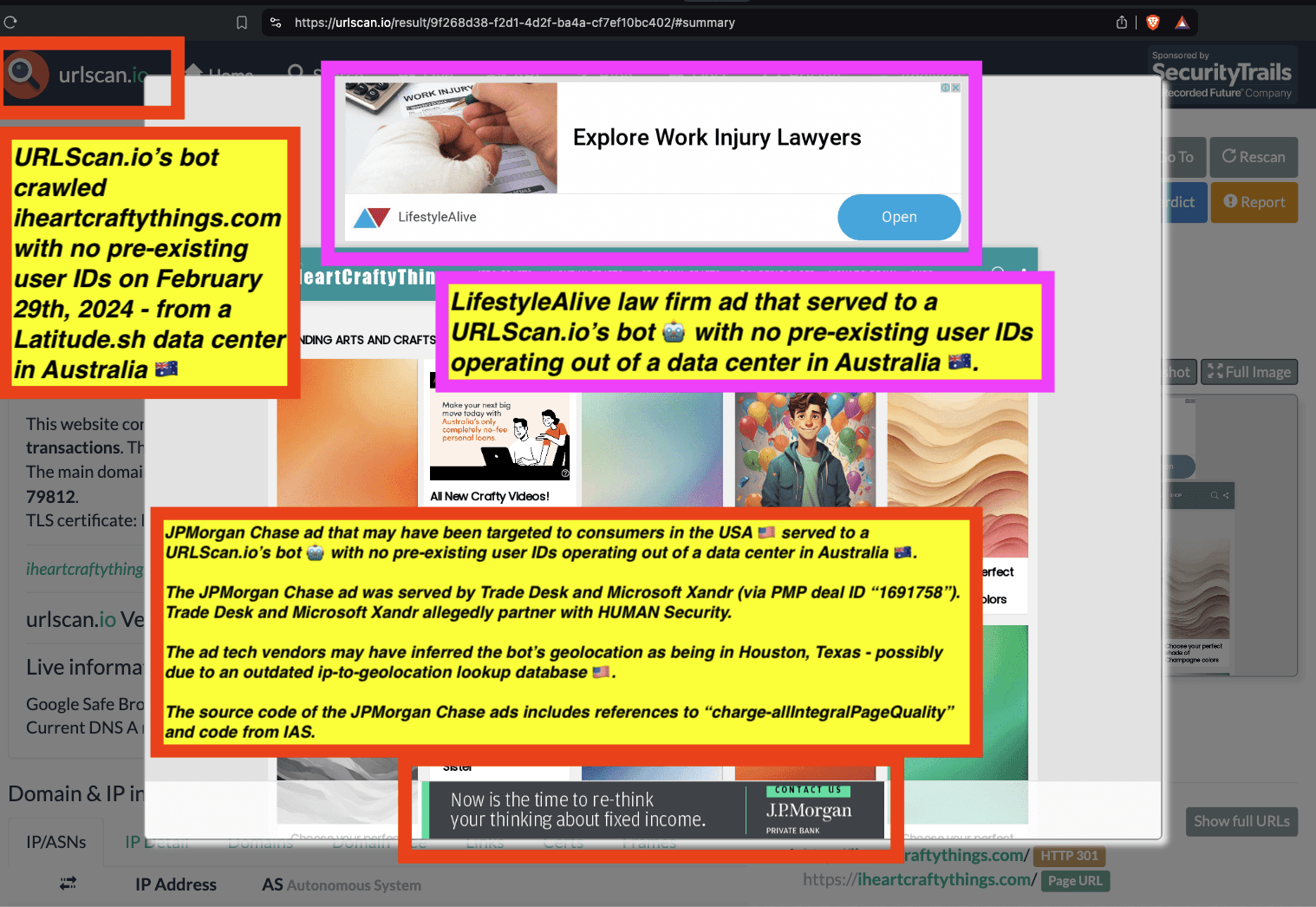

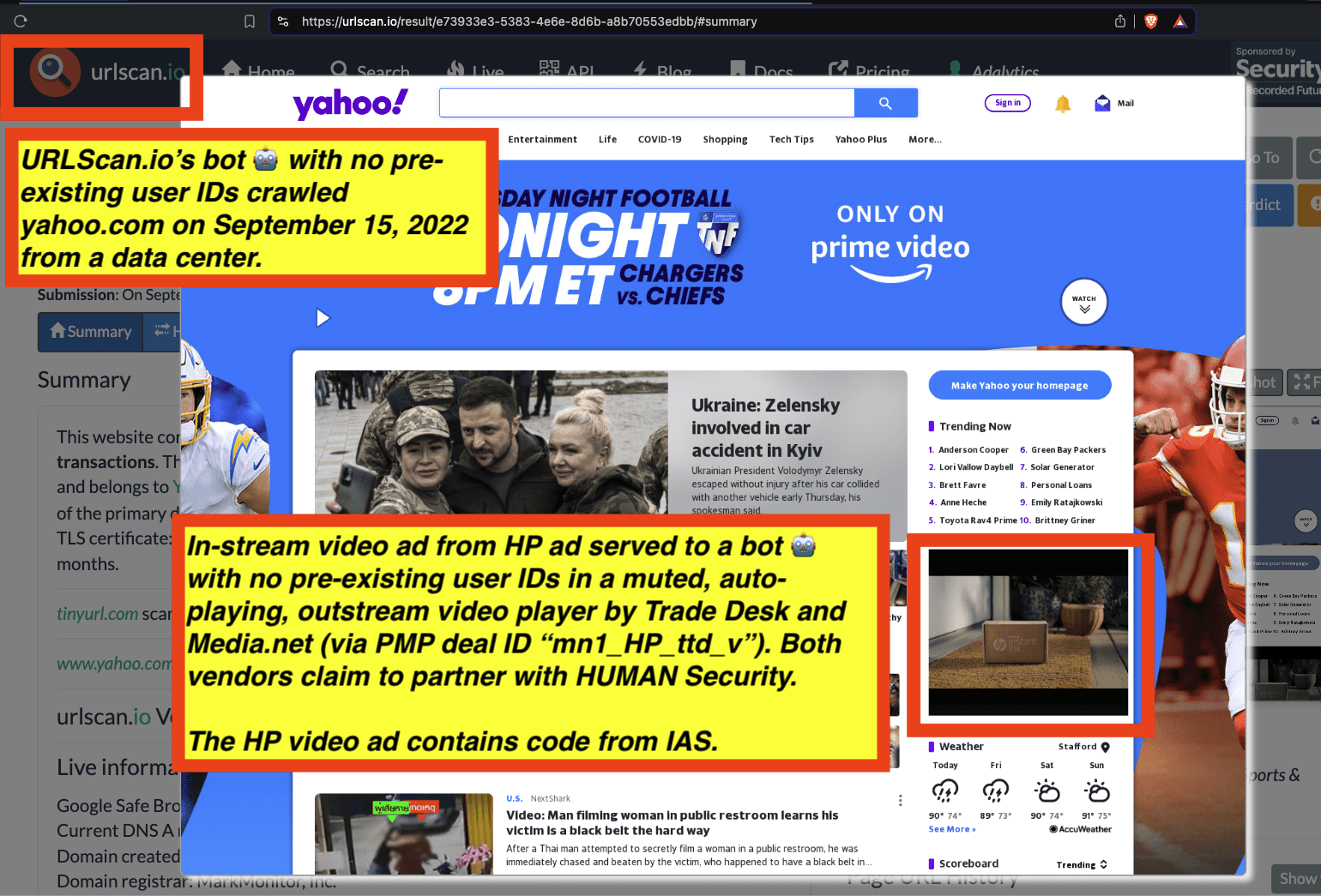

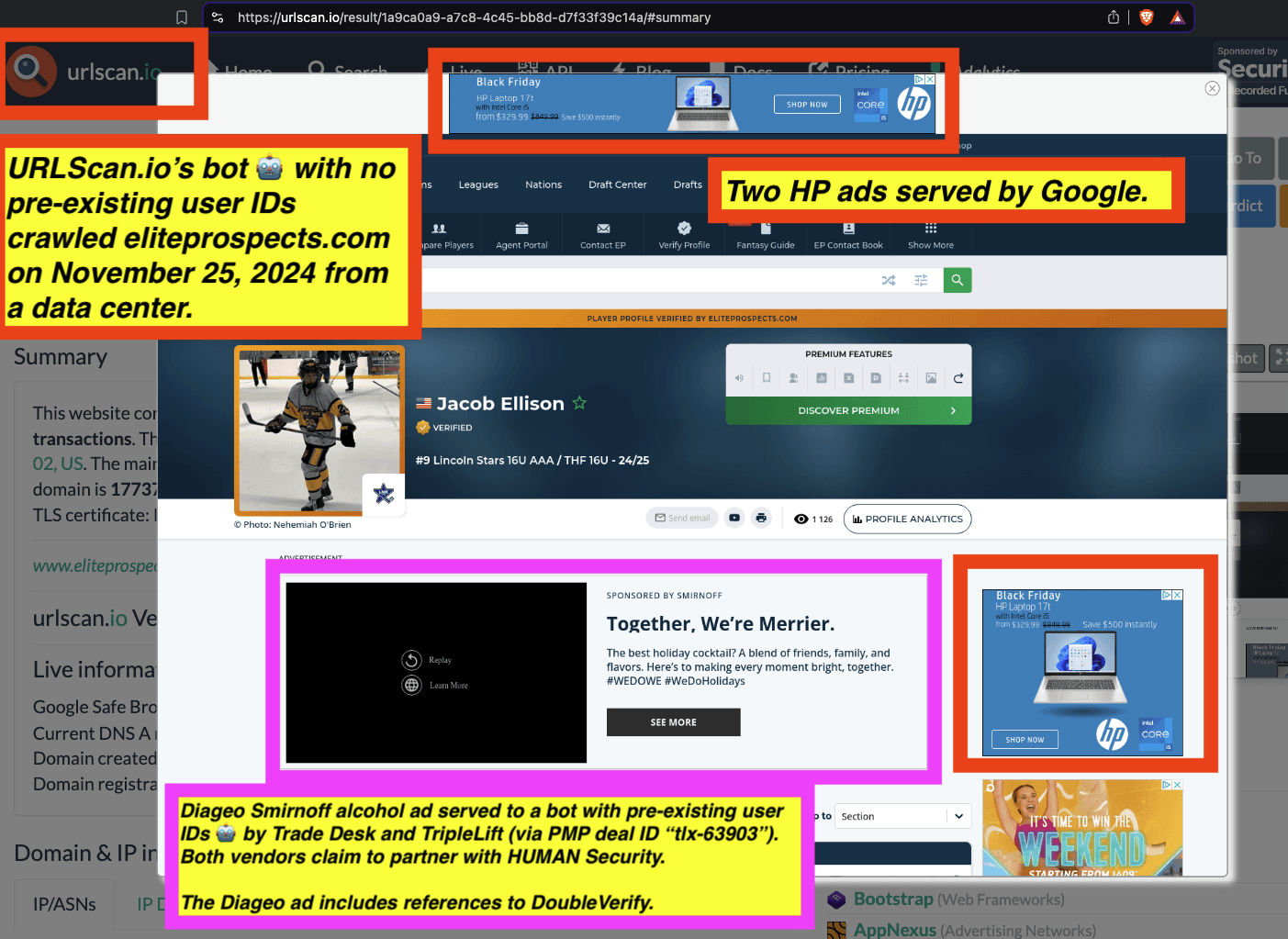

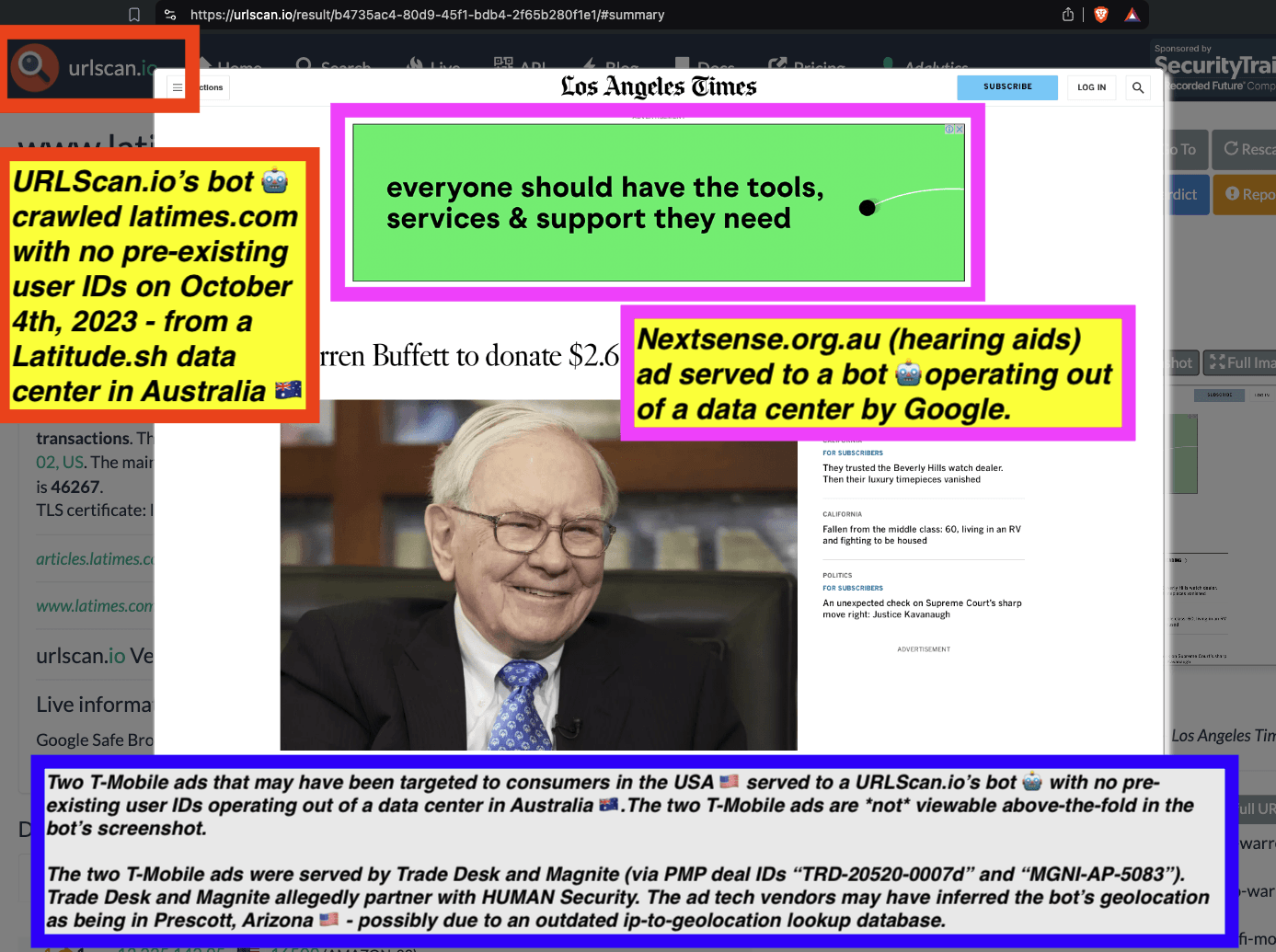

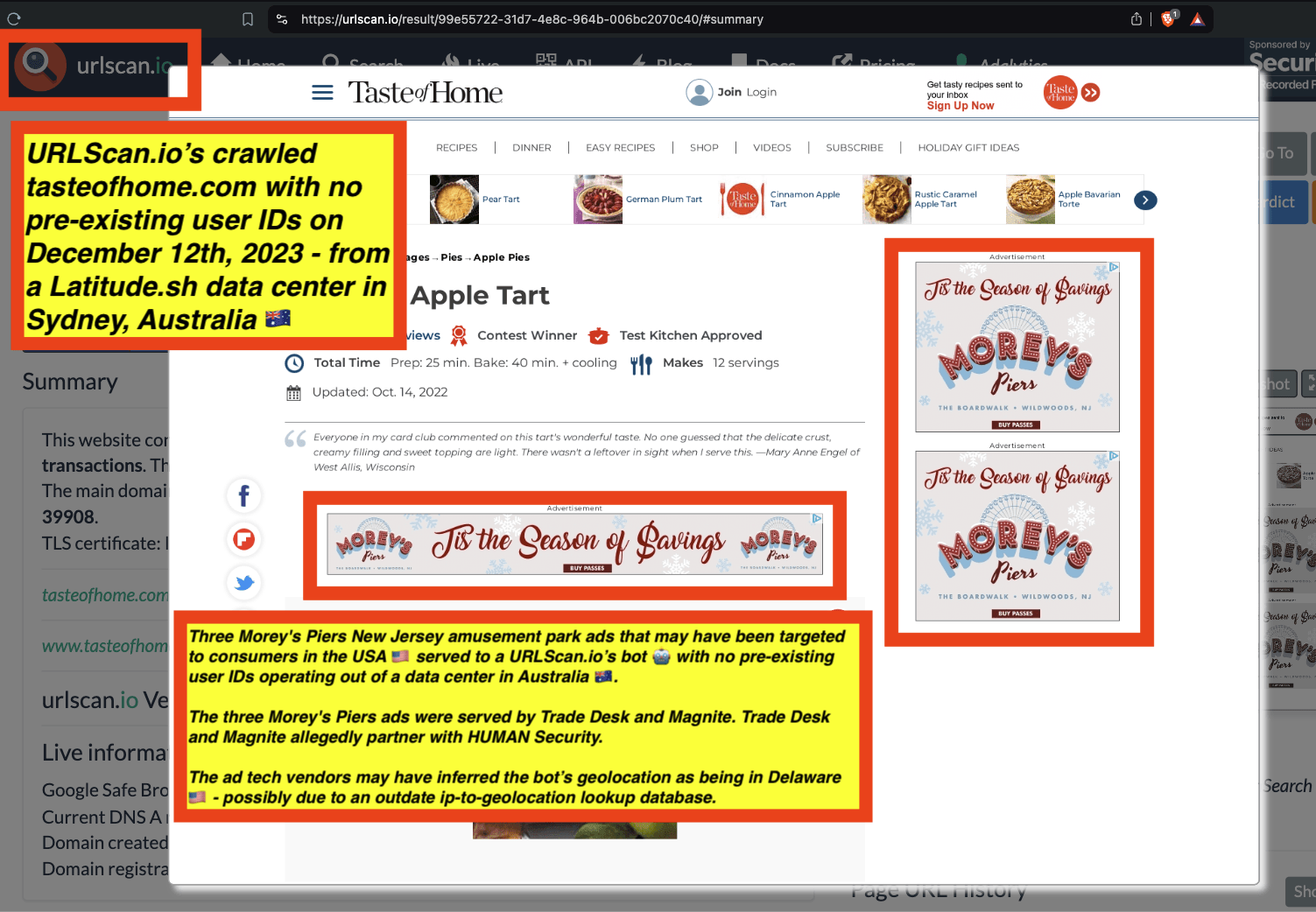

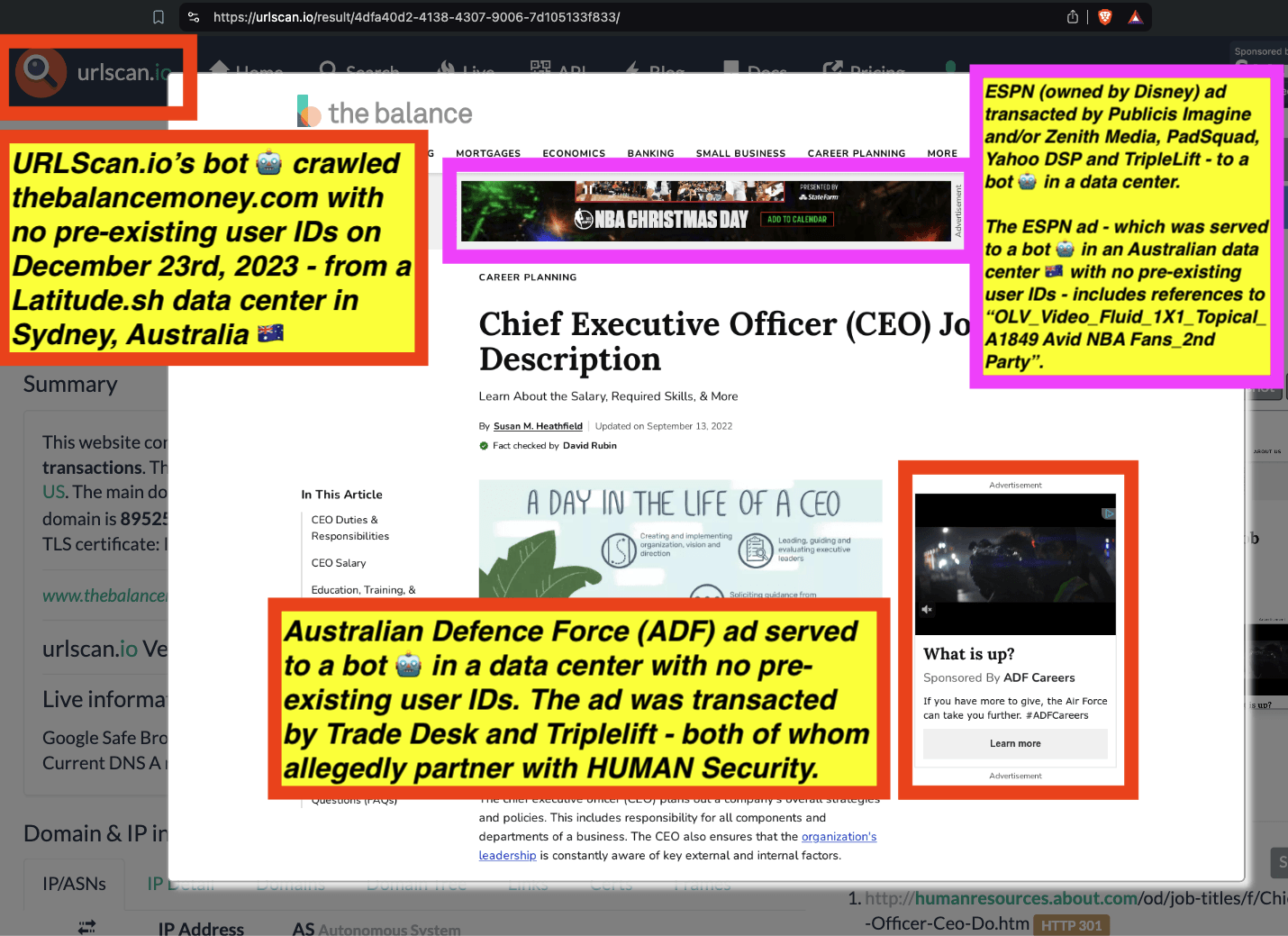

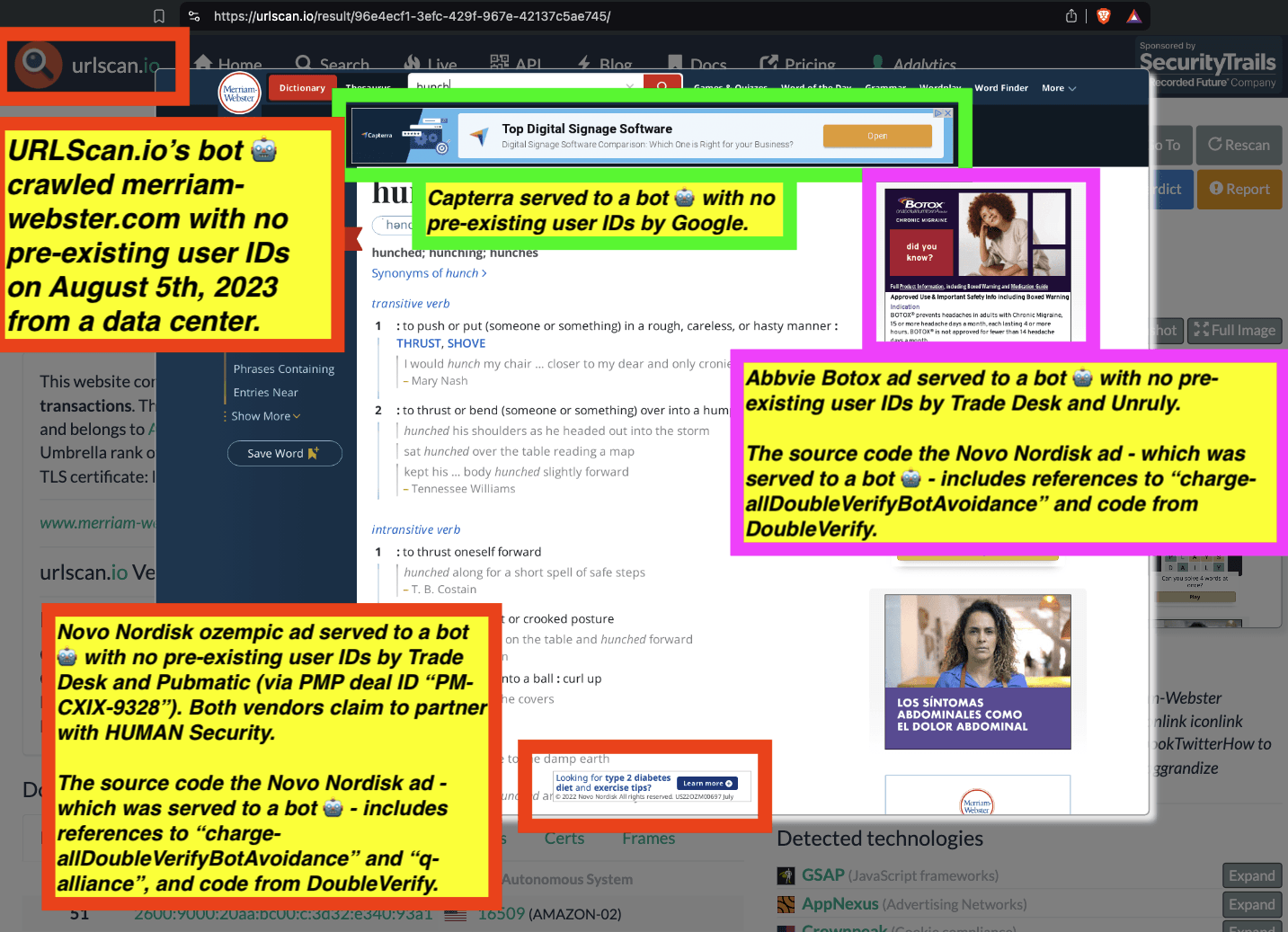

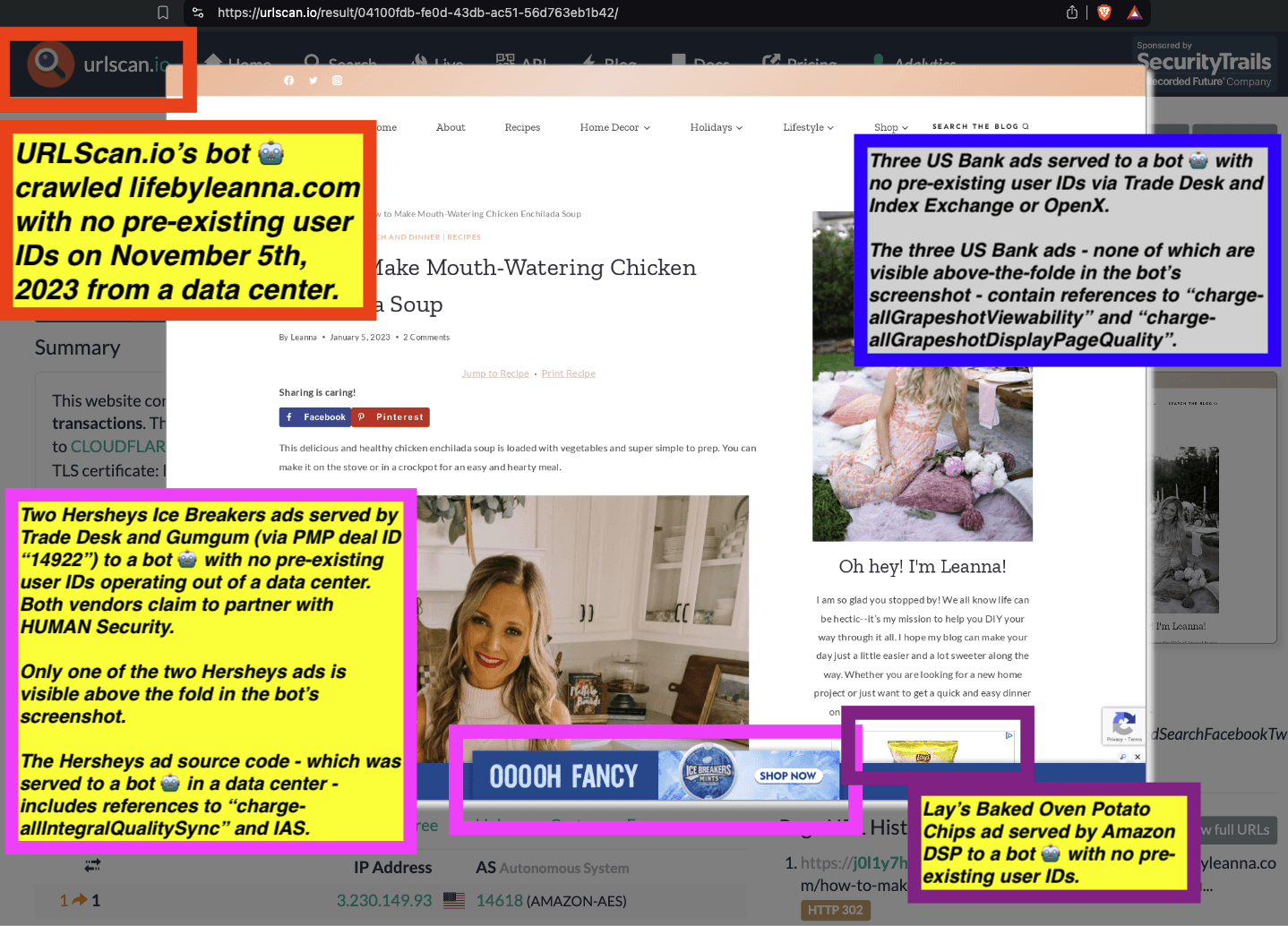

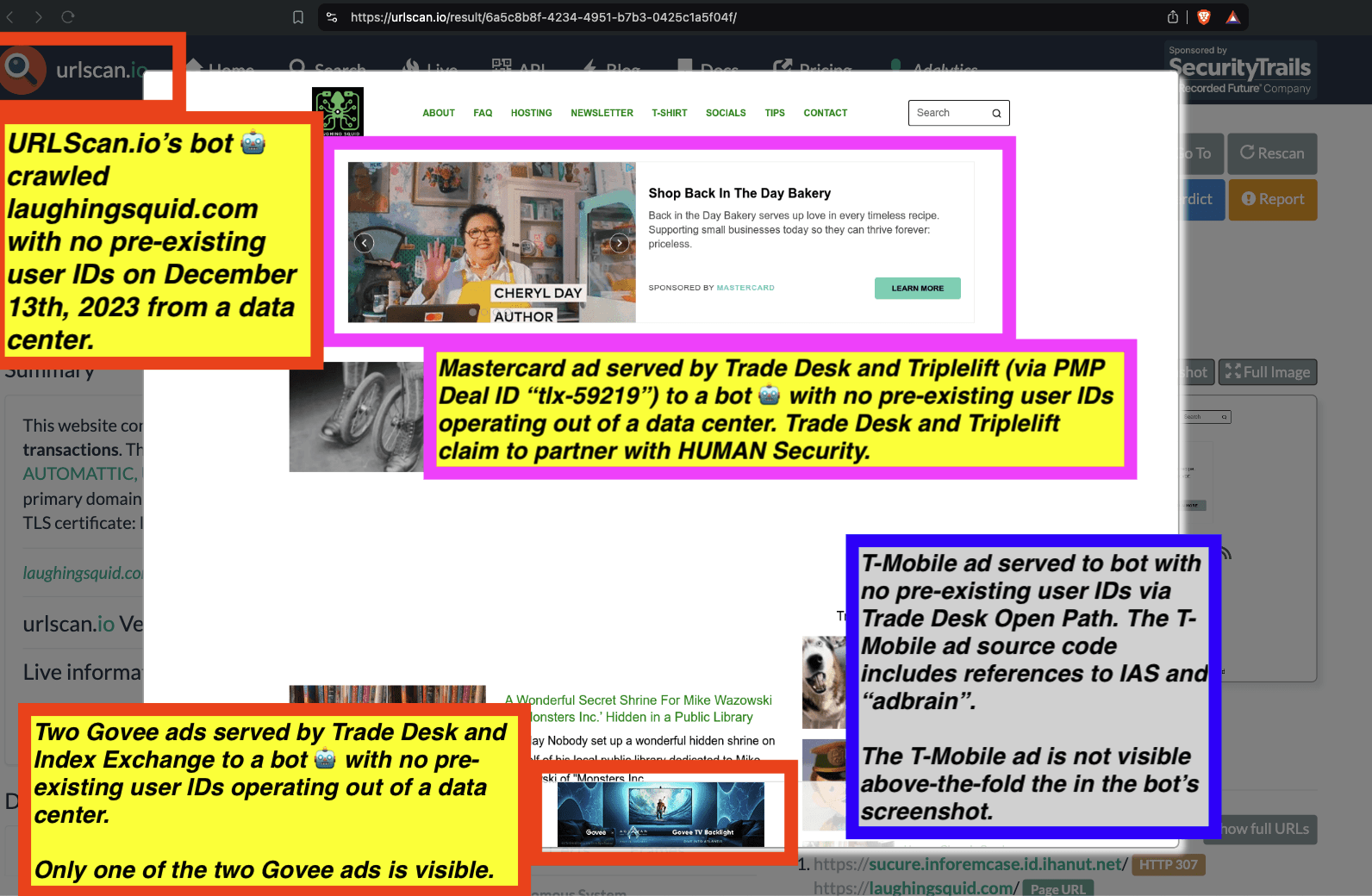

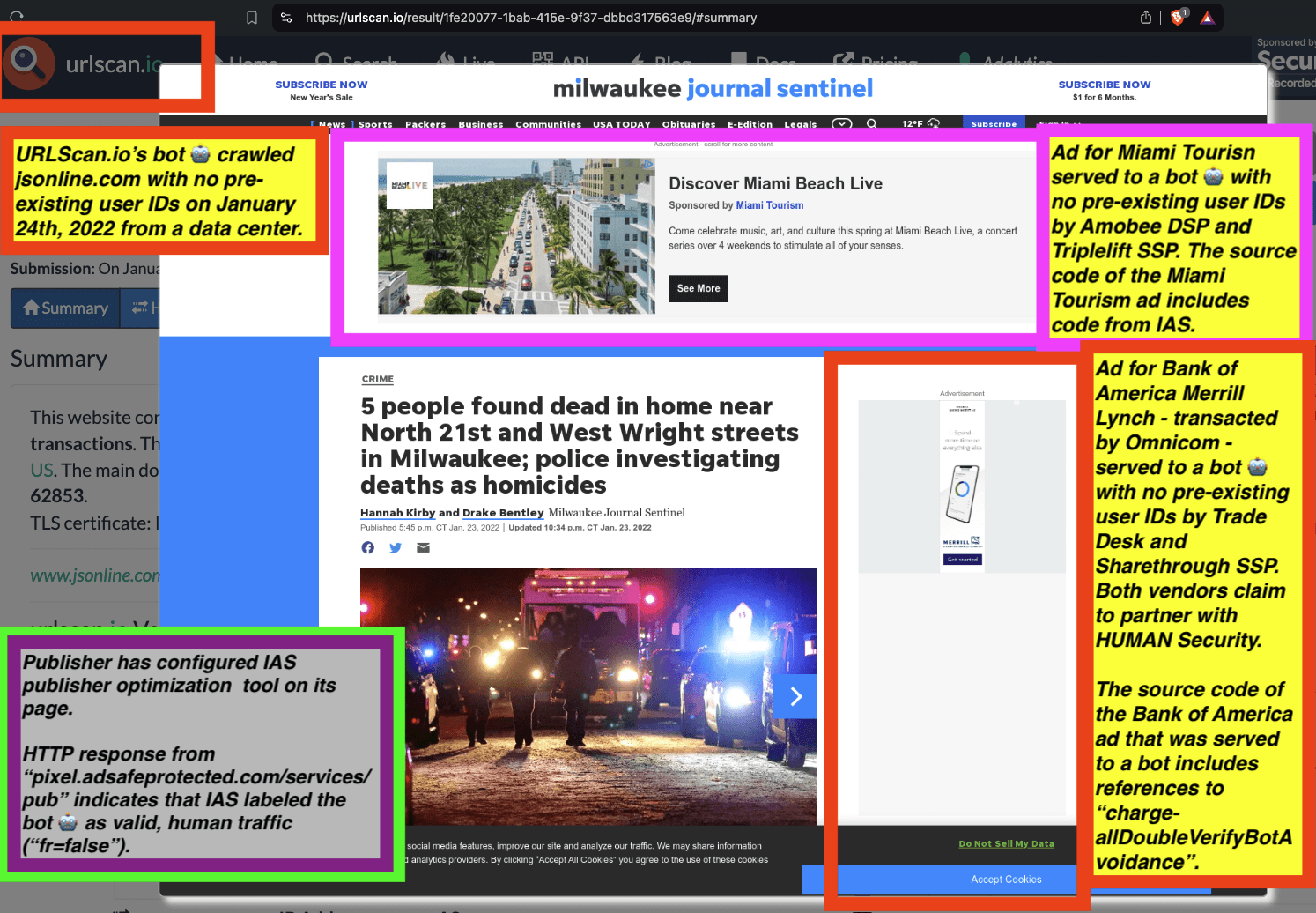

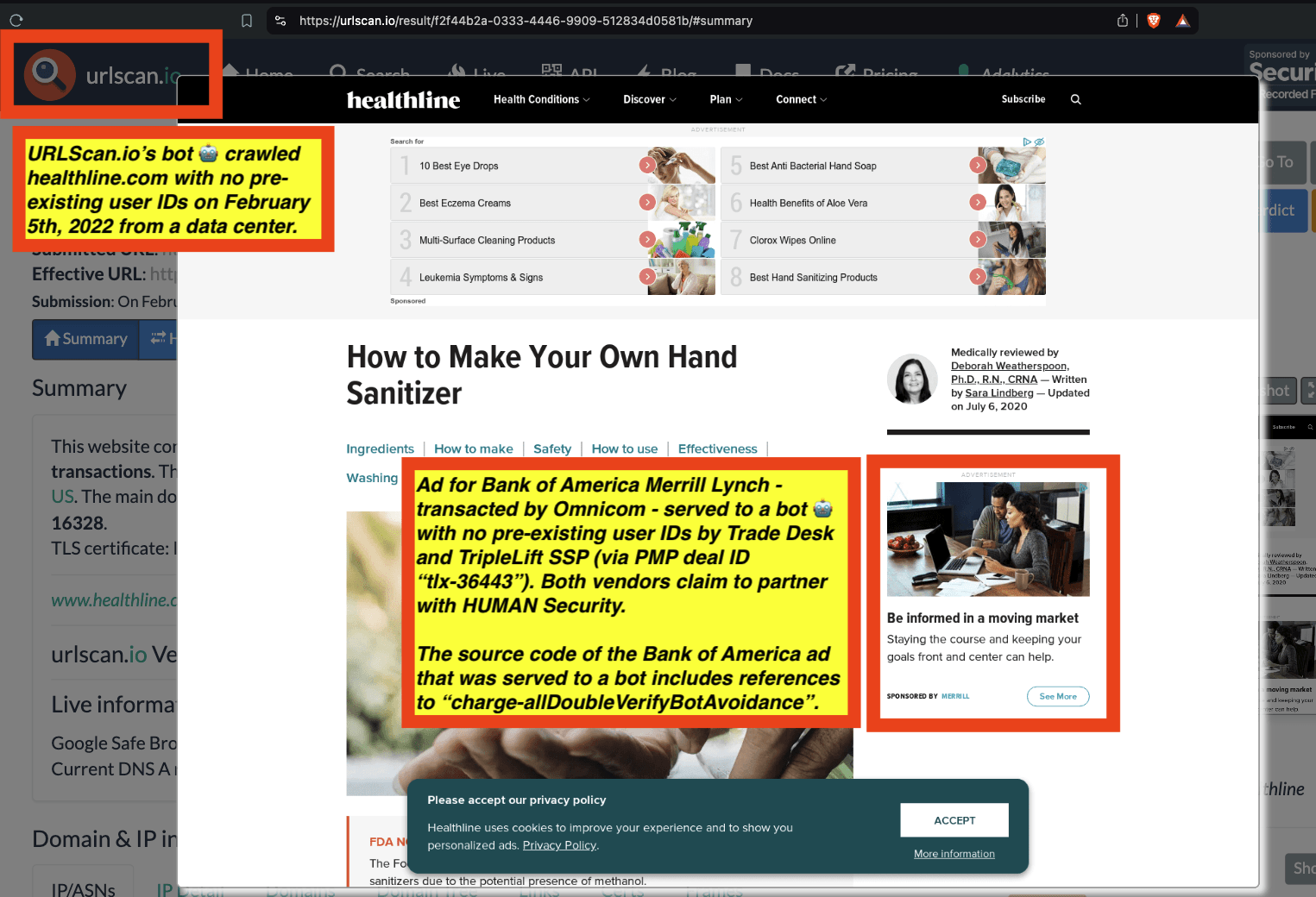

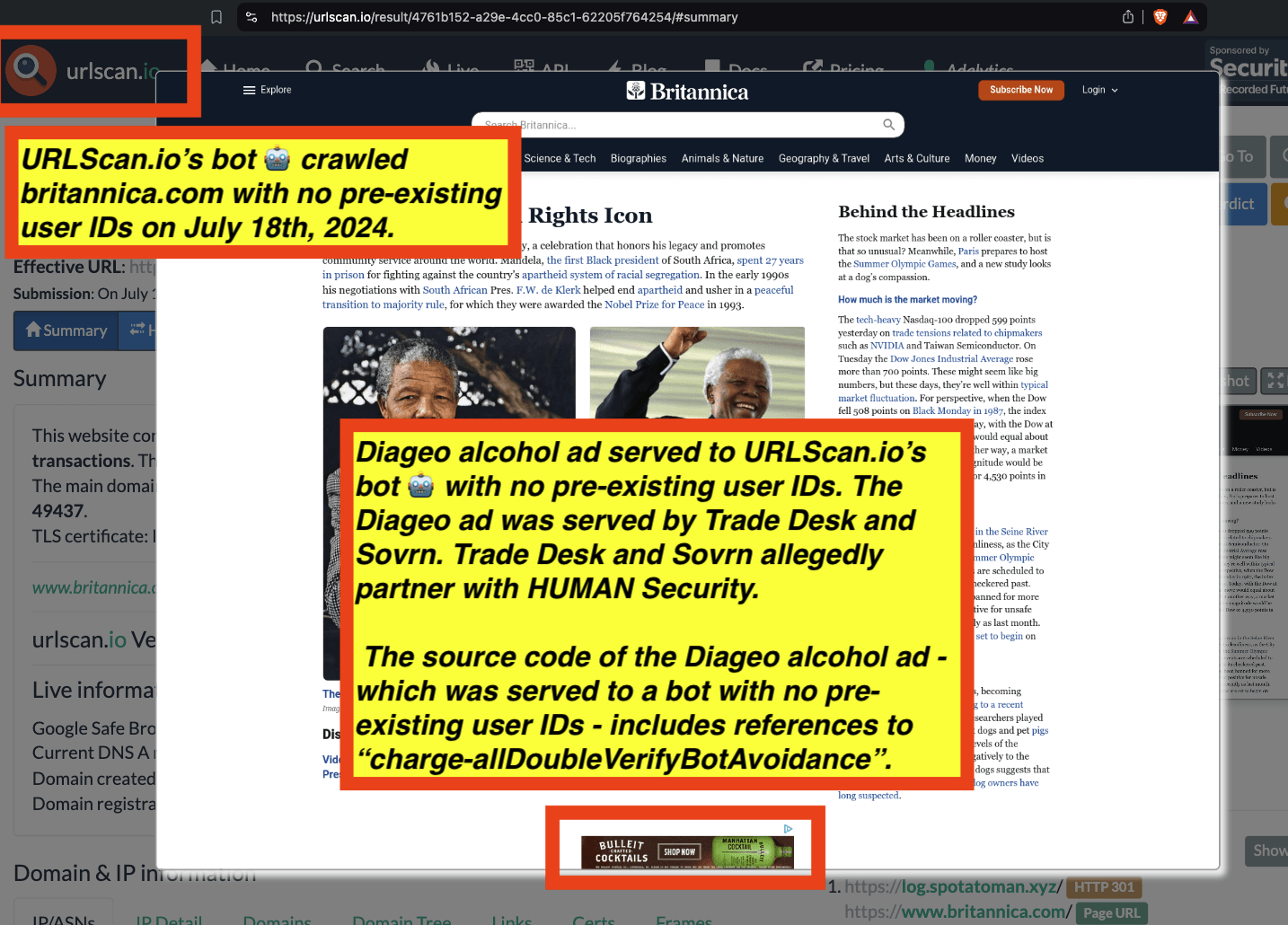

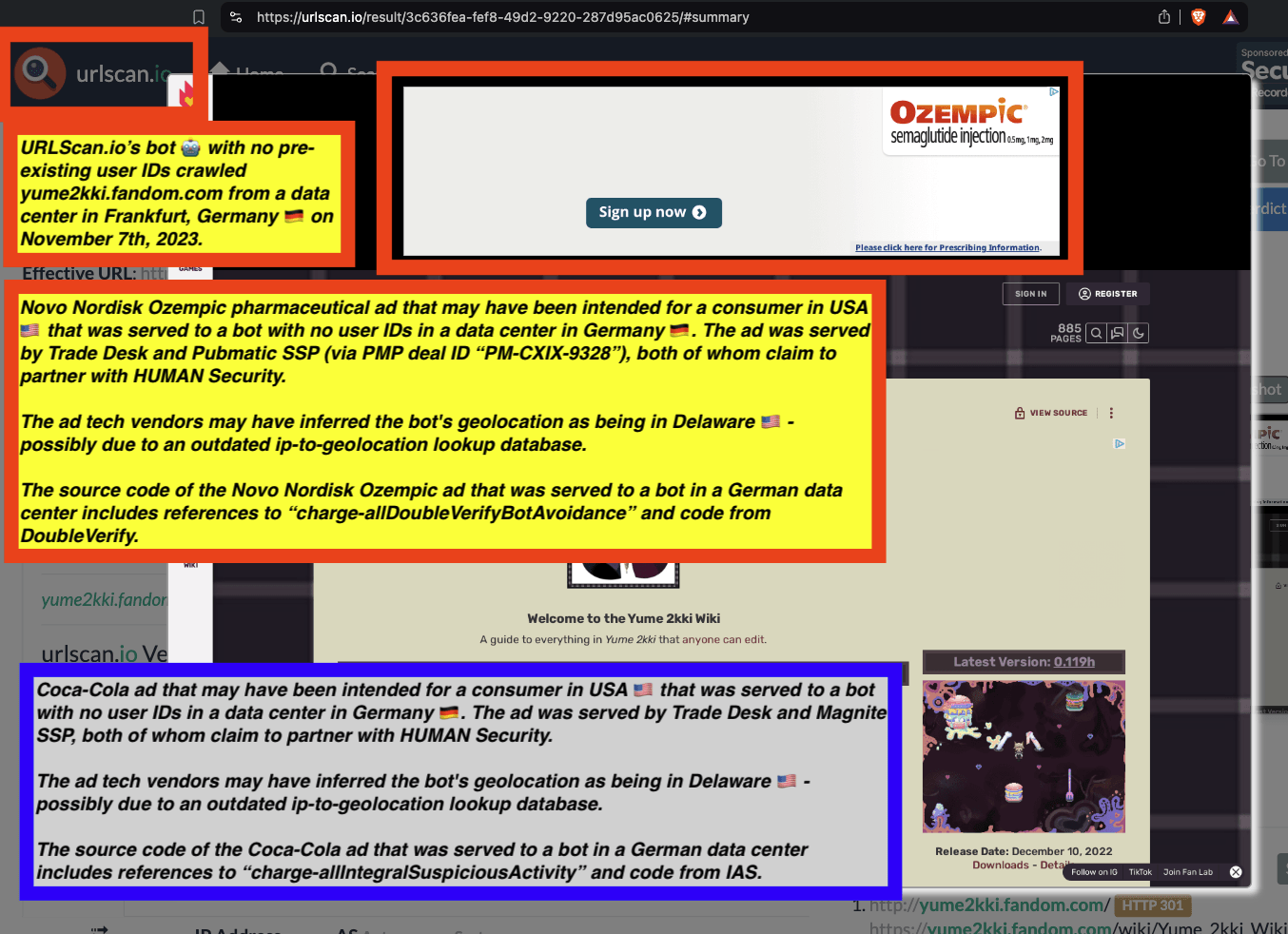

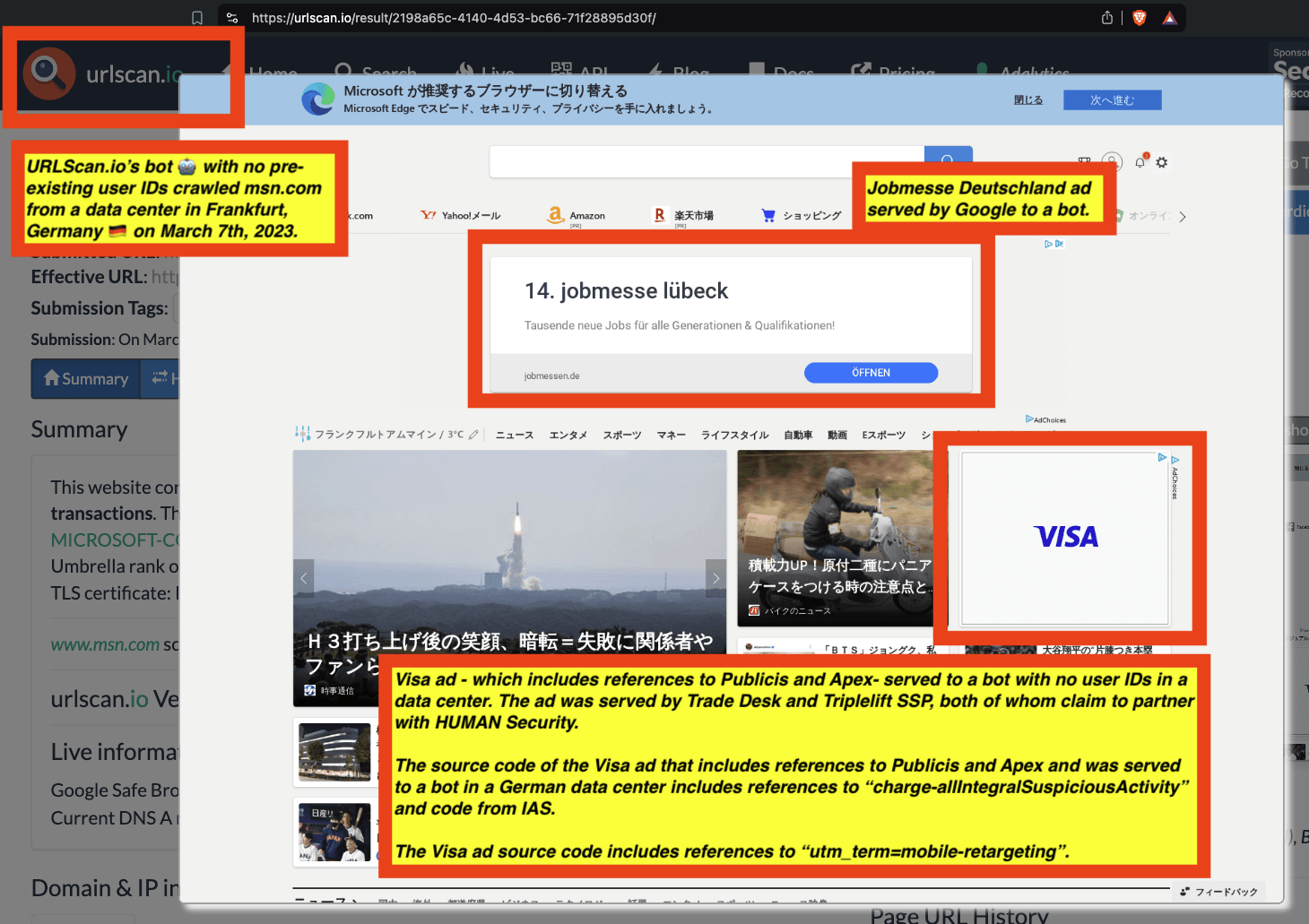

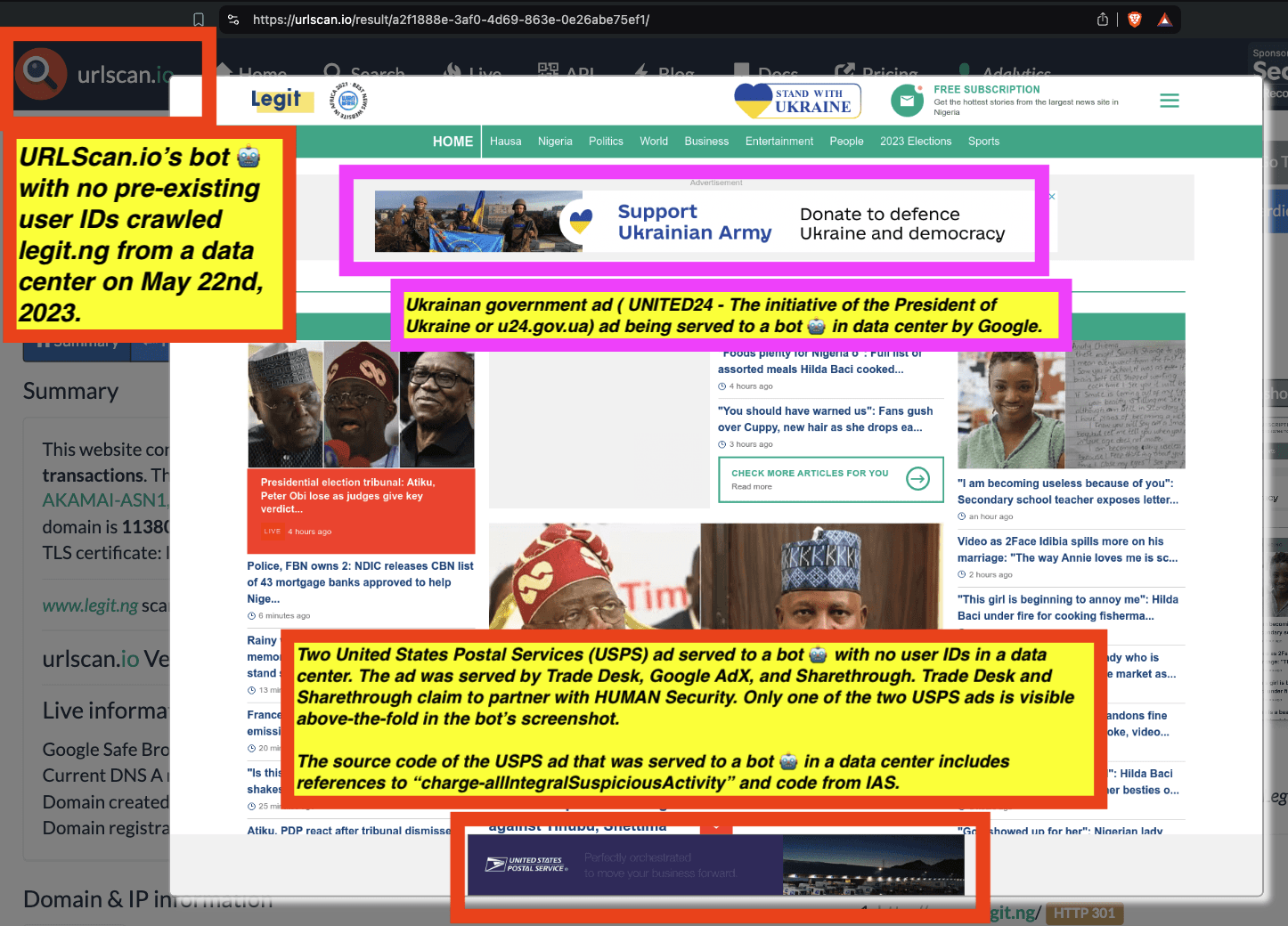

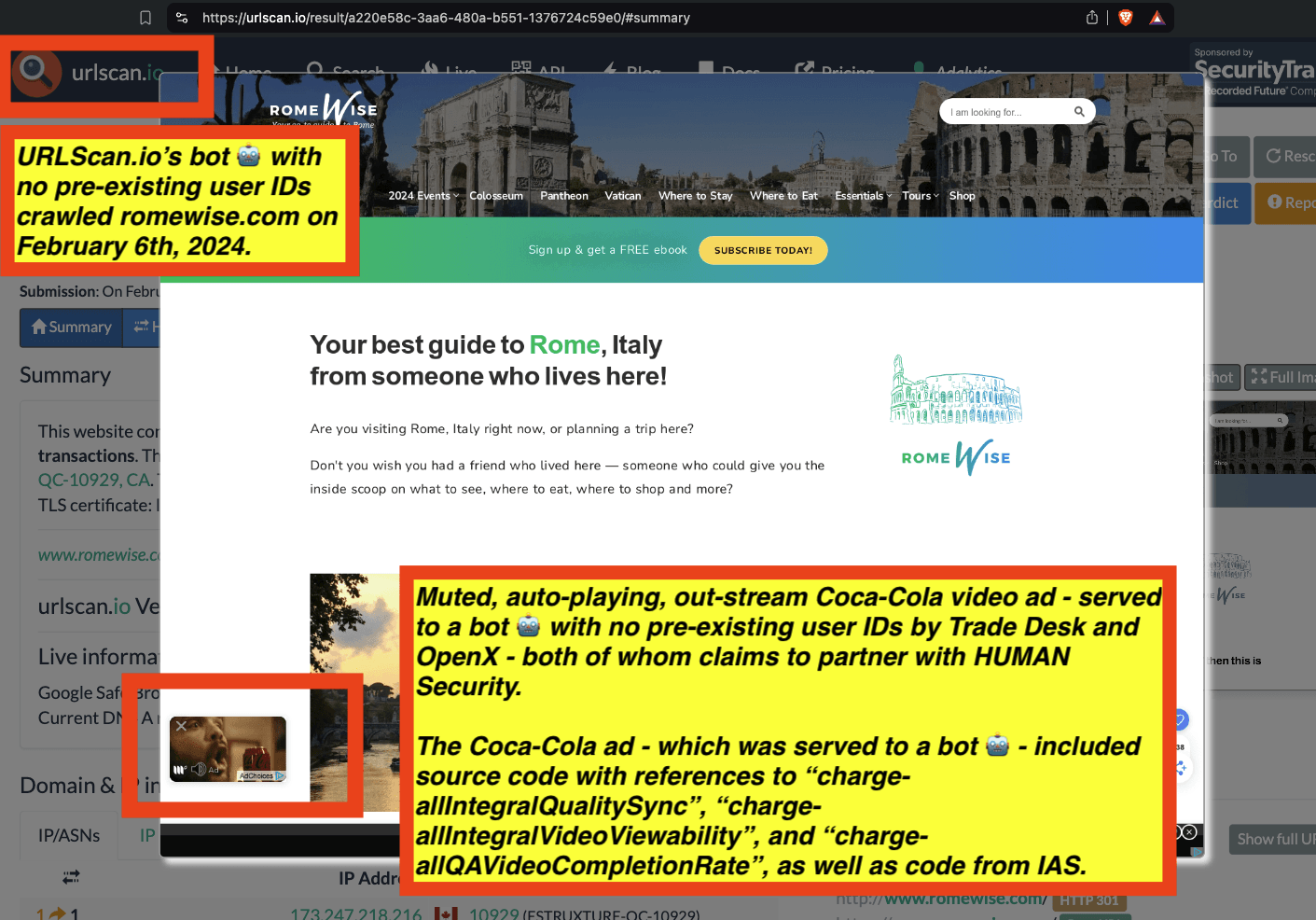

Some ad tech vendors appear to be serving audience targeted or “personalized” ads (including retargeting ads) to bots in data centers that have no stateful attributes, cookies, session storage, fingerprints, user IDs, or browsing history.

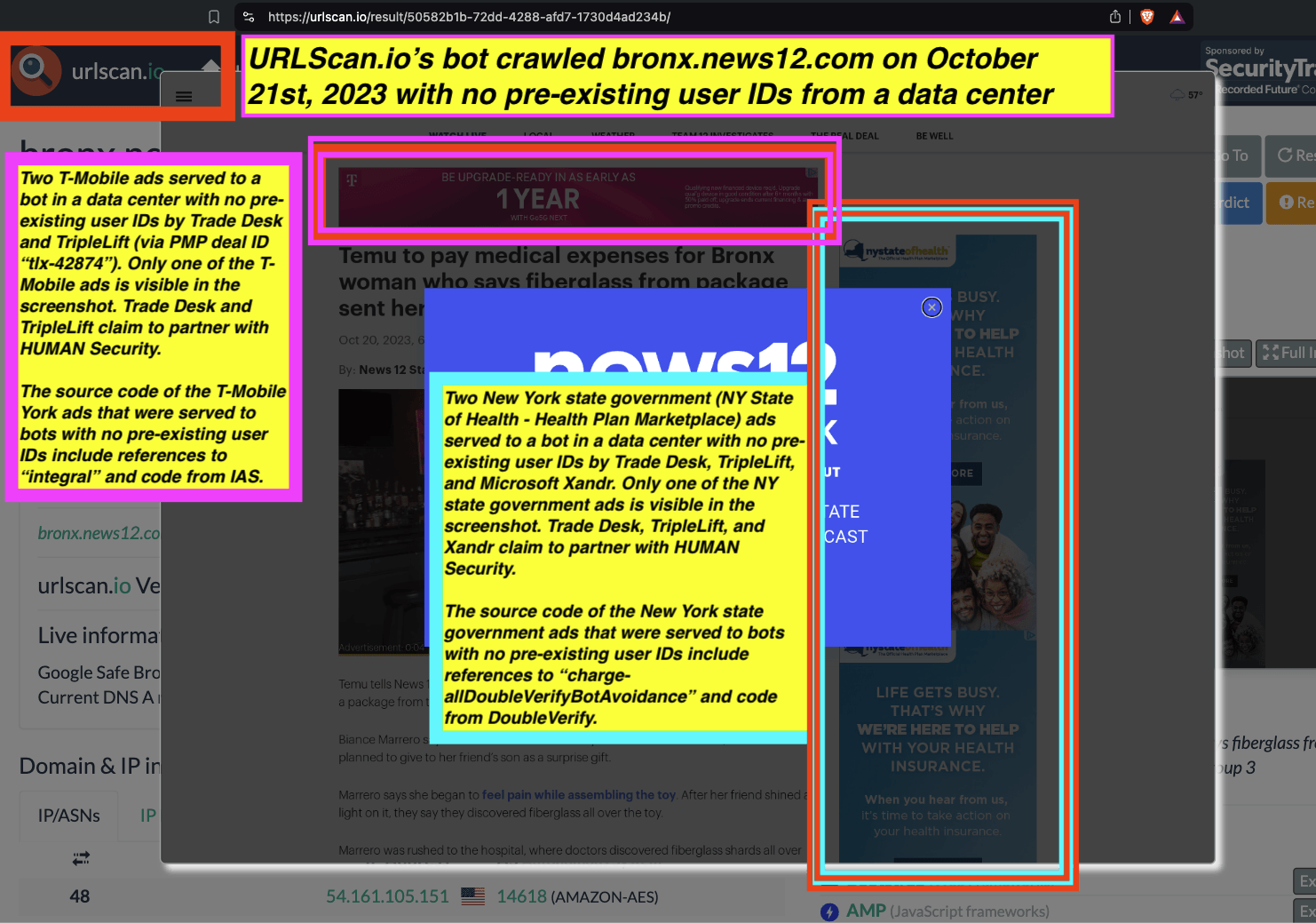

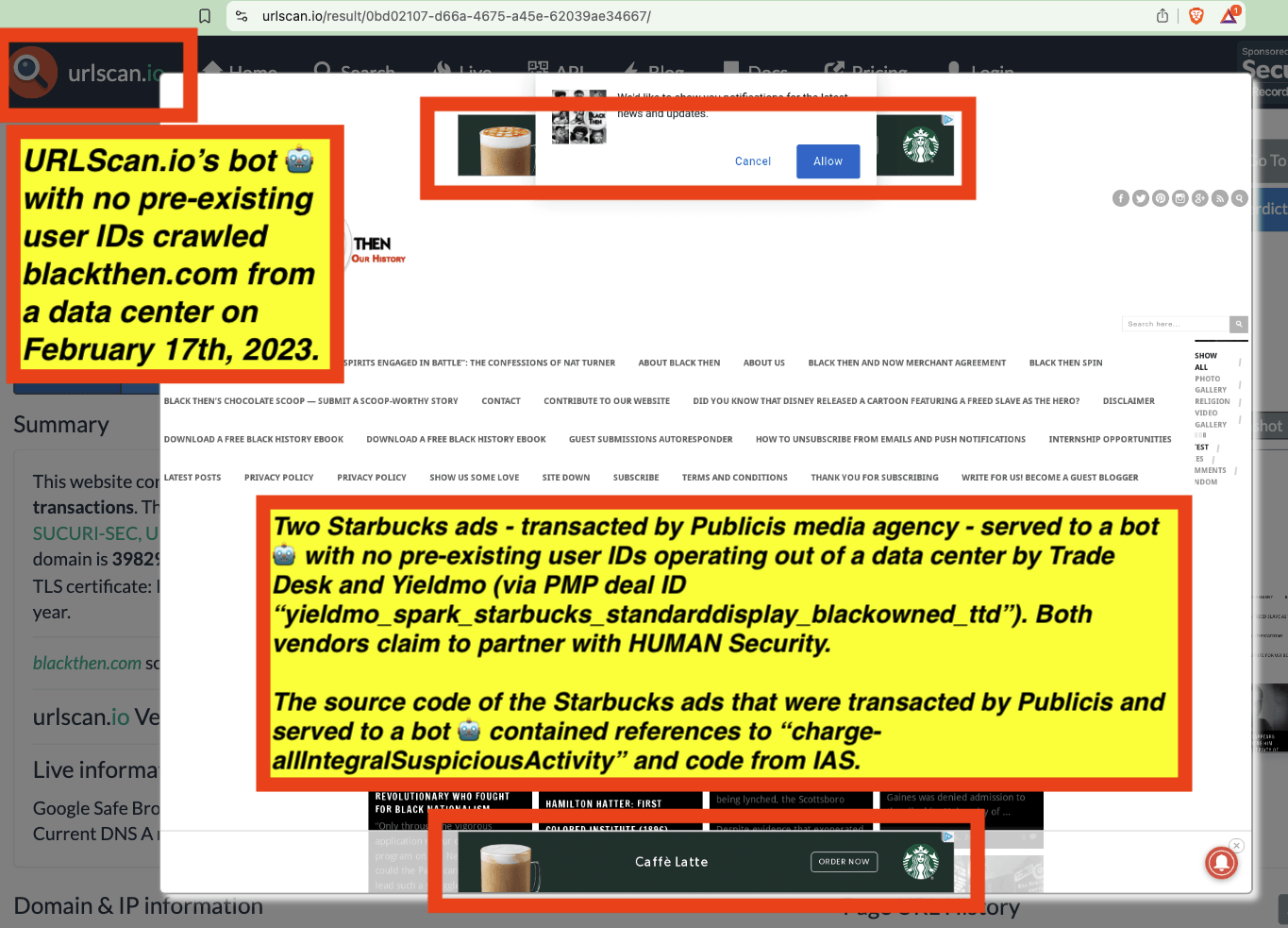

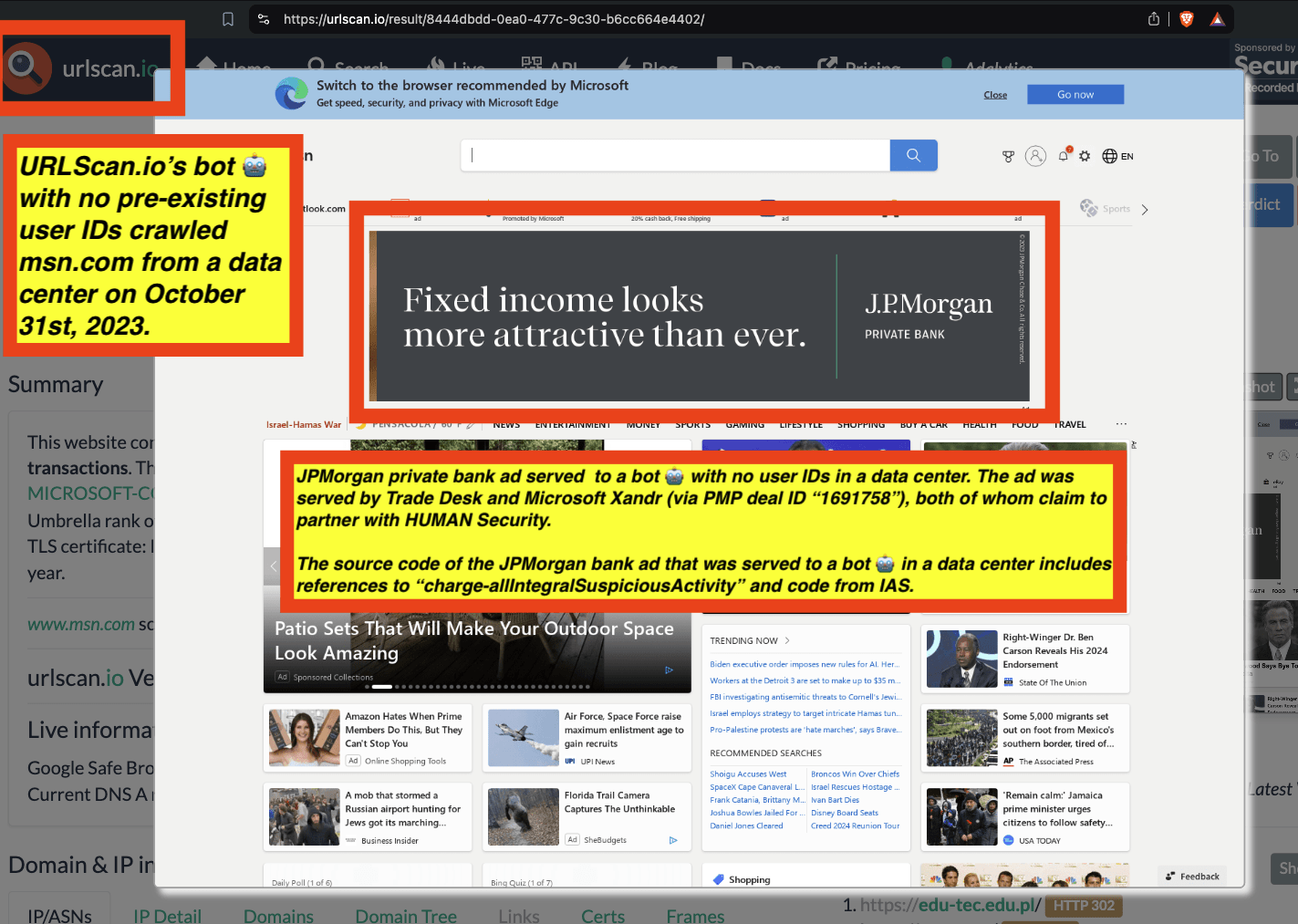

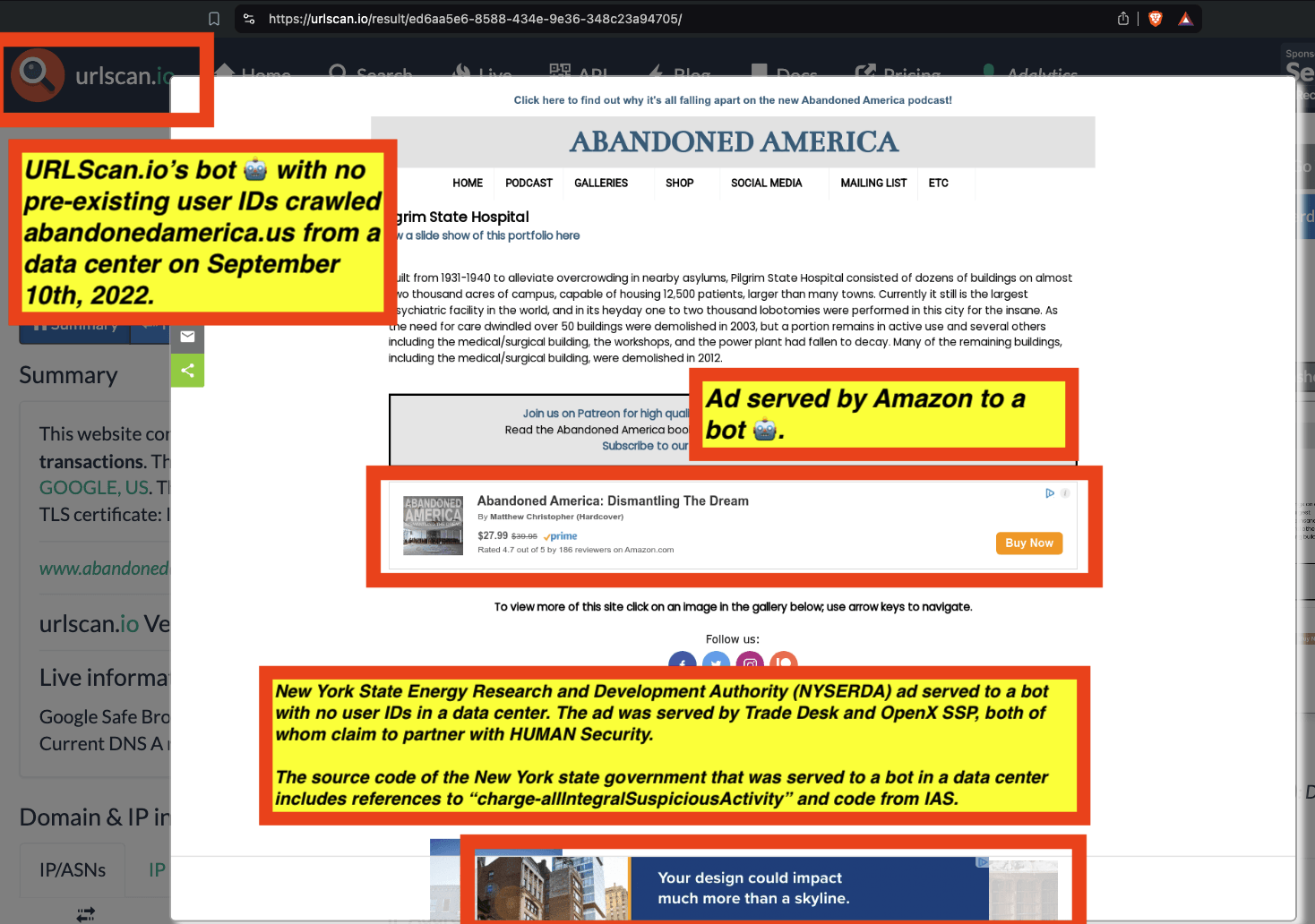

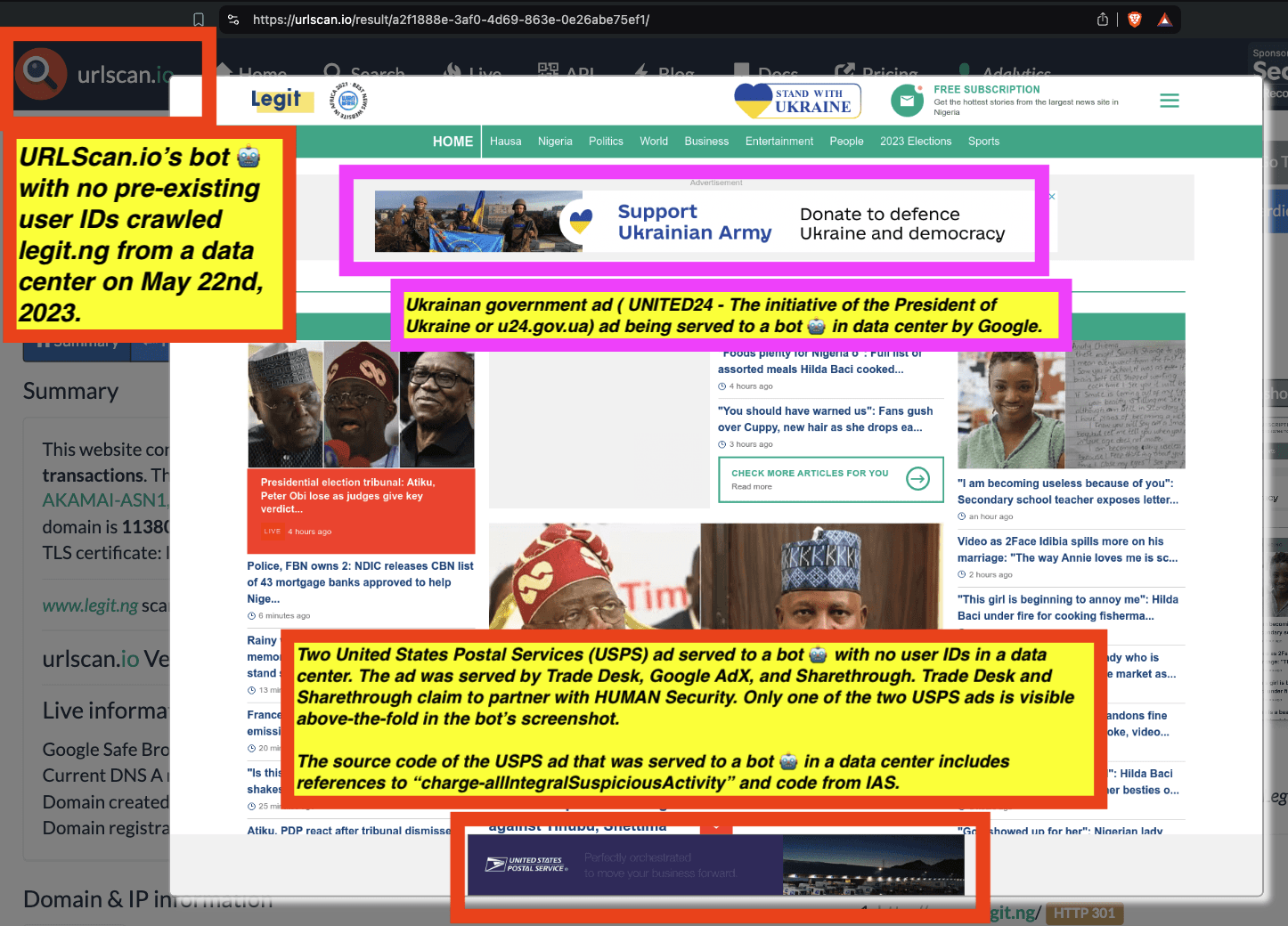

These bot datasets reveal that thousands of different brands - including Procter & Gamble, Hershey’s, HP, Wall Street Journal, T-Mobile, United States Postal Service, Pfizer, IBM, JPMorgan Chase, Bank of America, Microsoft, Haleon, Australian Defence Force, the New York, Utah, Oregon, Florida, Indiana & Virginia state governments, Bayer Healthcare, MasterCard, Ernst & Young, Visa, Kenvue, US Bank, Unilever, Disney, American Express, Beam Suntory, Diageo, and United States government agencies such as the New York City Police Department (NYPD), Department of Homeland Security (DHS TSA), US Census, healthcare.gov, US Army, US Air Force, US Navy, Department of Veterans Affairs, and the Centers for Disease Control and Prevention - had their ads served by ad tech vendors to bots in data centers for at least five years (since at least 2020).

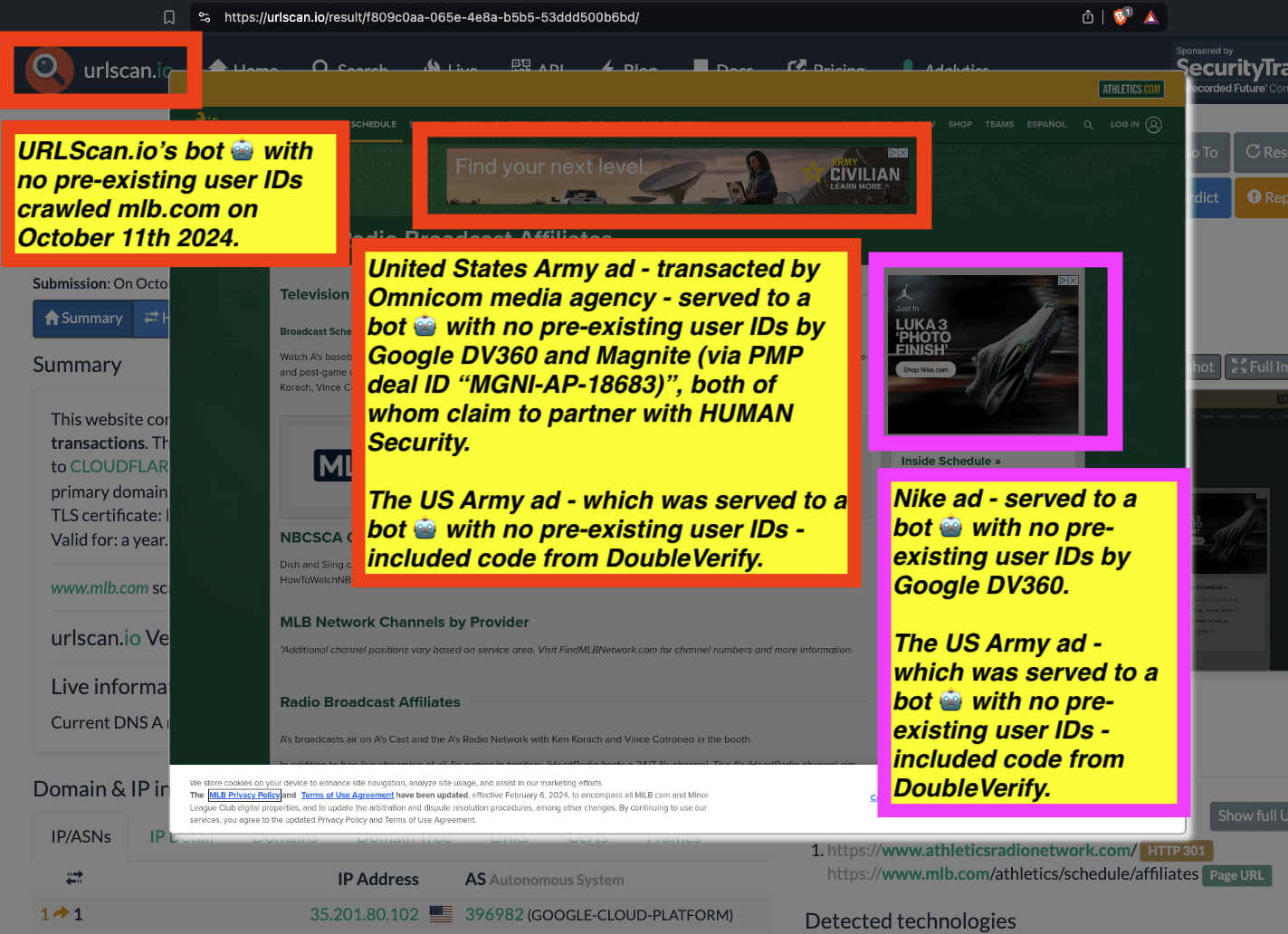

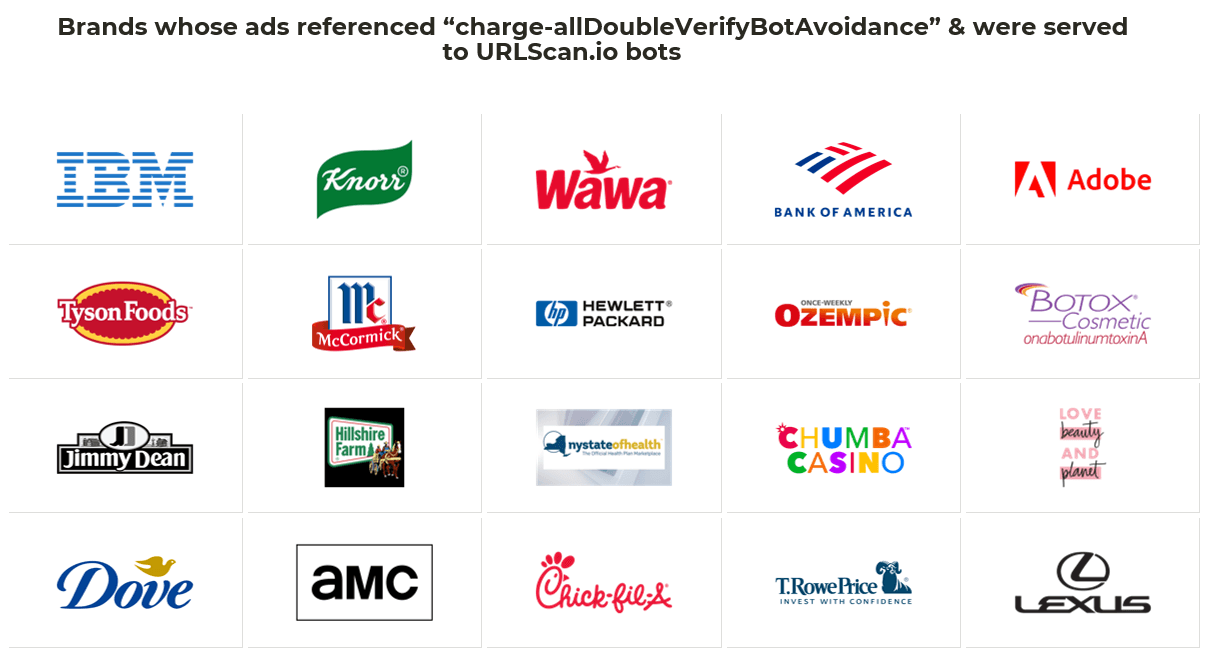

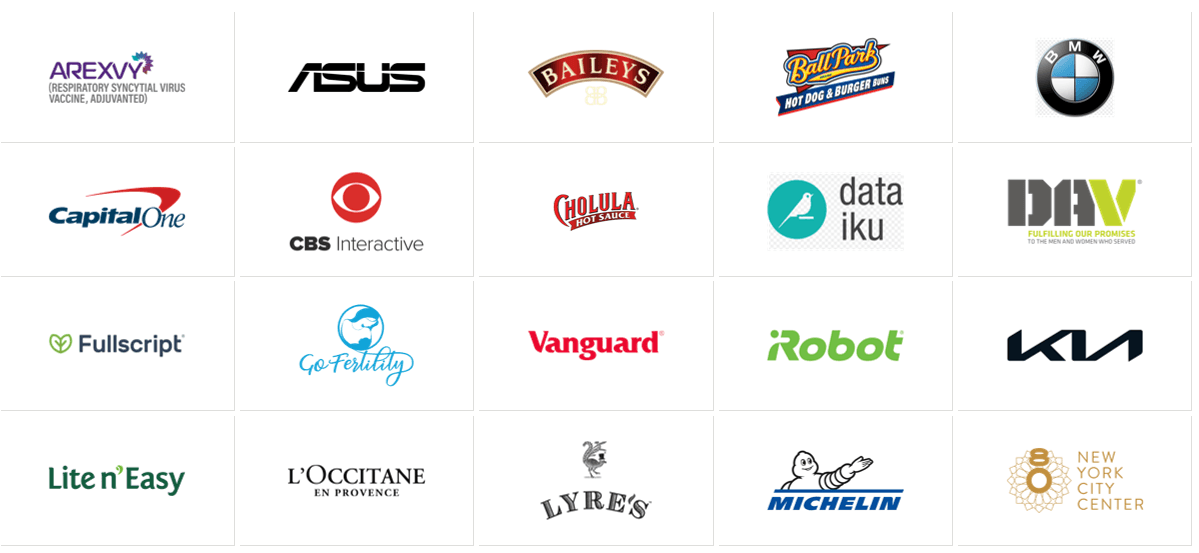

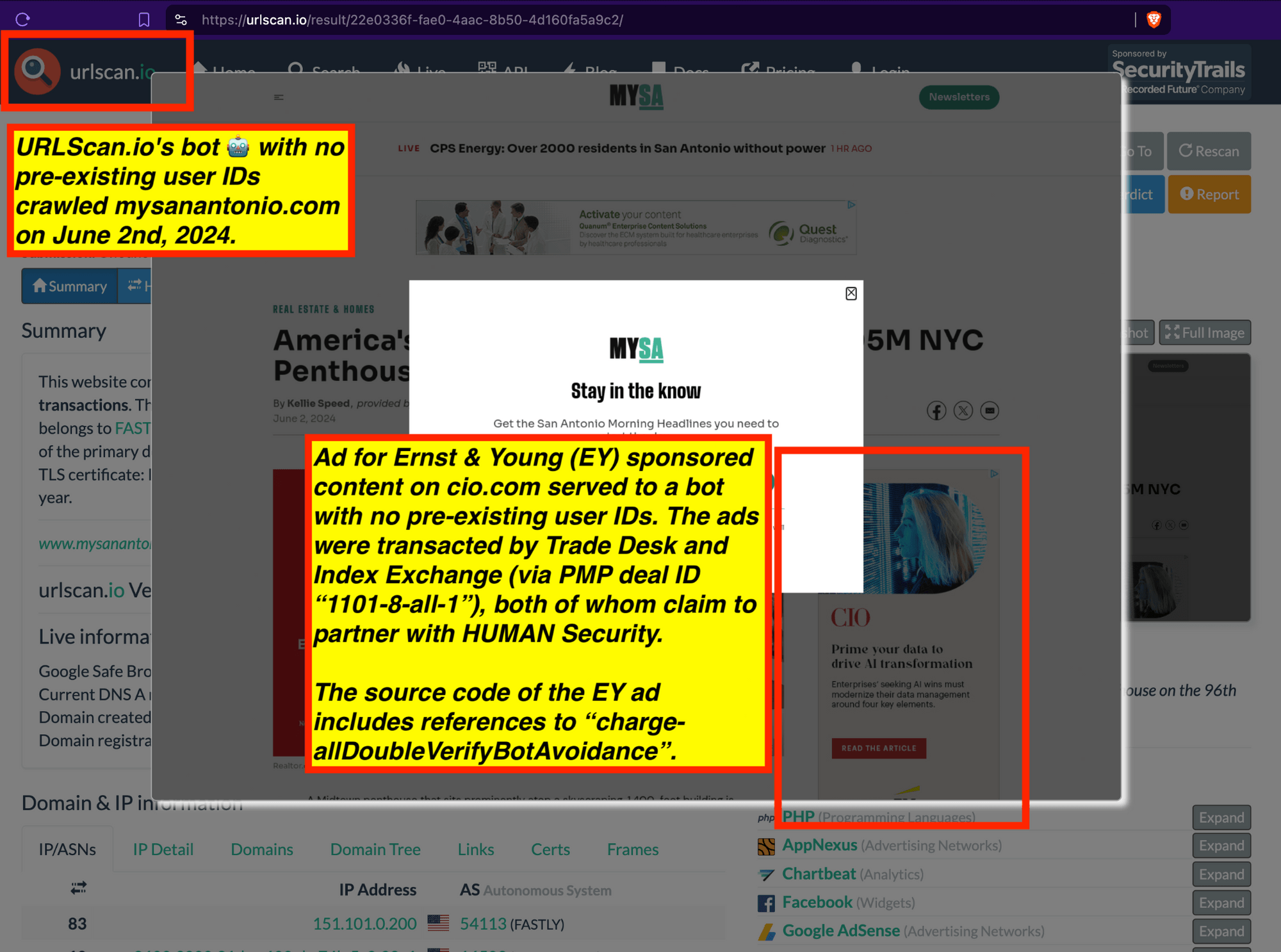

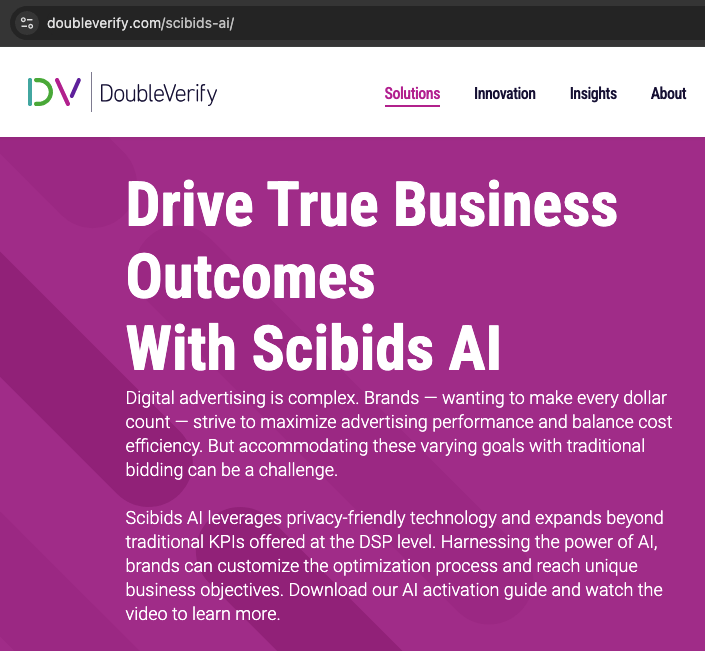

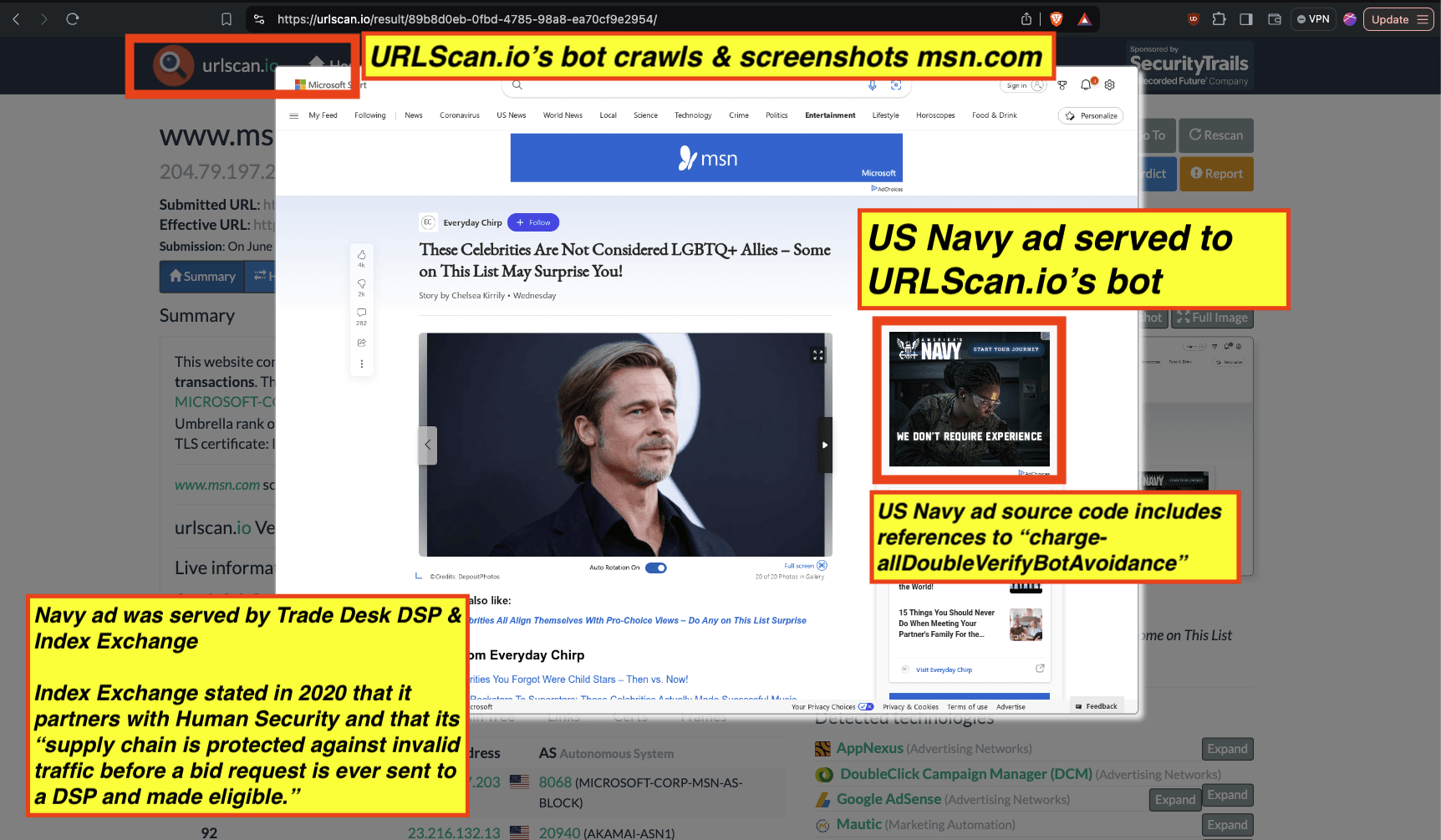

Many of the ads that were served to bots appear to be using various “pre-bid” bot avoidance or filtration tools from vendors such as Integral Ad Science (IAS) and DoubleVerify. For example, the US Navy had ads served to declared bots running out of Google Cloud server farms, even though the US Navy’s ads contain source code references to “charge-allDoubleVerifyBotAvoidance”.

In just the observed sample dataset, Google was observed serving thousands of healthcare.gov ads (from the U.S. Centers for Medicare & Medicaid Services) for years to bots running out of data center server farms.

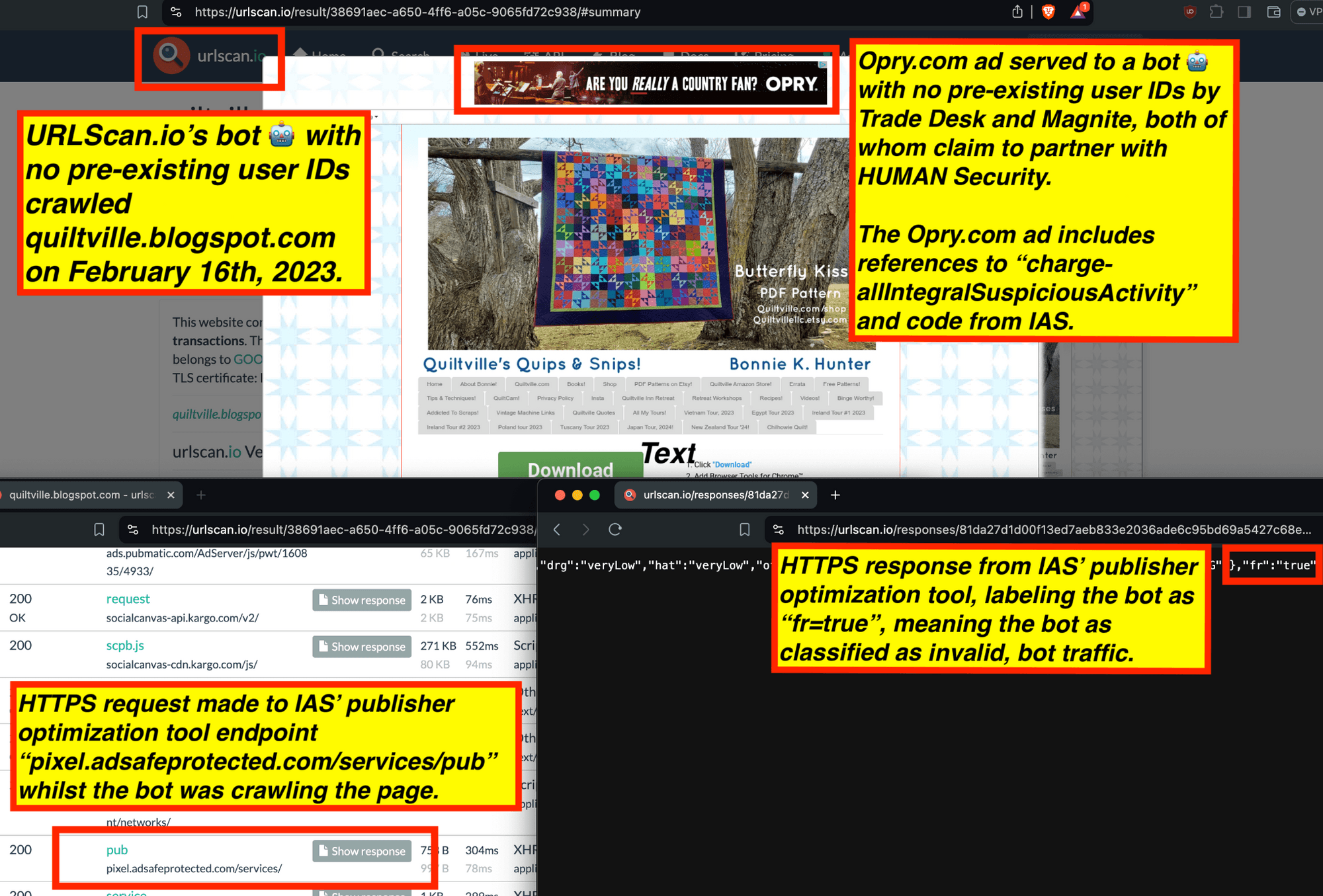

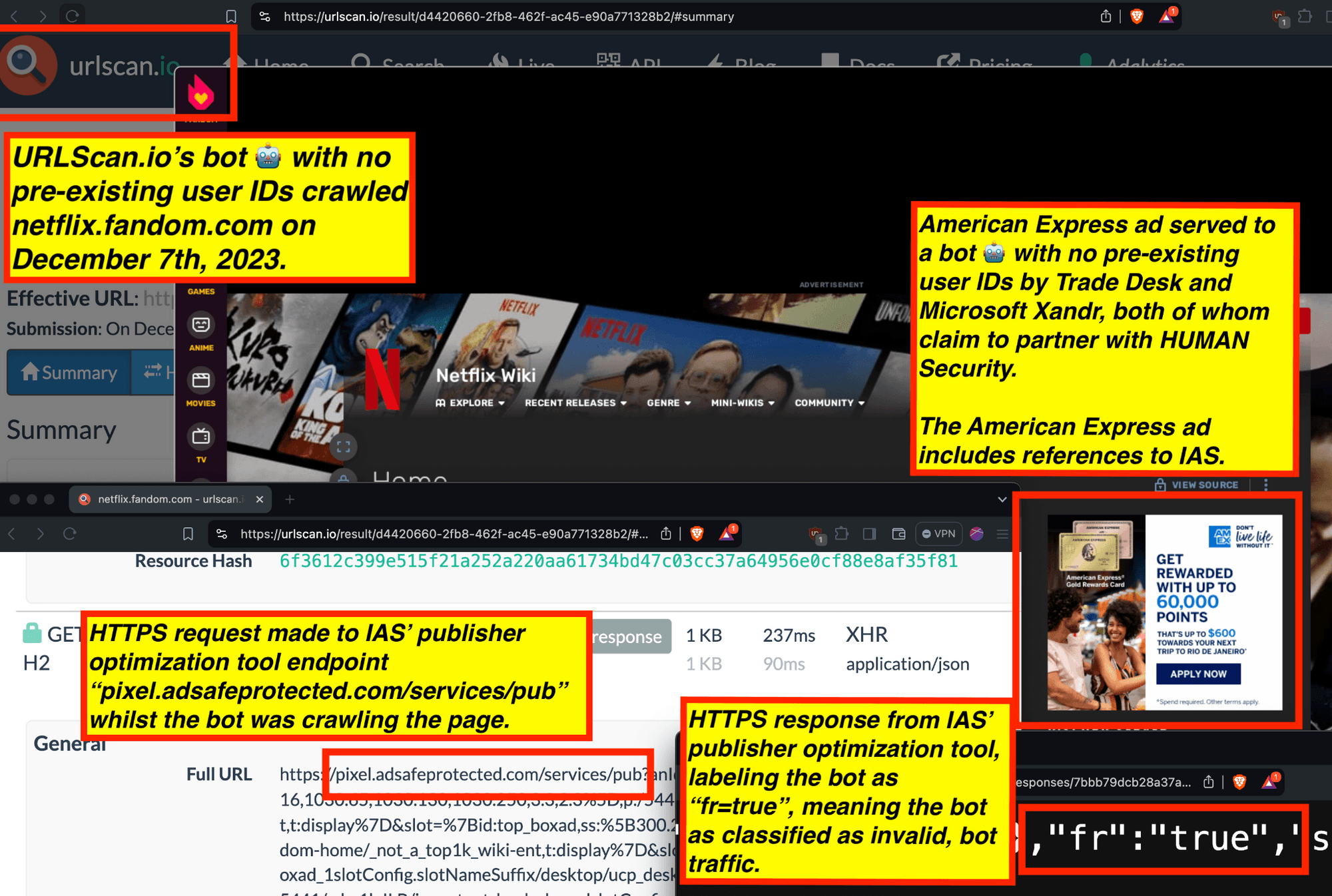

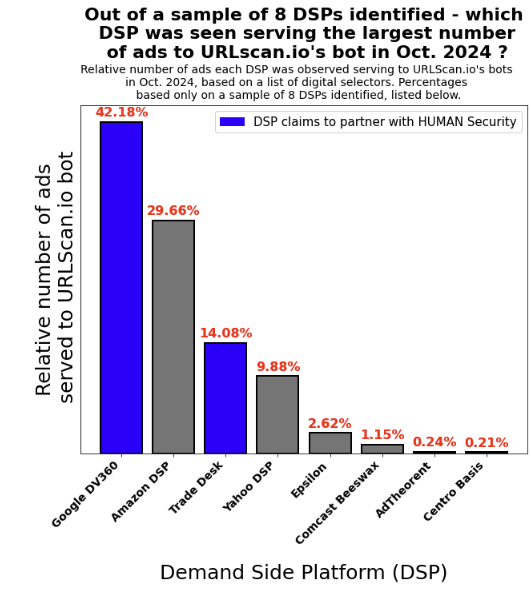

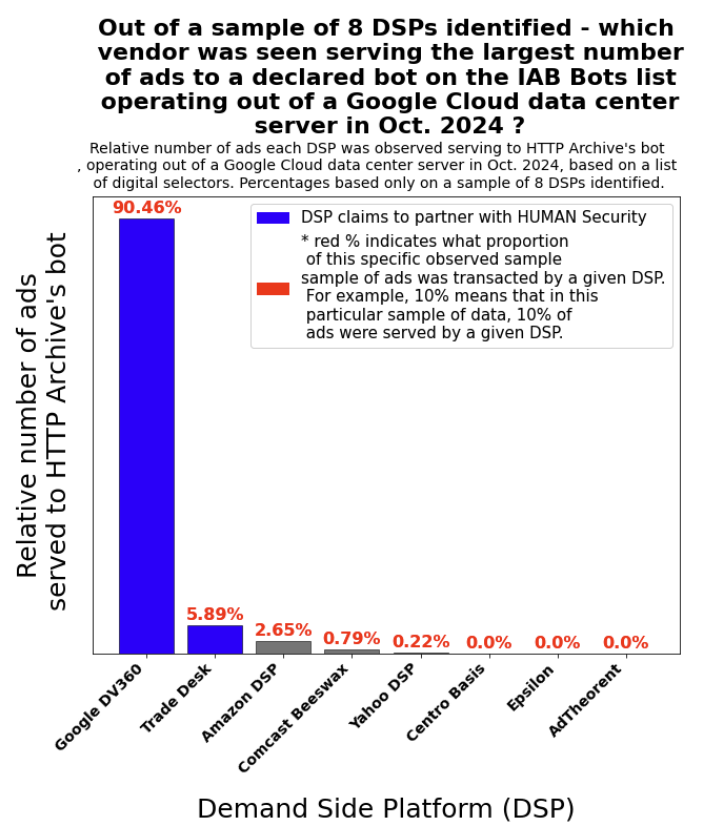

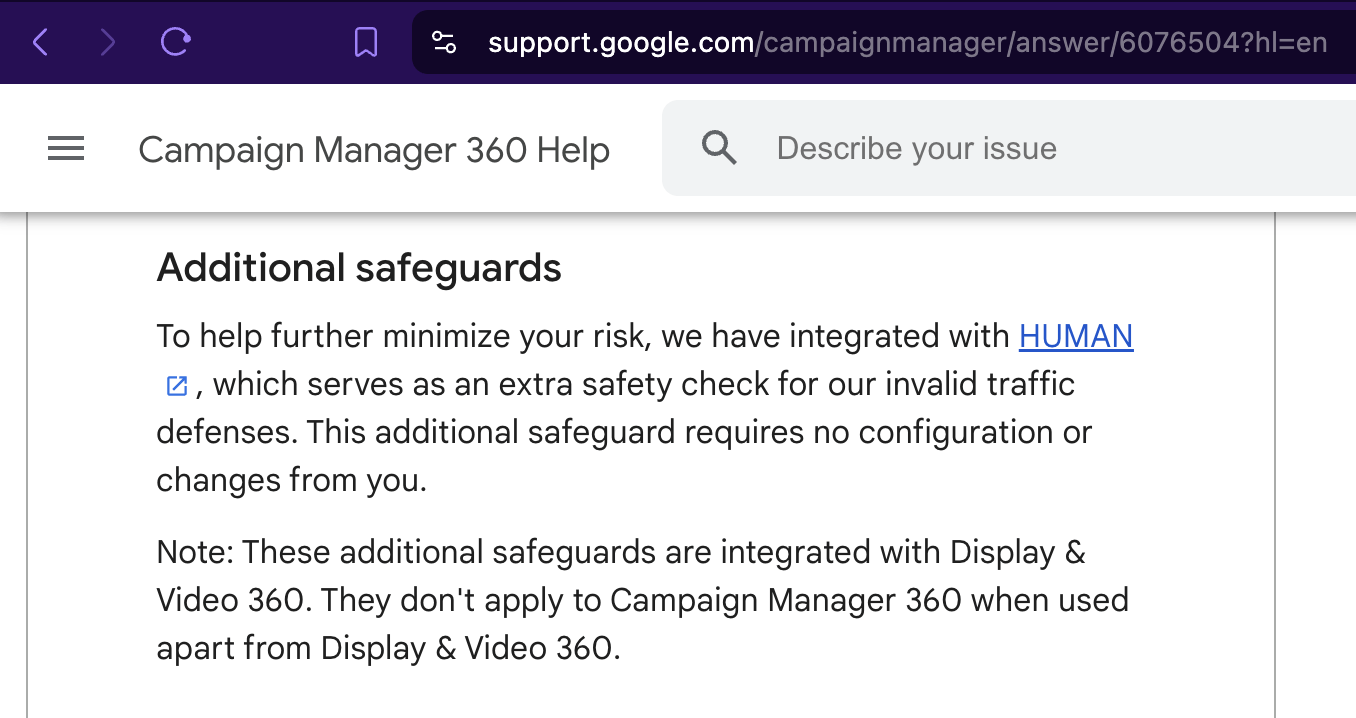

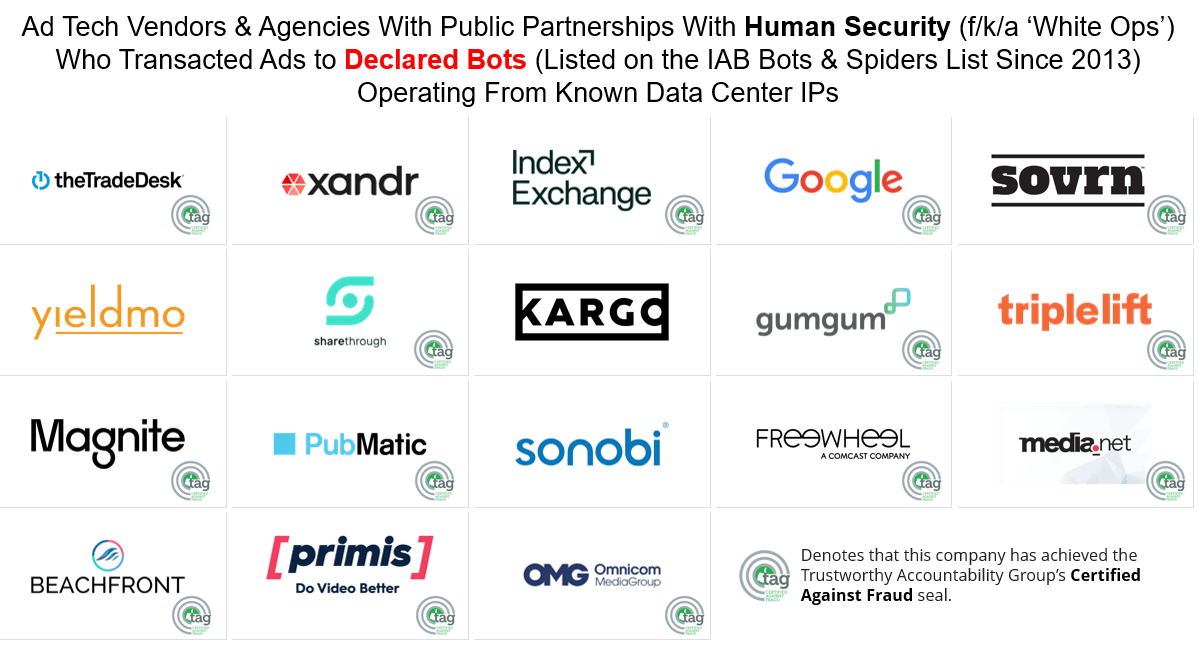

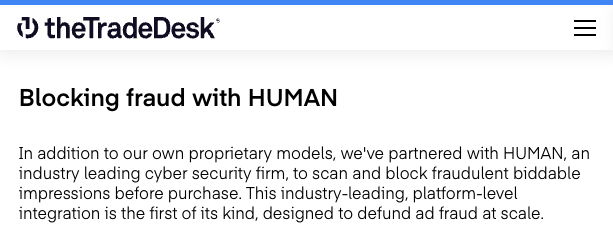

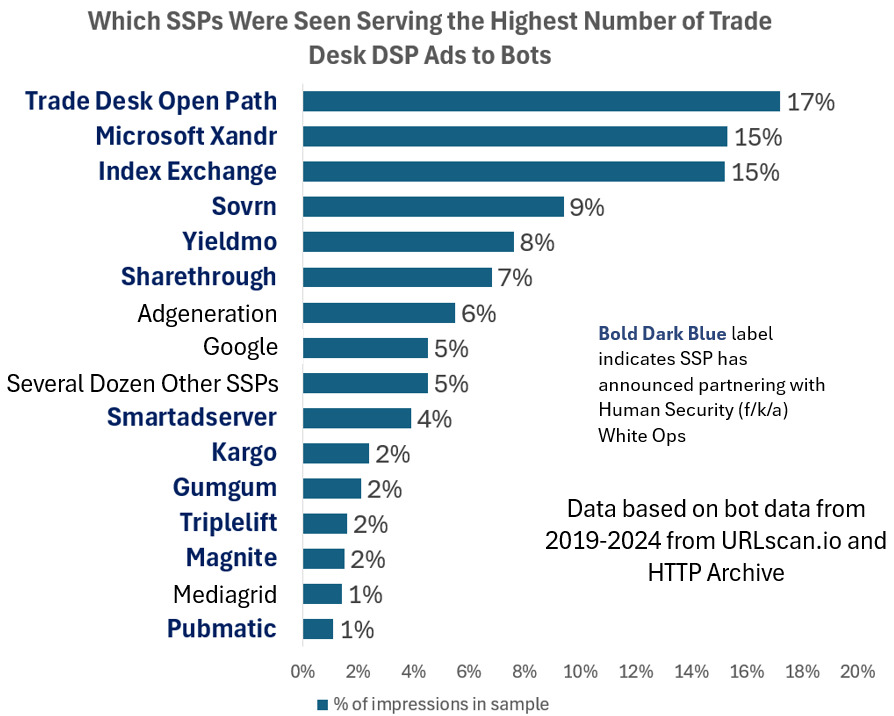

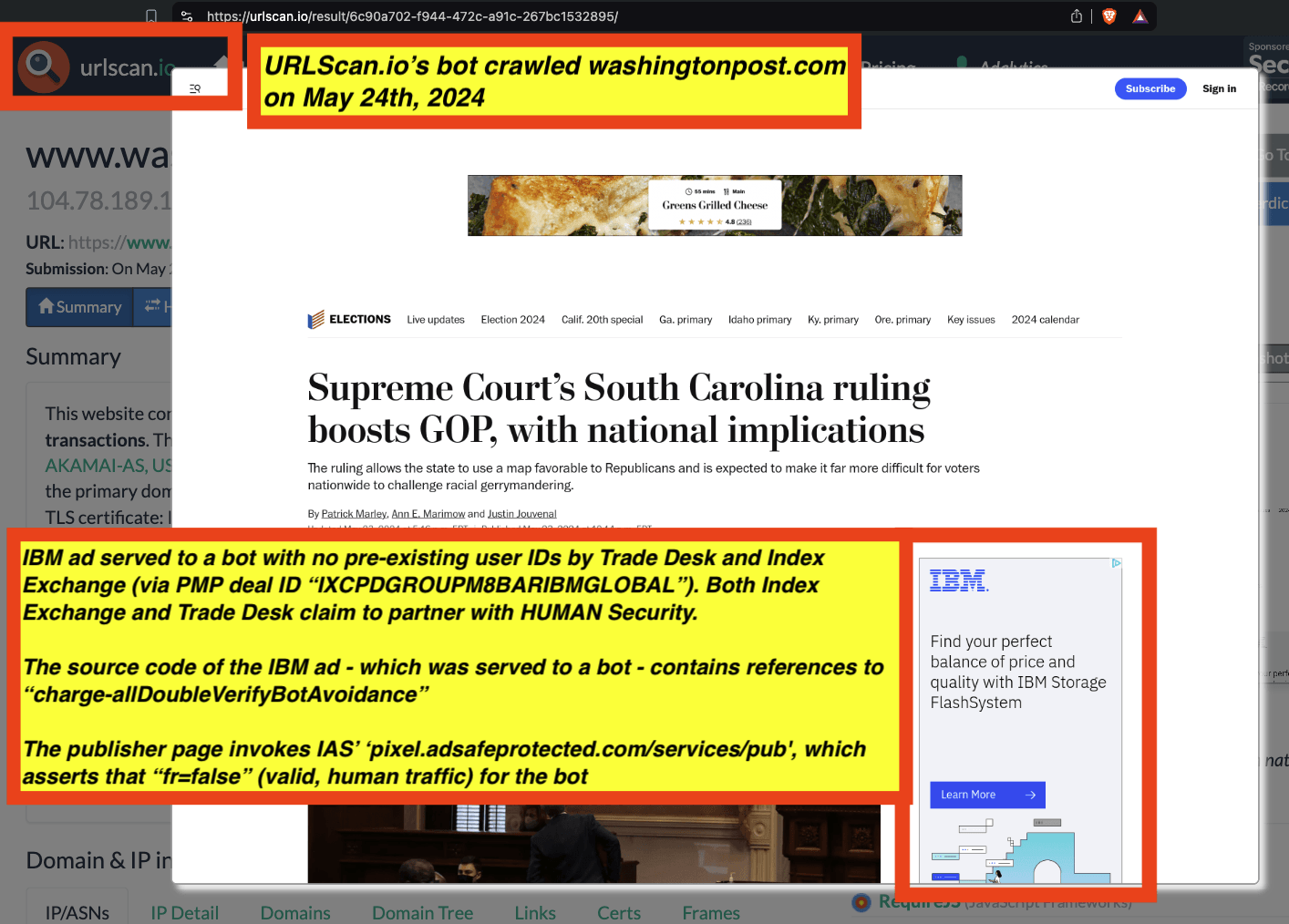

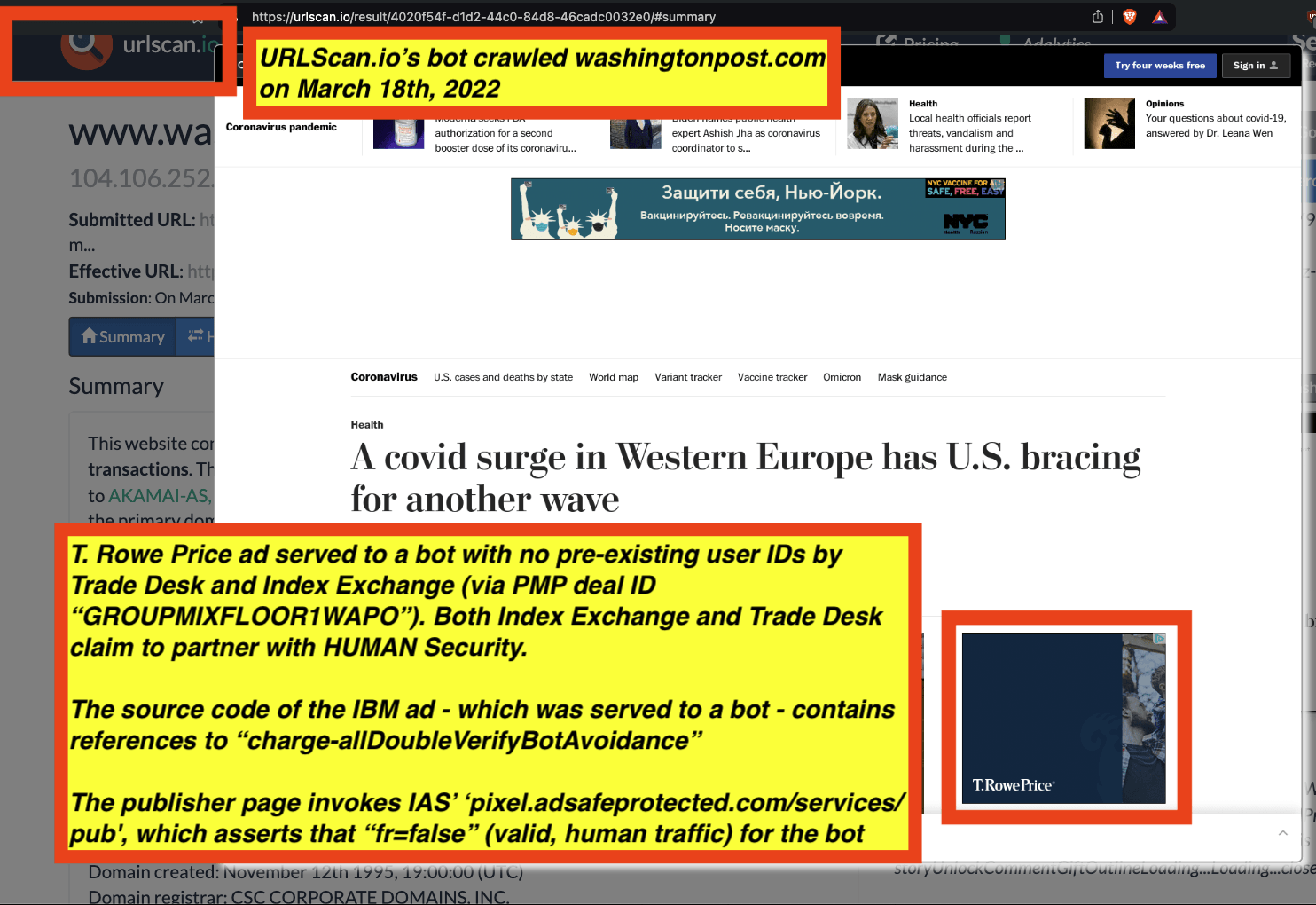

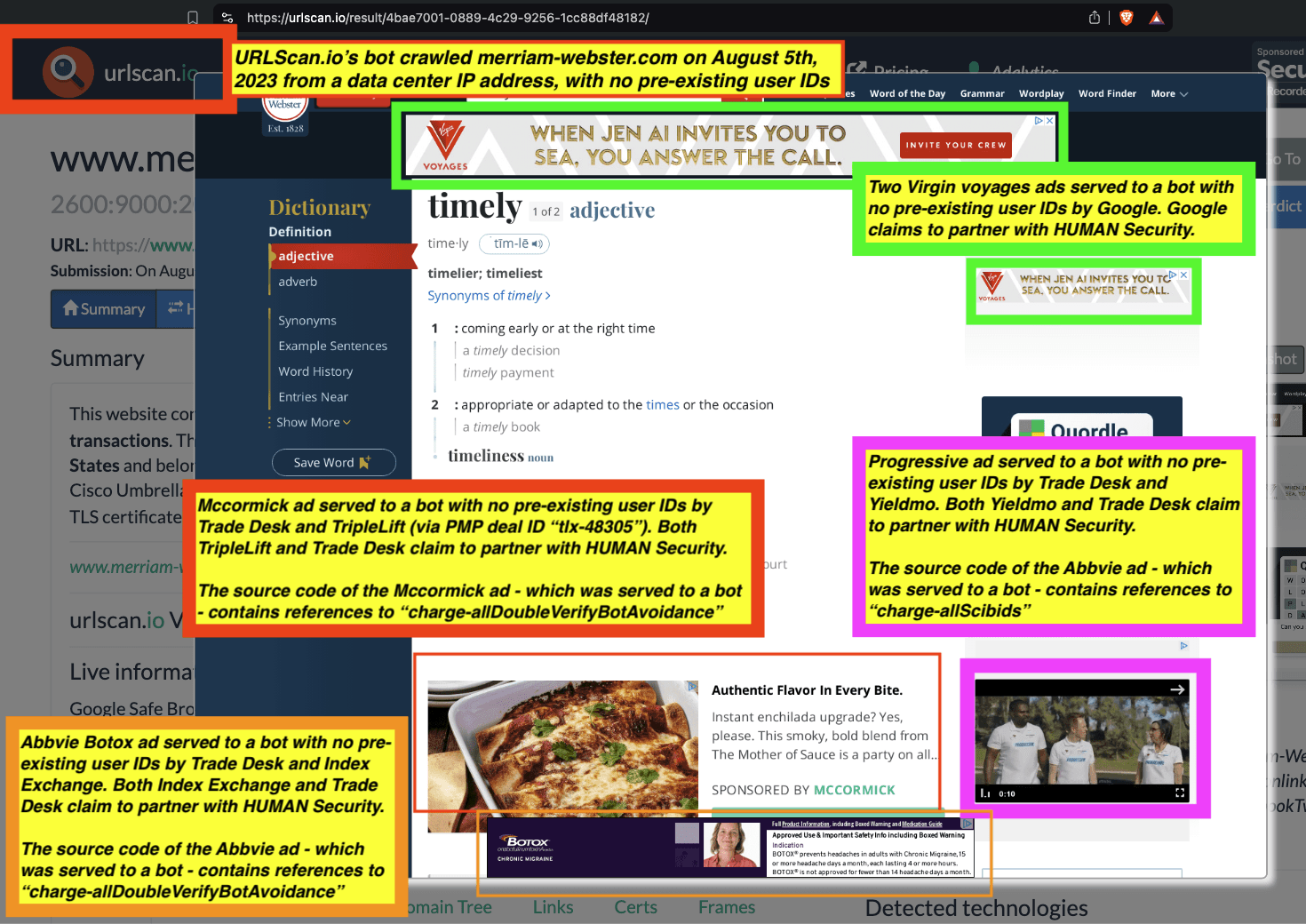

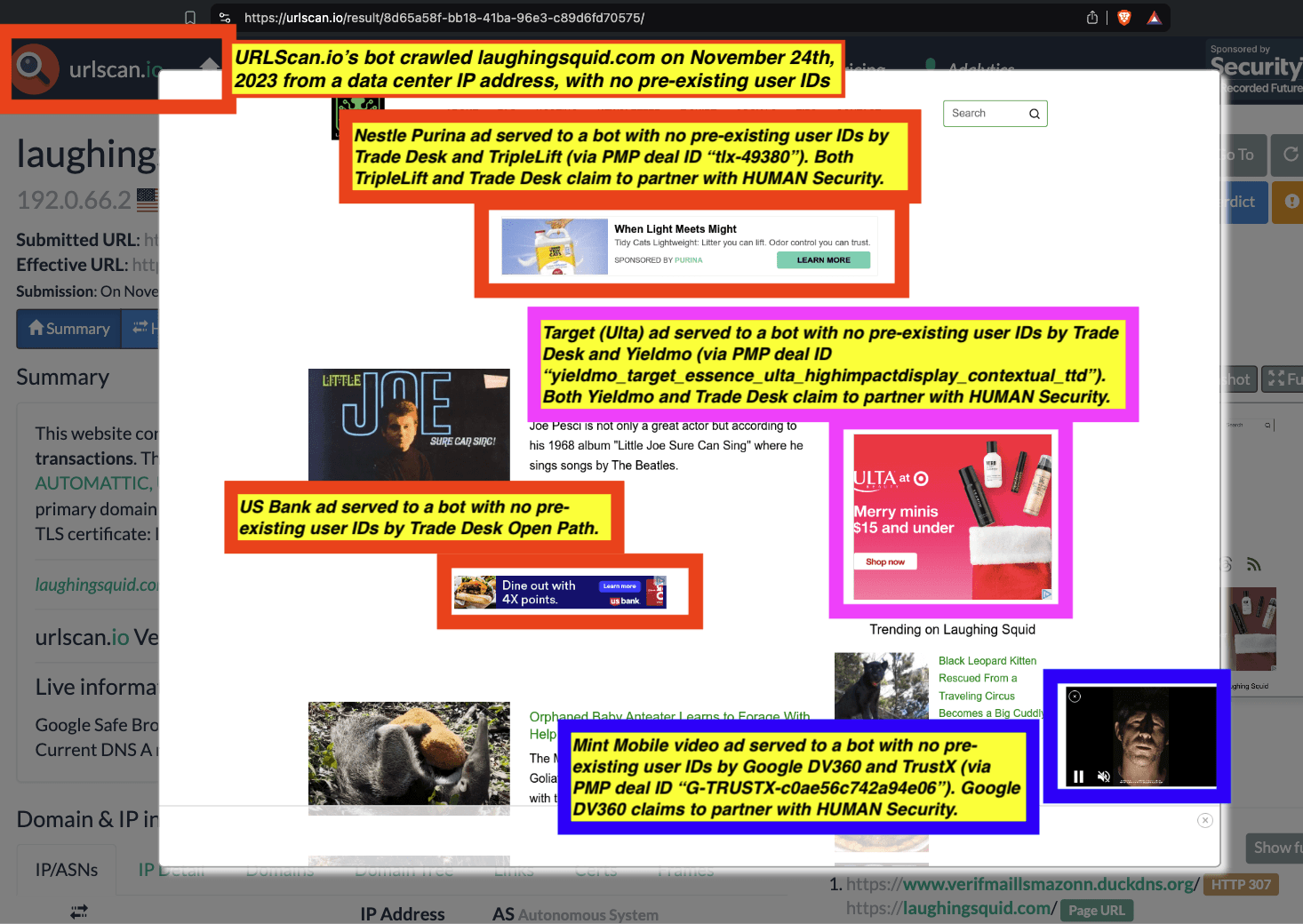

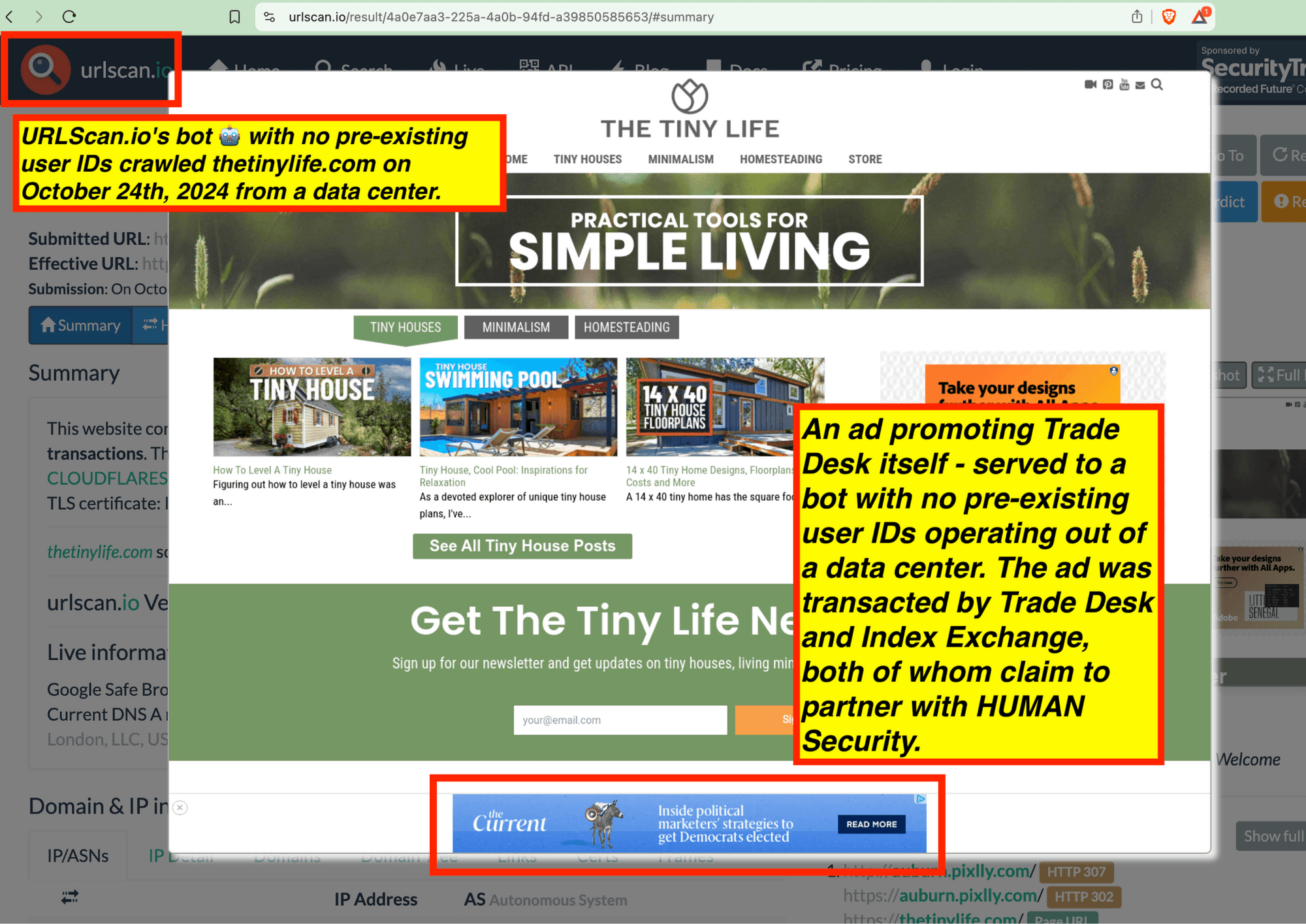

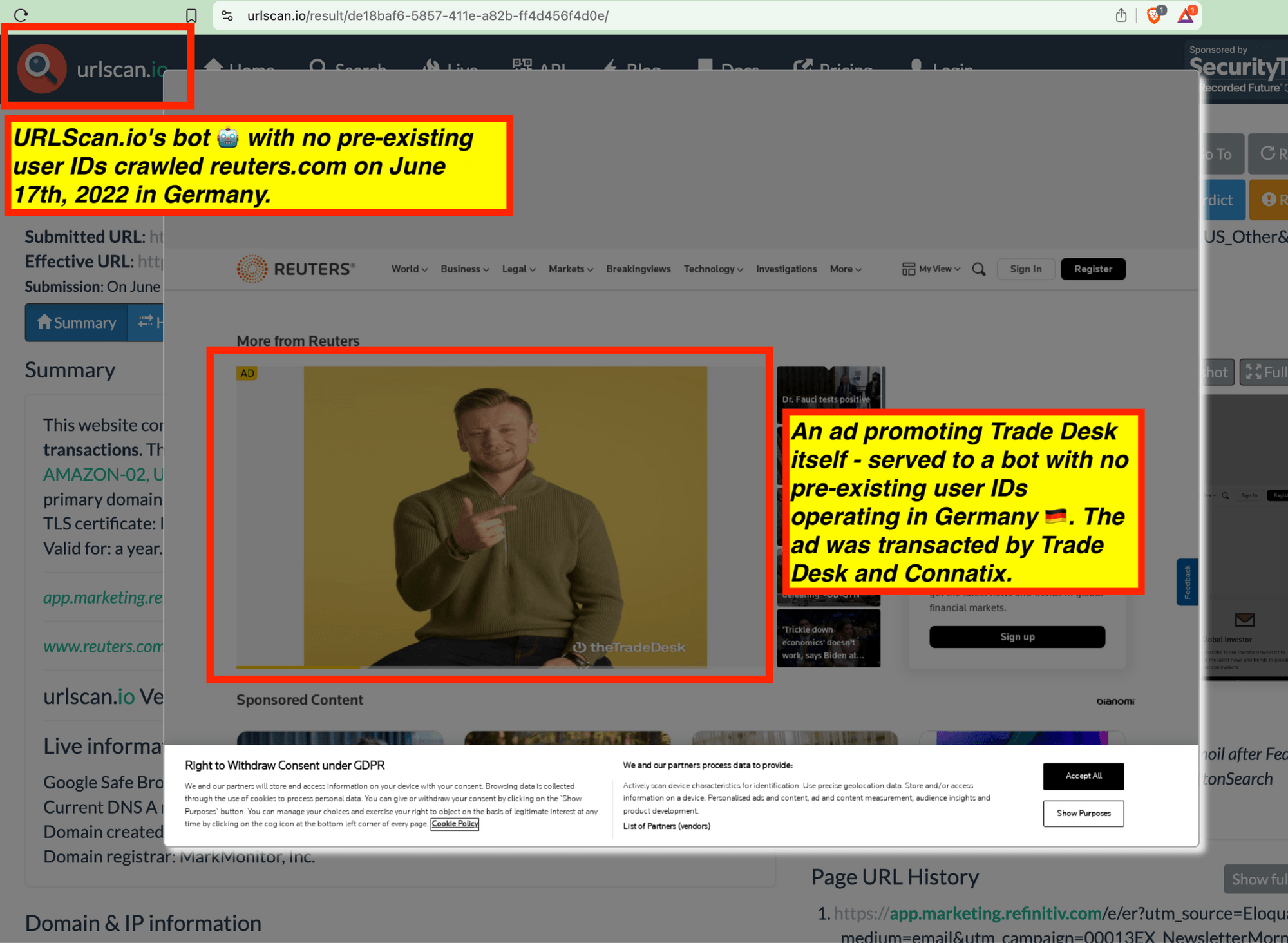

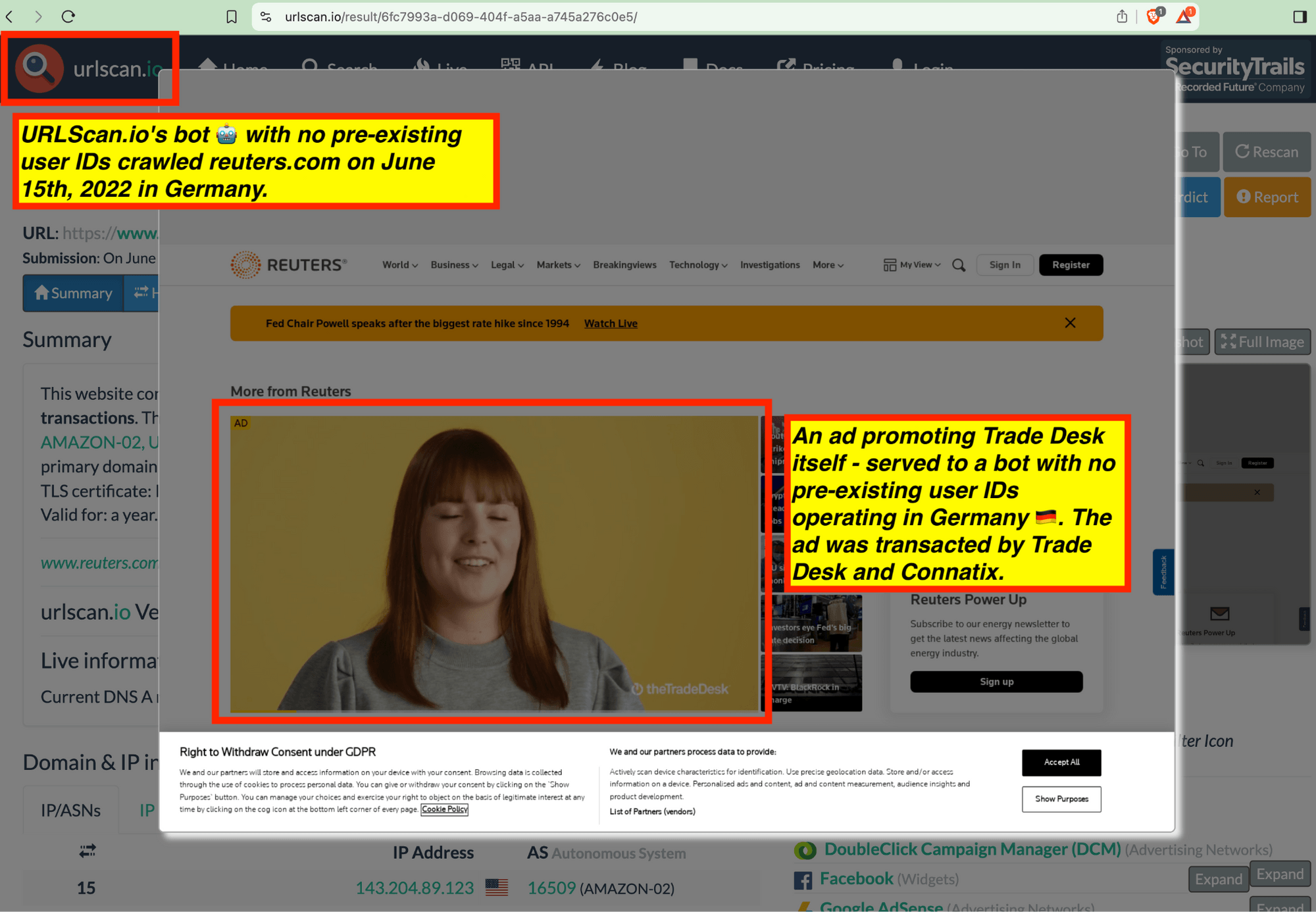

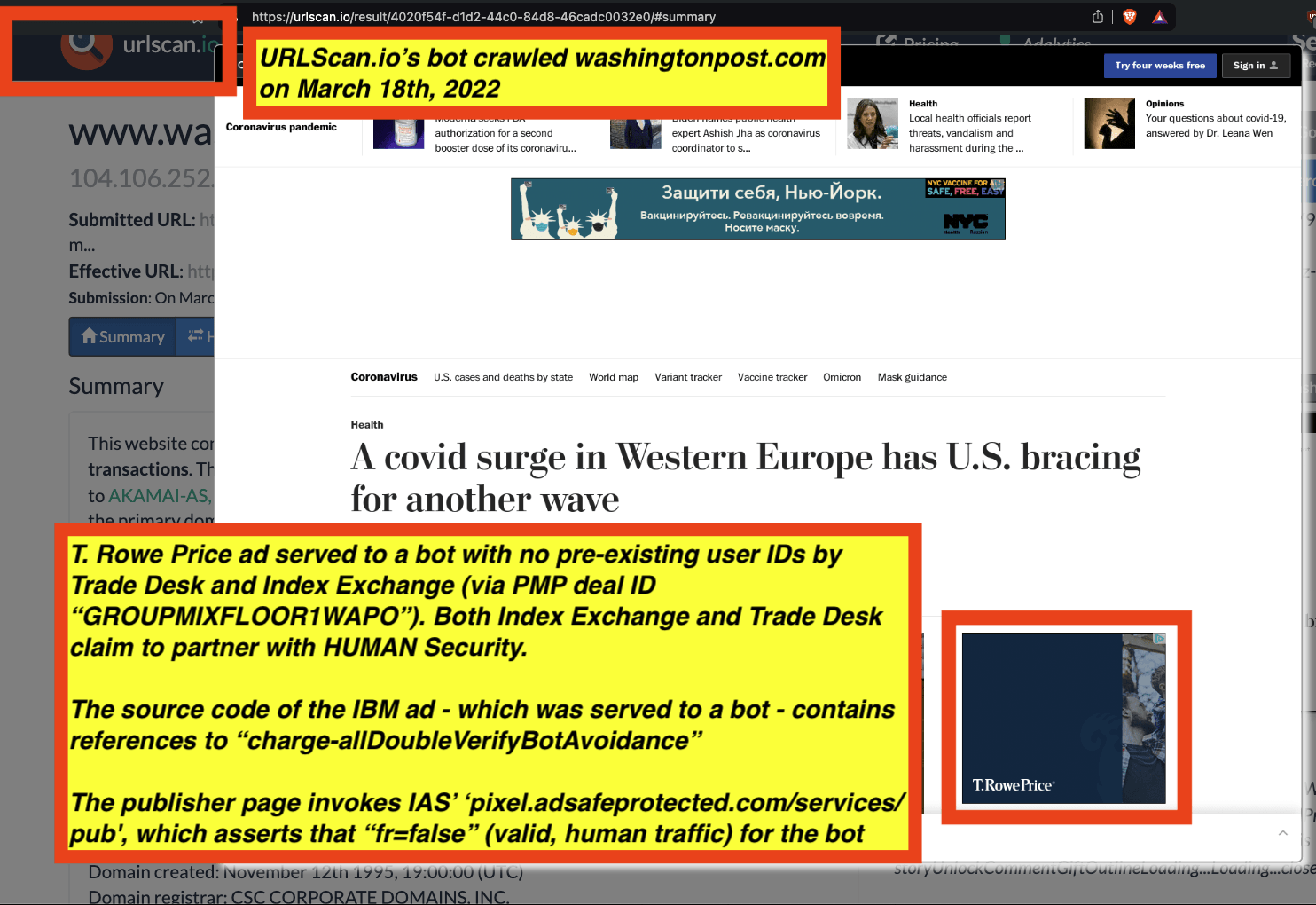

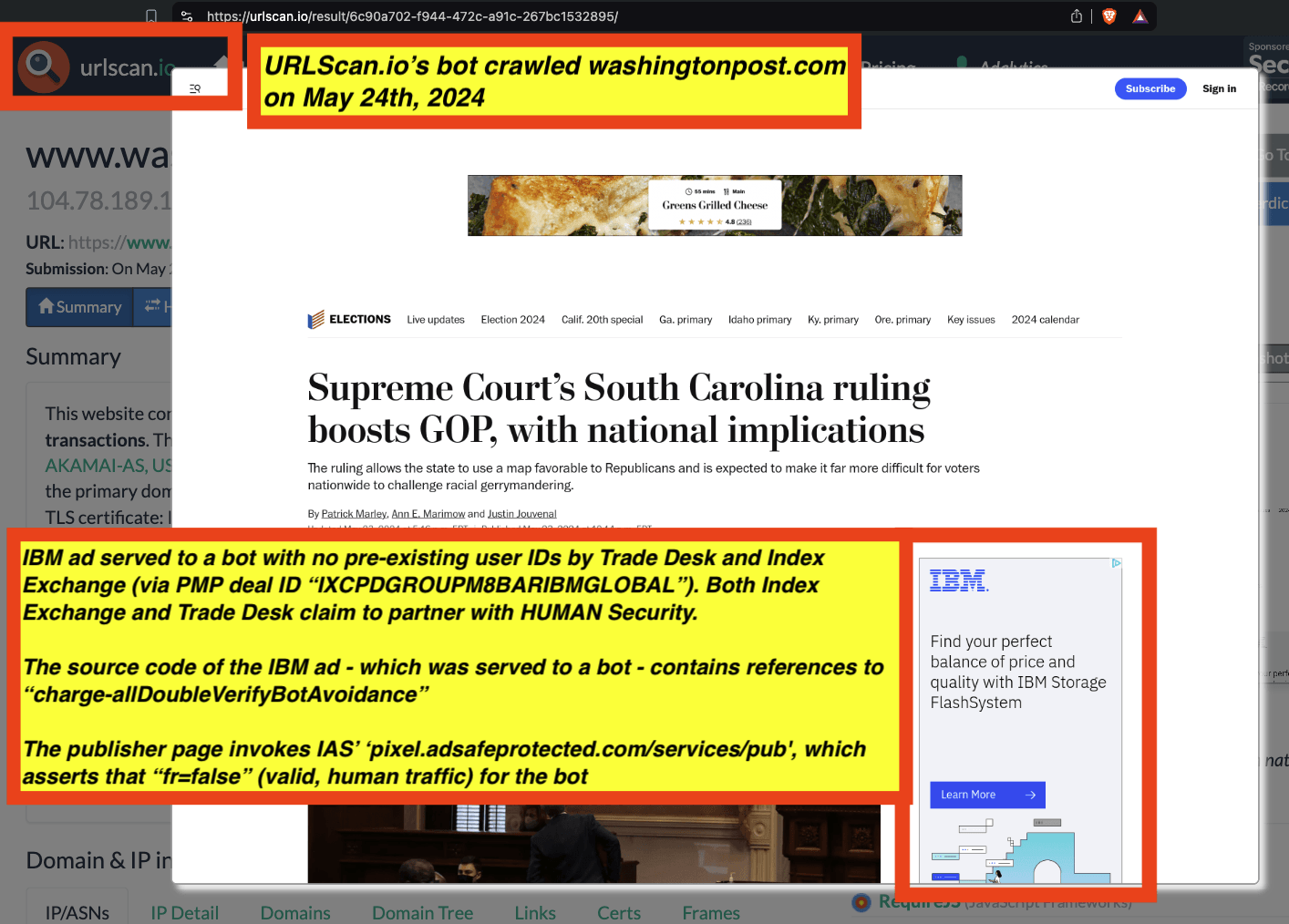

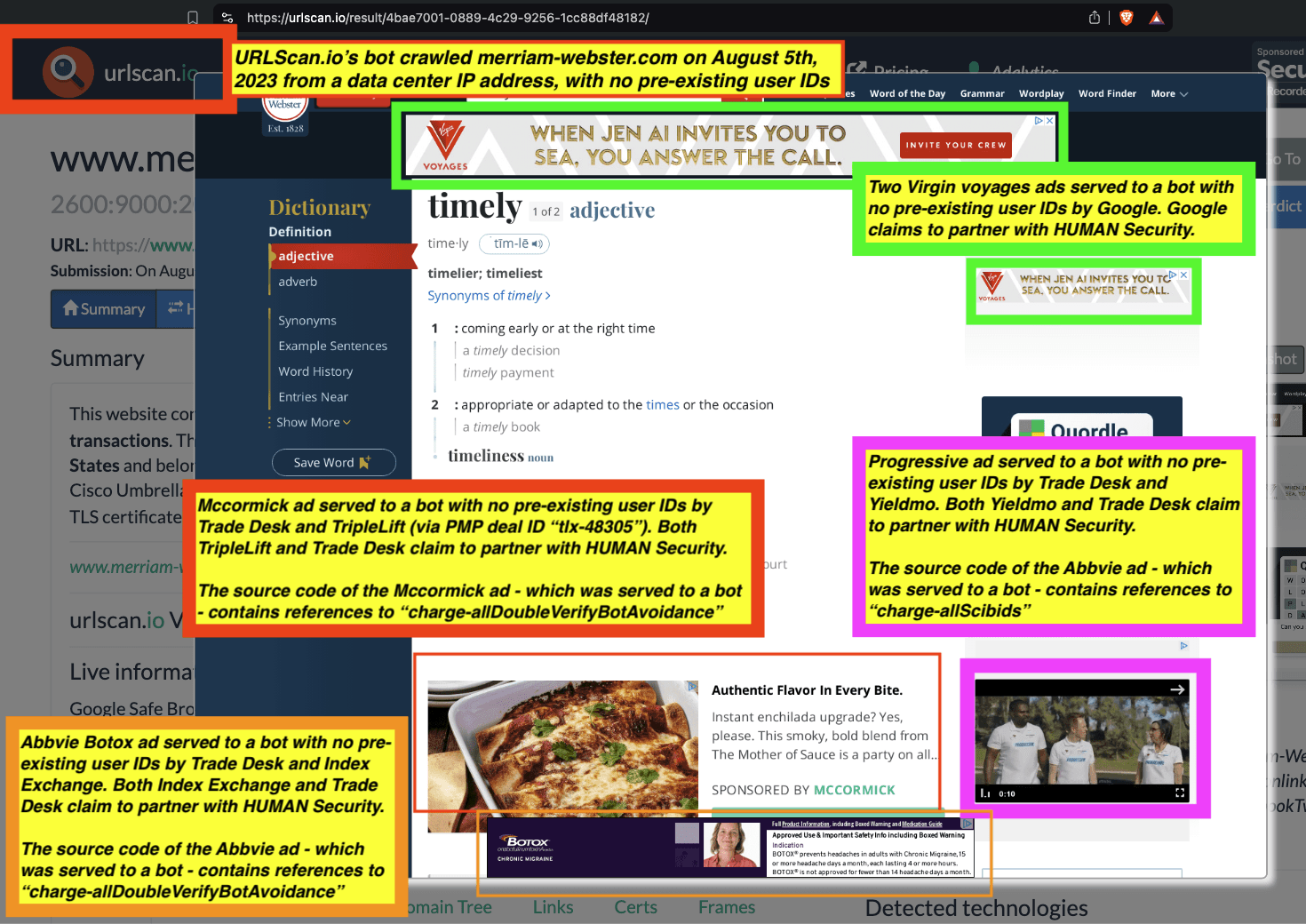

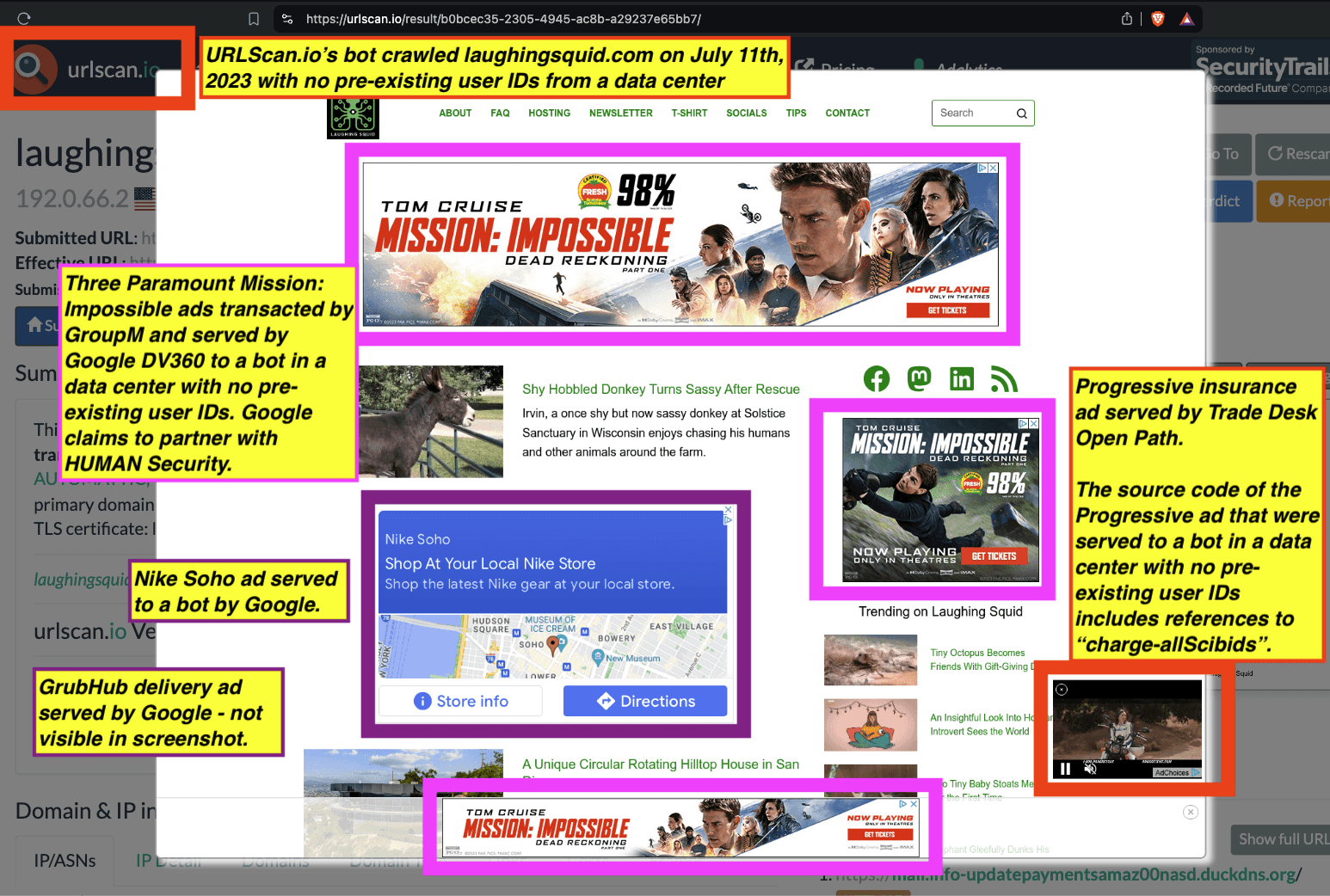

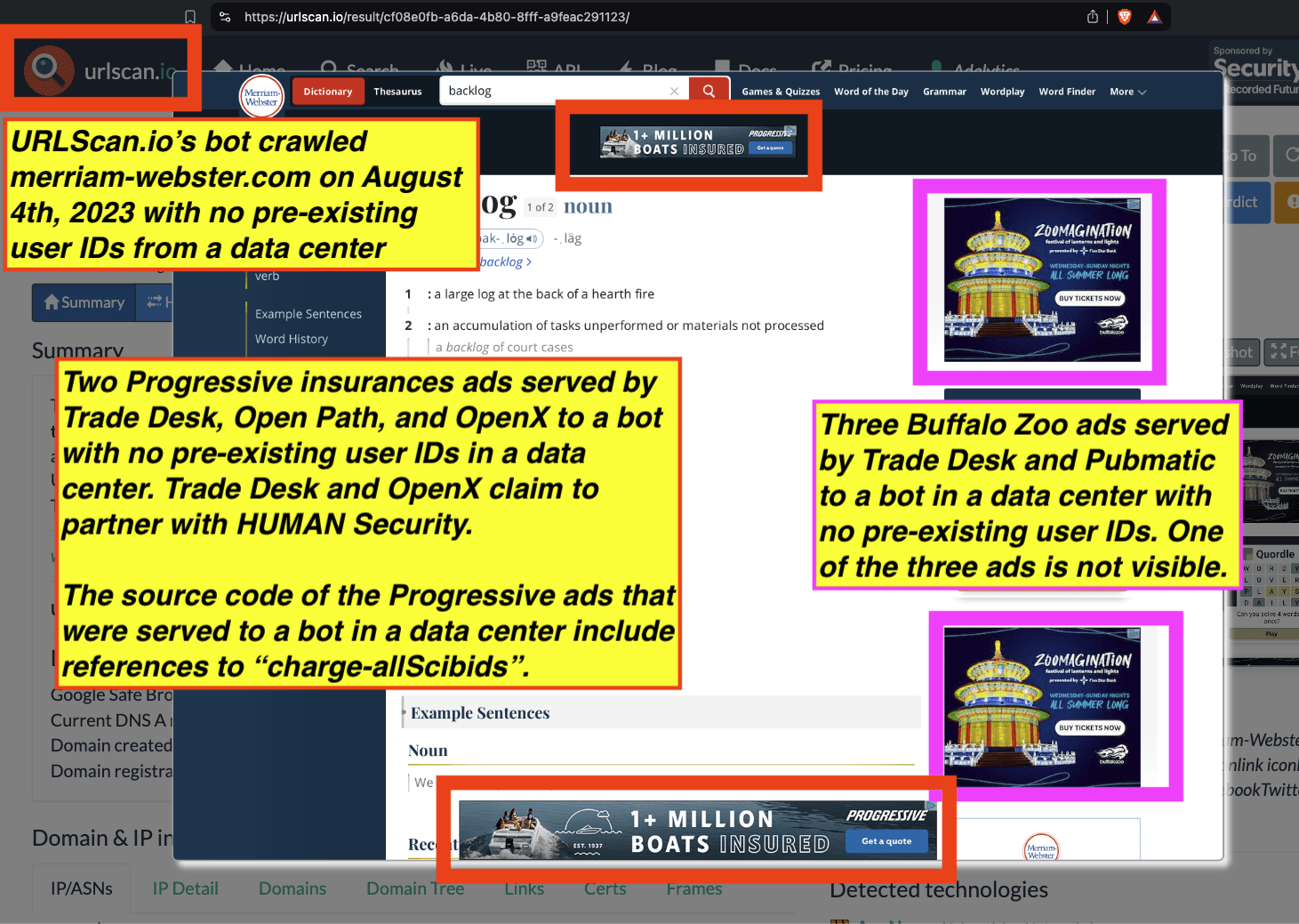

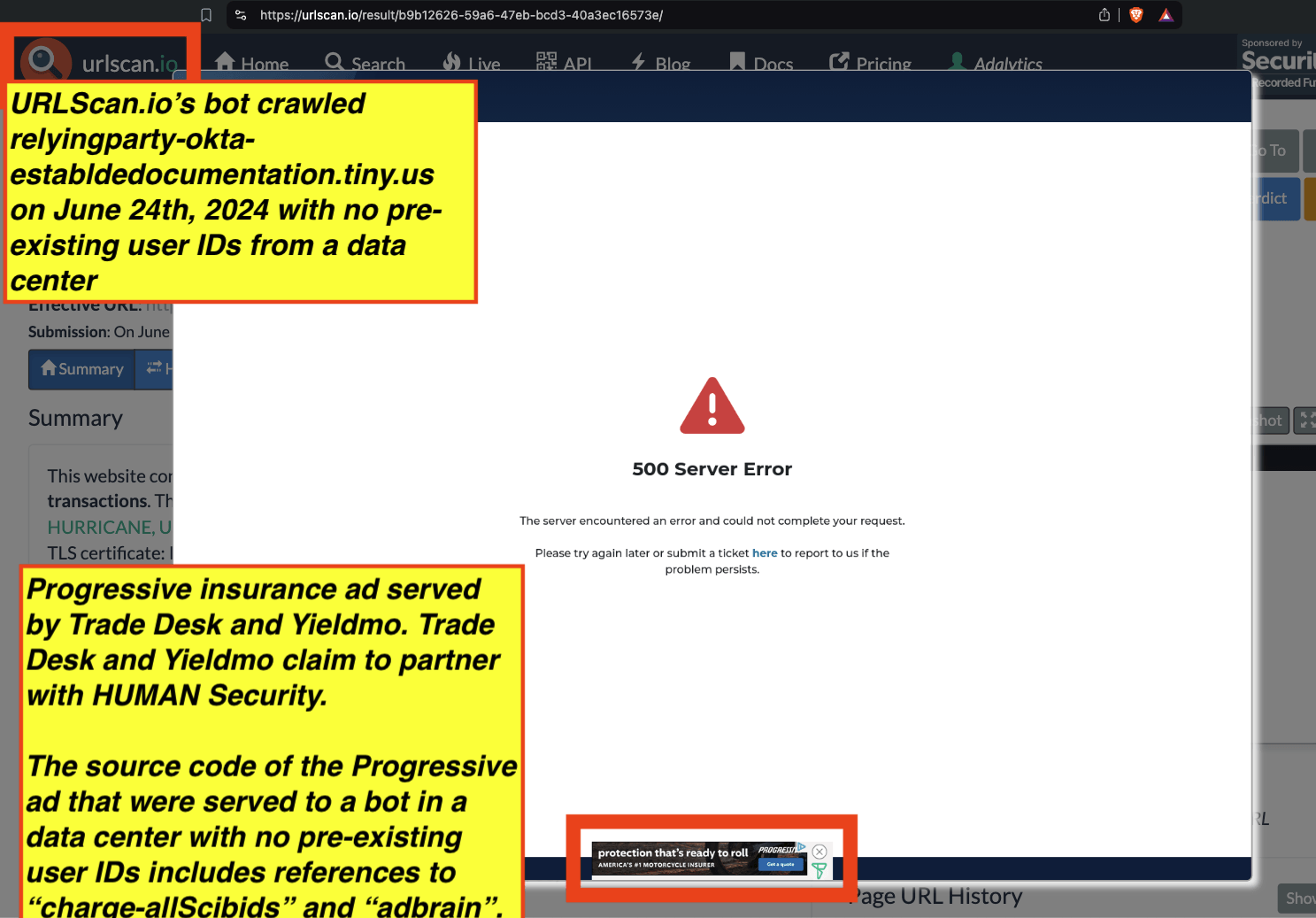

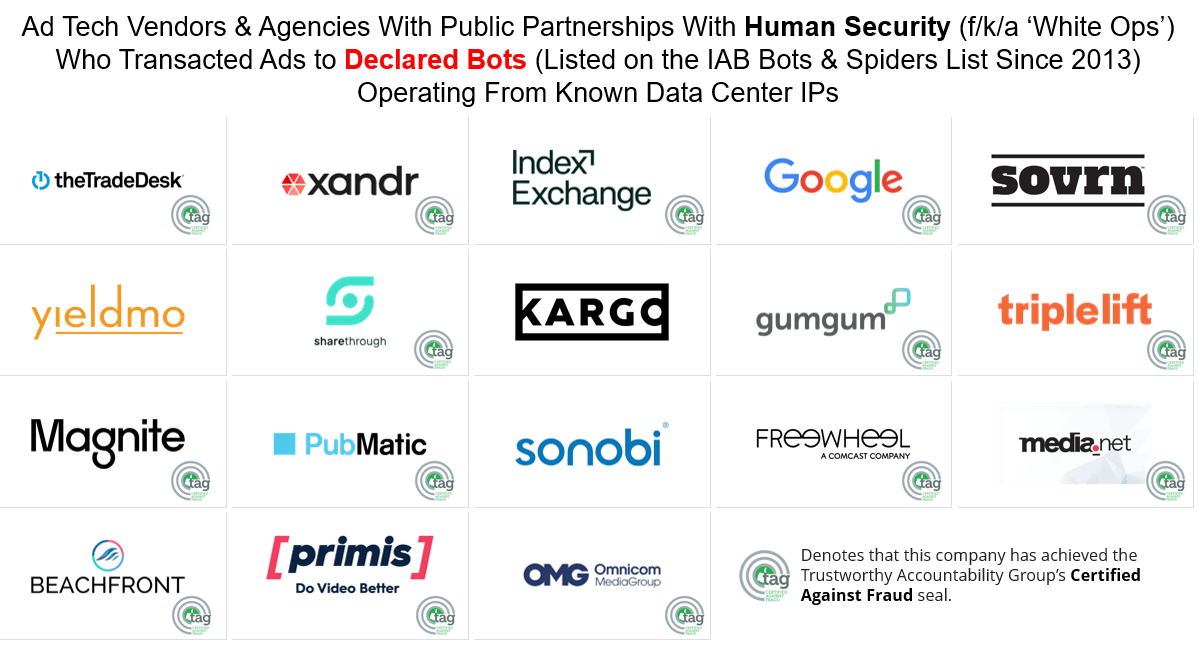

Many of the ad tech platforms which were observed serving ads to declared bots in data center server farms have declared publicly that they filter bot traffic pre-bid through partnerships with HUMAN Security (f/k/a “White Ops”). For example, Google, Trade Desk, Pubmatic, Magnite, Index Exchange, Triplelift, OpenX, Microsoft Xandr, Kargo, GumGum, Media.net, Sonobi, and Equativ (f/k/a Smart AdServer) have made public statements about partnering with HUMAN Security to prevent ads from being served to bots. Additionally, some of these ad tech vendors also have announced partnerships with DoubleVerify and IAS.

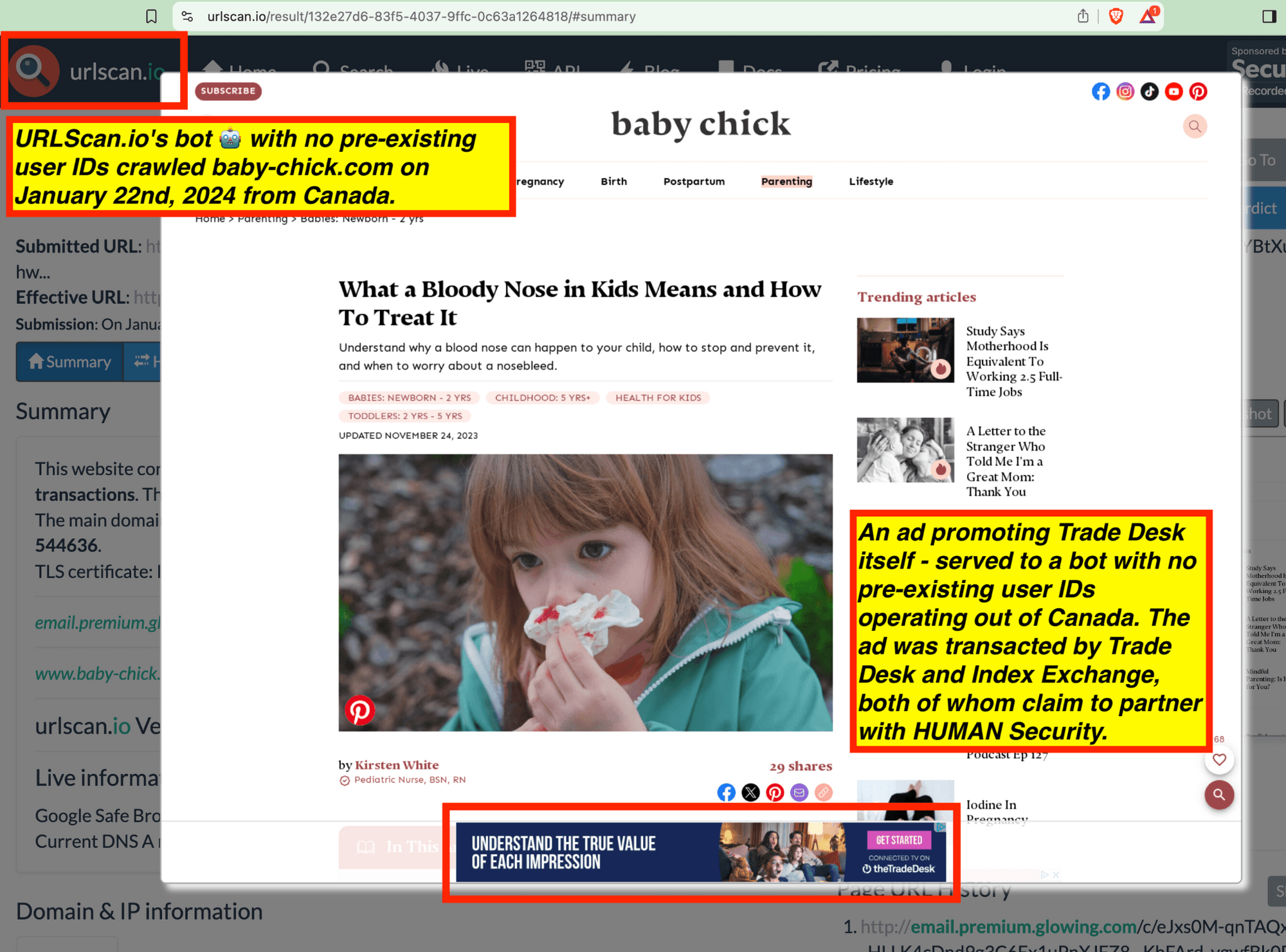

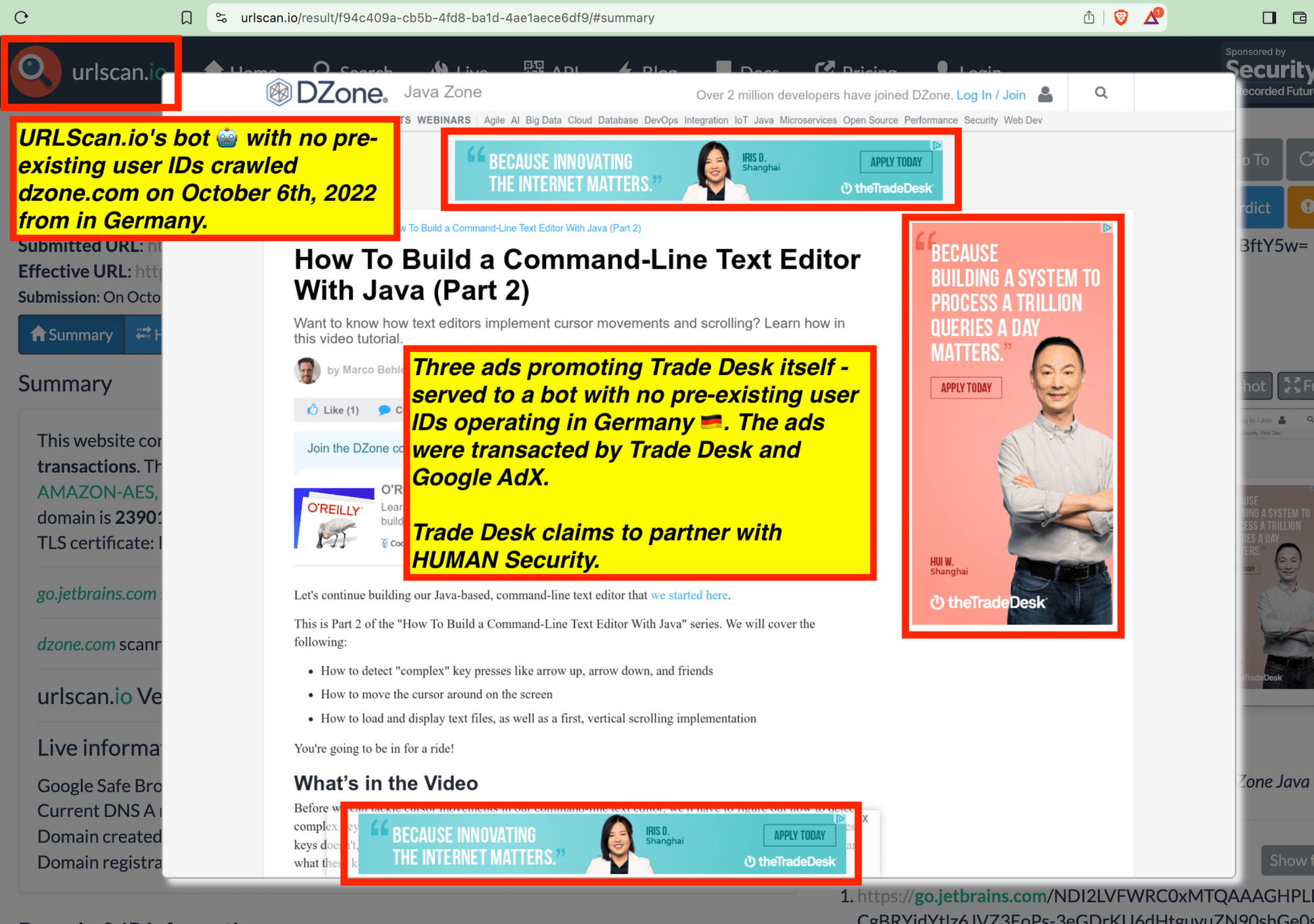

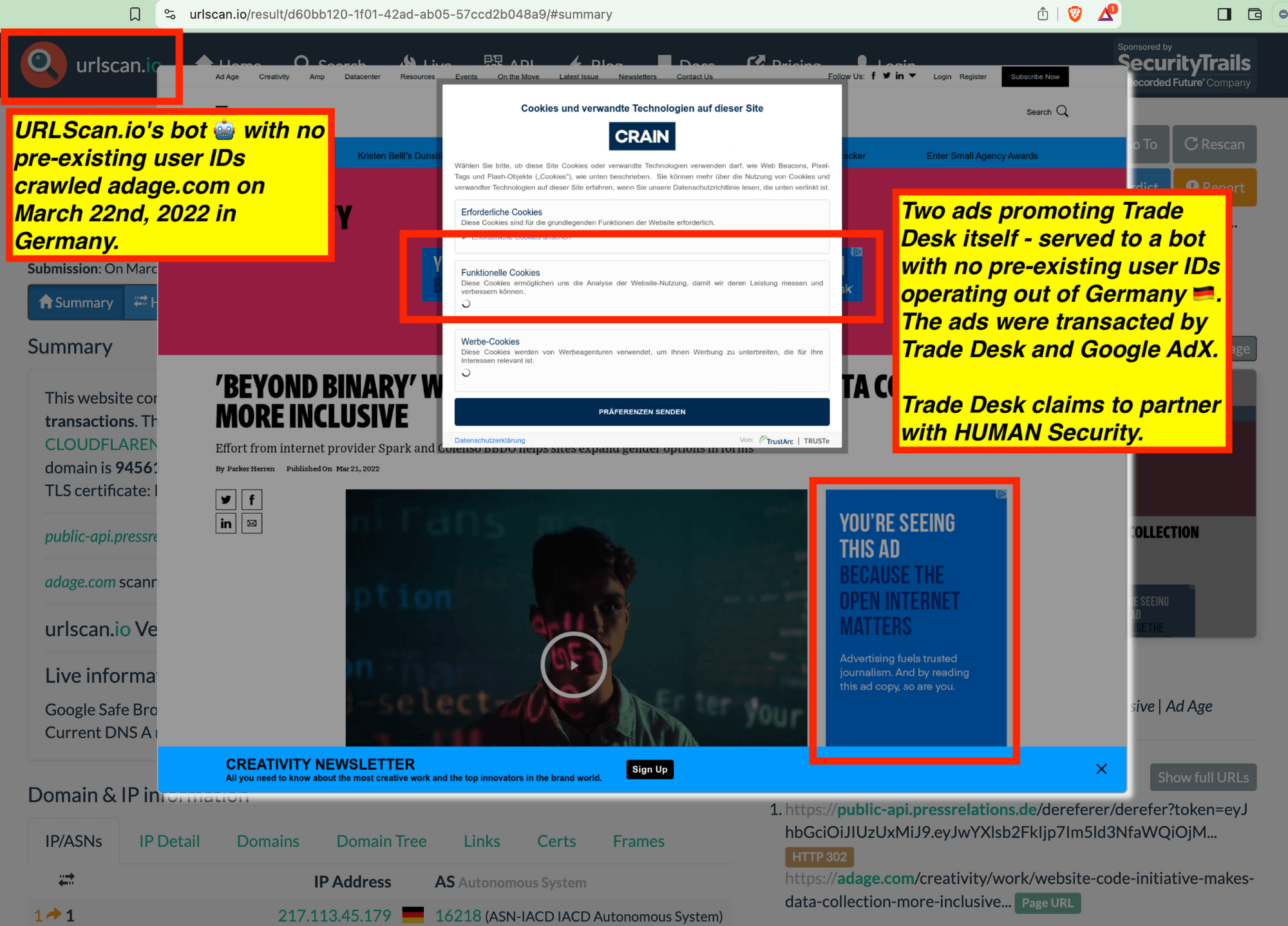

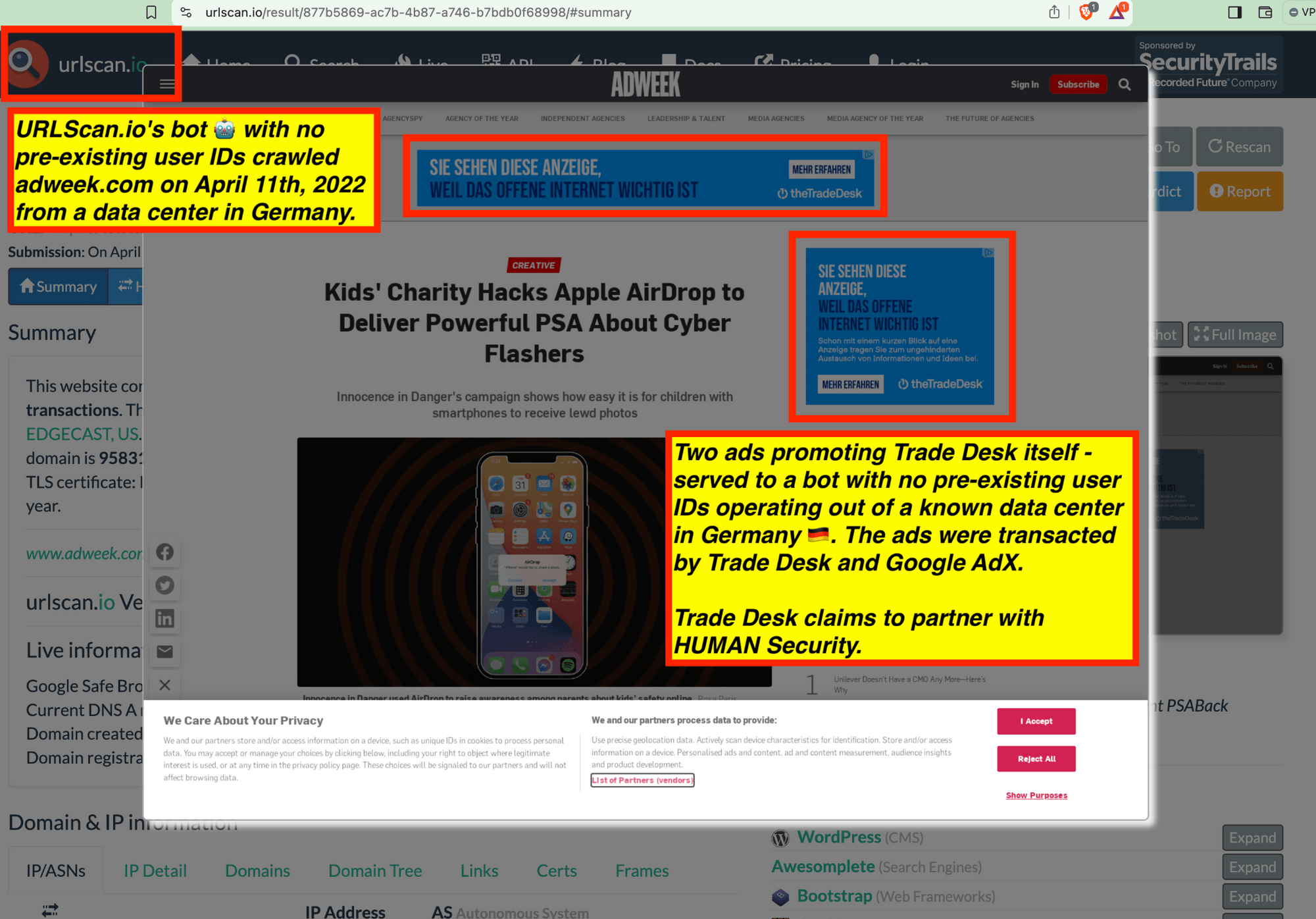

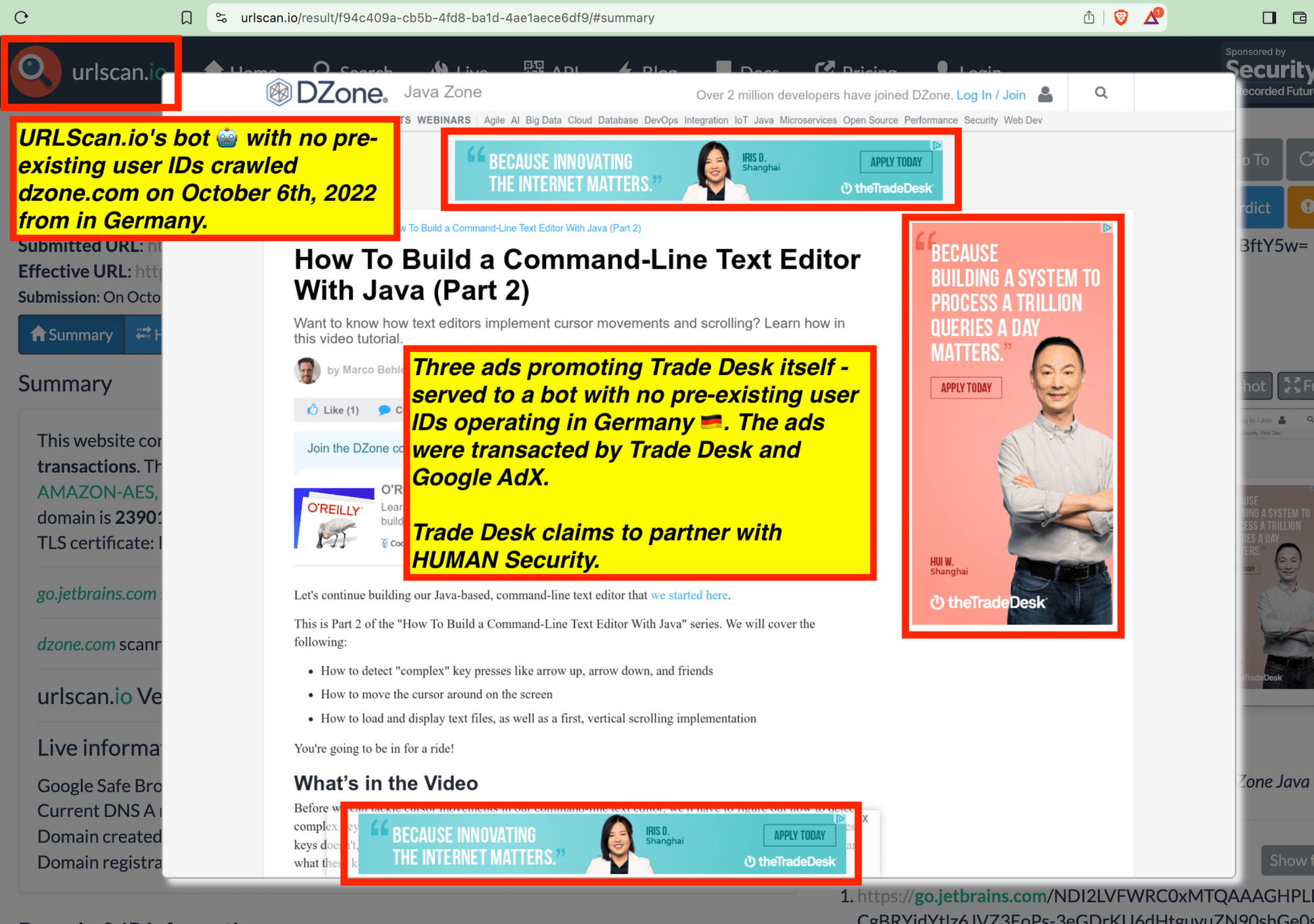

Ads from Trade Desk and Google promoting their own products or services were also observed being served to these bots.

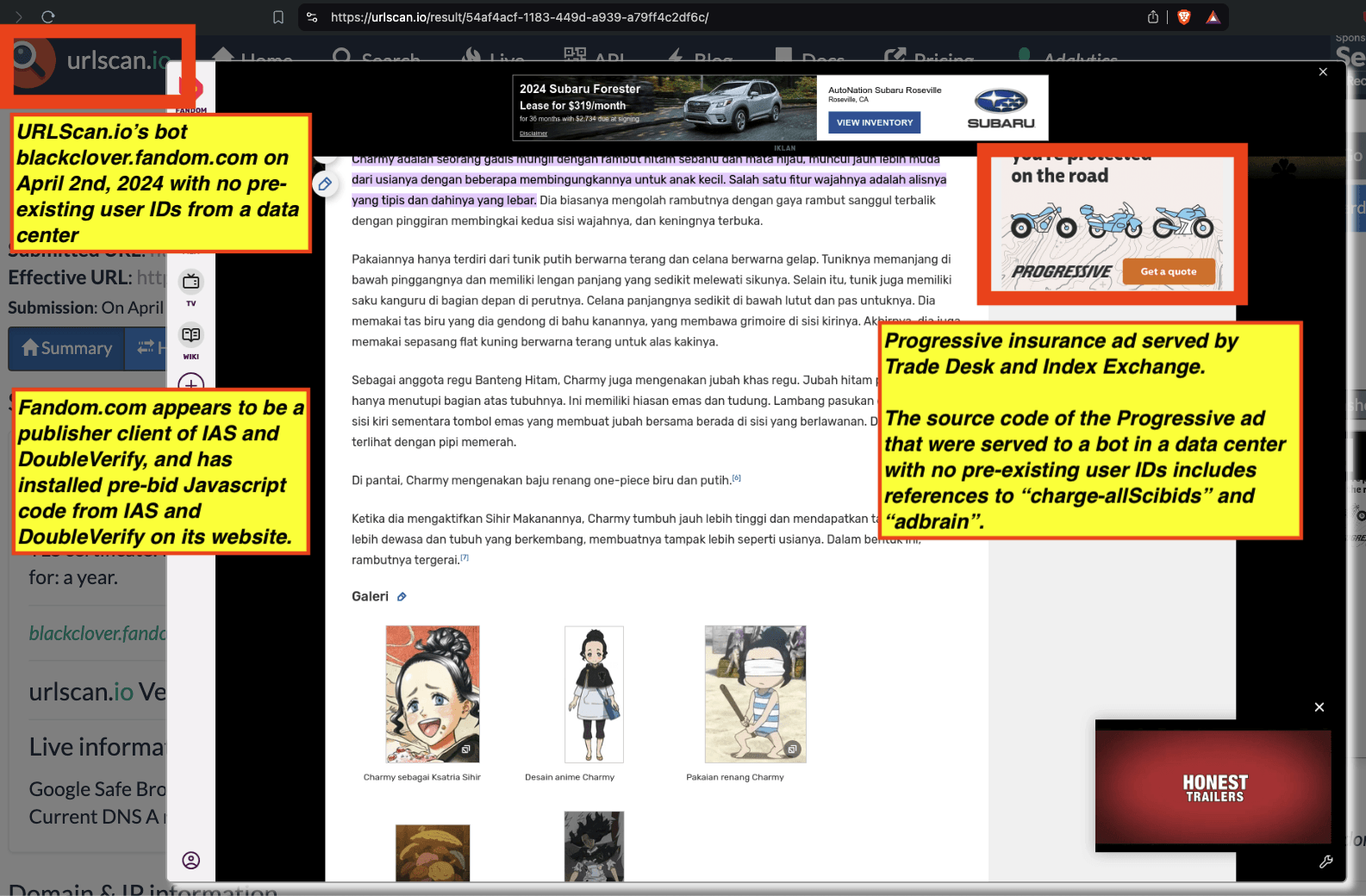

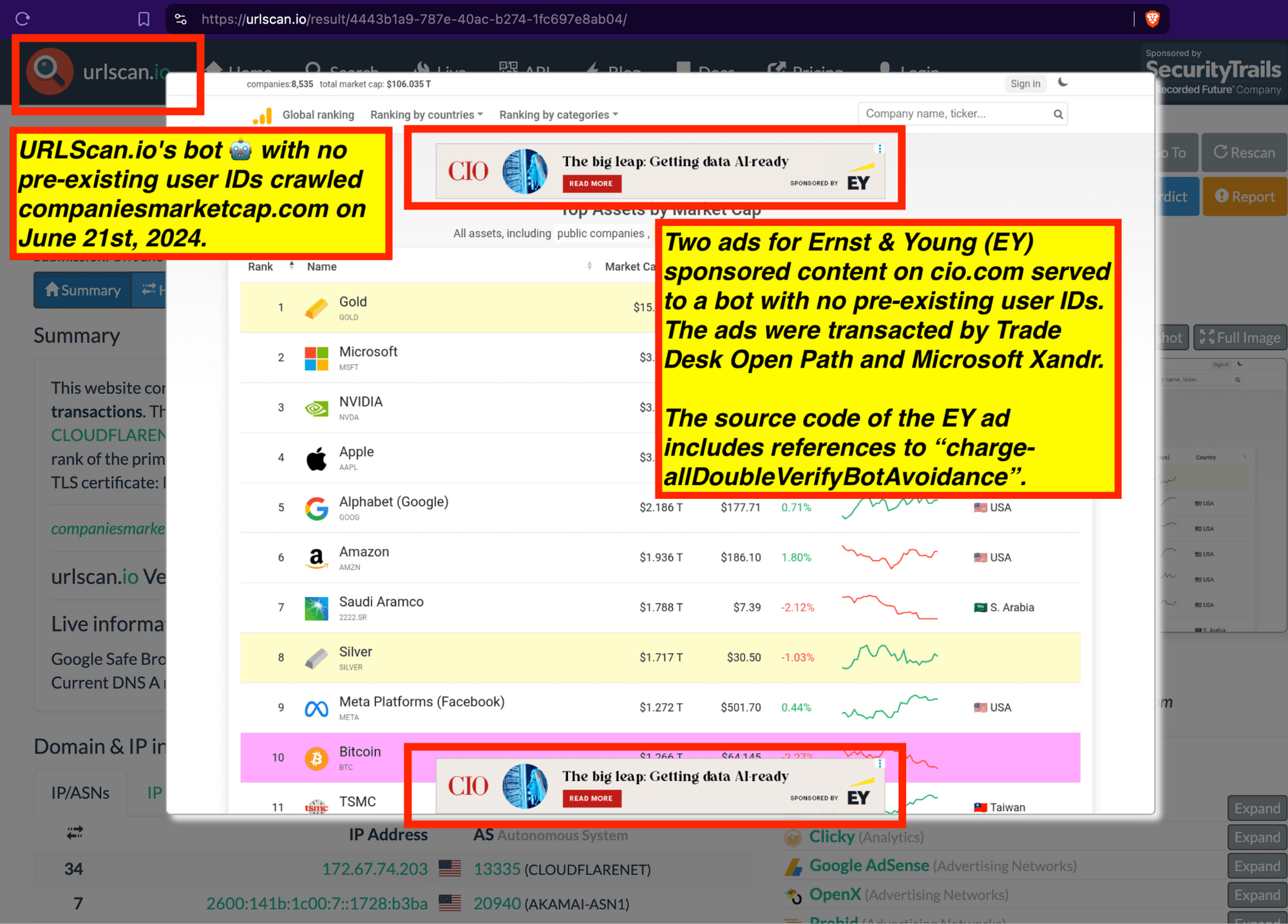

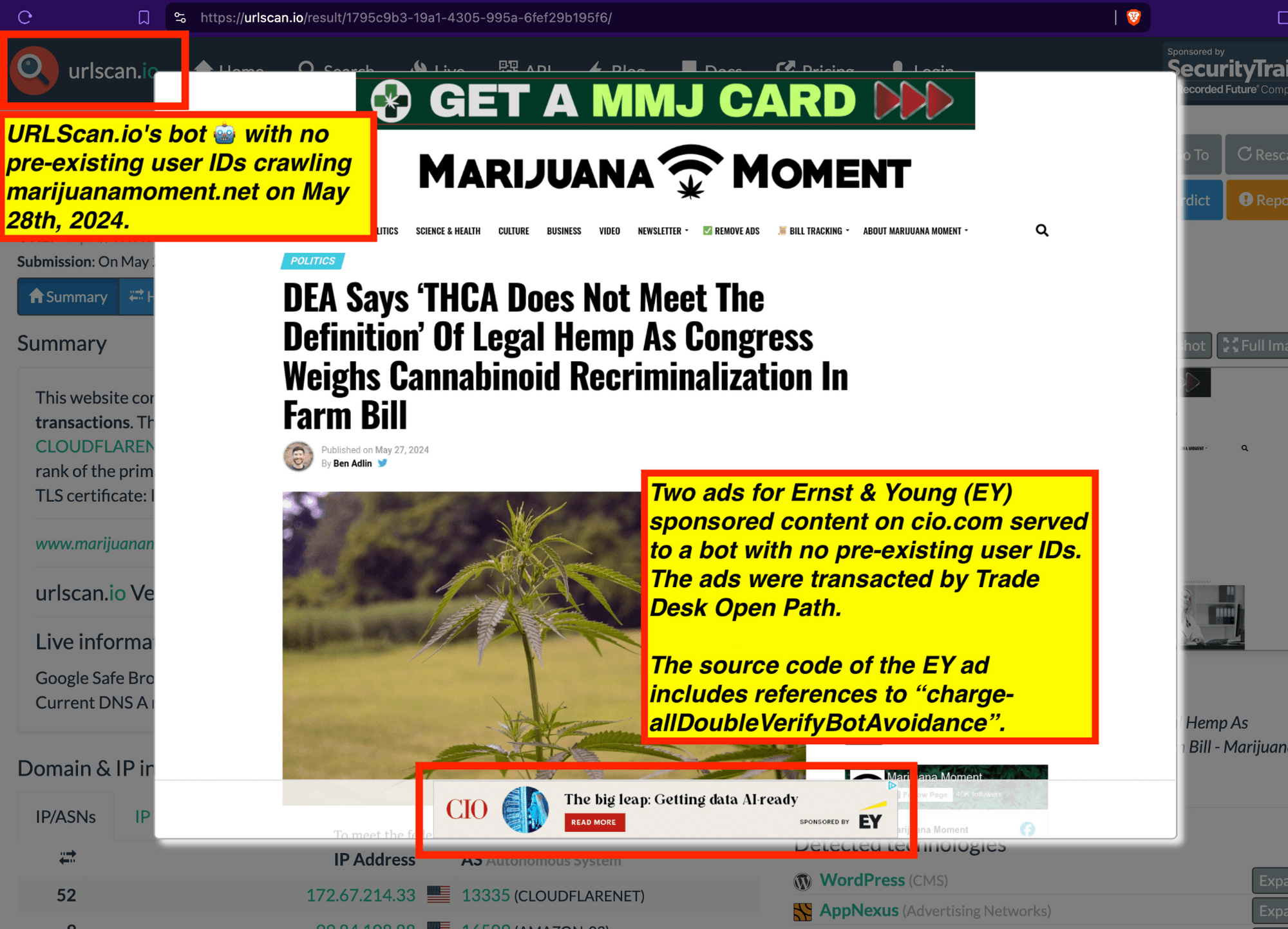

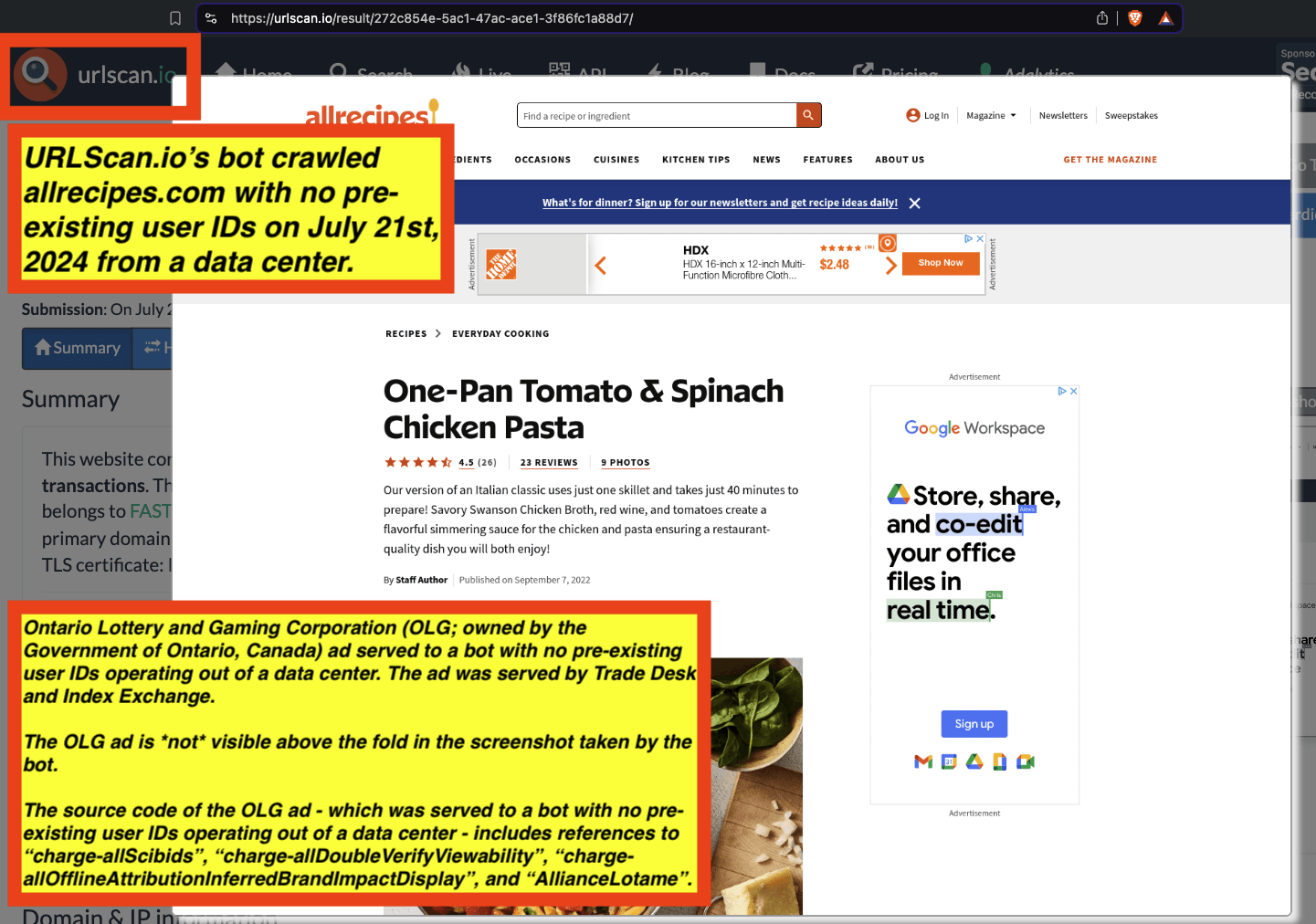

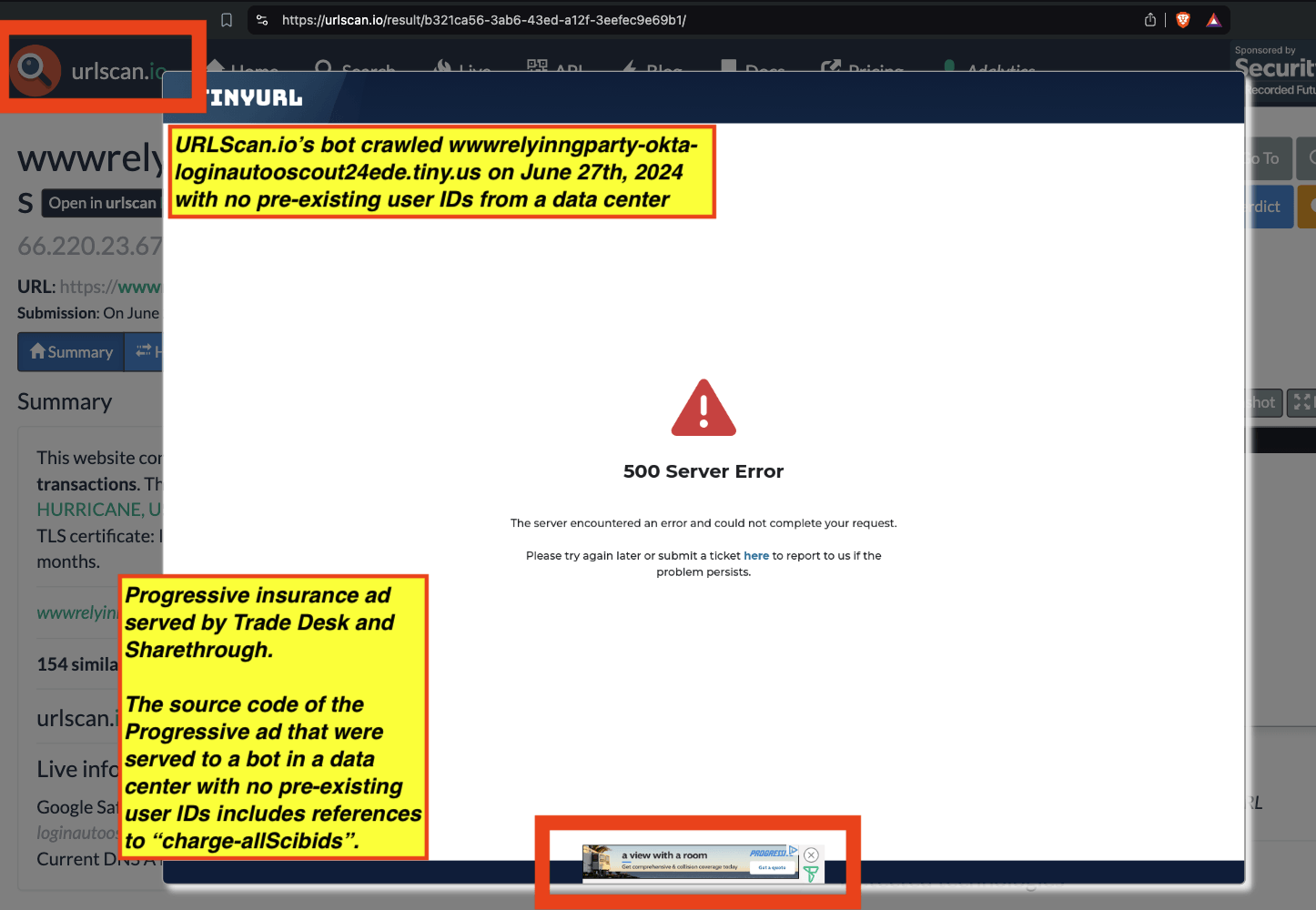

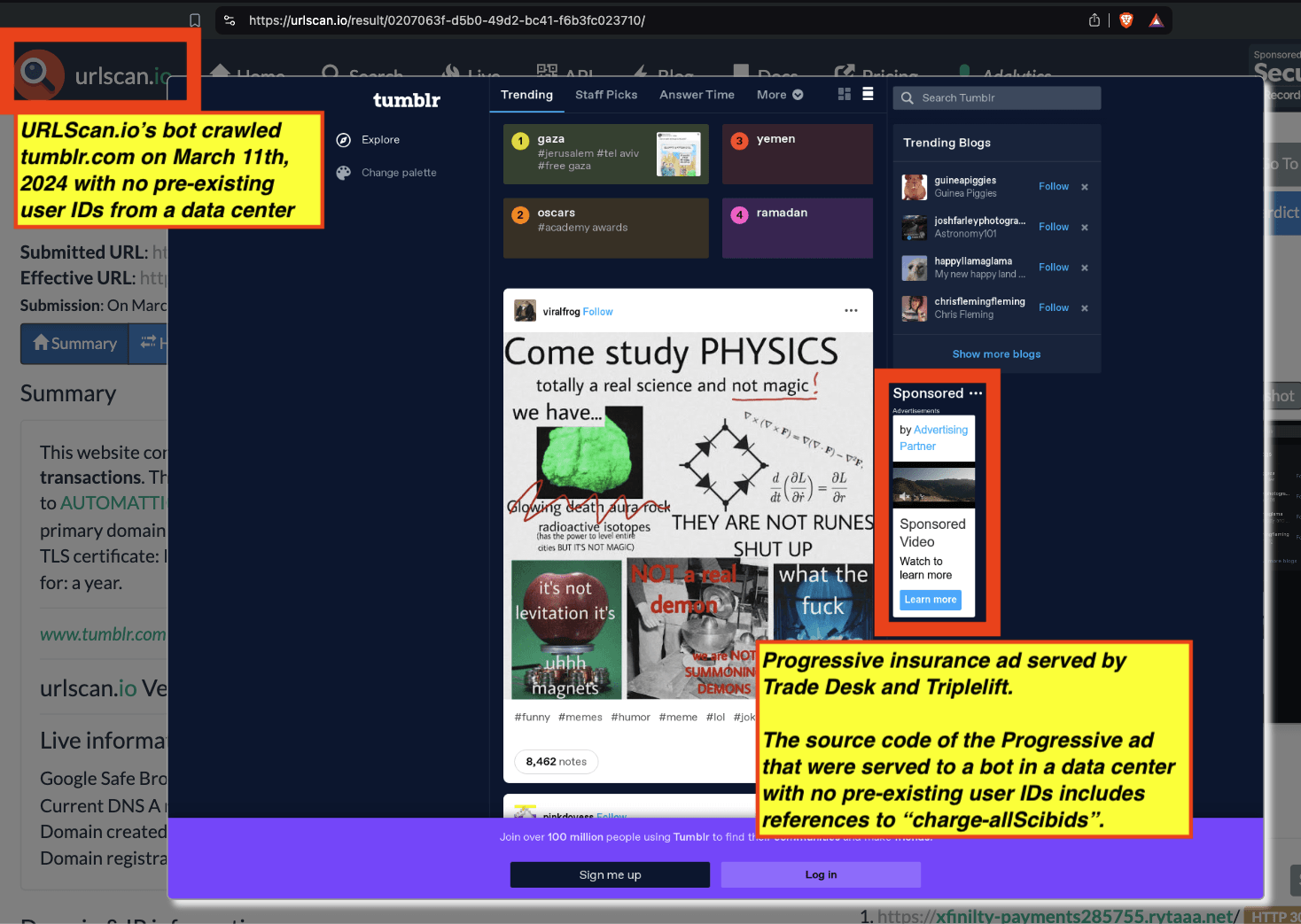

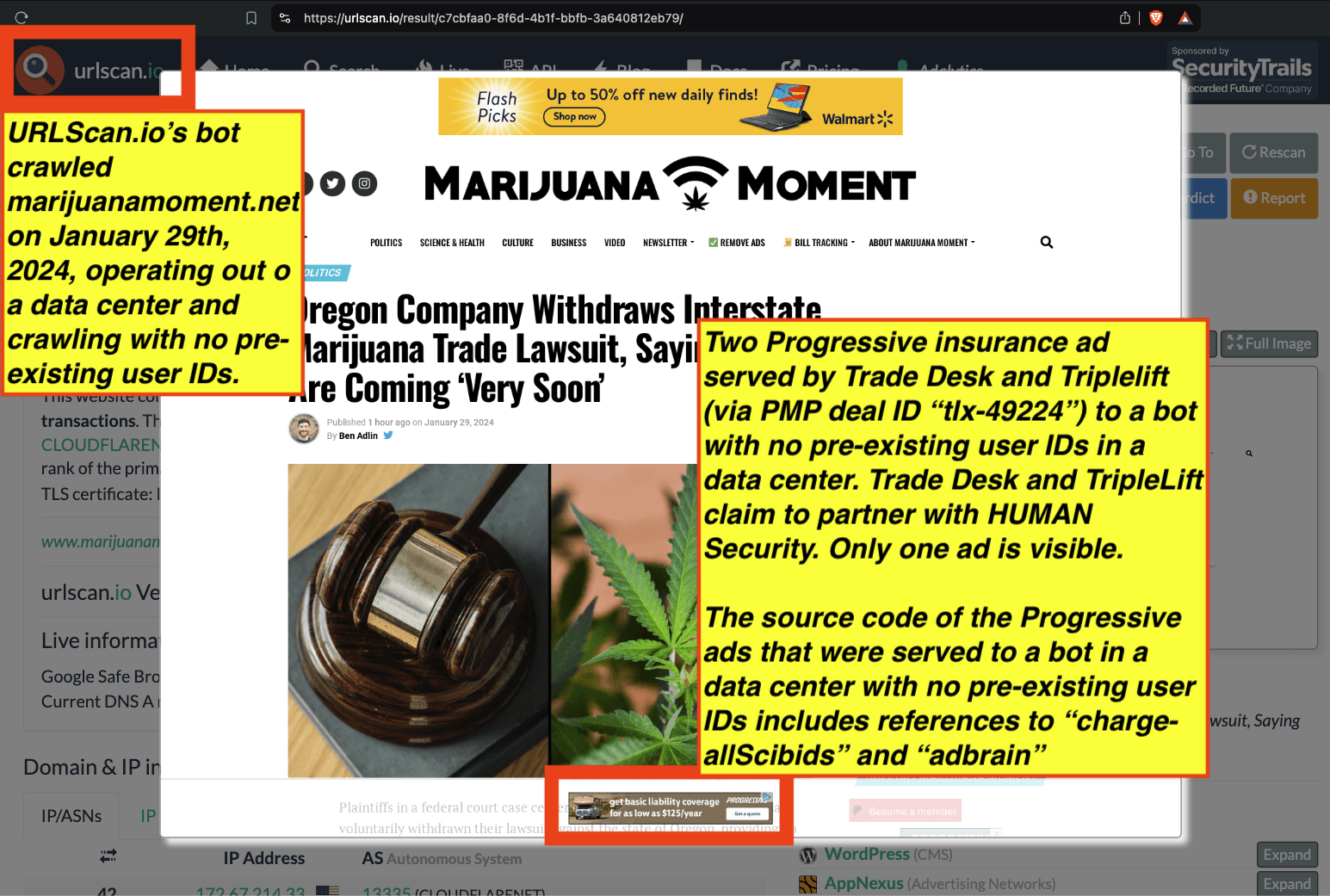

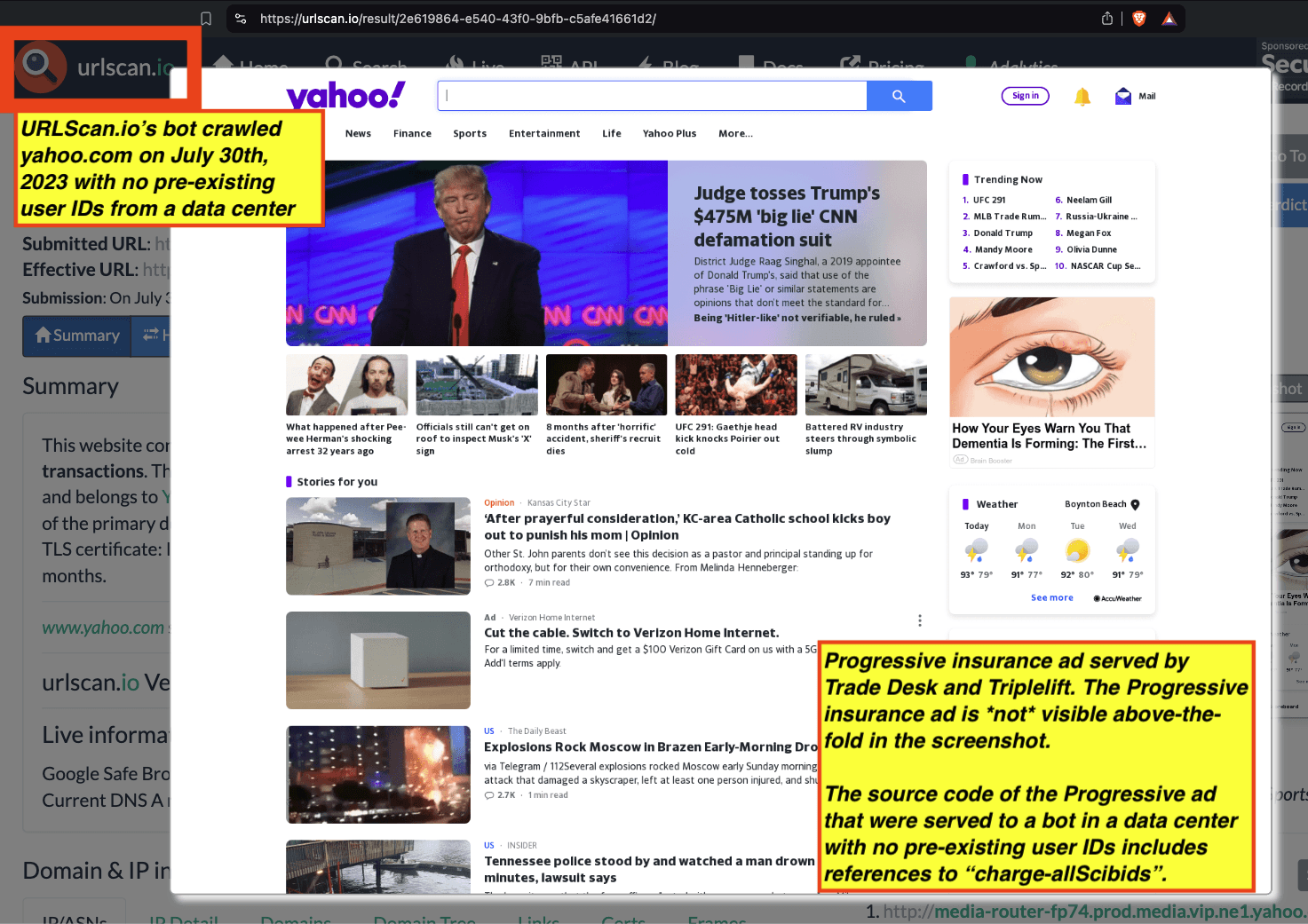

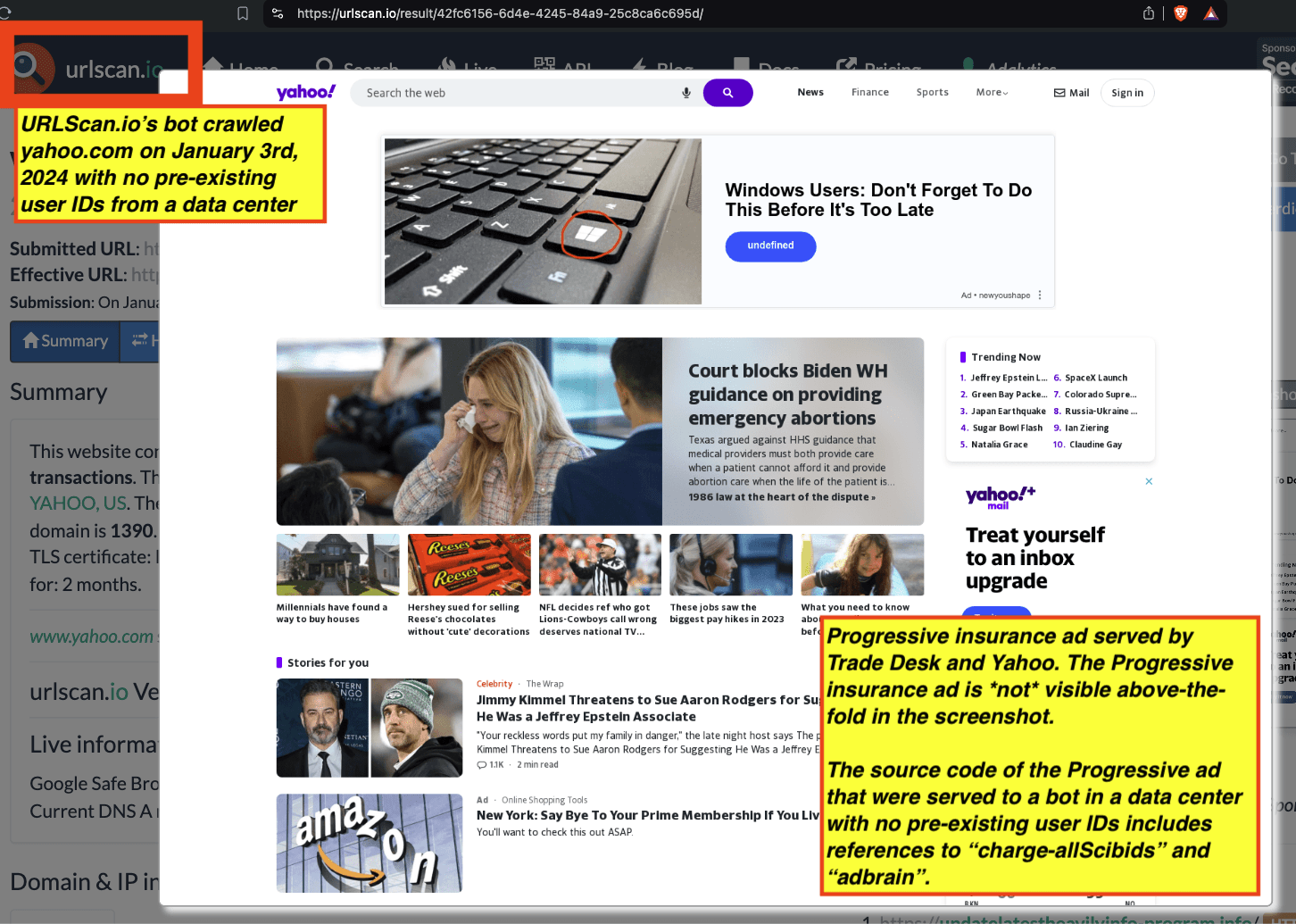

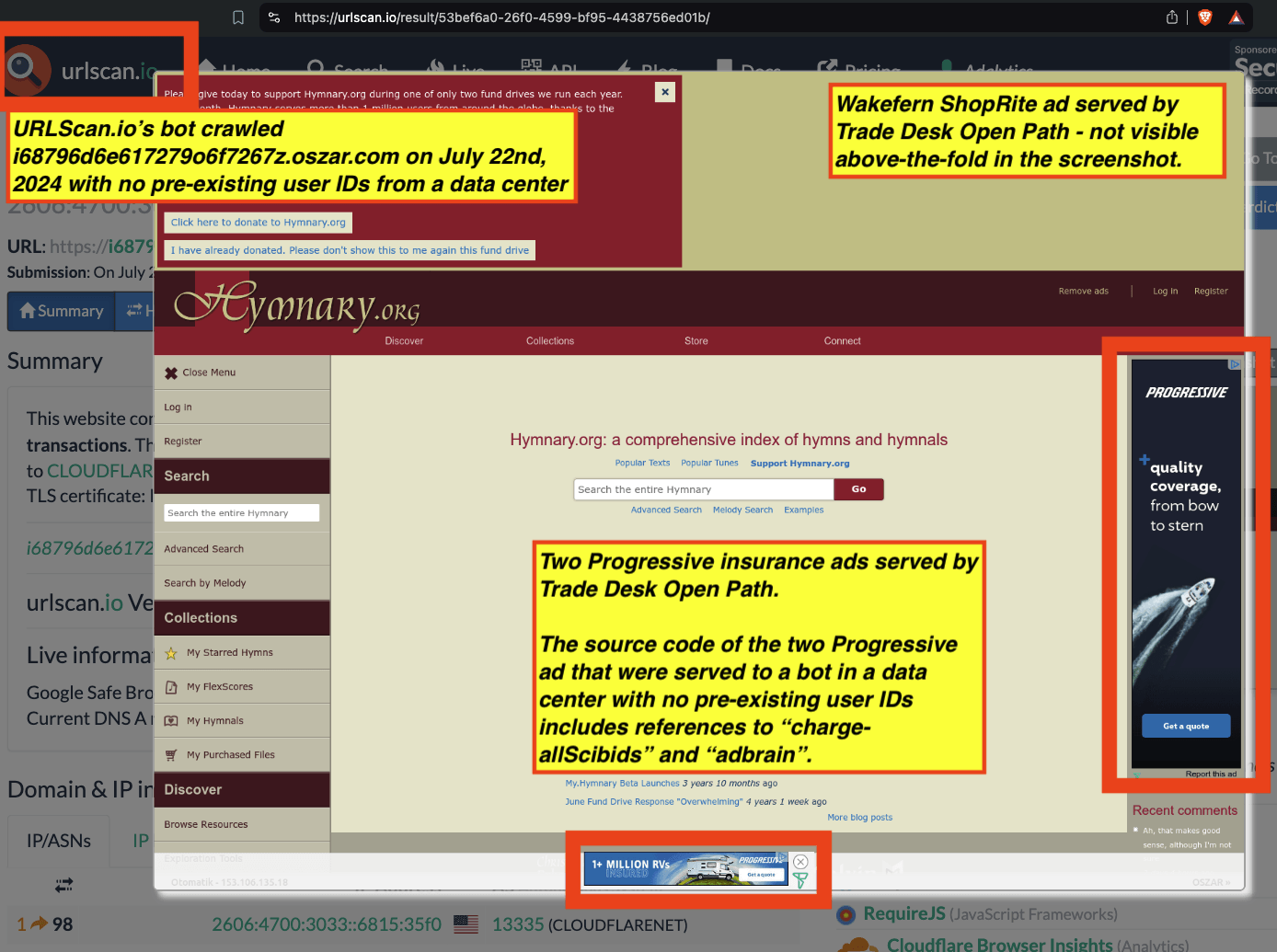

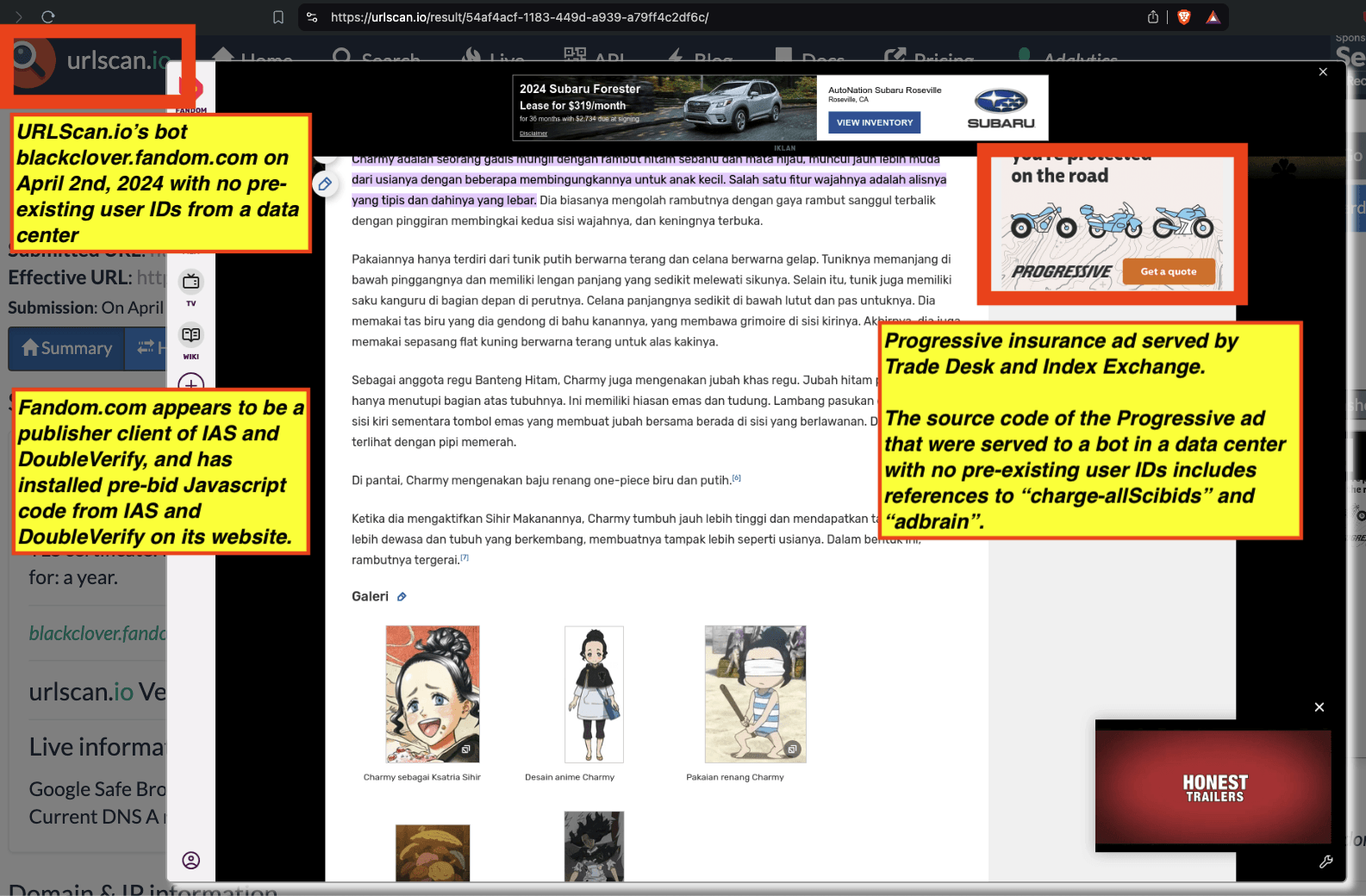

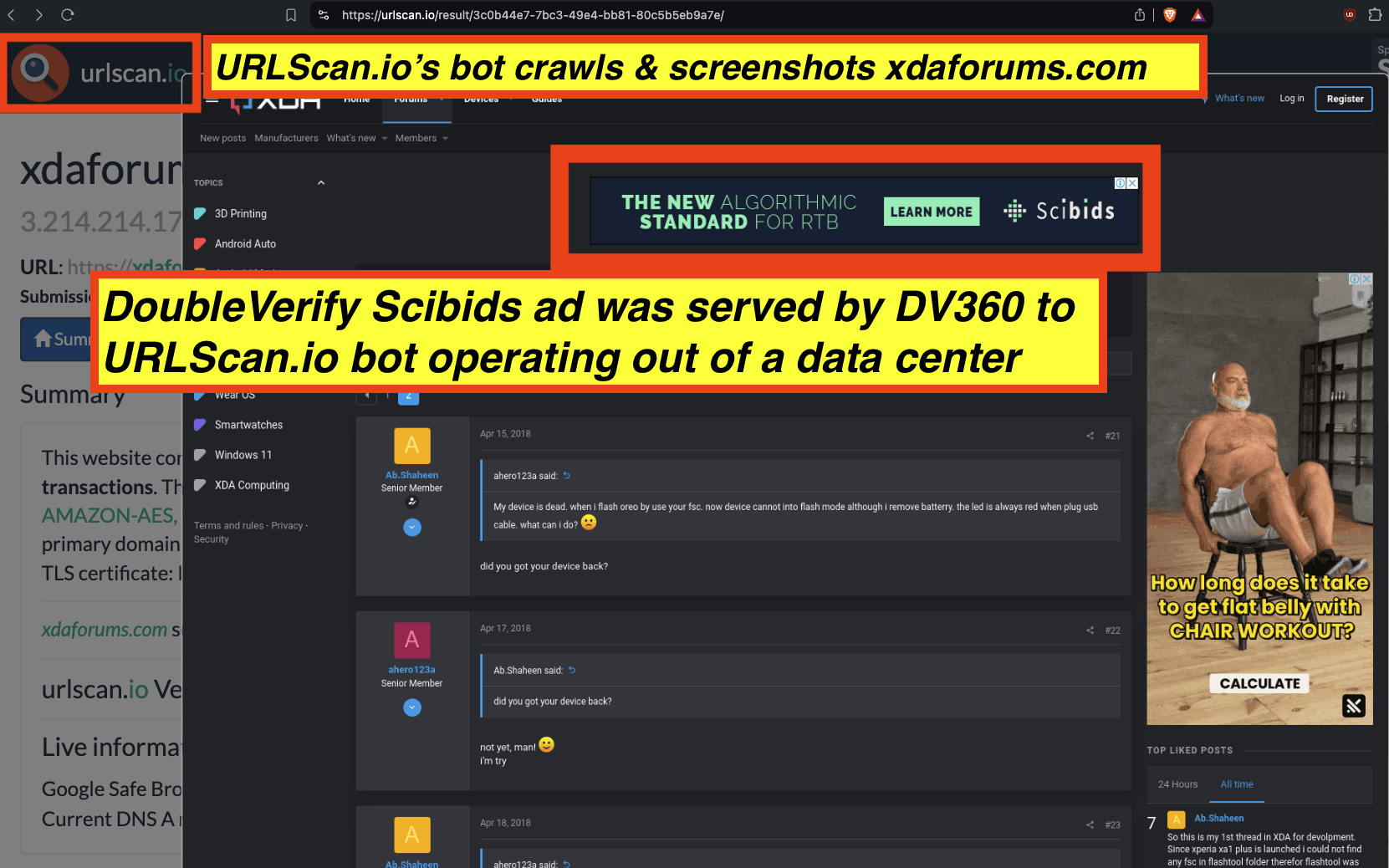

Some advertisers, such as the Government of Ontario, Canada and Progressive (insurance), appear to be utilizing DoubleVerify’s Scibids to target their ads. Scibids is a DoubleVerify subsidiary that allegedly uses AI to target ads. It appears that some of these DoubleVerify Scibids-targeted ads were served to declared bots with no long-term cookies or user IDs running out of data center server farms.

Google served ads promoting Scibids’ own products to bots with no pre-existing user IDs.

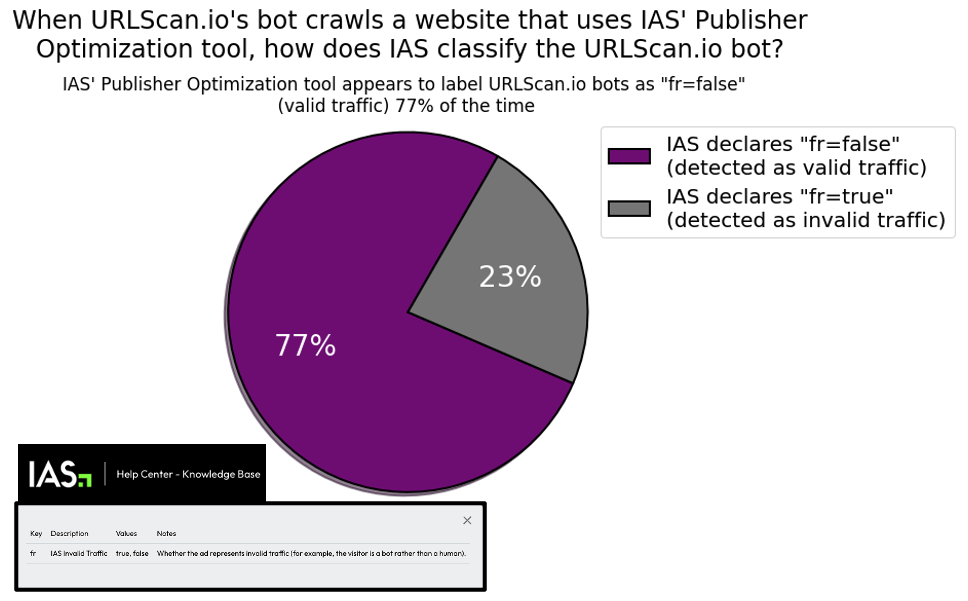

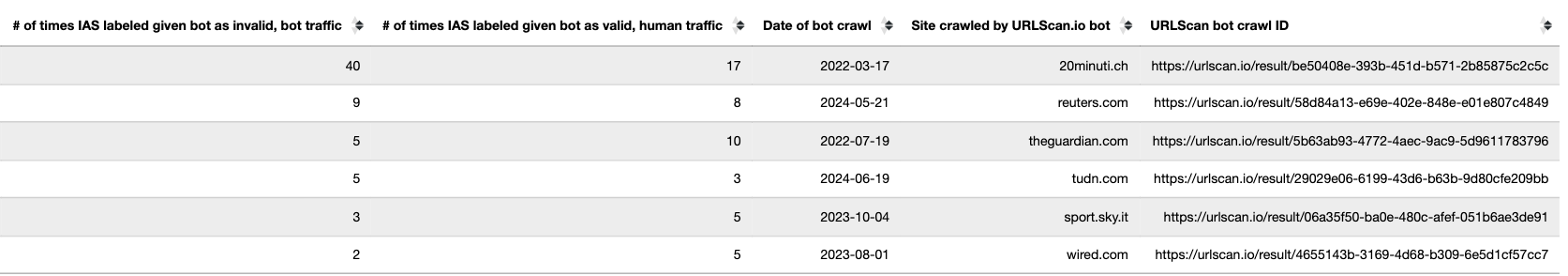

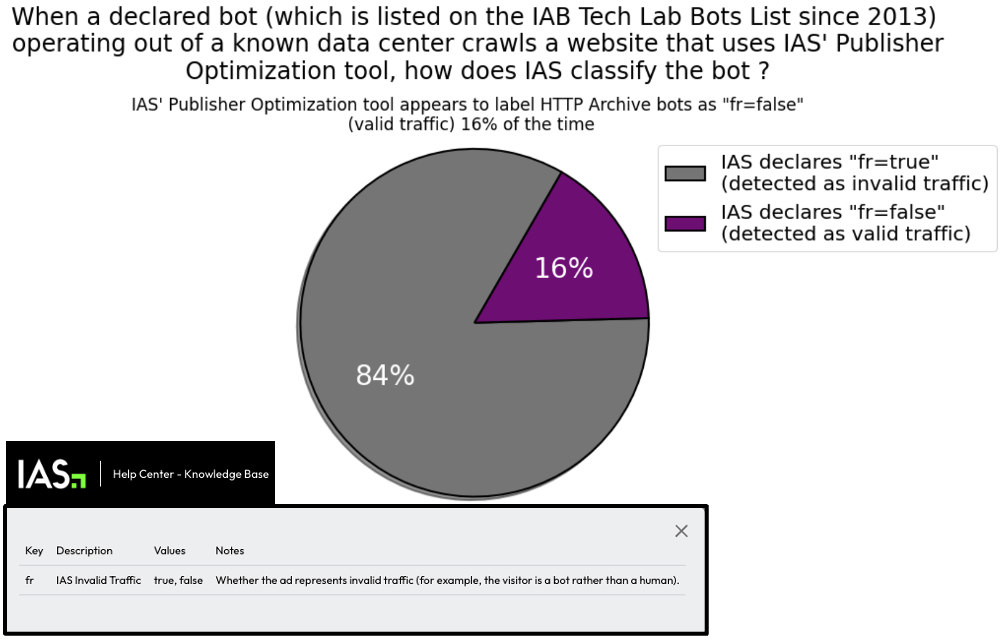

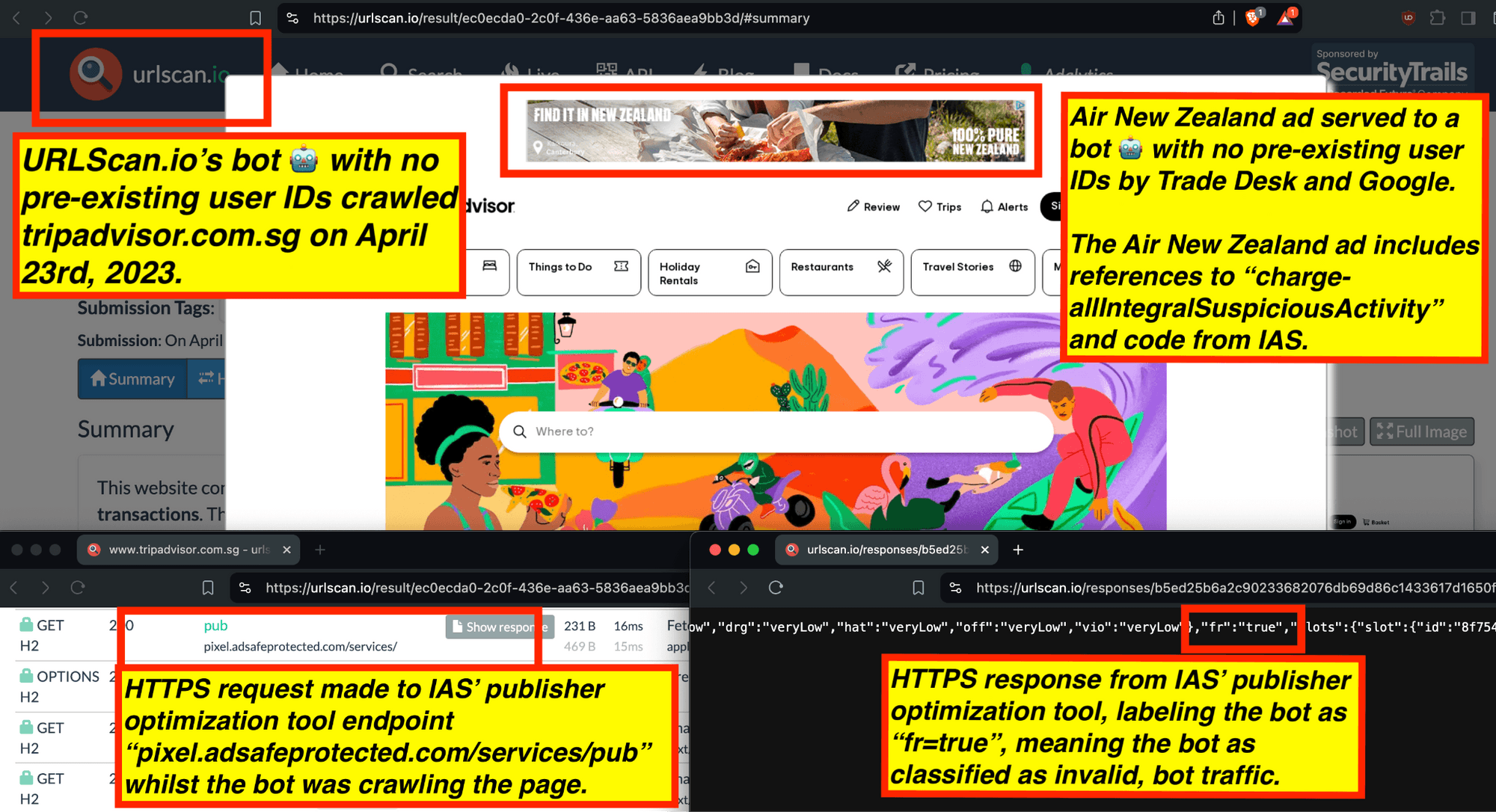

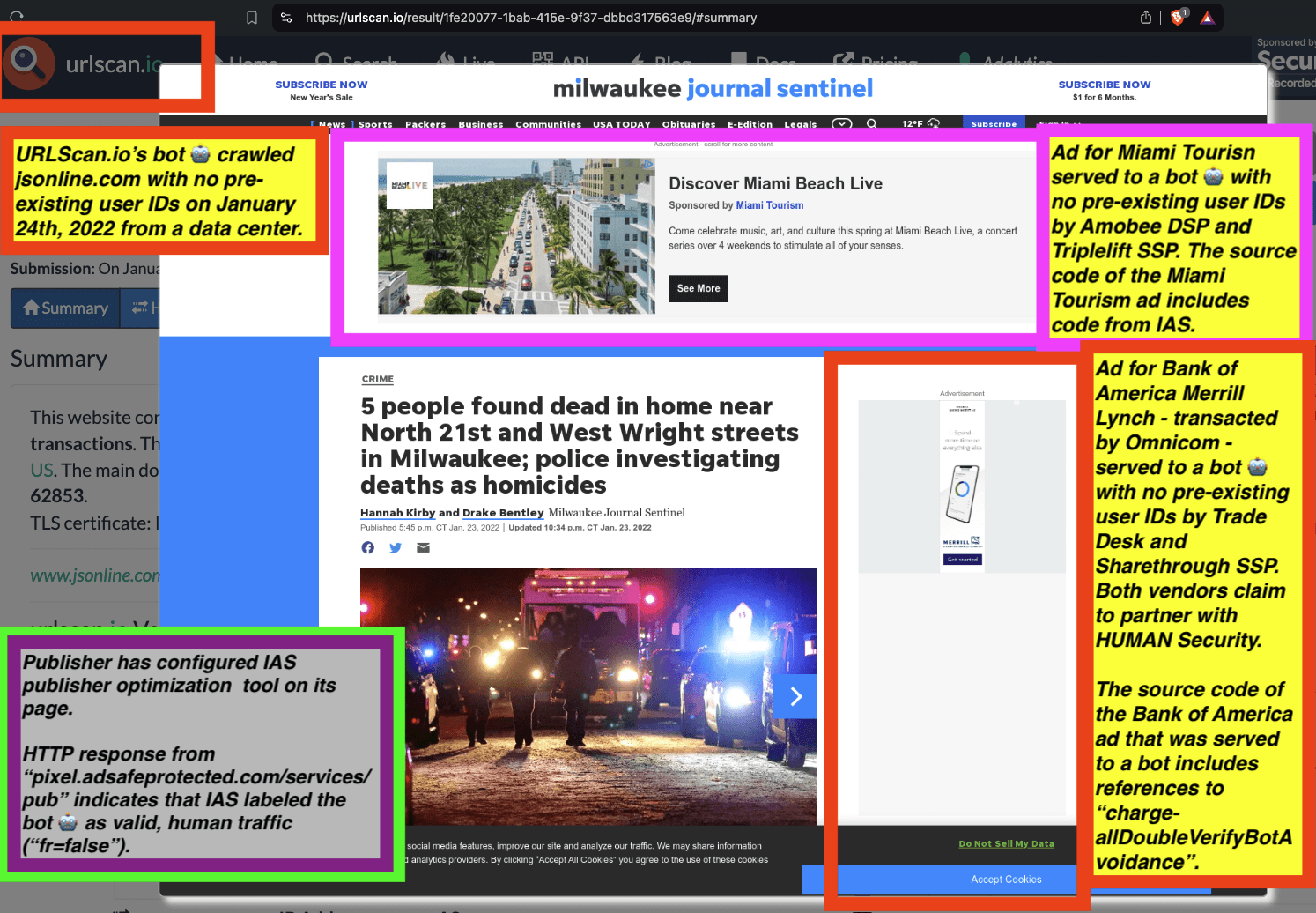

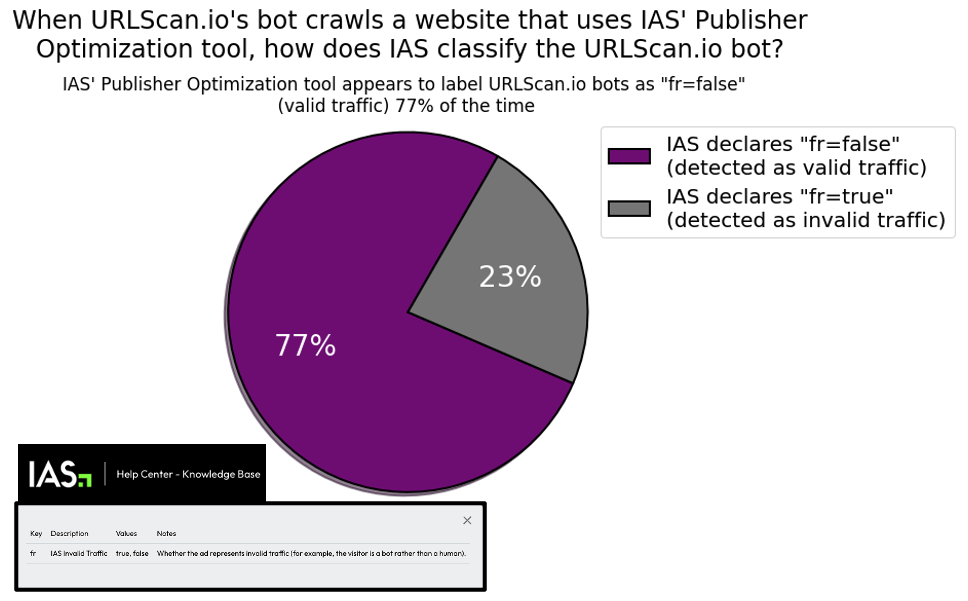

IAS’s publisher services pixel appears to label declared bots (whose user agents are on the IAB Tech Lab Spiders and Bots list since 2013) running out of known data center server farms as valid, human traffic 17% of the time (meaning for every 100 declared bots observed herein, IAS labels 17 bot page view sessions as valid, human traffic). For non-declared bots operating out of data centers or other IPs, IAS’s pixel appears to label the bot traffic as valid, human traffic 77% of the time in the observed sample dataset.

In some cases, it appears that IAS’ publisher optimization tool labeled the same bot and page view session as both valid, human traffic (“fr=false”) and simultaneously as invalid, bot traffic (“fr=true”).

Furthermore, in some cases, even when IAS’ publisher optimization tool identified a given user as a bot, there were still ads served to the bot on behalf of advertisers who appeared to be using IAS’ advertiser-side tools.

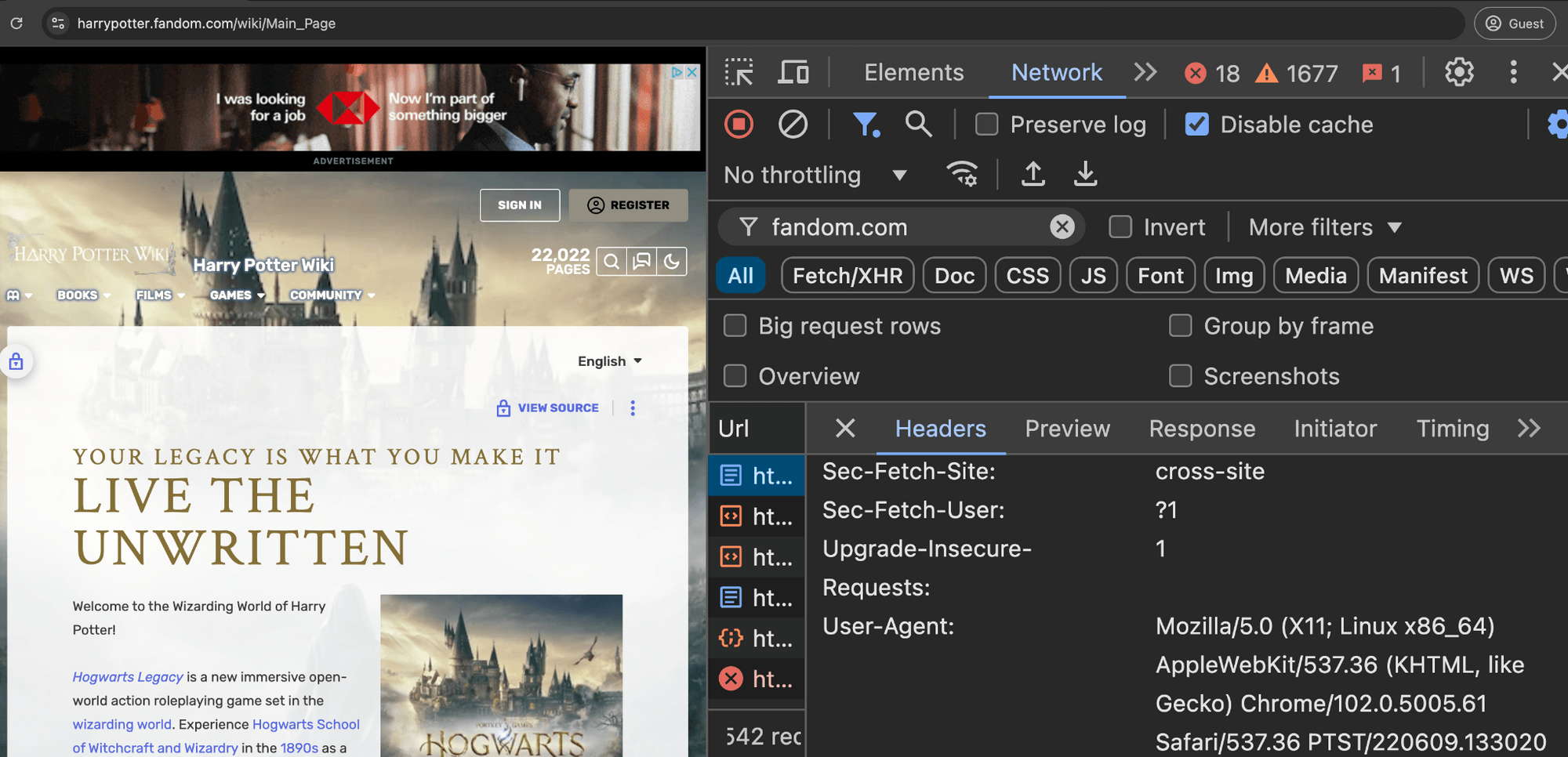

Many premium publishers and ad tech industry trade press websites were observed serving ads to bots. For example, the trade press sites adweek.com and adage.com were observed serving Trade Desk ads promoting Trade Desk’s own services to bots. Many publishers which appear to use IAS’ and/or DoubleVerify’s publisher optimization tools - such as Wall Street Journal, Reuters, Fandom, Forbes, Weather.com, Condé Nast, and Washington Post - were observed serving major advertisers’ ads to bots in data centers for years.

Many media agencies were observed transacting clients’ ads to declared bots on the IAB Tech Lab Spiders and Bots list running out of data center server farms. These included: GroupM / WPP, Interpublic Group (IPG), Dentsu, Publicis Groupe, Havas, Omnicom, Horizon Media, and MiQ.

Google Ad Manager (GAM) - a publisher ad server - was observed transacting millions of ad impressions from thousands of different advertisers to bots. Some of these bots were declared bots operating out of Google’s own data center.

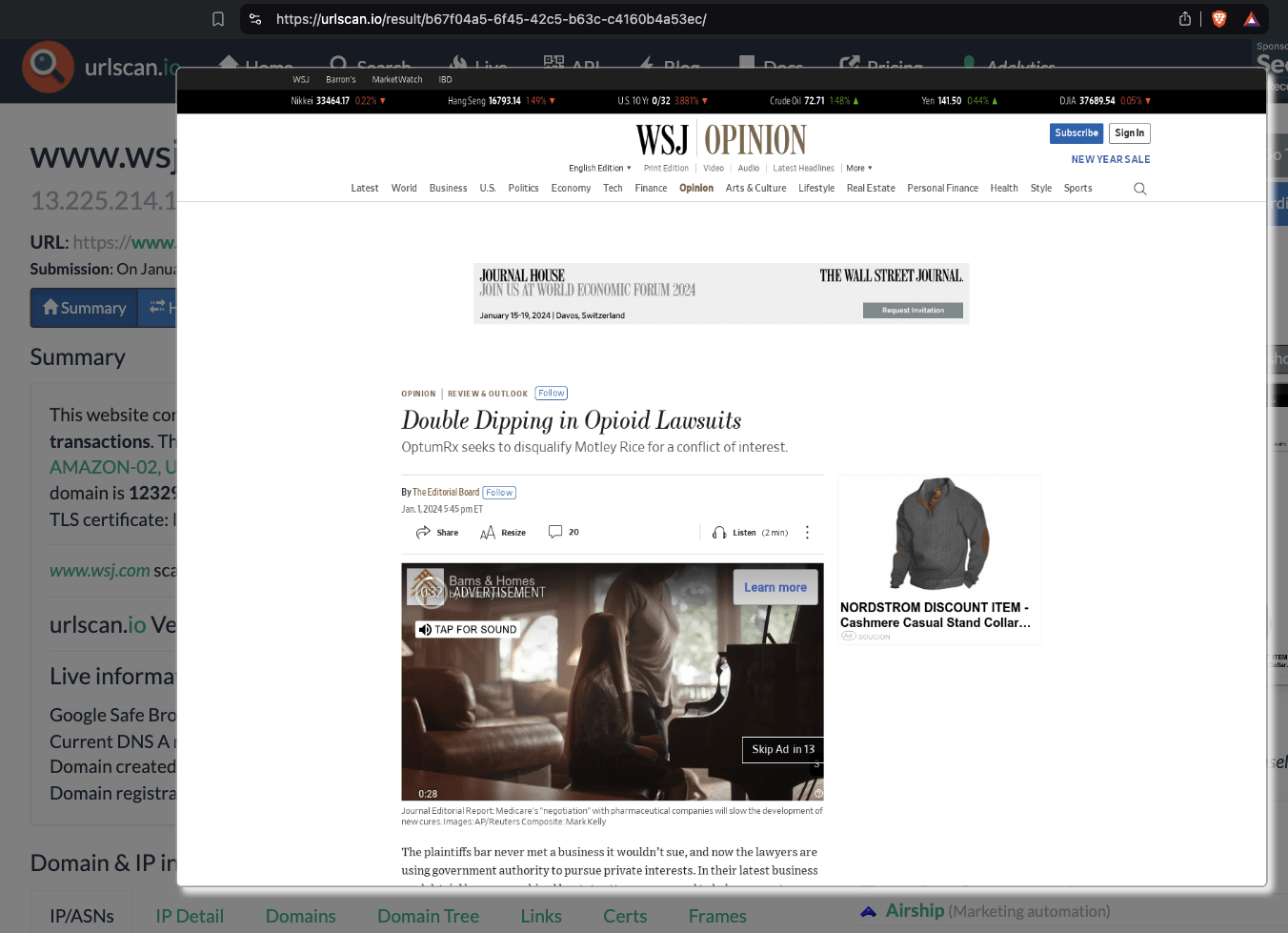

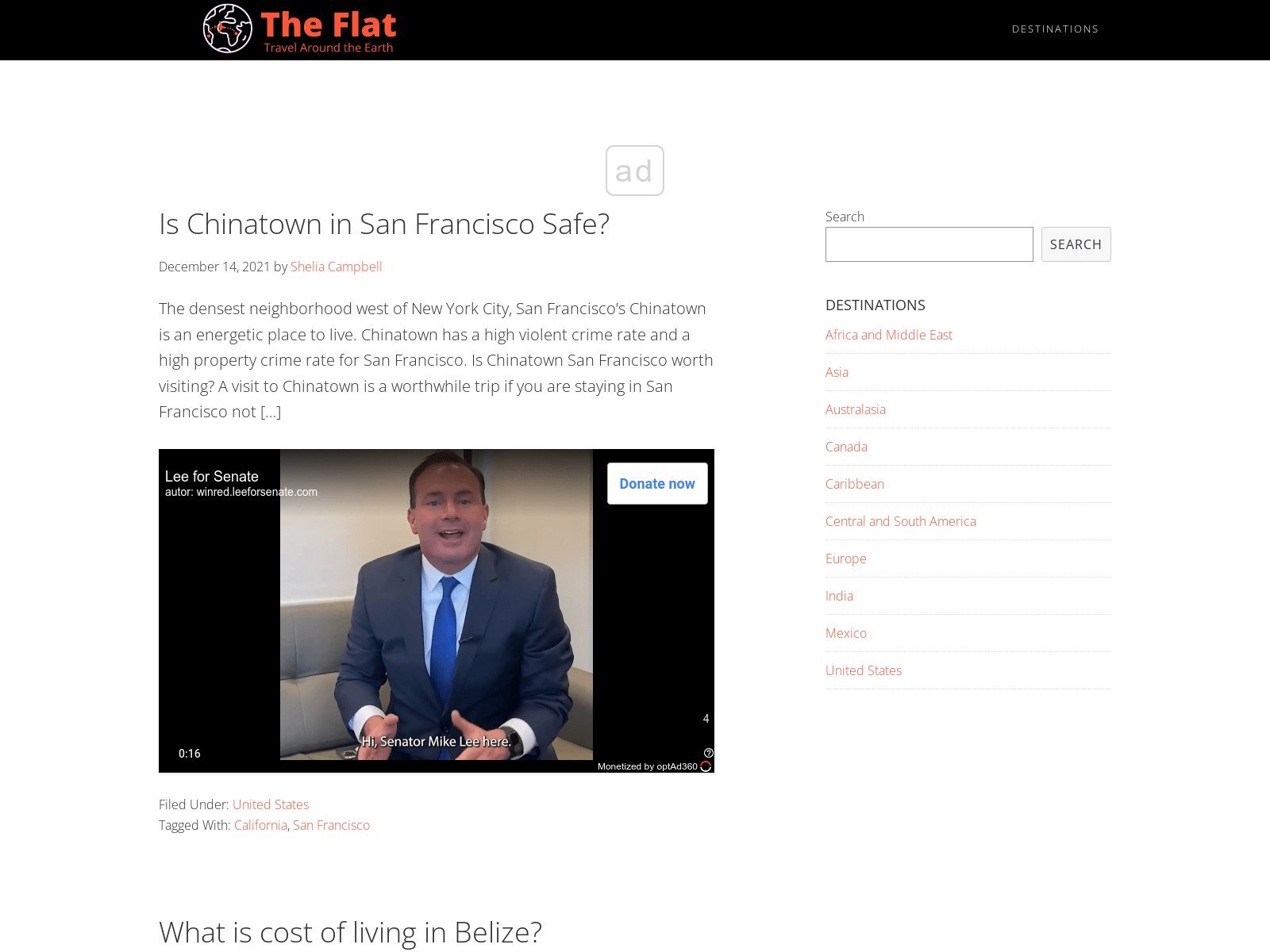

YouTube was observed serving TrueView skippable in-stream video ads for many different brands for years to bots running out of data center server farms - via the Google Video Partners (GVP) network. Some of these TrueView ads were served to declared bots operating out of Google Cloud data centers. Advertisers whose TrueView ads were served to bots include Senator Mike Lee (R-UT), the Wall Street Journal, Miami-Dade County government Special Victims Bureau, Sexual Predator and Offender Unit (SPOU), Ernst & Young, Lego, Kellogg’s, Kenvue (f/k/a J&J Consumer), Mercedes-Benz, and Visa.

What are bots, and how prevalent are they on the open internet?

What is the estimated financial impact of bot traffic or ad fraud on digital advertising?

Background - What is “invalid traffic” in ad tech industry jargon?

Background - What is the IAB Tech Lab Spiders and Bots list?

Background: OpenRTB Protocol Guidance on ad bidding and serving to bots

Background - Trustworthy Accountability Group (TAG) - Certified Against Fraud

Background - Media Rating Council (MRC) Accreditation for IVT Detection

Background - Ad tech vendors which partner with HUMAN Security

Background - What is “Pre-bid targeting” in digital advertising?

Background - Integral Ad Science (IAS) ad fraud solutions

Adalytics public interest research objectives

Research Methodology - Bot web traffic source #1 - HTTP Archive

Research Methodology - Bot web traffic source #2 - Anonymous web crawler vendor

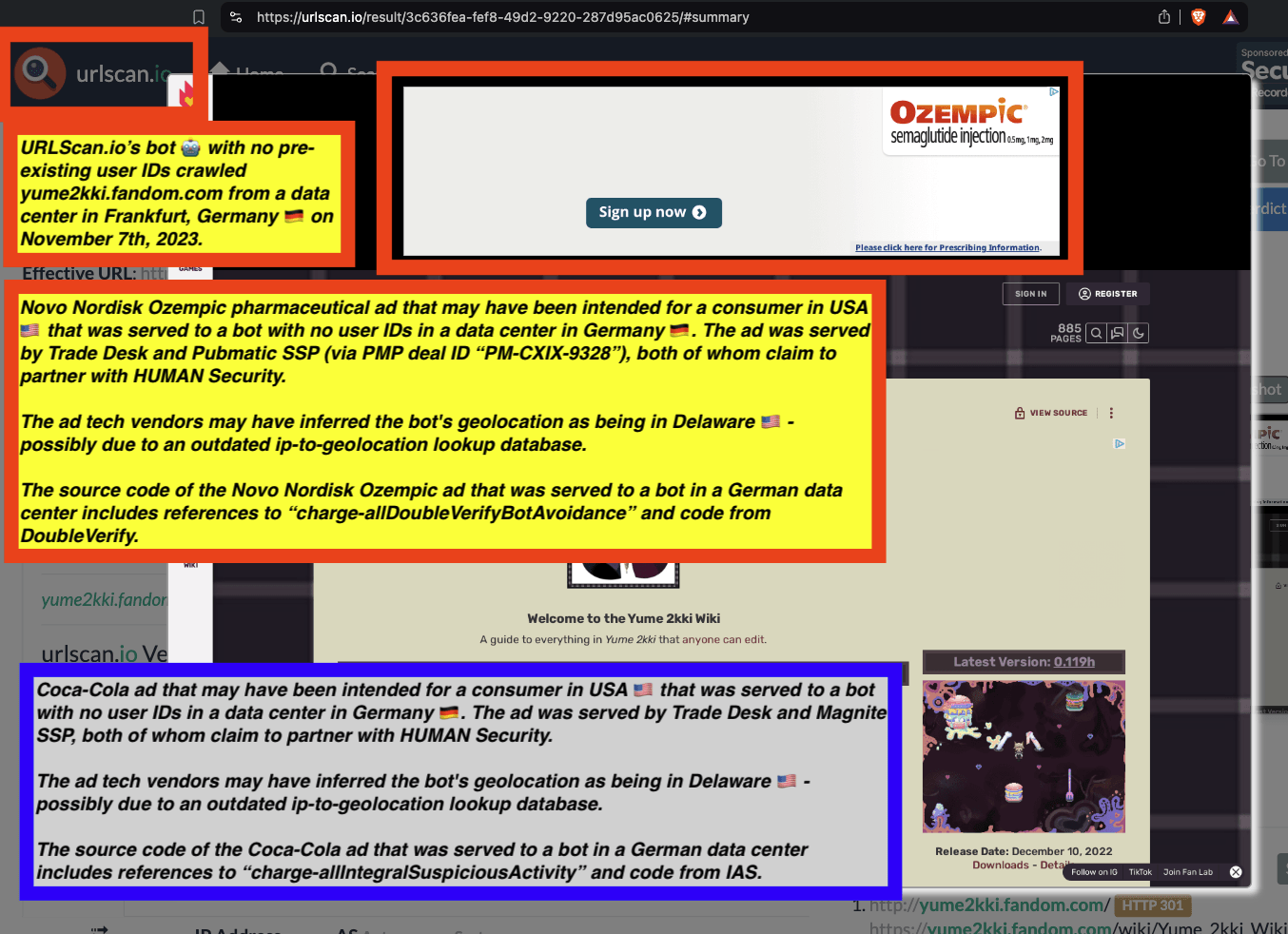

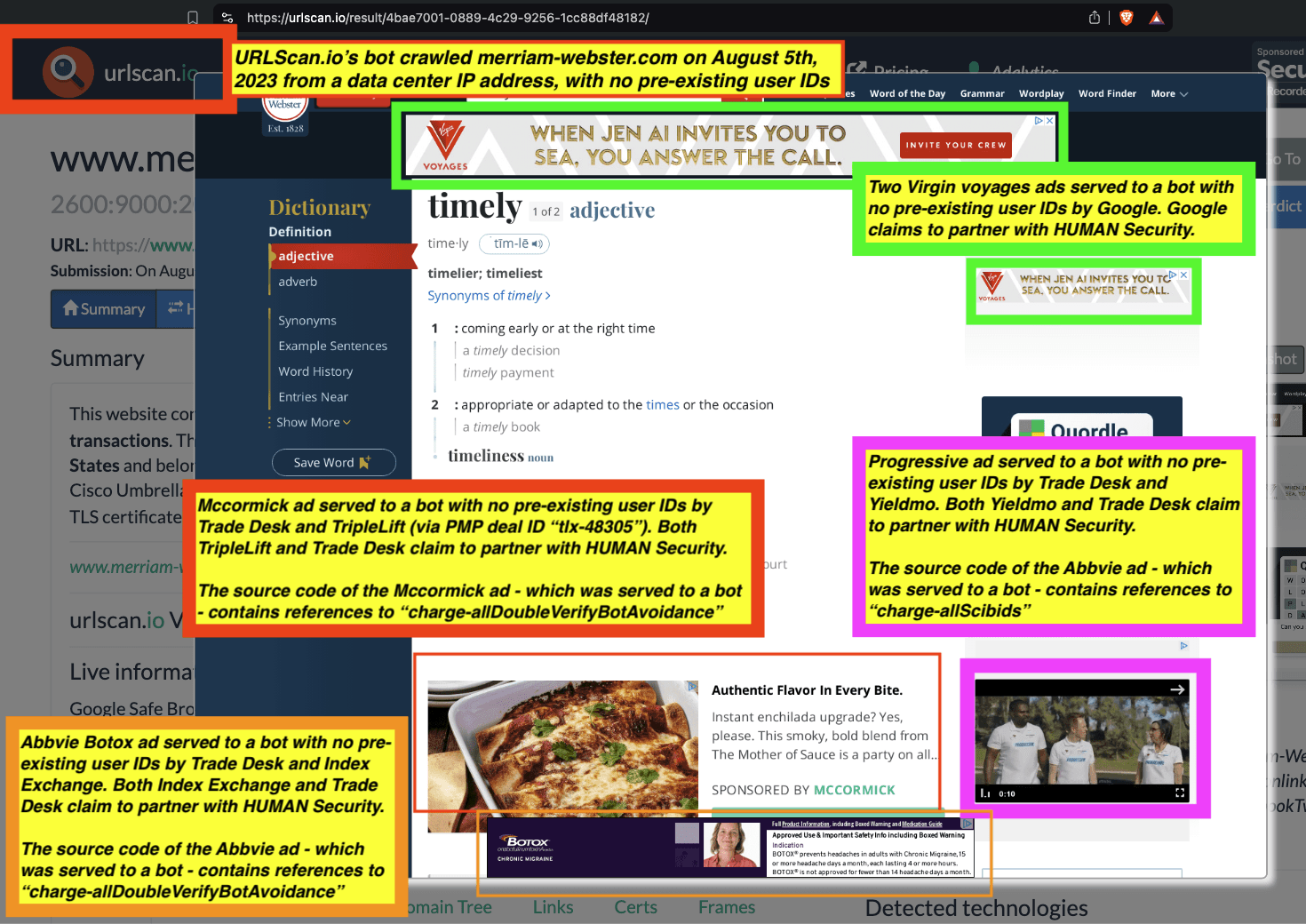

Research Methodology - Bot web traffic source #3 - URLScan.io

Research Methodology - Digital forensics of ad source code

Google DV360 ads served to bots

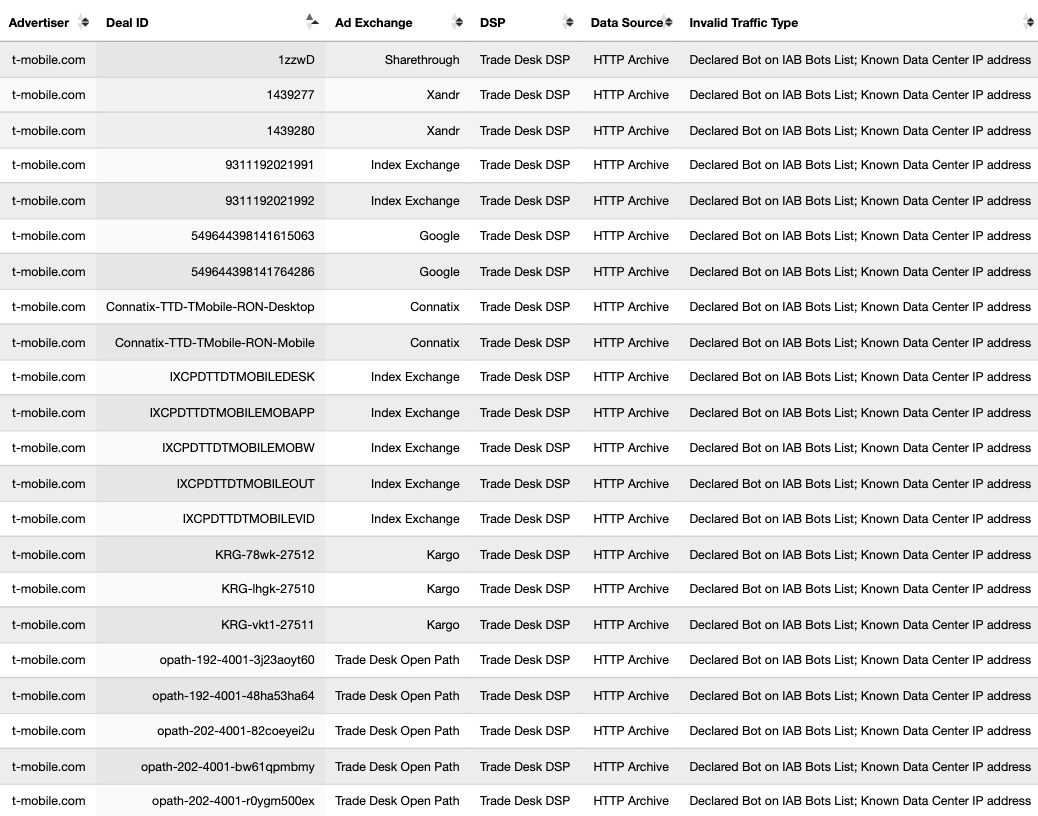

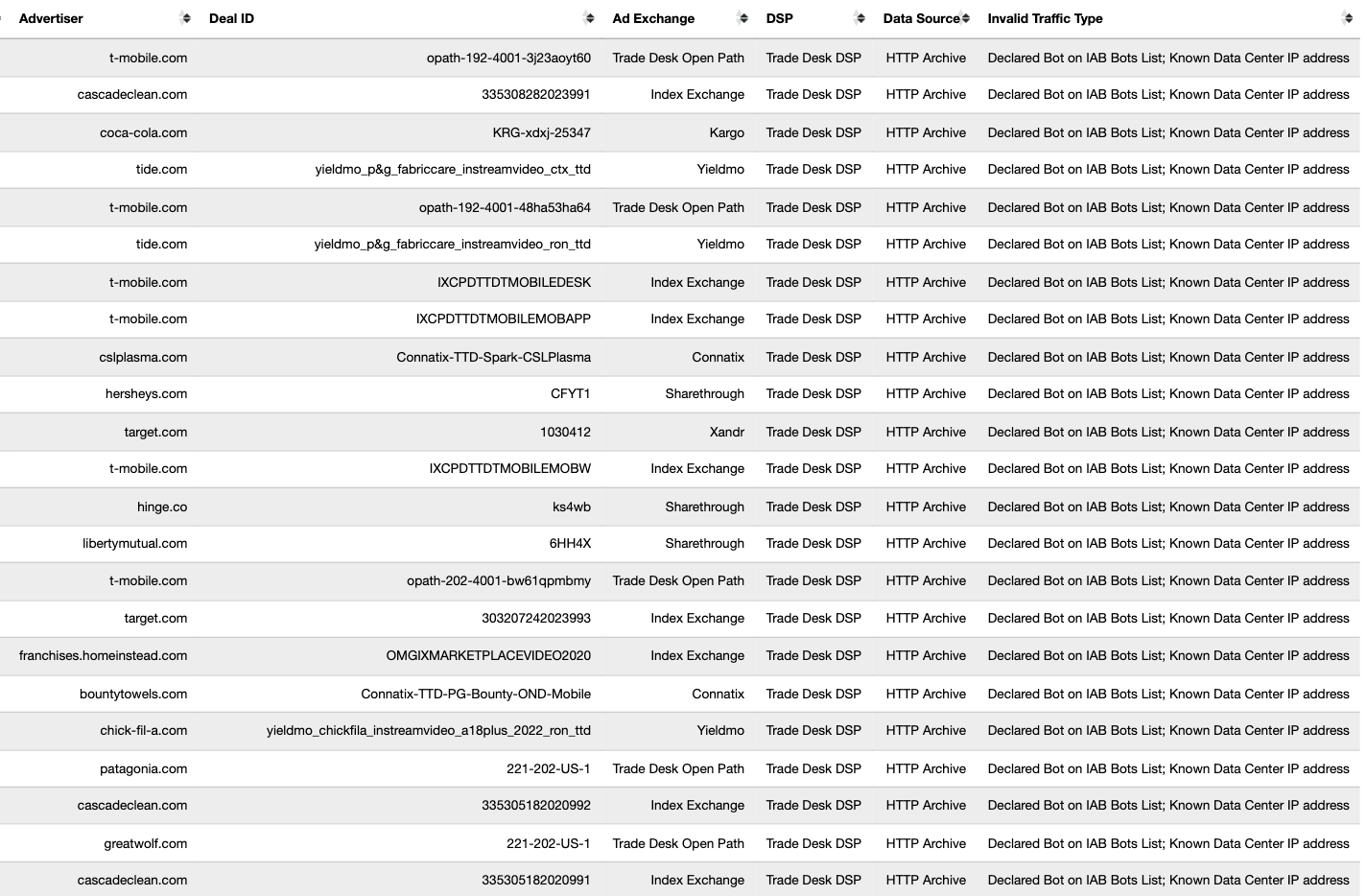

Trade Desk and various SSP ads served to bots

Research Results: YouTube TrueView ads served to bots on Google Video Partner sites

Introduction

Over the course of the last year, Adalytics has produced several research reports and worked with various public sector and Fortune 500 brand advertisers and media agencies to analyze their marketing data.

Several media buyers requested that Adalytics review their ad delivery data and/or log files generated by their advertising vendors. Some of these advertisers reported that they were paying several million dollars per year for bot avoidance pre-bid segments or technology. According to the advertisers, this technology was designed as an extra insurance policy to ensure that the advertisers’ ads would not be served to bots or “invalid traffic” (industry jargon for ad delivery that does not conform with various industry standards and buyers’ expectations, such as mis-declared or falsified ad placements).

The vendors providing this technology to advertisers, publishers and media agencies hold certifications from several advertising trade groups or accreditation bodies.

Despite paying for this technology, the brands were surprised to observe that their log files contained evidence that the brands had been charged and billed by their ad tech vendors for ads that were served to openly declared bots. Some brands reportedly saw that their ads were served to “Headless Chrome”, various AI company crawlers and scrapers, and search engine crawlers. Some brands reportedly even had their ads served to a contextual classification bot operated by the very ad verification company to which the brands were paying millions of dollars for bot avoidance.

In a few instances, it appeared that a verification vendor who was providing bot avoidance technology was marking a significant percentage of the traffic from an openly declared bot operating out of a known data center as valid, human traffic.

Furthermore, during the course of several research projects, Adalytics observed that the United States Navy, US Army, Centers for Disease Control and Prevention, Department of Veterans Affairs, and US Postal Service had their ads served to confirmed bots. Even members of Congress, such as Republican Senator Mike Lee, sponsor of the AMERICA Act, were observed as having their ads served to bots.

Prompted by these experiences and observations, the requests of numerous Fortune 500 brand marketing executives, and the public interest component of the United States government itself being affected, Adalytics decided to perform exploratory research to try to better understand the phenomenon of how ad tech vendors allow digital ads to be served to bots in data centers.

Background

This section of the report is intended to provide readers with background on what bots are, the prevalence of bot traffic on the open internet, various advertising industry jargon, advertising industry standards regarding ad delivery to bots, and prior research investigating the role of bot avoidance technology vendors.

What are bots, and how prevalent are they on the open internet?

According to Cloudflare, a hosting company, “Bot traffic describes any non-human traffic to a website or an app. The term bot traffic often carries a negative connotation, but in reality bot traffic isn’t necessarily good or bad; it all depends on the purpose of the bots. Some bots are essential for useful services such as search engines and digital assistants (e.g. Siri, Alexa). Most companies welcome these sorts of bots on their sites. Other bots can be malicious, for example those used for the purposes of credential stuffing, data scraping, and launching DDoS attacks. Even some of the more benign ‘bad’ bots, such as unauthorized web crawlers, can be a nuisance because they can disrupt site analytics and generate click fraud.”

HUMAN Security, a company that provides technology to filter or block bots, states: “When people visit your site to view your products and make purchases, that’s human traffic. When automated software — also called a bot — visits your site, that’s bot traffic.”

Source: HUMAN Security

Cloudflare further describes the negative consequences of bot traffic. Cloudflare states: “unauthorized bot traffic can impact analytics metrics such as page views, bounce rate, session duration, geolocation of users, and conversions. These deviations in metrics can create a lot of frustration for the site owner; it is very hard to measure the performance of a site that’s being flooded with bot activity. Attempts to improve the site, such as A/B testing and conversion rate optimization, are also crippled by the statistical noise created by bots [...] For sites that serve ads, bots that land on the site and click on various elements of the page can trigger fake ad clicks; this is known as click fraud.”

HUMAN Security states: “Automated traffic taxes your infrastructure and increases your costs for bandwidth and compute cycles. Bot traffic can overwhelm your network and slow site performance, which frustrates customers and negatively impacts user experience [...] In addition, bot traffic contaminates your data and skews your analytics — for example, by appearing to show an increase in consumer demand. Flawed metrics on user behavior can lead you to make poor business decisions about pricing, stocking goods, and investing in marketing and advertising.”

Cloudflare further states: “It is believed that over 40% of all Internet traffic is comprised of bot traffic, and a significant portion of that is malicious bots” (emphasis added).

The website of the Audit Bureau of Circulations (ABC UK), a British non-profit that develops standards of media brand measurement, states that “Up to 40% of web traffic is invalid [...] Designed to inflate website and ad campaign numbers, invalid traffic has an impact on advertising costs and can skew reported data making it difficult to measure engagement and traffic sources.”

The 2024 Imperva Threat Research report “reveals that almost 50% of internet traffic comes from non-human sources. Bad bots, in particular, now comprise nearly one-third of all traffic. Bad bots have become more advanced and evasive and now mimic human behavior in such a way that it makes them difficult to detect and prevent.”

According to June 2024 research from Akamai Technologies, another hosting company, “bots compose 42% of overall web traffic, and 65% of these bots are malicious.”

According to The Atlantic, “The Internet Is Mostly Bots - More than half of web traffic comes from automated programs—many of them malicious”, citing a 2017 study “which is based on an analysis of nearly 17 billion website visits from across 100,000 domains.”

Source: The Atlantic

Bot traffic can also increase the electricity consumption and Scope3 carbon emissions associated with advertisers’ media supply chains. According to a December 2023 research report from a company called Scope3 [which monitors carbon emissions associated with digital advertising], “fraud in US programmatic display contributes an estimated 353k mt of carbon emissions.”

Source: Scope3

What is the estimated financial impact of bot traffic or ad fraud on digital advertising?

In addition to the consequences of unfiltered bot traffic on web measurement and analytics, as well as on Scope3 carbon emissions, various entities have sought to estimate the financial impact of bot traffic or ad fraud on the digital advertising industry.

It bears mentioning that there are many forms of ad fraud beyond bot traffic. For example, one form of ad fraud may involve a vendor billing an advertiser for digital ads that were never served at all, or that were served to real humans but in a lower-quality context than what was contractually agreed upon.

Different studies have tried to quantify the entire scale of ad fraud, or focused just on the subset of ad fraud related specifically to bot traffic. Furthermore, it is worth noting that not all bot traffic is fraudulent. For example, some bots have benign or beneficial activities, such as search engine crawling and indexing, or monitoring website quality. Readers should be careful not to assume that all bots are malicious or engineered specifically for the purpose of ad fraud. Bots can have beneficial functionality.

According to the World Federation of Advertisers (WFA), a trade group, “Ad fraud likely to exceed $50bn globally by 2025 on current trends second only to the drugs trade as a source of income for organized crime.”

The Association of National Advertisers (ANA) published a study in 2017 that analyzed data collected from ten billion online ads purchased over a two-month period by 49 of the trade body’s advertiser members. One advertiser allegedly had “served 37 per cent of its online ad campaign to bots”.

The Association of National Advertisers (ANA) reported that “A false sense of security enables fraud to thrive.”

In 2022, the ANA wrote that “According to the results of a global 2019 study by Juniper Research, ad fraud costs the marketing industry an estimated $51 million per day, and these losses are likely to increase to $100 billion annually by 2023.” The ANA also wrote that “nearly 18 percent of all internet traffic in the marketing industry can be attributed to nonhuman bots, which are actively engaged in ad fraud.”

Background - What is “invalid traffic” in ad tech industry jargon?

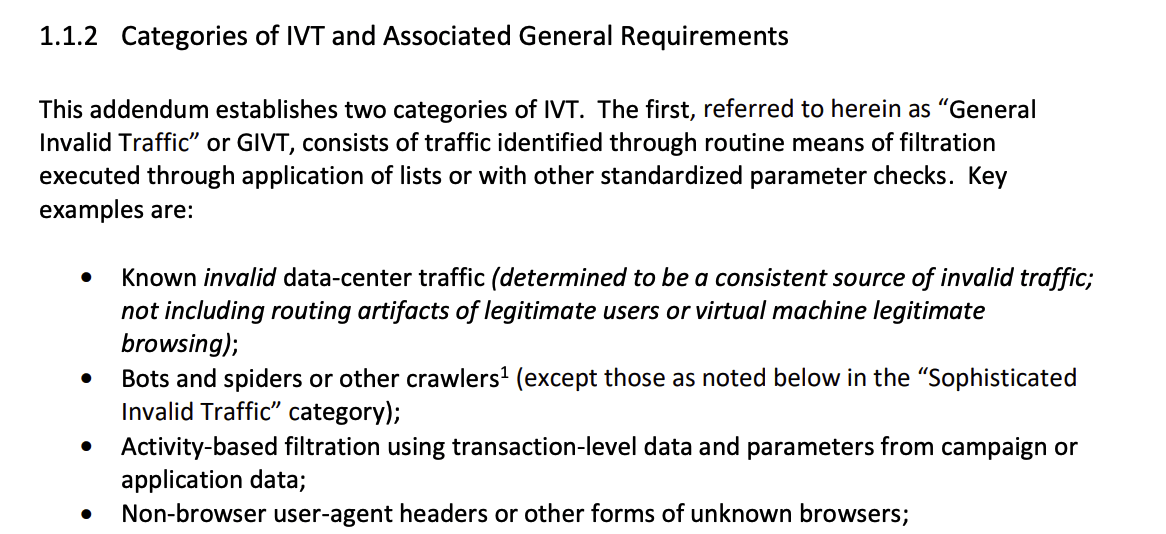

The Media Rating Council, an advertising industry accreditation body, published an “Invalid Traffic Detection and Filtration Standards Addendum” in 2020, defining “invalid traffic” (IVT).

The MRC’s Standard Addendum states that: “Invalid Traffic (IVT) is defined generally as traffic or associated media activity (metrics associated to ad and content measurement including audience, impressions and derivative metrics such as viewability, clicks and engagement as well as outcomes) that does not meet certain quality or completeness criteria, or otherwise does not represent legitimate traffic that should be included in measurement counts. Among the reasons why traffic may be deemed invalid is it is a result of non-human traffic (spiders, bots, etc.), or activity designed to produce IVT.”

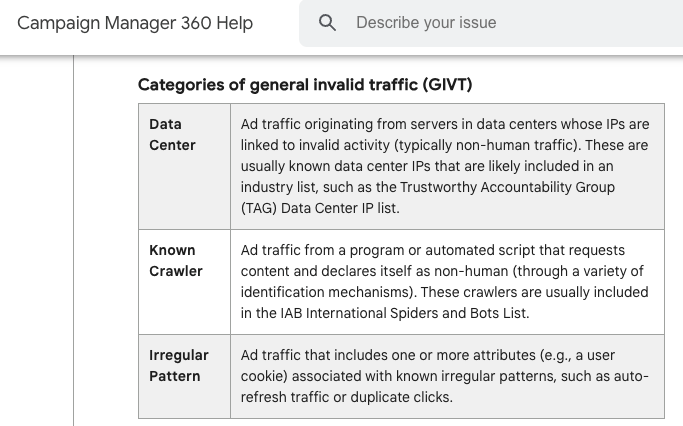

The MRC Addendum defines General Invalid Traffic (GIVT) and Sophisticated Invalid Traffic (SIVT).

The MRC Addendum states: “‘General Invalid Traffic’ or GIVT, consists of traffic identified through routine means of filtration executed through application of lists or with other standardized parameter checks. Key examples are:

Known invalid data-center traffic (determined to be a consistent source of invalid traffic; not including routing artifacts of legitimate users or virtual machine legitimate browsing);

Bots and spiders or other crawlers (except those as noted below in the ‘Sophisticated Invalid Traffic’ category);

Non-browser user-agent headers or other forms of unknown browsers;

Pre-fetch or browser pre-rendered traffic (where associated ads were not subsequently accessed by a valid user);”

The MRC Addendum states that “the second category, herein referred to as ‘Sophisticated Invalid Traffic’ or SIVT, consists of more difficult to detect situations that require advanced analytics, multi-point corroboration/coordination, significant human intervention, etc., to analyze and identify. Key examples are:

Automated browsing from a dedicated device: Known automation systems (e.g., monitoring/testing), emulators, custom automation software and tools; [...]

Bots and spiders or other crawlers masquerading as legitimate users detected via sophisticated means; [...]

Invalid proxy traffic (originating from an intermediary proxy device that exists to manipulate traffic counts or create/pass-on invalid traffic or otherwise failing to meet protocol validation);”

Screenshot of the MRC Invalid Traffic Detection and Filtration Standards Addendum - Categories of IVT and Associated General Requirements

According to Google, “Invalid traffic includes any clicks or impressions that may artificially inflate an advertiser's costs or a publisher's earnings. Invalid traffic covers intentionally fraudulent traffic as well as accidental clicks. Invalid traffic includes, but is not limited to: [...] Automated clicking tools or traffic sources, robots, or other deceptive software.”

Screenshot of Google AdSense Help - Definition of invalid traffic

Source: Google Campaign Manager 360 Help - https://support.google.com/campaignmanager/answer/6076504?hl=en

According to HUMAN Security, an ad tech vendor, “Invalid Traffic (IVT) is generated when malicious bots view and click on ads in order to inflate conversion numbers, resulting in wasted advertiser spend.”

HUMAN Security defines General invalid traffic (GIVT) as “Usually originating from data centers, general invalid traffic is created by simple bots that are not meant to be malicious and are easier to spot with basic bot detection solutions. There are a few different categories of GIVT: Data center: When the IP address associated with non-human ad traffic traces to a server in a data center, it is considered bot traffic. Known crawler: Associated with automated scripts or programs—often called bots or spiders—that are coded to identify themselves as non-human, known crawler traffic is generally thought to be good and legitimate, although not for the purposes of counting ad impressions.”

HUMAN Security defines sophisticated invalid traffic (SIVT) as “Sophisticated and malicious bot activity that is intended to closely mirror human behavior, sophisticated invalid traffic comes from bots that are particularly good at evading detection. Ridding your traffic of SIVT requires advanced bot detection. There are a few different categories of SIVT: Automated browsing: When a program or automated script requests web content (including digital ads) without user involvement and without declaring itself as a crawler, it’s considered automated browsing. These programs and scripts are generally used for malicious purposes. - Ex. Botnets”

Screenshot of HUMAN Security’s documentation on “What are the different types of invalid traffic?”

The Trade Desk defines General Invalid Traffic as: “Invalid traffic that can be identified through routine methods of filtration.” The Trade Desk defines Sophisticated Invalid Traffic as: “A form of invalid traffic that requires advanced detection and analytics tools to identify.”

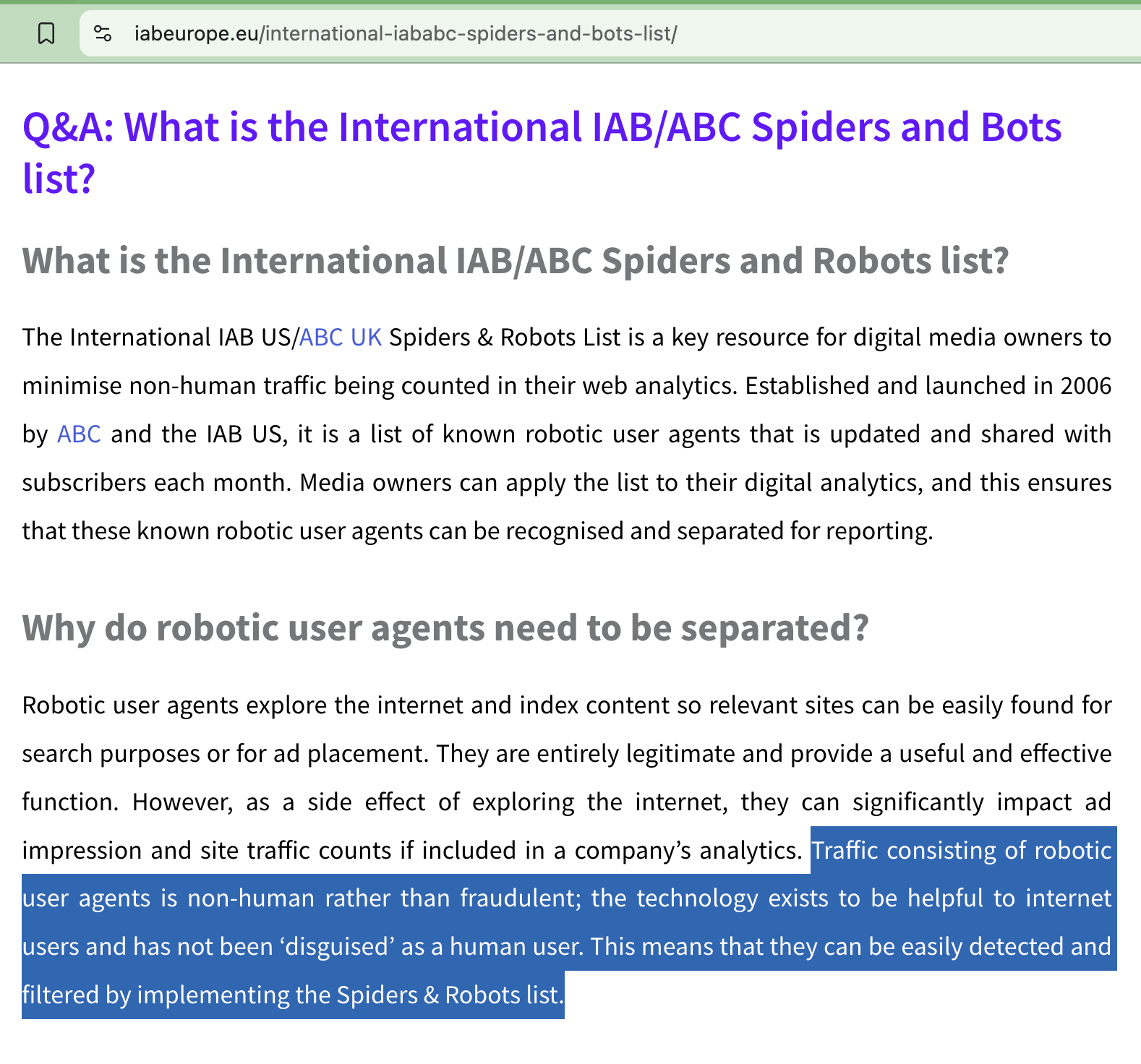

Background - What is the IAB Tech Lab Spiders and Bots list?

The User-Agent header is an HTTP header intended to identify the user agent responsible for making a given HTTP request. When a person browses the web via a browser on their phone rather than on the desktop, their phone’s browser declares via the HTTP header that the user is browsing the web on a mobile browser (rather than a desktop browser).

A Chrome browser on Windows, a Firefox browser on Android, a Safari browser on iOS, and a Roku connected television device each have a distinct User-Agent header.

Similarly, when various “good” bots, such as GoogleBot or BingBot, crawl the open internet, those bots openly declare themselves via the HTTP User-Agent header. If a website does not want to appear in Google or Bing search results, it can choose to block GoogleBot or BingBot via its web hosting provider.

The Interactive Advertising Bureau (IAB) Tech Lab, an advertising industry trade group, and the Audit Bureau of Circulations (ABC) UK, a British trade group, create a reference list of known bots and spiders for ad tech vendors to use to detect and/or filter basic bot traffic.

According to the IAB Tech Lab, “The IAB Tech Lab publishes a comprehensive list of such Spiders and Robots that helps companies identify automated traffic such as search engine crawlers, monitoring tools, and other non-human traffic that they don’t want included in their analytics and billable counts [...] The IAB Tech Lab Spiders and Robots provides the industry two main purposes. First, the spiders and robots list consists of two text files: one for valid browsers or user agents and one for known robots. These lists are intended to be used together to comply with the “dual pass” approach to filtering as defined in the IAB’s Ad Impression Measurement Guidelines (i.e., identify valid transactions using the valid browser list and then filter/remove invalid transactions using the known robots list). Second, the spiders and robots list supports the MRC’s General Invalid Traffic Detection and Filtration Standard by providing a common industry resource and list for facilitating IVT detection and filtration.”
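The “dual pass” approach described in the quote above can be illustrated with a minimal Python sketch. The two pattern lists below are short, hypothetical stand-ins and do not reproduce the contents or file format of the actual IAB Tech Lab list; the function name `is_countable` and the case-insensitive substring matching are likewise assumptions made for illustration.

```python
# Hypothetical, abbreviated stand-ins for the two list files (valid browsers, known robots).
VALID_BROWSER_PATTERNS = ["mozilla/", "opera/"]
KNOWN_ROBOT_PATTERNS = ["googlebot", "bingbot", "headlesschrome"]


def is_countable(user_agent: str) -> bool:
    """Return True only if a User-Agent string survives both filtering passes."""
    ua = user_agent.lower()
    # Pass 1: keep only transactions whose User-Agent matches the valid browser list.
    if not any(pattern in ua for pattern in VALID_BROWSER_PATTERNS):
        return False
    # Pass 2: remove transactions whose User-Agent also matches the known robots list.
    if any(pattern in ua for pattern in KNOWN_ROBOT_PATTERNS):
        return False
    return True


CHROME_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
```

In this sketch, `is_countable(CHROME_UA)` returns `True`, while `is_countable(GOOGLEBOT_UA)` returns `False`: the declared bot passes the first check because its User-Agent begins with `Mozilla/`, but it is removed by the second. This is why the two lists are meant to be used together.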

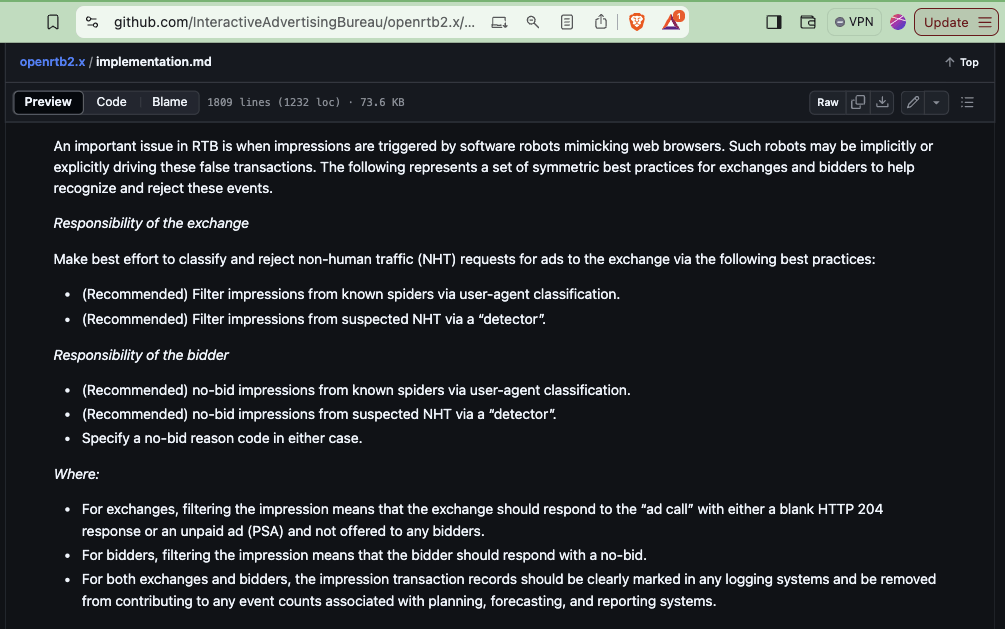

Background: OpenRTB Protocol Guidance on ad bidding and serving to bots

The IAB Tech Lab is an advertising industry trade group that publishes the open real-time bidding (OpenRTB) protocol. The protocol governs programmatic ad auctions, which connect media publishers who sell ad spots to advertisers who wish to purchase and display ads.

The IAB Tech Lab OpenRTB protocol includes guidance on how ad exchanges and ad buying platforms should handle bot traffic that visits websites and generates potential ad auction requests.

Section 7.1 of the IAB Tech Lab OpenRTB 2.x protocol specifies that ad exchanges and bidders should filter impressions from known bots that openly declare their user-agent. The IAB Tech Lab states:

“An important issue in RTB is when impressions are triggered by software robots mimicking web browsers. Such robots may be implicitly or explicitly driving these false transactions. The following represents a set of symmetric best practices for exchanges and bidders to help recognize and reject these events.

Responsibility of the exchange - Make best effort to classify and reject non-human traffic (NHT) requests for ads to the exchange via the following best practices:

(Recommended) Filter impressions from known spiders via user-agent classification.

(Recommended) Filter impressions from suspected NHT via a “detector”.

Responsibility of the bidder:

(Recommended) no-bid impressions from known spiders via user-agent classification.

(Recommended) no-bid impressions from suspected NHT via a “detector”.

Specify a no-bid reason code in either case.

Where:

For exchanges, filtering the impression means that the exchange should respond to the “ad call” with either a blank HTTP 204 response or an unpaid ad (PSA) and not offered to any bidders.

For bidders, filtering the impression means that the bidder should respond with a no-bid.

For both exchanges and bidders, the impression transaction records should be clearly marked in any logging systems and be removed from contributing to any event counts associated with planning, forecasting, and reporting systems.”
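The exchange and bidder responsibilities quoted above can be sketched roughly as follows. This is a simplified illustration, not any vendor’s implementation: the spider pattern list and the function names are hypothetical, and the no-bid reason code values (3 for a known web spider, 4 for suspected non-human traffic) are taken from the OpenRTB 2.5 enumerations as best understood here and should be checked against the specification.

```python
from typing import Optional

# Hypothetical, abbreviated spider list; a real system would load an industry reference list.
KNOWN_SPIDER_PATTERNS = ["googlebot", "bingbot", "headlesschrome"]

# No-bid reason codes (assumed from the OpenRTB 2.5 enumerated lists; verify before use).
NBR_KNOWN_WEB_SPIDER = 3
NBR_SUSPECTED_NHT = 4


def is_known_spider(user_agent: str) -> bool:
    """User-agent classification via case-insensitive substring match."""
    ua = user_agent.lower()
    return any(pattern in ua for pattern in KNOWN_SPIDER_PATTERNS)


def exchange_should_filter(user_agent: str) -> bool:
    """Exchange side: True means answer the ad call with a blank HTTP 204
    (or an unpaid PSA) and do not offer the impression to any bidder."""
    return is_known_spider(user_agent)


def bidder_no_bid(bid_request: dict, suspected_nht: bool = False) -> Optional[dict]:
    """Bidder side: return a no-bid response with a reason code, or None
    when the request may proceed to normal bidding logic."""
    user_agent = bid_request.get("device", {}).get("ua", "")
    if is_known_spider(user_agent):
        reason = NBR_KNOWN_WEB_SPIDER
    elif suspected_nht:  # output of a separate, unspecified "detector"
        reason = NBR_SUSPECTED_NHT
    else:
        return None
    return {"id": bid_request["id"], "seatbid": [], "nbr": reason}
```

For example, a bid request whose `device.ua` field contains `Googlebot/2.1` would yield a no-bid response with `nbr` set to 3 in this sketch. The guidance’s final point, marking such transactions in logs and excluding them from counts, is not modeled here.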

Background - Trustworthy Accountability Group (TAG) - Certified Against Fraud

The Trustworthy Accountability Group (TAG) is a digital advertising initiative and trade group.

TAG launched its “Certified Against Fraud (CAF) Program in 2016 to combat invalid traffic in the digital advertising supply chain.”

Screenshot of a quote from P&G Chief Brand Officer Marc Pritchard on the TAG website

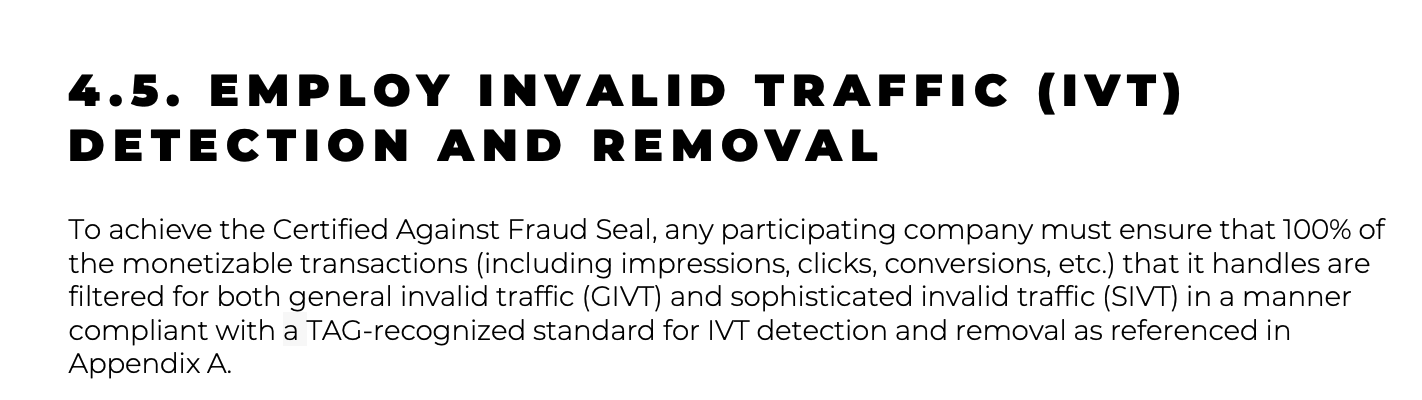

“To achieve the Certified Against Fraud Seal, any participating company must ensure that 100% of the monetizable transactions (including impressions, clicks, conversions, etc.) that it handles are filtered for both general invalid traffic (GIVT) and sophisticated invalid traffic (SIVT) in a manner compliant with a TAG-recognized standard for IVT detection and removal as referenced in Appendix A.”

Source: TAG

“All inventory handled by a participating company – including inventory on that company’s owned and operated media properties as well as any inventory handled by that company on behalf of a third-party partner – must be filtered for GIVT and SIVT in a manner compliant with a TAG-recognized standard for IVT detection and removal as referenced in Appendix A.”

Background - Media Rating Council (MRC) - Invalid Traffic Detection and Filtration Standards Addendum

The Media Rating Council (MRC) is a US-based non-profit organization that manages accreditations for media research and rating purposes. It performs accreditations for rating and research companies like Nielsen, comScore, and multiple digital measurement services. The MRC does not itself audit the companies being accredited. The audits are done annually by accounting firms such as Ernst & Young. The company being accredited pays for the audit, with fees that could be in the hundreds of thousands or even millions of dollars.

The Media Rating Council has published Standards documents for “Invalid Traffic Detection and Filtration”.

The document “presents additional Standards for the detection and filtration of invalid traffic applicable to all measurement organizations of advertising, content and related media metrics (including outcome measurement) subject to accreditation or certification audit. MRC’s original guidance is contained in measurement guidelines maintained by the IAB.”

The document explains, “Among the reasons why traffic may be deemed invalid is it is a result of non-human traffic (spiders, bots, etc.).”

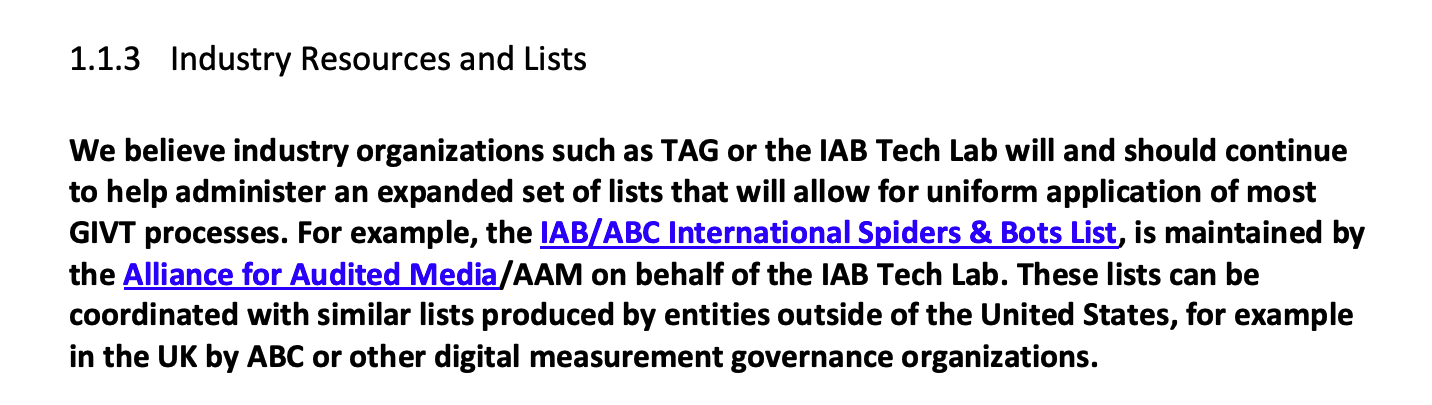

The MRC IVT Standards addendum discusses the role of IP address, user-agent, and industry reference lists in detecting invalid traffic (among many other topics and details).

Source: Media Rating Council

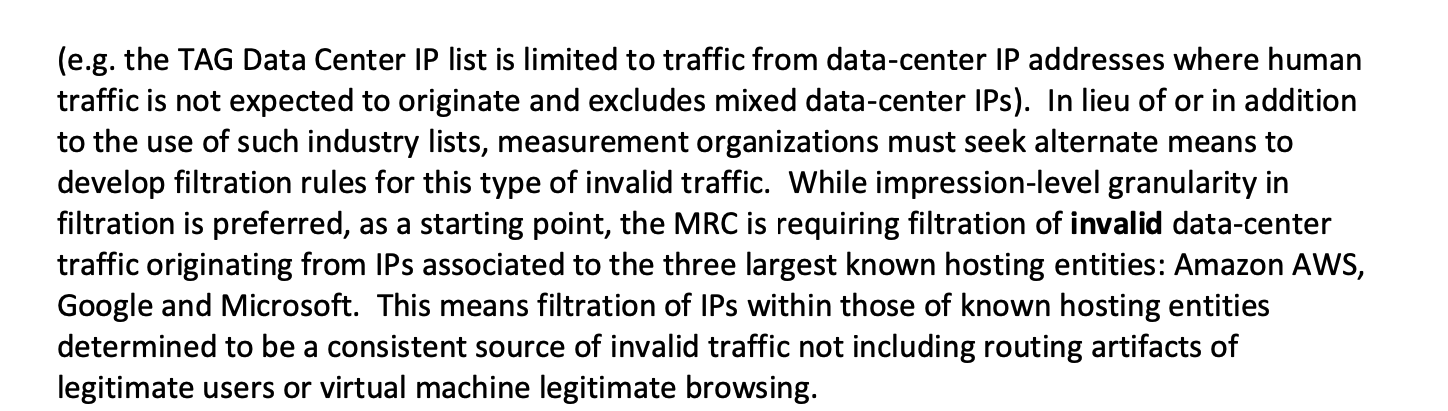

The “MRC is requiring filtration of invalid data-center traffic originating from IPs associated to the three largest known hosting entities: Amazon AWS, Google and Microsoft. This means filtration of IPs within those of known hosting entities determined to be a consistent source of invalid traffic not including routing artifacts of legitimate users or virtual machine legitimate browsing.”

Source: Media Rating Council

The Media Rating Council (MRC) states: “The following techniques shall be employed by the measurement organization to the extent necessary to filter material General Invalid Transactions.” The techniques listed include “parameter based detection”, which covers “non-Browser User-Agent Header” and “Known Invalid Data-Center Traffic”.
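To make the two parameter-based checks named above concrete, the following is a minimal sketch, not the MRC's or any vendor's implementation. The token list, the IP range (a reserved documentation range), and the function name `givt_reasons` are all illustrative assumptions; a real system would load industry reference lists instead.

```python
import ipaddress

# Illustrative stand-ins only (assumptions): a real filter would load
# industry reference lists rather than these hard-coded values.
BOT_UA_TOKENS = ("bot", "crawler", "spider", "ptst")
DATA_CENTER_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]  # reserved documentation range


def givt_reasons(user_agent: str, ip: str) -> list[str]:
    """Return the parameter-based reasons a request looks like general invalid traffic."""
    reasons = []
    ua = (user_agent or "").lower()

    # Check 1: the header does not look like a browser at all.
    if not ua.startswith("mozilla/"):
        reasons.append("non-browser user-agent header")

    # Check 2: the header carries a known bot token.
    # Naive substring matching can produce false positives; it is kept simple here.
    if any(token in ua for token in BOT_UA_TOKENS):
        reasons.append("declared bot user-agent")

    # Check 3: the source IP falls inside a known data-center range.
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in DATA_CENTER_NETWORKS):
        reasons.append("known data-center IP")

    return reasons
```

An empty list means none of these simple checks fired; it does not mean the request came from a human.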

Background - Media Rating Council (MRC) Accreditation for IVT Detection

The Media Rating Council has provided accreditations to various vendors for invalid traffic detection and/or filtration.

One can visit the website of the Media Rating Council to see what accreditations various ad tech vendors have received from the MRC.

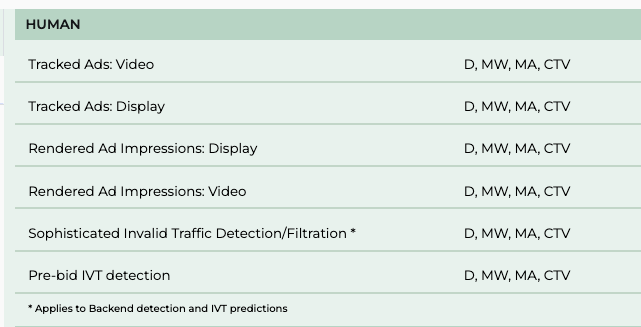

In 2021, HUMAN Security (f/k/a “White Ops”) announced that it was the first company to “Receive MRC Accreditation for Both Pre-Bid and Post-Bid Invalid Traffic Detection and Mitigation Across Desktop, Mobile Web, Mobile In-App and Connected TV”. The announcement states this “includes the first-ever accreditation for pre-bid detection and mitigation of SIVT of its Advertising Integrity product (the solution formerly known as MediaGuard) across all platforms.”

HUMAN’s press release stated: “White Ops today verifies more than ten trillion digital interactions per week, working directly with the largest internet platforms, DSPs and exchanges. With White Ops Advertising Integrity, platforms can tap into the most comprehensive pre-bid prevention and post-bid detection capabilities to verify the validity of advertising efforts across all channels. The White Ops bot mitigation platform uses a multilayered detection methodology to spot and stop sophisticated bots and fraud by using technical evidence, continuous adaptation, machine learning and threat intelligence. In most cases, White Ops delivers responses to partners in 10 milliseconds or less before a bid is made, saving time and money and ensuring that their advertising inventory can be trusted and fraud-free.”

The MRC’s website indicates that HUMAN Security (f/k/a “White Ops”) has received MRC accreditations for “Pre-bid IVT detection” and “Sophisticated Invalid Traffic Detection/Filtration”.

Screenshot showing that HUMAN Security has received an accreditation from the Media Rating Council for “Sophisticated Invalid Traffic Detection/Filtration”. Source: Media Rating Council https://mediaratingcouncil.org/accreditation/digital

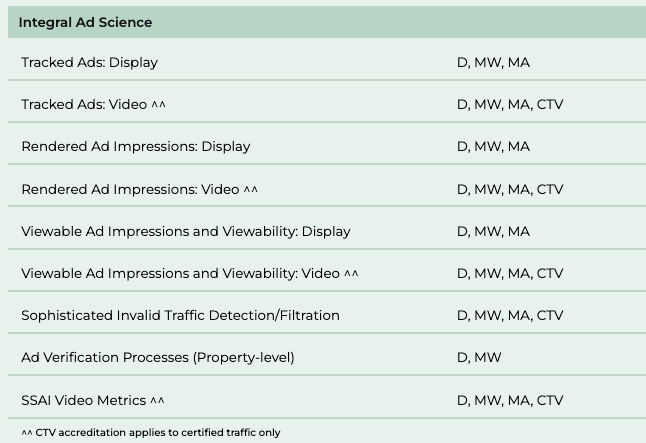

Other ad tech vendors that have received accreditations from the Media Rating Council include Integral Ad Science (IAS) and DoubleVerify.

In the screenshot below from the MRC’s website, one can see that IAS has received MRC accreditation for “Sophisticated Invalid Traffic Detection/Filtration”.

Screenshot showing that Integral Ad Science (IAS) has received an accreditation from the Media Rating Council for “Sophisticated Invalid Traffic Detection/Filtration”. Source: Media Rating Council https://mediaratingcouncil.org/accreditation/digital

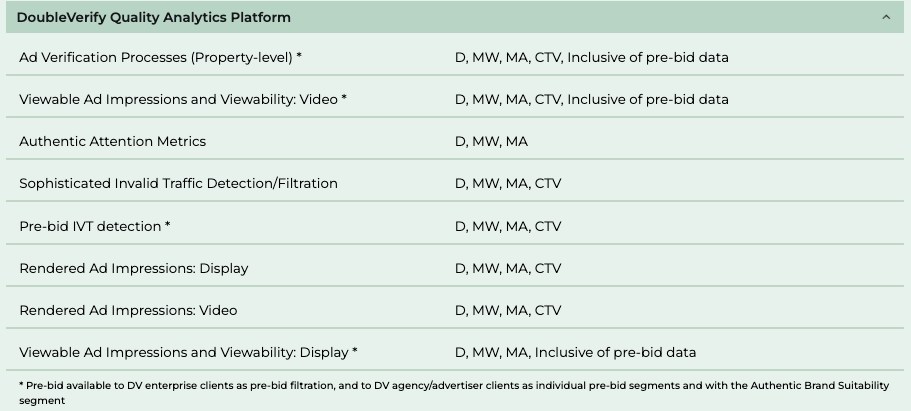

The screenshot below shows that DoubleVerify has received MRC accreditation for “Pre-bid IVT detection” and “Sophisticated Invalid Traffic Detection/Filtration”.

The Media Rating Council website explains that for DoubleVerify’s “Pre-bid IVT detection”, “Pre-bid available to DV enterprise clients as pre-bid filtration, and to DV agency/advertiser clients as individual pre-bid segments and with the Authentic Brand Suitability segment”.

Screenshot showing that the DoubleVerify Quality Analytics Platform has received an accreditation from the Media Rating Council for “Sophisticated Invalid Traffic Detection/Filtration” and “Pre-bid IVT detection”. Source: Media Rating Council https://mediaratingcouncil.org/accreditation/digital

Background - Ad tech vendors which partner with HUMAN Security

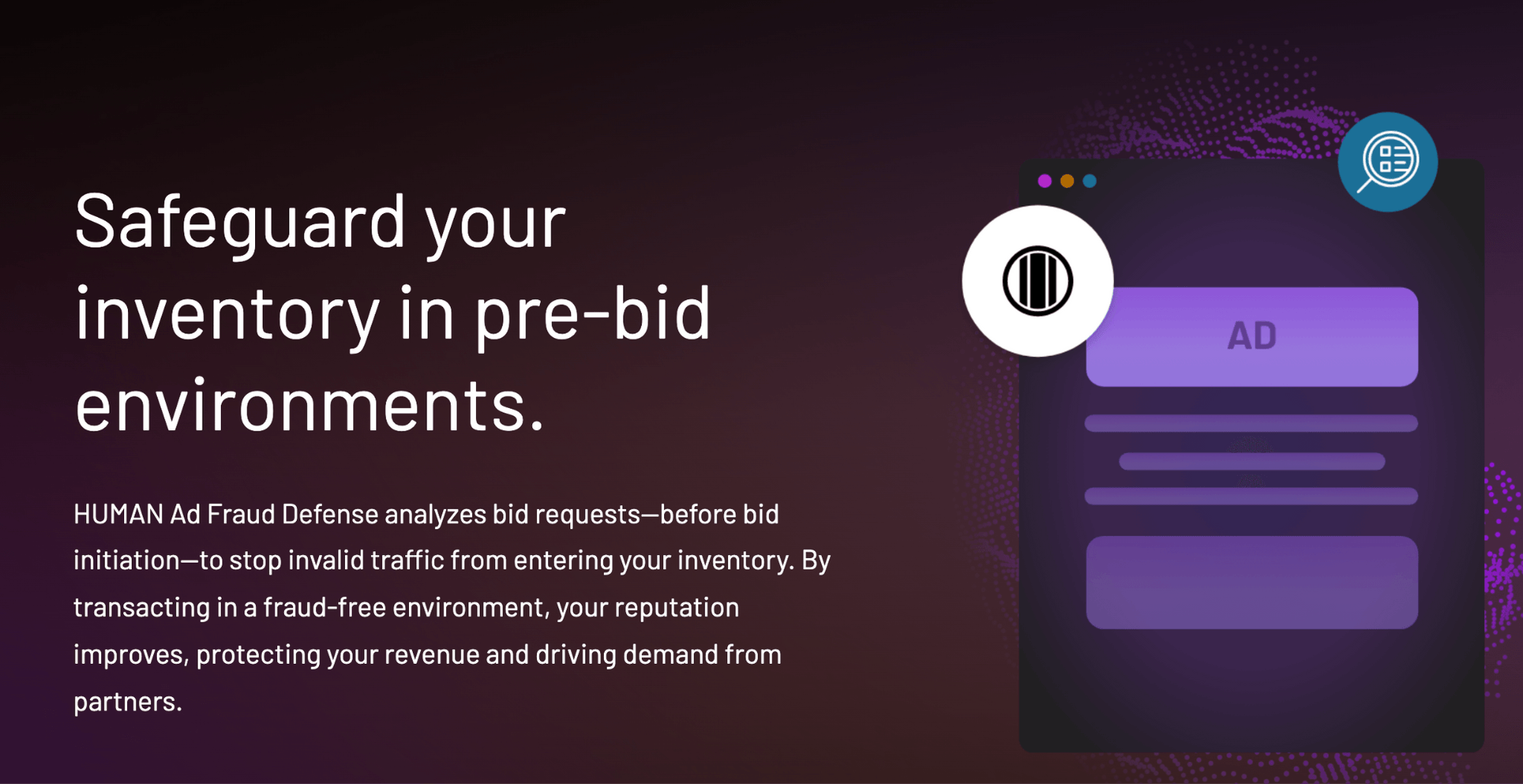

HUMAN Security’s “Ad Fraud Defense” marketing page declares: “Safeguard your inventory in pre-bid environments. HUMAN Ad Fraud Defense analyzes bid requests—before bid initiation—to stop invalid traffic from entering your inventory. By transacting in a fraud-free environment, your reputation improves, protecting your revenue and driving demand from partners.”

Source: HUMAN Security

HUMAN Security’s marketing page for its “MediaGuard” product states that “For each of the 2 trillion-plus interactions verified by HUMAN each day, up to 2,500 signals are parsed through over 350 algorithms to reach a single critical decision - bot or not.”

Source: HUMAN Security

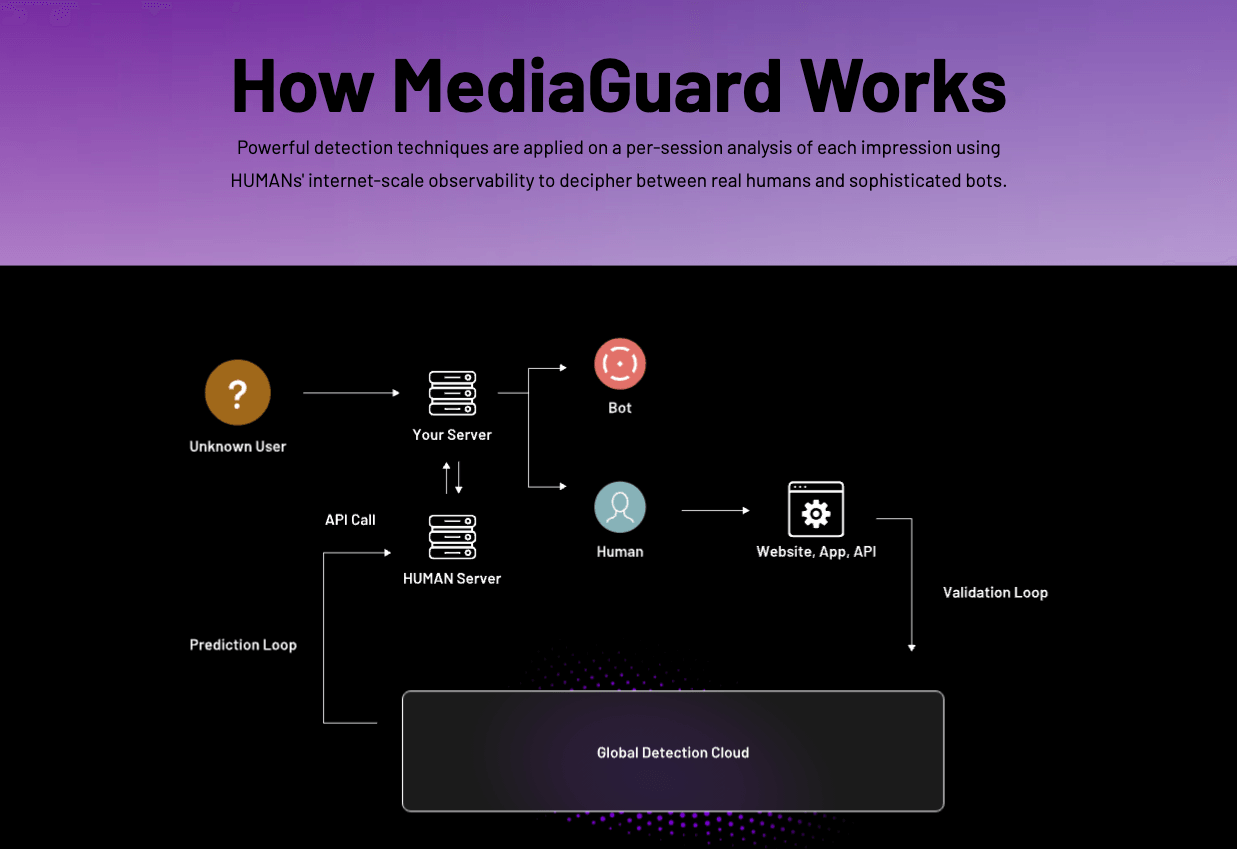

HUMAN Security’s website describes how MediaGuard works: “Powerful detection techniques are applied on a per-session analysis of each impression using HUMANs' internet-scale observability to decipher between real humans and sophisticated bots.”

Source: HUMAN Security

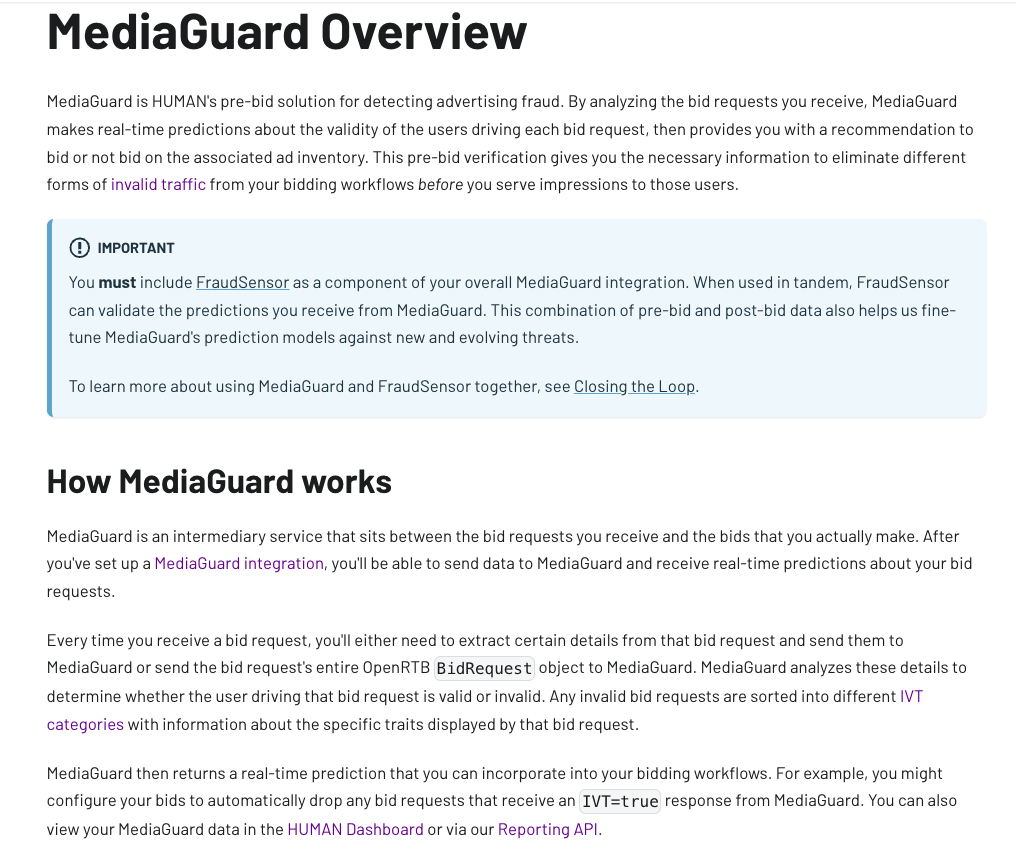

HUMAN Security’s public technical documentation states: “MediaGuard is HUMAN's pre-bid solution for detecting advertising fraud. By analyzing the bid requests you receive, MediaGuard makes real-time predictions about the validity of the users driving each bid request, then provides you with a recommendation to bid or not bid on the associated ad inventory. This pre-bid verification gives you the necessary information to eliminate different forms of invalid traffic from your bidding workflows before you serve impressions to those users.”

HUMAN further explains that “MediaGuard is an intermediary service that sits between the bid requests you receive and the bids that you actually make. After you've set up a MediaGuard integration, you'll be able to send data to MediaGuard and receive real-time predictions about your bid requests. Every time you receive a bid request, you'll either need to extract certain details from that bid request and send them to MediaGuard or send the bid request's entire OpenRTB BidRequest object to MediaGuard. MediaGuard analyzes these details to determine whether the user driving that bid request is valid or invalid. Any invalid bid requests are sorted into different IVT categories with information about the specific traits displayed by that bid request. MediaGuard then returns a real-time prediction that you can incorporate into your bidding workflows. For example, you might configure your bids to automatically drop any bid requests that receive an IVT=true response from MediaGuard. You can also view your MediaGuard data in the HUMAN Dashboard or via our Reporting API.”

Source: HUMAN Security Media Overview technical documentation
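The bid-or-drop workflow described in the quoted documentation can be sketched as follows. This is a hypothetical illustration only: `Prediction`, `decide_bid`, and `toy_predictor` are invented names, and the stand-in predictor is not HUMAN's API or detection logic. The only external detail relied on is that an OpenRTB BidRequest carries the user agent in `device.ua`.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Prediction:
    """Hypothetical shape of a pre-bid verdict (not a real vendor response)."""
    ivt: bool
    category: Optional[str] = None


def decide_bid(bid_request: dict,
               predict: Callable[[dict], Prediction],
               price: float) -> Optional[float]:
    """Return the bid price, or None when the request should be dropped."""
    prediction = predict(bid_request)
    if prediction.ivt:
        return None  # drop: do not bid on traffic flagged as invalid
    return price


def toy_predictor(bid_request: dict) -> Prediction:
    """Stand-in for a verification service: flags one declared bot token."""
    ua = bid_request.get("device", {}).get("ua", "")
    if "PTST" in ua:
        return Prediction(ivt=True, category="declared-bot")
    return Prediction(ivt=False)
```

Passing the predictor in as a function keeps the bidding decision separate from whichever verification service supplies the verdict.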

Many ad tech vendors have made public announcements about partnering with HUMAN Security (f/k/a “White Ops”), including using its MediaGuard product to check their ad inventory pre-bid.

For example, Index Exchange SSP stated in a press release:

“Index Exchange Partners with White Ops to Deliver Invalid Traffic Protection Against Sophisticated Bots Across All Global Inventory Channels”; “Index Exchange (IX), one of the world’s largest independent ad exchanges, and White Ops, the global leader in collective protection against sophisticated bot attacks and fraud, today announced an expanded partnership that enhances Index Exchange’s global inventory across all channels and regions. Through White Ops’ comprehensive protection, the partnership protects the entirety of Index Exchange’s global inventory. It allows buyers to purchase from IX’s emerging channels, such as mobile app and Connected TV (CTV), with confidence that its supply chain is protected against invalid traffic before a bid request is ever sent to a DSP and made eligible” (emphasis added).

Yieldmo SSP stated:

“White Ops, the global leader in bot mitigation, has announced a partnership with Yieldmo, one of the world’s largest independent mobile ad marketplaces, to protect its programmatic demand partners from sophisticated bot attacks. [...] MediaGuard is a bot prevention API using machine-learning algorithms that learn and adapt in real-time to accurately block bot-driven ad requests before a buyer has the opportunity to bid on them. Eliminating fraudulent ad requests earlier in the bid process results in better overall performance for advertisers and yield for publishers. By leveraging White Ops’ Bot Mitigation Platform, Yieldmo can accurately block sophisticated bots and pre-bid IVT without compromising the speed of their website and online operations. MediaGuard prevents bots and IVT across desktop, mobile web, mobile app, and connected TV (CTV) environments in real time before an impression is served.”

TripleLift SSP stated in 2018:

“Earlier this month our team announced that in partnership with White Ops, TripleLift would become the first native exchange to offer third-party verified pre-bid fraud prevention, across all of our inventory. This partnership allows TripleLift to leverage White Ops’s MediaGuard, to assist in the pre-bid prevention of fraud across the entire exchange.”

PubMatic SSP stated:

“White Ops today announced its partnership with sell-side platform (SSP) PubMatic, to defend against fraudulent, non-human traffic impressions across PubMatic’s video and mobile inventory. Through this partnership, PubMatic has globally implemented White Ops' pre-bid and post-bid solutions to provide ad fraud detection and prevention.”

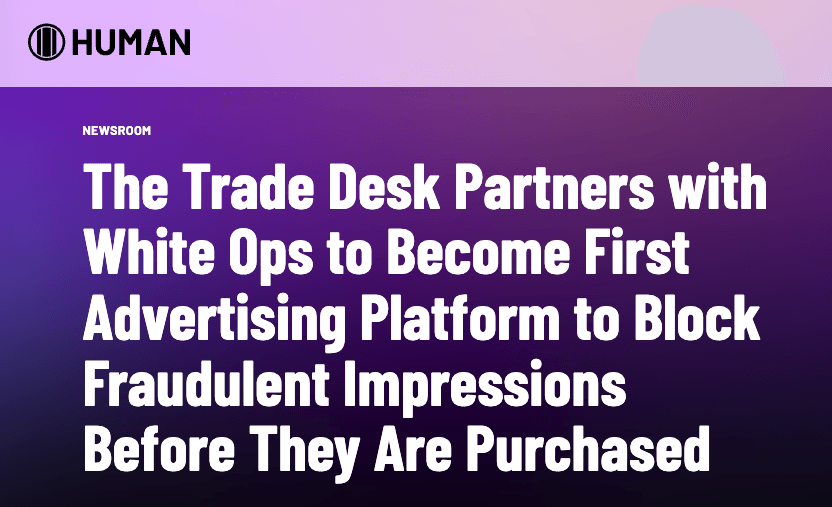

The Trade Desk, a demand side platform, announced:

“White Ops and The Trade Desk [...] today announce a landmark deal that completely changes how the advertising industry tackles fraud. White Ops’ Human Verification technology will aim to ensure that there is a human on the other end of every impression served on The Trade Desk that runs through White Ops, in real time, protecting global advertisers and agencies from buying fraudulent impressions [...] For too long, invalid traffic has been part of our industry,” said Jeff Green, CEO and co-founder of The Trade Desk. “There’s no level of fraud that is acceptable. Our partnership with White Ops means that we are the first advertising platform to block non-human impressions at the front door. [...] As part of this initiative, White Ops and The Trade Desk will co-locate servers and data centers in North America, Europe and Asia, to scan every biddable ad impression in real-time. [...] When a non-human impression, known as “Sophisticated Invalid Traffic (SIVT)” within the advertising industry, is identified by White Ops, The Trade Desk will block that impression from serving. The intent is this technology will be applied to every impression The Trade Desk bids on that runs through White Ops, on a global basis. [...] Unlike other solutions, the goal here is to run all impressions across The Trade Desk’s platform through White Ops, not just sampled impressions. Additionally, the Trade Desk has collaborated with the leading SSPs to bring a unified solution to market.” (emphasis added).

Screenshot of HUMAN Security’s website, showing a press release from 2017

Screenshot of Trade Desk’s website, showing the firm partners with HUMAN Security

https://www.adweek.com/performance-marketing/the-trade-desk-and-white-ops-are-teaming-up-to-block-bot-traffic-before-advertisers-buy-it/

Xandr (f/k/a AppNexus), now owned by Microsoft, announced:

“Xandr [...] and HUMAN, a cybersecurity company best known for collectively protecting enterprises from bot attacks, today announced an expansion to HUMAN’s existing pre-bid bot protection within the Xandr platform, to provide an additional layer of protection against fraud and sophisticated bot attacks as emerging formats like connected TV (CTV) scale in availability and demand. This integration connects the full breadth of HUMAN’s Advertising Integrity suite of ad fraud detection and prevention solutions to Xandr’s buy-and sell-side platforms [...] Xandr protects its platform before a bid is even made—including within CTV—to continue delivering success to its publishers and advertisers. HUMAN recently became the first company to receive accreditation from the Media Rating Council (MRC) for pre and post-bid protection against Sophisticated Invalid Traffic (SIVT) for desktop, mobile web, mobile in-app, and CTV.”

Many ad tech vendors are part of HUMAN Security’s “Human Collective.”

List of Flagship and Founding Members of HUMAN Security’s “Human Collective”. Source: https://www.humansecurity.com/company/the-human-collective. Participation in the Human Collective entails: “Technology - Powered by HUMAN's proprietary technology and Modern Defense Platform, we can ensure members are protecting themselves and each other.”

A list of vendors who issued public statements or claimed to have partnered with HUMAN Security (f/k/a “White Ops”) can be seen below.

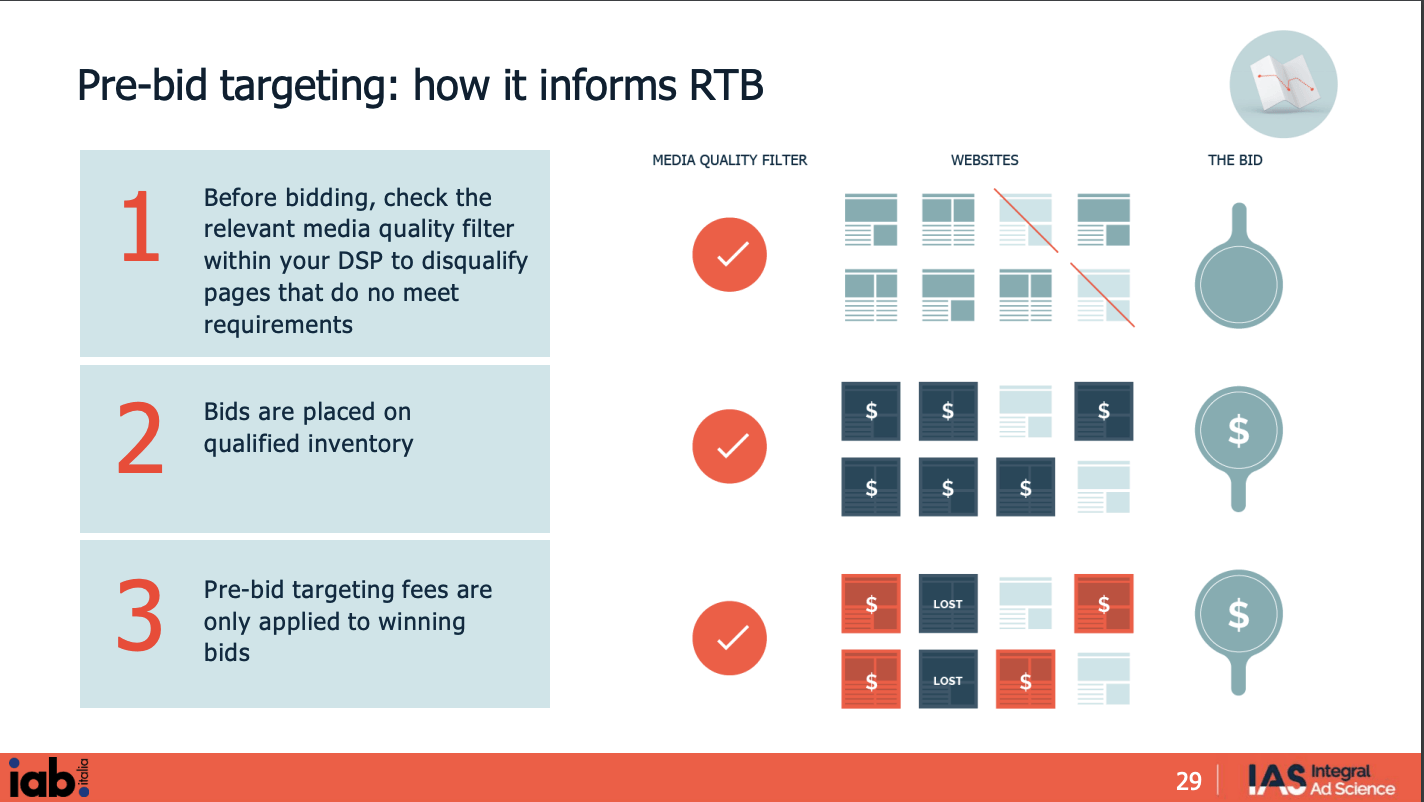

Background - What is “Pre-bid targeting” in digital advertising?

In programmatic digital advertising, “Real Time Bidding” (RTB or OpenRTB) is a protocol that governs digital ad auctions, where publishers and advertisers can offer to sell and buy ad placements (respectively).

Before an advertiser “bids” on a given programmatic ad auction, “pre-bid” data segments and solutions can inform the ad bidding process.

For example, according to the IAB and Integral Ad Science (IAS), an ad verification vendor, the way “Pre-bid targeting” informs real time bidding is as follows:

“Before bidding, check the relevant media quality filter within your DSP to disqualify pages that do not meet requirements”

“Bids are placed on qualified inventory”

“Pre-bid targeting fees are only applied to winning bids”

Source: IAB and IAS

Background - Integral Ad Science (IAS) ad fraud solutions

Integral Ad Science (IAS) is an ad verification company that has received multiple Media Rating Council accreditations, and is TAG “Certified Against Fraud”.

IAS provides ad verification solutions to both advertisers and to media publishers.

IAS offers “pre-bid” targeting data solutions in various ad buying platforms (known as “demand side platforms” or DSPs), such as Trade Desk, Beeswax, and Google DV360.

Source: IAS

IAS’s website states that “utilizing IAS pre-bid segments” can help advertisers “Only bid on quality Inventory” in programmatic ad auctions.

Source: IAS

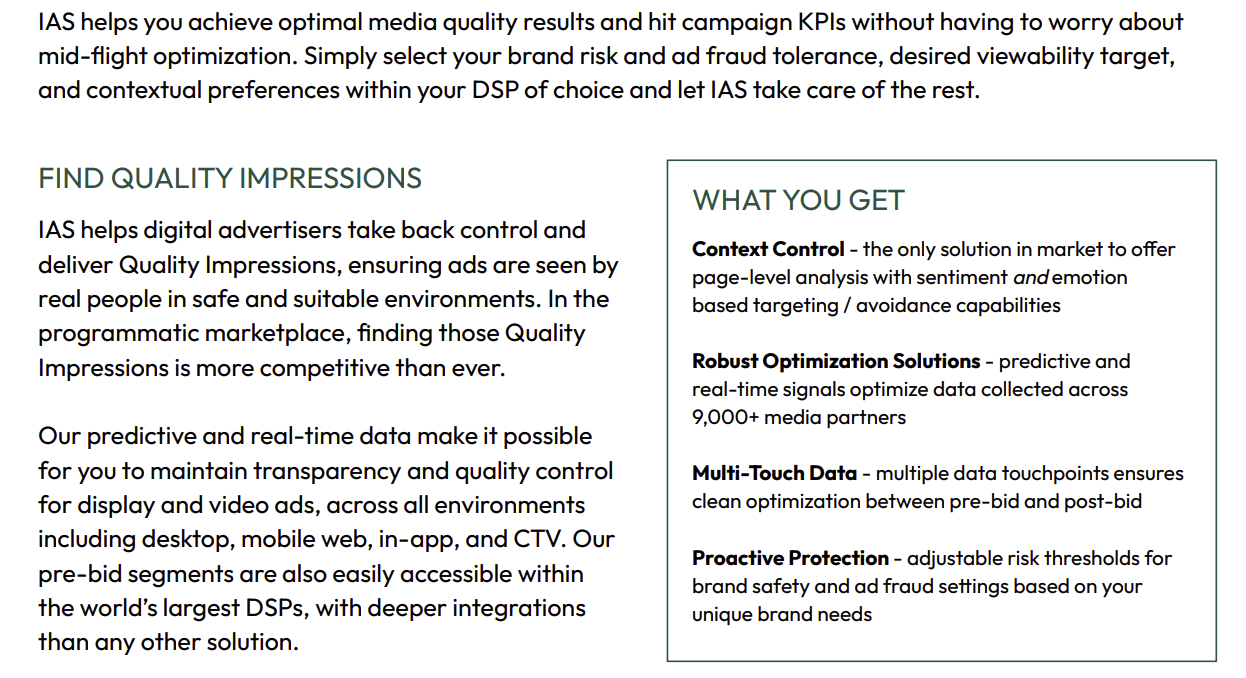

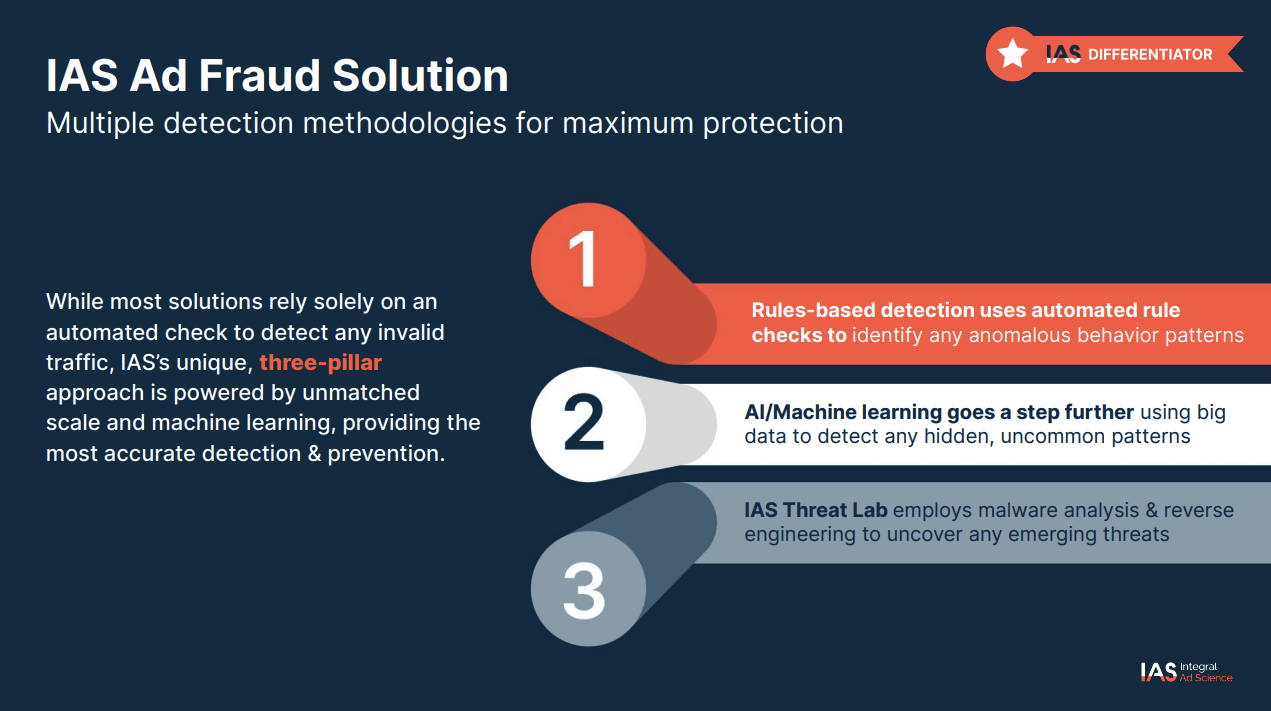

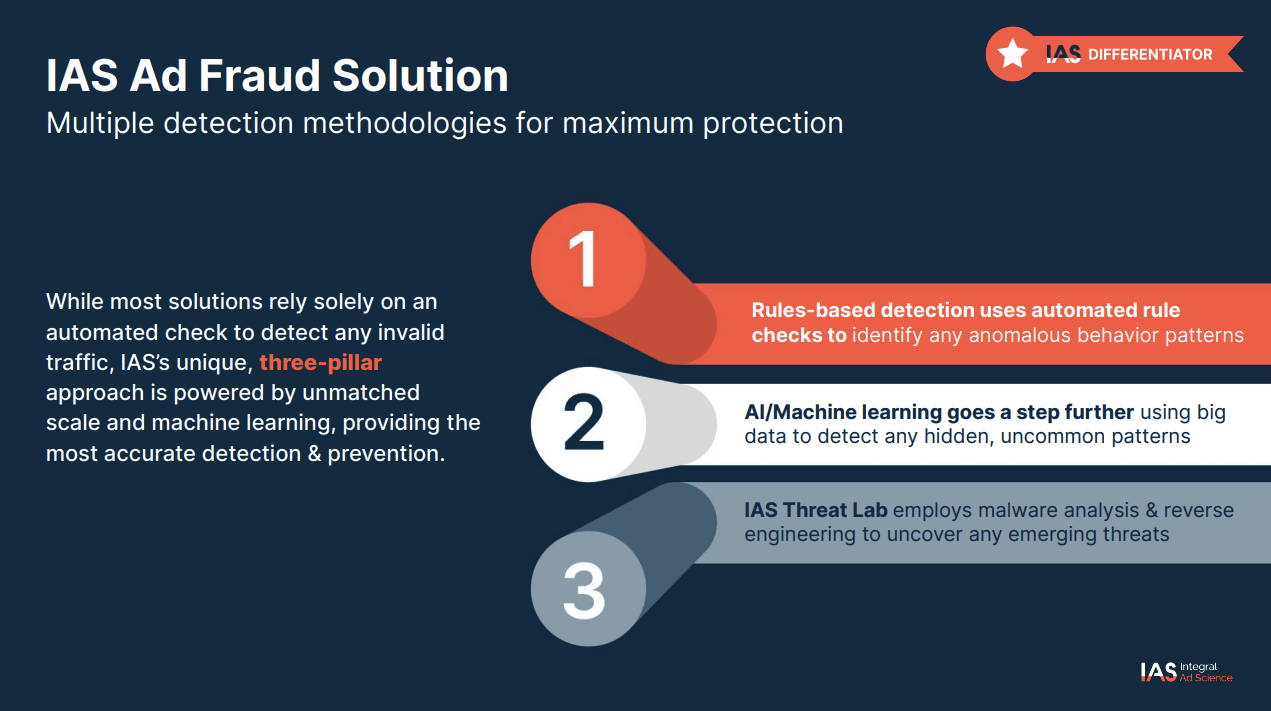

IAS’s website states: “IAS is a leader in the fight against ad fraud through a balanced approach and ongoing research. Our team of specialized analysts, engineers, white-hat-hackers, and data scientists is taking on invalid traffic from every angle to create the most advanced solutions in the market [...] Ensure the most precise fraud detection possible with our three-pillar approach. Our fraud technology is based on a set of methodologies that when used in tandem, detect the evolving threat of ad fraud with incredible accuracy.”

Source: IAS

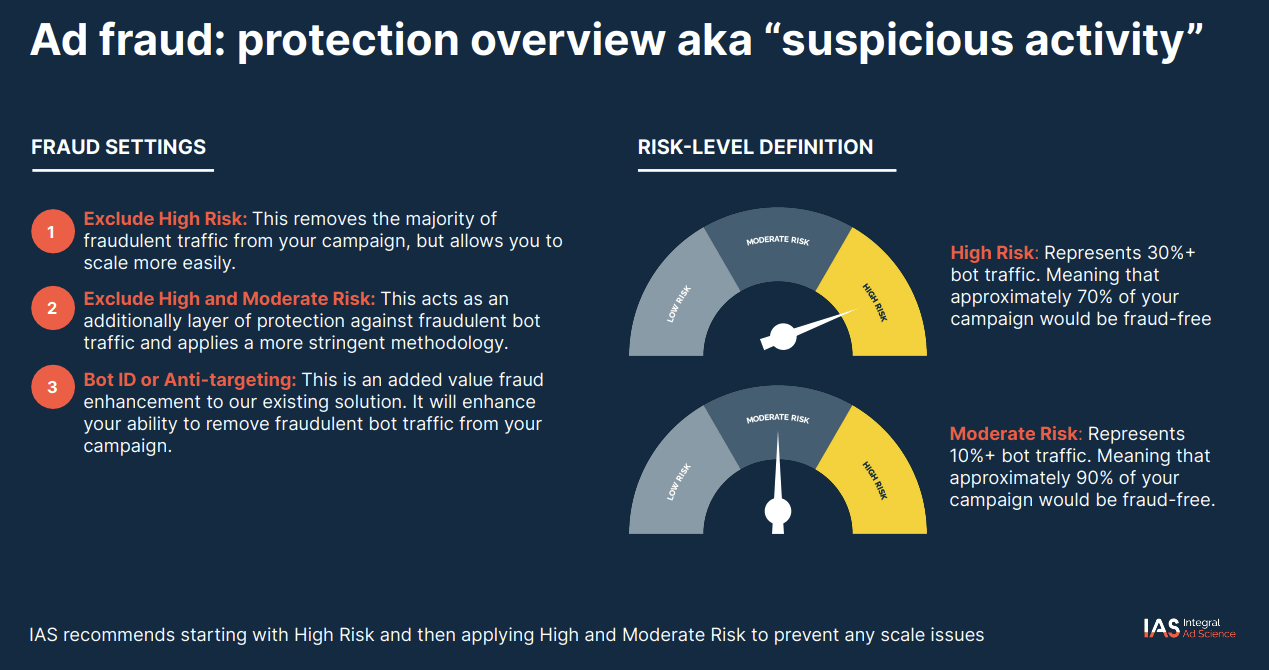

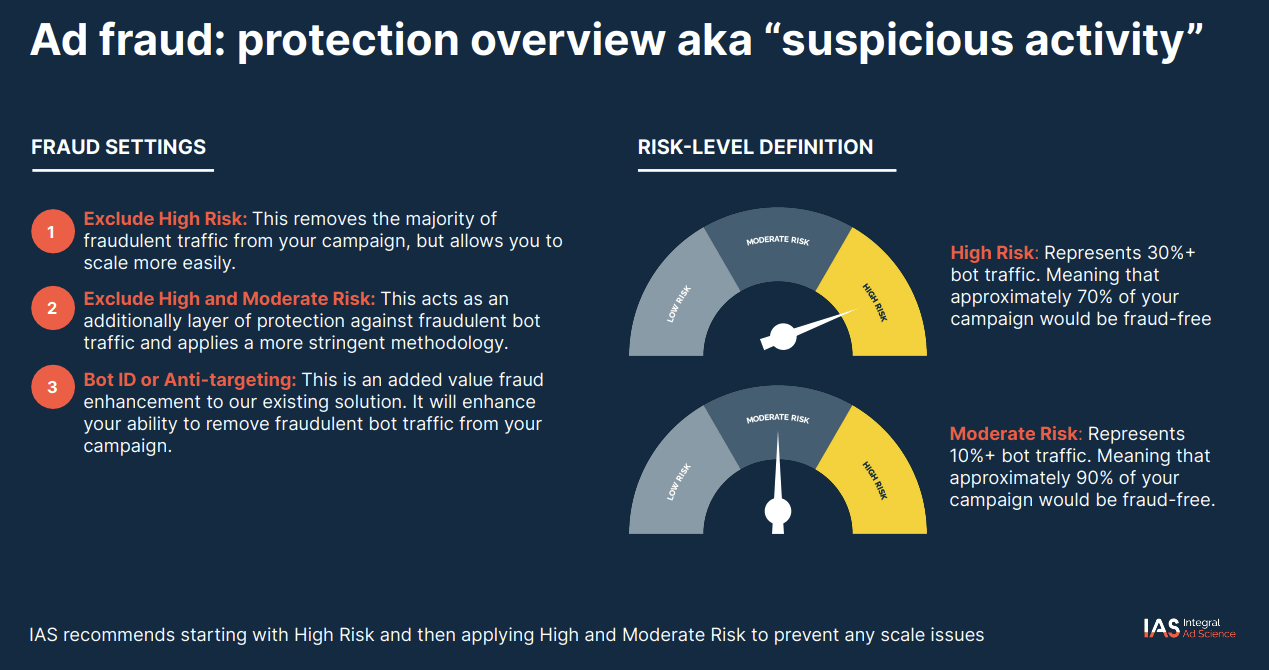

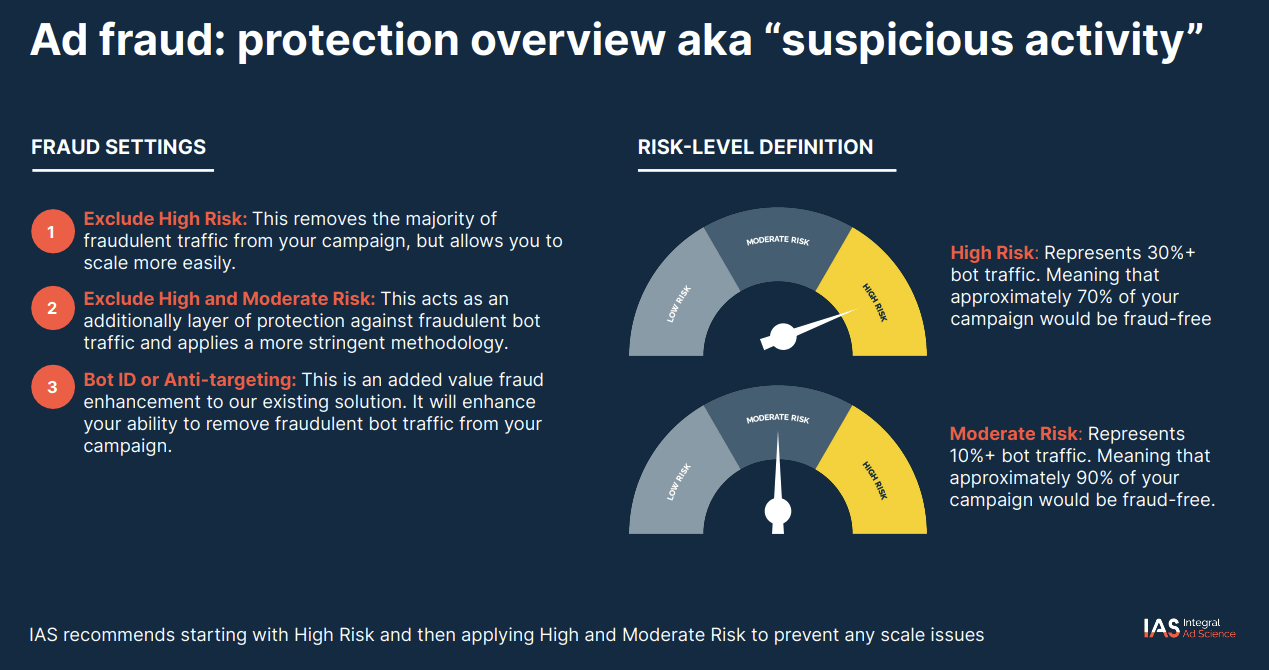

According to IAS’ public documentation, IAS offers a pre-bid segment to prevent ad fraud or “suspicious activity”. These segments can help “remove fraudulent bot traffic from your campaign.”

Screenshot of IAS technical documentation, describing IAS “Suspicious Activity” Pre-Bid Segments; Source: https://go.integralads.com/rs/469-VBI-606/images/IAS_Xandr_User_Guide.pdf; Archived: https://perma.cc/93NM-2S2Q

According to IAS’ public documentation, IAS’ Ad Fraud Solution utilizes multiple detection methodologies for maximum protection. This three-pillar approach is marketed as being “powered by unmatched scale and machine learning, providing the most accurate detection & prevention.” The IAS Ad Fraud Solution claims to use “rules-based detection [...] to identify any anomalous behavior patterns”, “AI/Machine Learning [...] using big data to detect any hidden, uncommon patterns”, and “malware analysis & reverse engineering to uncover any emerging threats.”

Screenshot of IAS technical documentation, describing IAS Ad Fraud Solution; Source: https://go.integralads.com/rs/469-VBI-606/images/IAS_Xandr_User_Guide.pdf; Archived: https://perma.cc/93NM-2S2Q

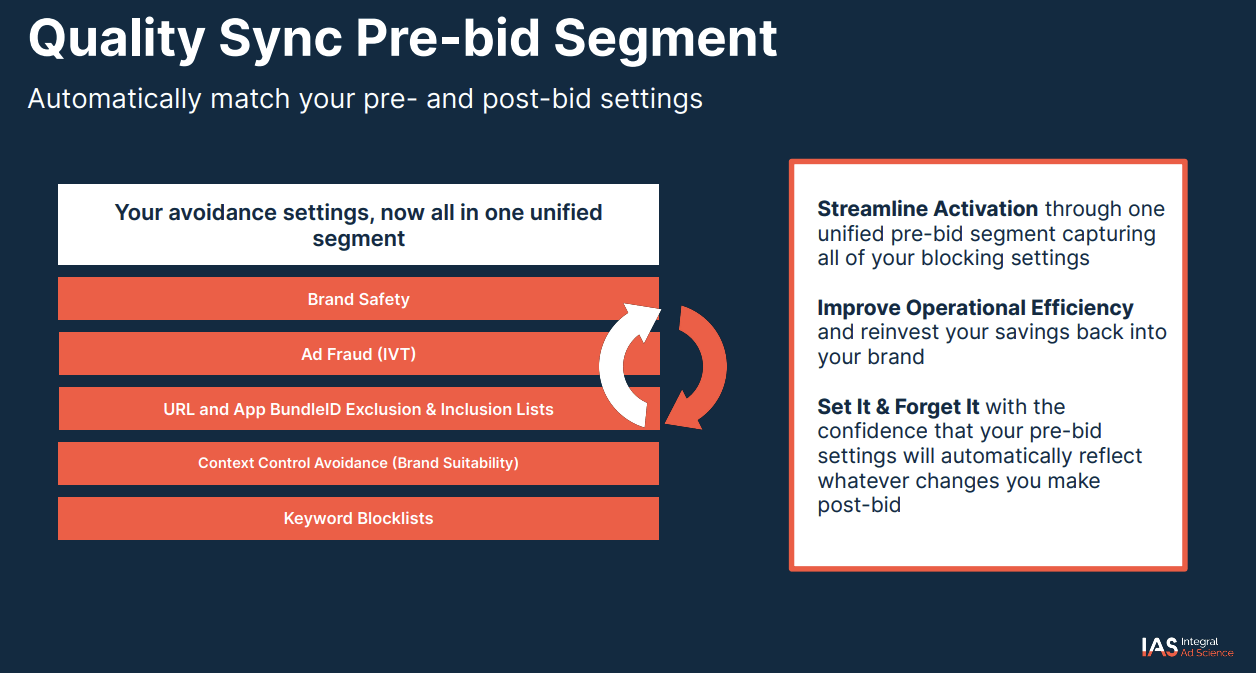

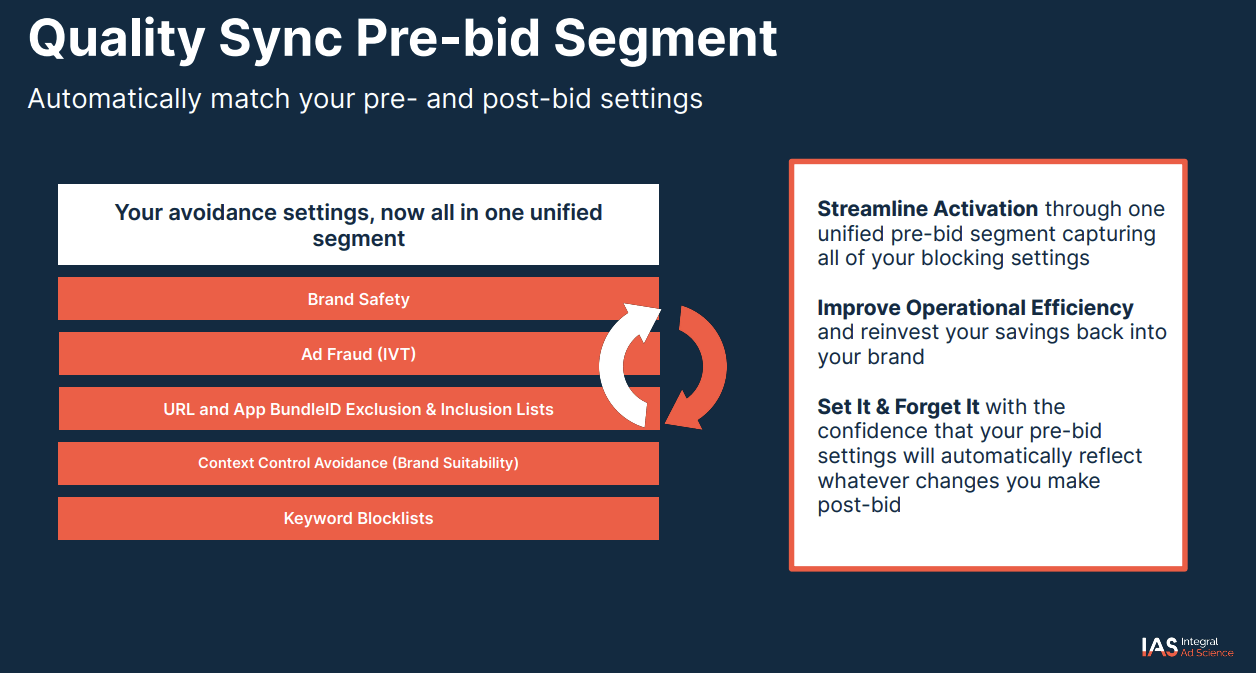

According to IAS’ public documentation, IAS offers a “Quality Sync” Pre-bid segment which “automatically matches your pre- and post-bid settings” including “Ad Fraud (IVT)” settings.

Screenshot of IAS technical documentation, describing IAS Quality Sync Pre-Bid Segments; Source: https://go.integralads.com/rs/469-VBI-606/images/IAS_Xandr_User_Guide.pdf; Archived: https://perma.cc/93NM-2S2Q


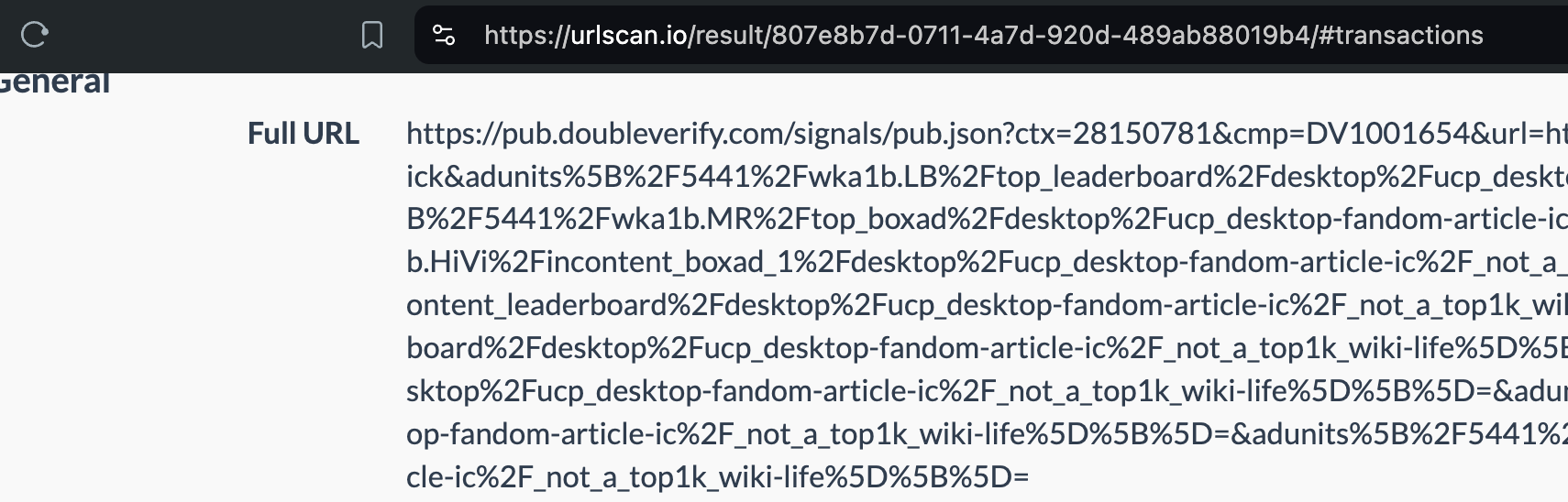

Background - DoubleVerify ad fraud solutions

DoubleVerify (DV) is an ad verification company that has received multiple Media Rating Council accreditations, and is TAG “Certified Against Fraud”. DoubleVerify’s website states “DV analyzes over 2 billion impressions daily, identifying comprehensive fraud and SIVT — from hijacked devices to bot fraud and injected ads. We're accredited by the Media Rating Council (MRC) for monitoring and blocking across devices — including mobile app, and our AI-backed deterministic methodology results in greater accuracy, fewer false positives and, ultimately, superior protection for brands.”

DoubleVerify provides ad verification solutions to both advertisers and to media publishers.

DoubleVerify offers “pre-bid targeting” solutions, which block before an “impression is purchased”, including “pre-bid avoidance segments”.

DoubleVerify’s public website states: “DV offers the most comprehensive and accurate pre-bid avoidance targeting available in the market. Pre-bid avoidance targeting helps brands drive efficiency from their programmatic media spend by preventing their bidding on auctions misaligned with their ad delivery standards. The solution also helps publishers by preventing the sale of impressions that ultimately result in a block and potential forfeit opportunity [...] Our millions of fraud signatures are updated nearly 100 times per day (every 15 minutes) in our DSP integrations to ensure near-immediate programmatic protection from invalid traffic.”

DoubleVerify further states: “DV analyzes over 2 billion display and video impressions daily and provides the fastest, most complete fraud identification and protection available — across web, mobile app and CTV environments. Our AI-backed deterministic methodology results in greater accuracy, fewer false positives and, ultimately, superior protection. We pre-qualify your supply to ensure you offer only quality inventory to your advertiser partners — maximizing buyer value and campaign effectiveness. DV works with platform partners in a variety of ways. We can integrate our data directly into your platform to evaluate inventory quality at its source, provide the data needed to package high-quality inventory into easily accessible segments for advertisers, or integrate our metrics into your platform to make optimization easier for buyers. In all instances, you benefit from DV's trusted data, helping to enhance the value and marketability of your inventory.”

Background - Prior research on bot detection and filtration vendors’ technology by Shailin Dhar - “Mystery Shopping Inside the Ad Fraud Verification Bubble”

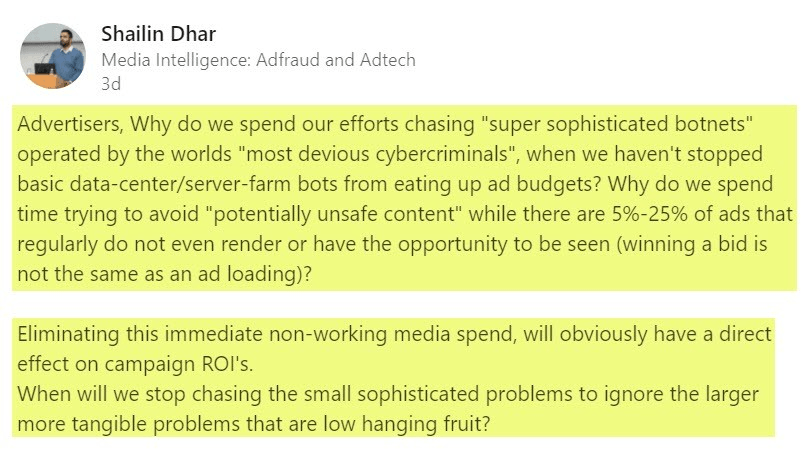

In 2017, the Financial Times cited Shailin Dhar, an ad tech industry expert who conducted research on detecting bots and ad fraud.

“Mr. Dhar remains skeptical about how far the industry will get in its fraud-fighting efforts, given that many constituents have a financial incentive to maintain the status quo. “Ad tech companies have made billions of dollars a year from fraudulent traffic,” he says. “Fraud is built into the foundation of advertising supply.””

Shailin Dhar previously commented in a LinkedIn post:

“Advertisers, Why do we spend our efforts chasing “super sophisticated botnets” operated by the worlds “most devious cybercriminals”, when we haven’t stopped basic data-center/server-farms from eating up ad budgets?”

Source: https://www.linkedin.com/pulse/marketers-stop-distracting-yourself-focus-ad-fraud/

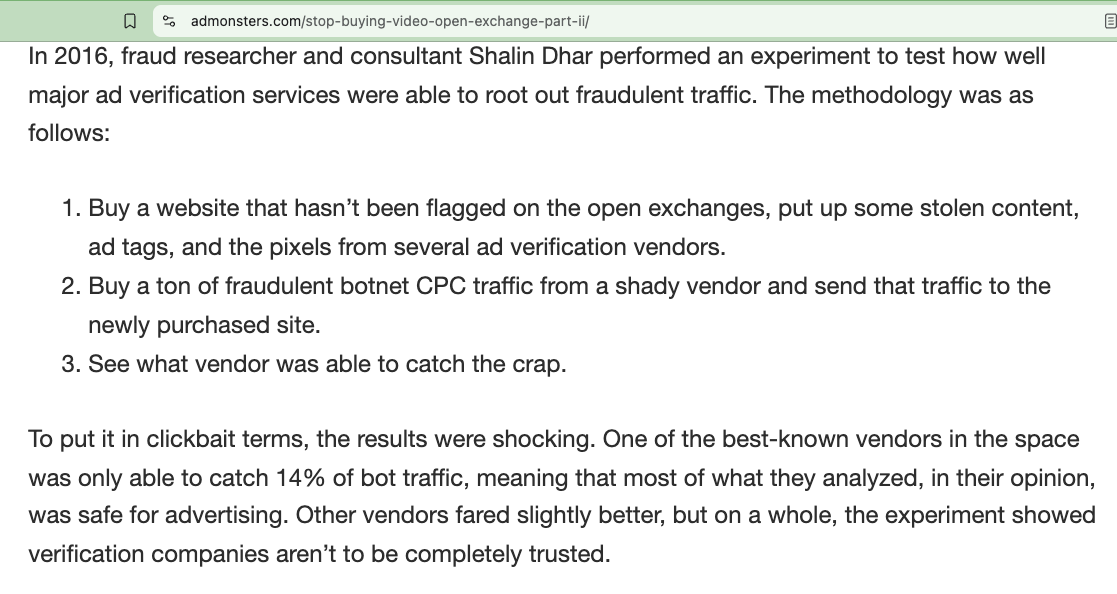

According to an AdMonsters article,

“In 2016, fraud researcher and consultant Shailin Dhar performed an experiment to test how well major ad verification services were able to root out fraudulent traffic. The methodology was as follows:

Buy a website that hasn’t been flagged on the open exchanges, put up some stolen content, ad tags, and the pixels from several ad verification vendors.

Buy a ton of fraudulent botnet CPC traffic from a shady vendor and send that traffic to the newly purchased site.

See what vendor was able to catch the crap.

To put it in clickbait terms, the results were shocking. One of the best-known vendors in the space was only able to catch 14% of bot traffic, meaning that most of what they analyzed, in their opinion, was safe for advertising. Other vendors fared slightly better, but on a whole, the experiment showed verification companies aren’t to be completely trusted.”

Screenshot of an AdMonsters.com article from 2017.

According to Shailin Dhar’s research from 2017, Integral Ad Science was able to correctly identify only 17% of the bot traffic Mr. Dhar paid to run against an artificial website he created. Mr. Dhar says: “83% of the robotic traffic we purchased was considered human by the Integral Ad Science filter. When I informed the source traffic vendor that the rate was 17% they said that the Integral Ad Science sampling got lucky because it’s usually around 5%”.

Source: “Mystery Shopping Inside the Ad Fraud Verification Bubble”


Background - US Senators’ letters ask Federal Trade Commission to investigate “willful blindness to fraudulent activity in the online ad market”

In 2016, two US Senators - Mark Warner and Chuck Schumer - wrote a letter to the Federal Trade Commission (FTC), asking the agency to take a “closer look at the negative economic impact of digital advertising fraud on consumers and advertisers”.

According to AdExchanger, the “senators contend that ad fraud unchecked will trigger a rise in wasted marketing costs that will eventually get passed down to the consumer in the form of higher prices for goods and services.”

The Senators wrote: “The cost of pervasive fraud in the digital advertising space will ultimately be paid by the American consumer in the form of higher prices for goods and services [...] It remains to be seen whether voluntary, market-based oversight is sufficient to protect consumers and advertisers.”

The Senators also asked: "To the extent that criminal organizations are involved in perpetuating digital advertising fraud, how is the FTC coordinating with both law enforcement (e.g., the Department of Homeland Security or the Federal Bureau of Investigation) and the private sector formulate an appropriate response?"

Senator Warner wrote another letter in 2018, saying “I am writing to express my continued concern with the prevalence of digital advertising fraud, and in particular the inaction of major industry stakeholders in curbing these abuses. In 2016, Senator Schumer and I wrote Chairwoman Ramirez to express frustration with the growing phenomenon of digital ad fraud. Digital ad fraud has only grown since that time”.

The Senators asked the Federal Trade Commission to investigate “the extent to which major ecosystem stakeholders engage in willful blindness to fraudulent activity in the online ad market.”

Adalytics public interest research objectives

The following outlines Adalytics’ research objectives which are believed to be in the public interest.

Did any advertisers have their ads served to bots operating out of data center server farms?

Did any US government or US military advertisers have their ads served to bots by ad tech vendors?

Did any non-profit, NGO, or charity advertisers have their ads served to bots by ad tech vendors?

Were any ads served to bots by vendors who made public claims about filtering out bot traffic before an ad impression was served? If so, which vendors who made such public proclamations were observed serving ads to bots?

Research Methodology

This empirical, observational research study did not actively manipulate independent control variables or seek to generate new data. Rather, the study was premised on observing and analyzing data that was already generated by other entities.

Detecting bot traffic is an ever-changing discipline. Bot detection vendors continuously upgrade their detection algorithms, whilst entities who operate bots for benign and malicious purposes seek to circumvent those detections by applying increasingly sophisticated or novel evasions.

This study circumvents the difficulties of accurately detecting bot traffic by instead relying entirely on data wherein the ground truth is universally known. Specifically, this observational research study chose to source 100% of observations and data from three bot operators who are not actively seeking to commit ad fraud.

As such, there is no ambiguity about whether the ads in these contexts were served to humans or bots: given the provenance of the data, every one of these ad impressions was served to a bot.

To the best of our knowledge, this study constitutes the largest analysis of declared bot traffic in the context of digital advertising.

Research Methodology - Bot web traffic source #1 - HTTP Archive

The first source of bot traffic data was HTTP Archive. The HTTP Archive is part of the Internet Archive, a 501(c)(3) non-profit.

The HTTP Archive “Tracks How the Web is Built.” HTTP Archive states that they “periodically crawl the top sites on the web and record detailed information about fetched resources, used web platform APIs and features, and execution traces of each page. We then crunch and analyze this data to identify trends — learn more about our methodology.”

Screenshot of the HTTP Archive home page - https://httparchive.org/

The HTTP Archive publishes academic research reports about the state of web technology trends on the open internet.

Screenshot of the HTTP Archive “All Reports” page - https://httparchive.org/reports

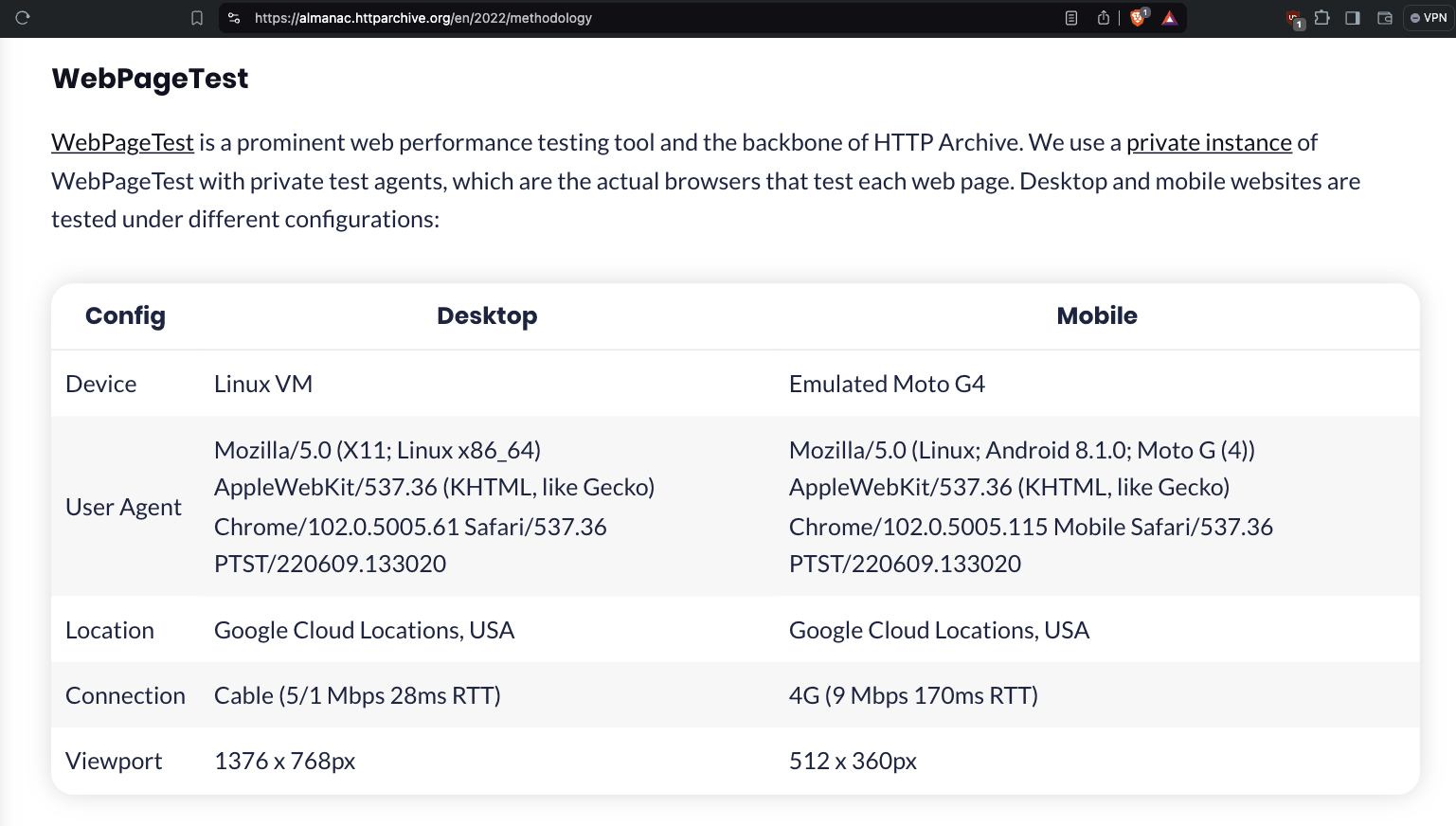

The HTTP Archive crawls millions of URLs on both desktop and mobile monthly. “The URLs come from the Chrome User Experience Report, a dataset of real user performance data of the most popular websites. The list of URLs is fed to our private instance of WebPageTest on the 1st of each month. As of March 1 2016, the tests are performed on Chrome for desktop and emulated Android (on Chrome) for mobile. The test agents are run from Google Cloud regions across the US. Each URL is loaded once with an empty cache ("first view") for normal metrics collection and again, in a clean browser profile, using Lighthouse. The data is collected via a HAR file. The HTTP Archive collects these HAR files, parses them, and populates various tables in BigQuery.”

Screenshot of the HTTP Archive’s FAQ page - https://httparchive.org/faq

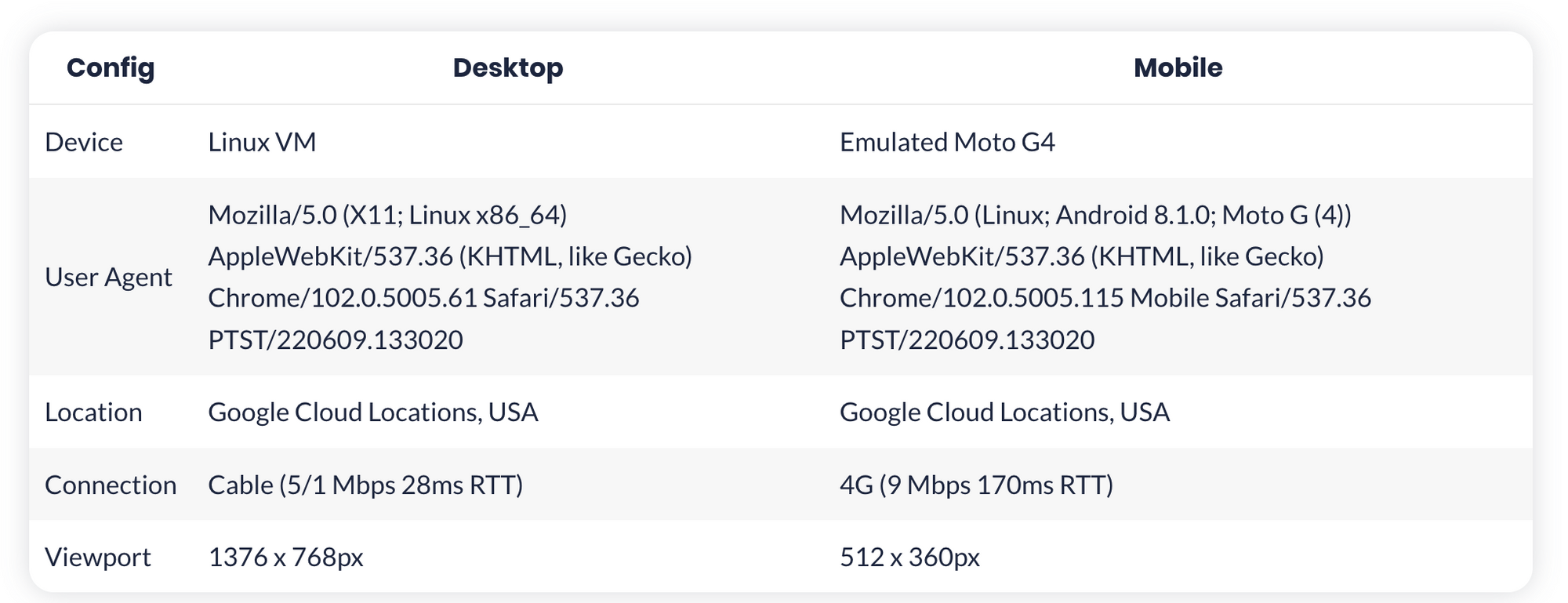

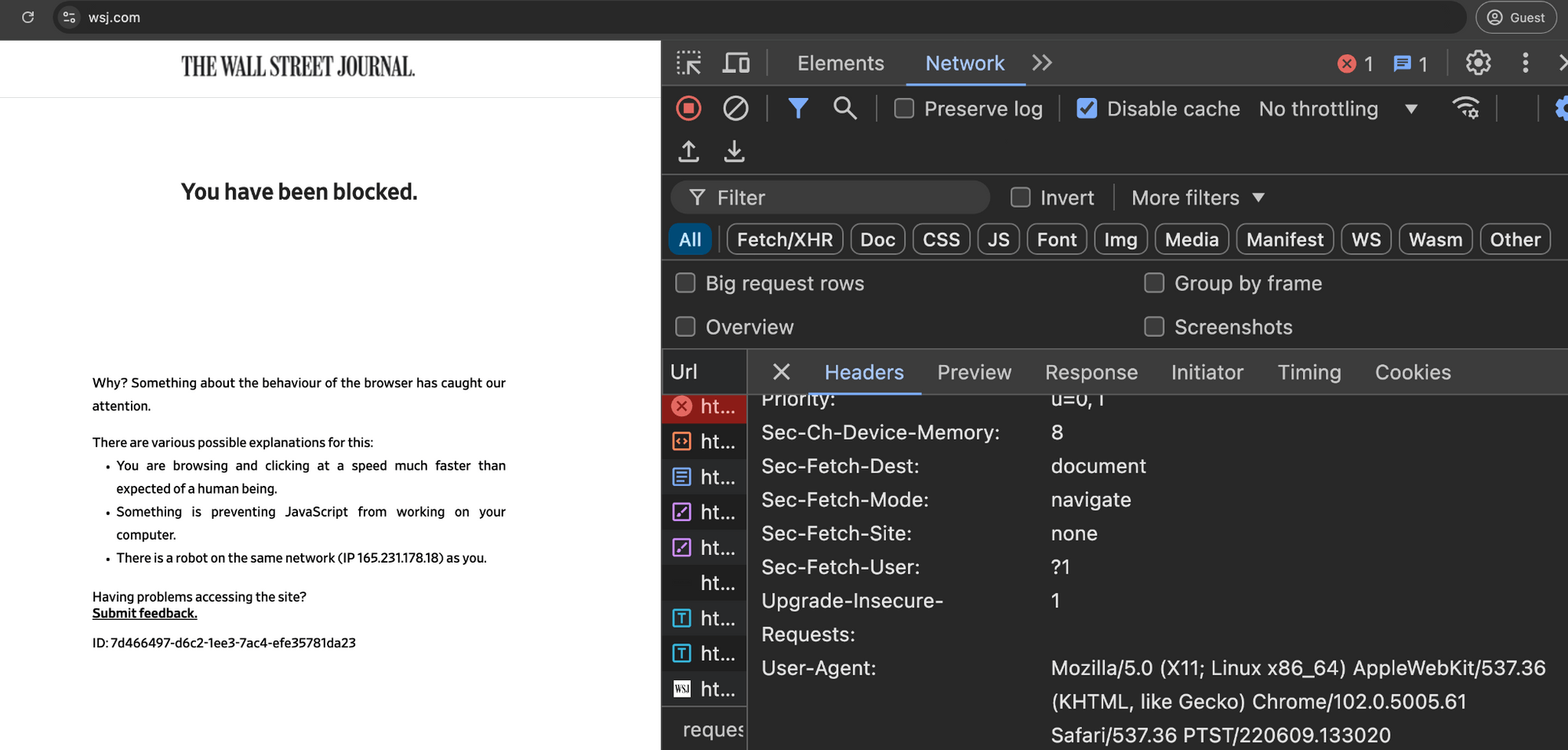

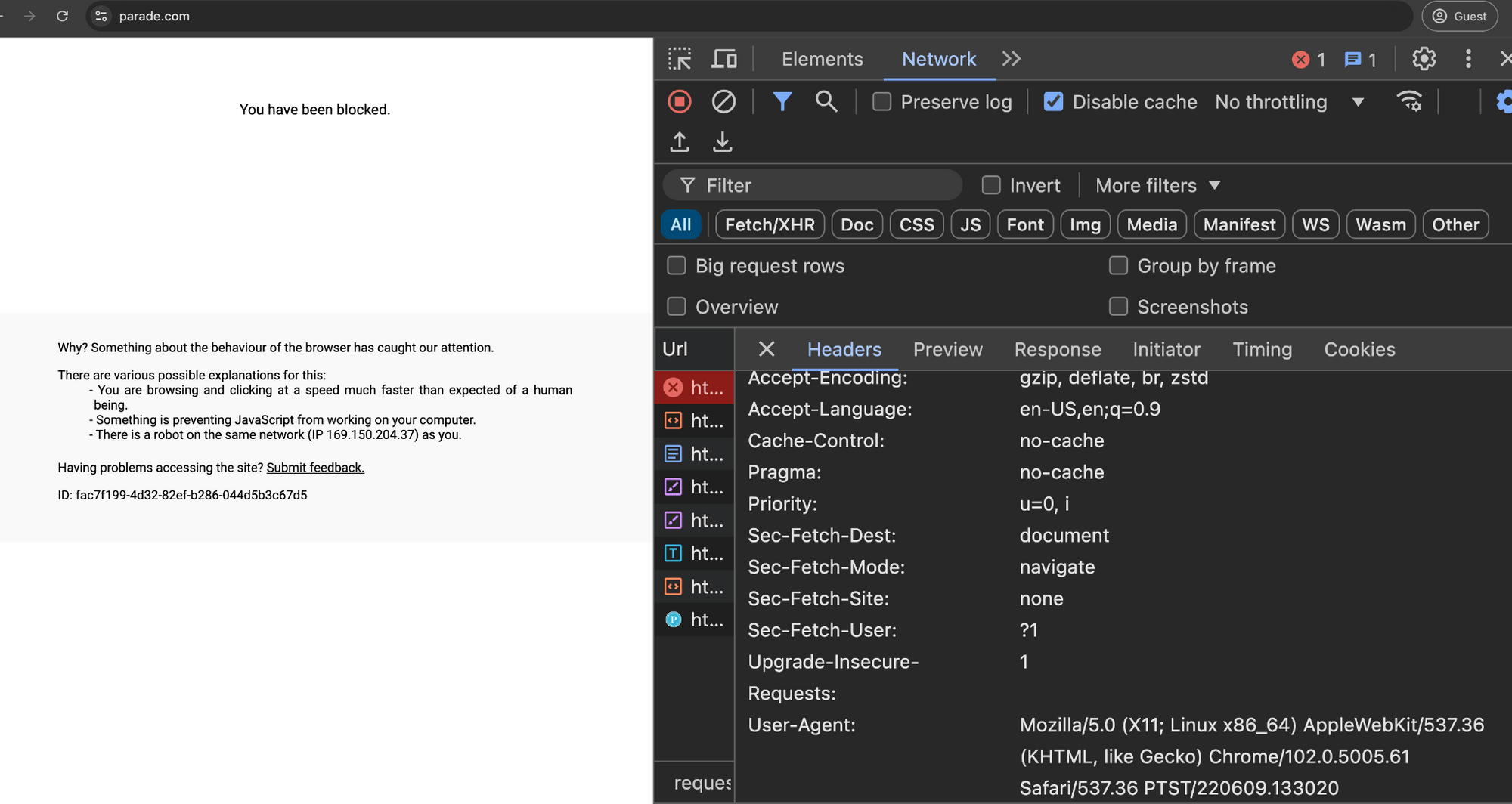

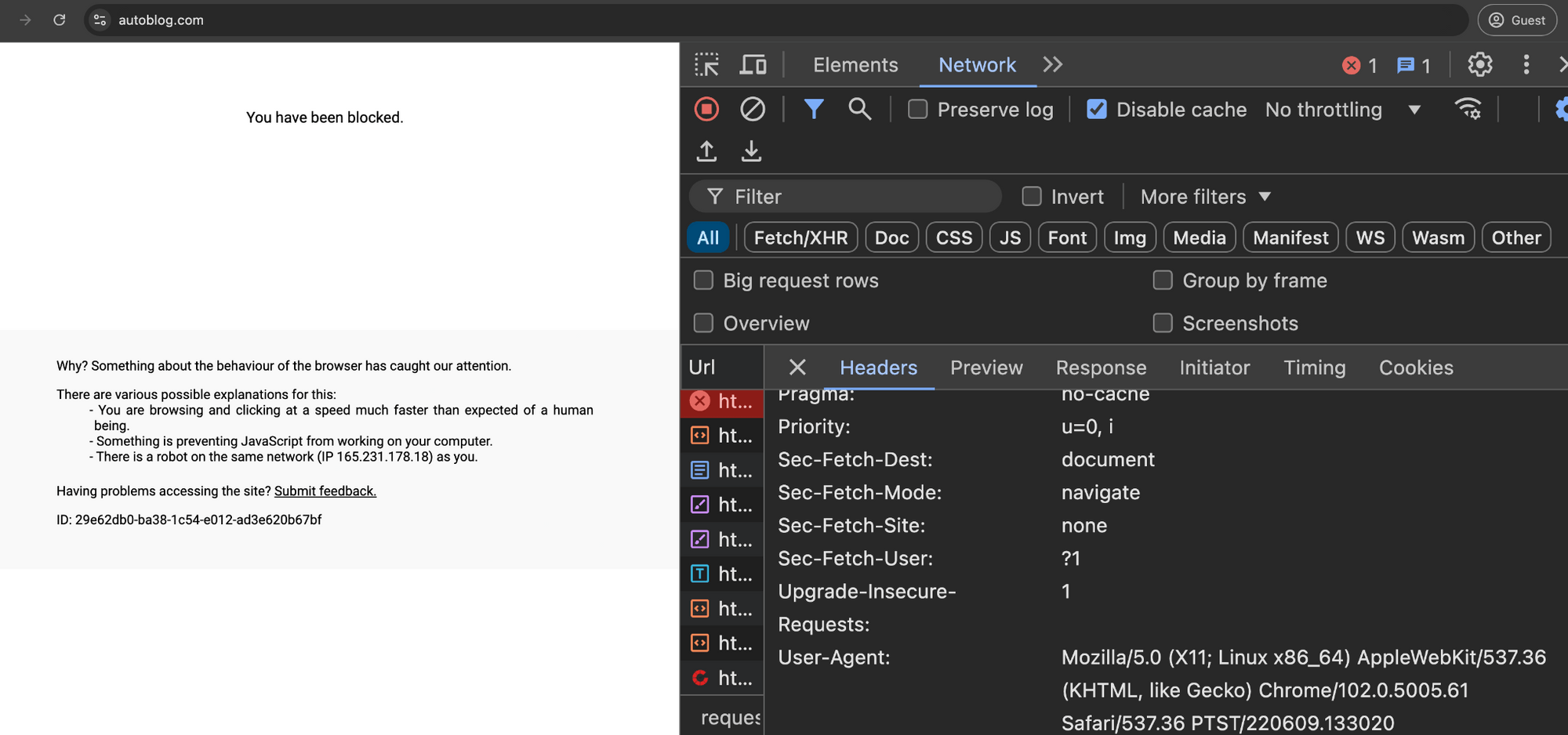

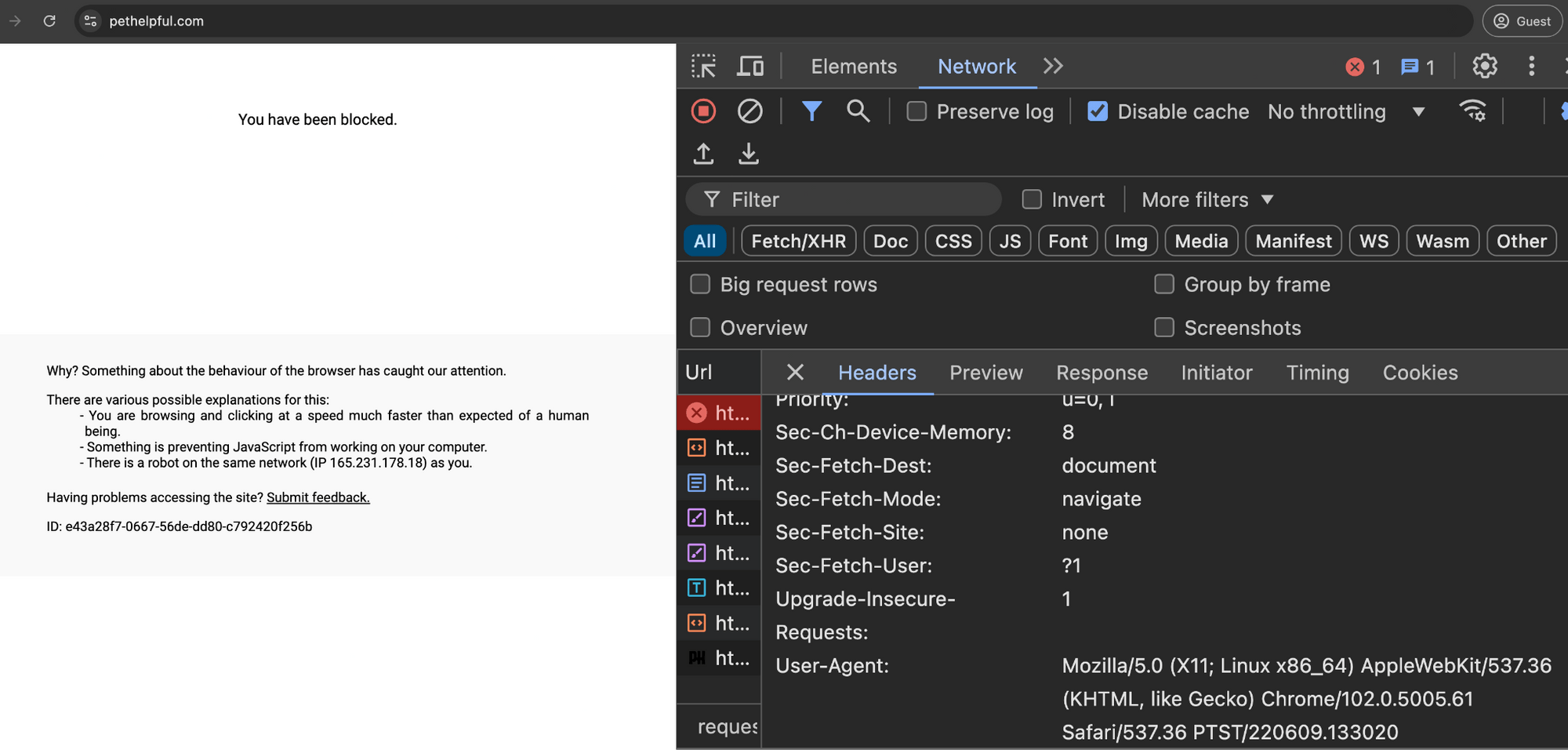

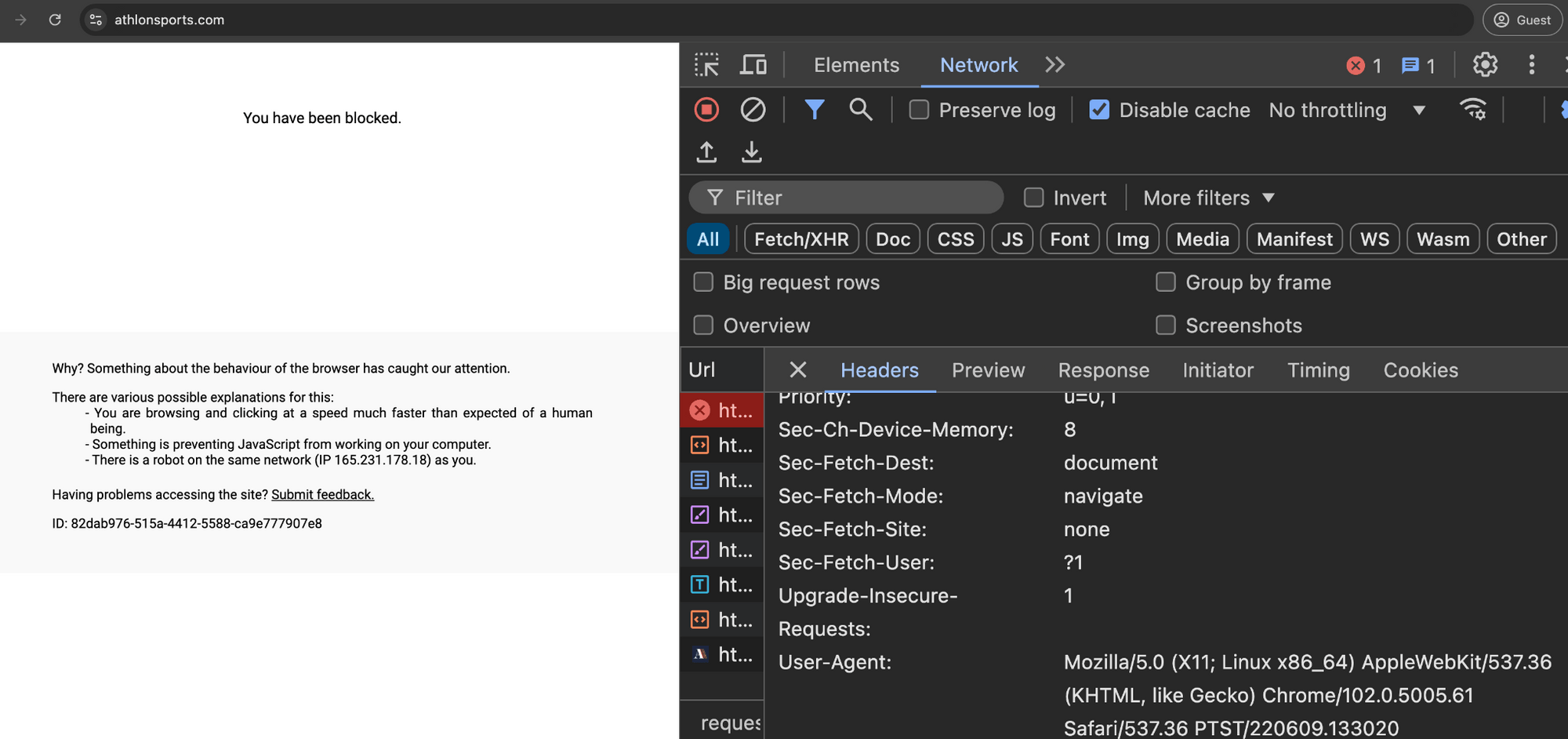

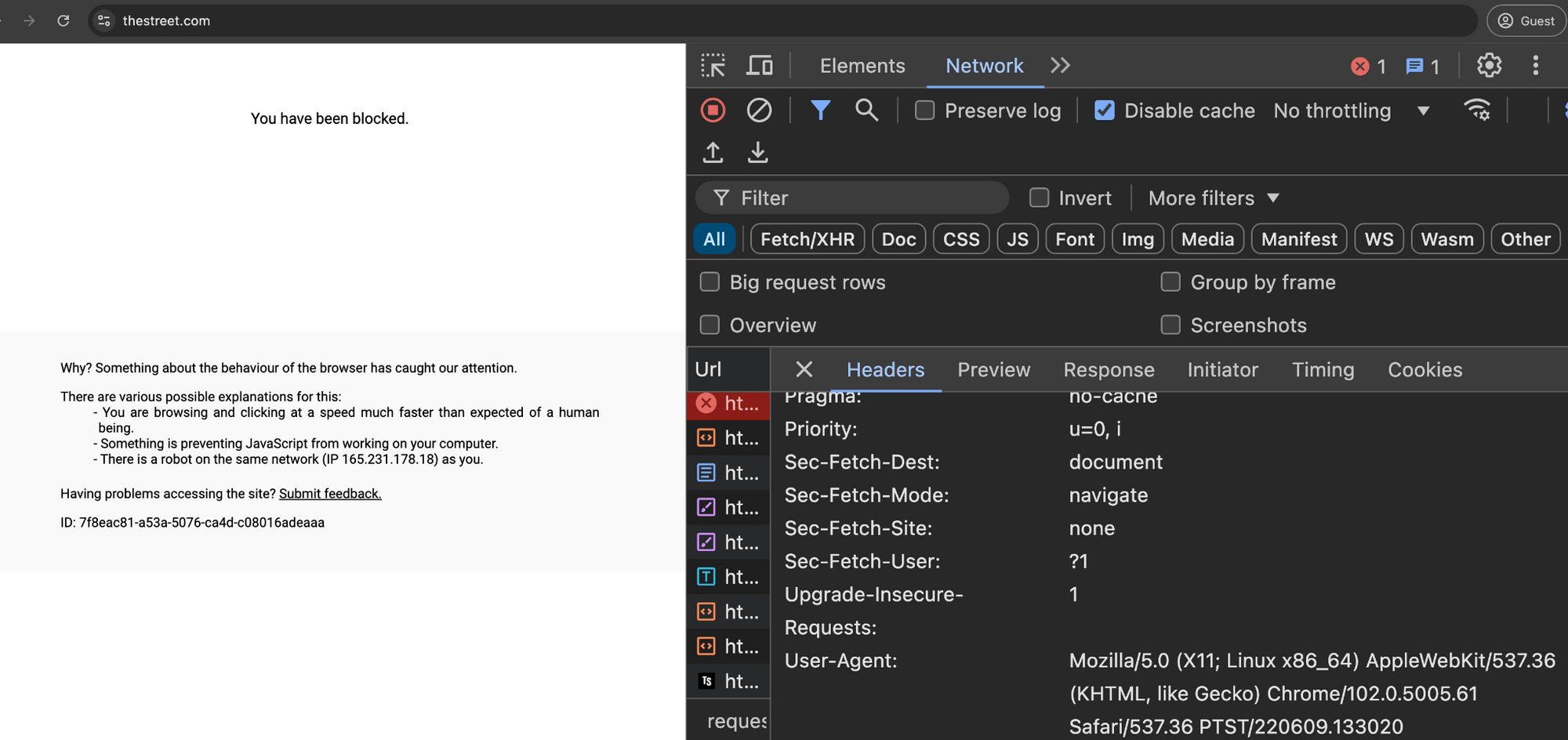

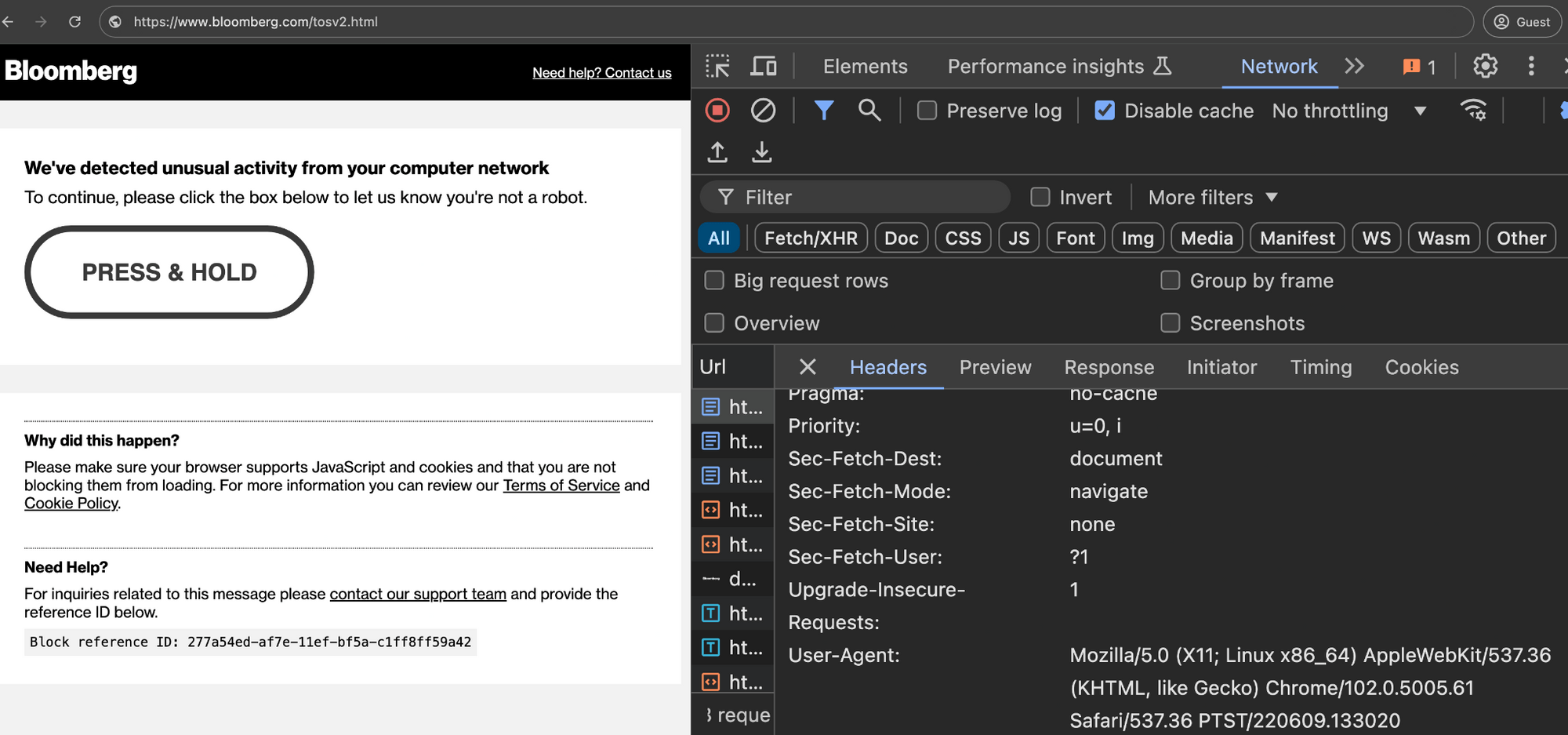

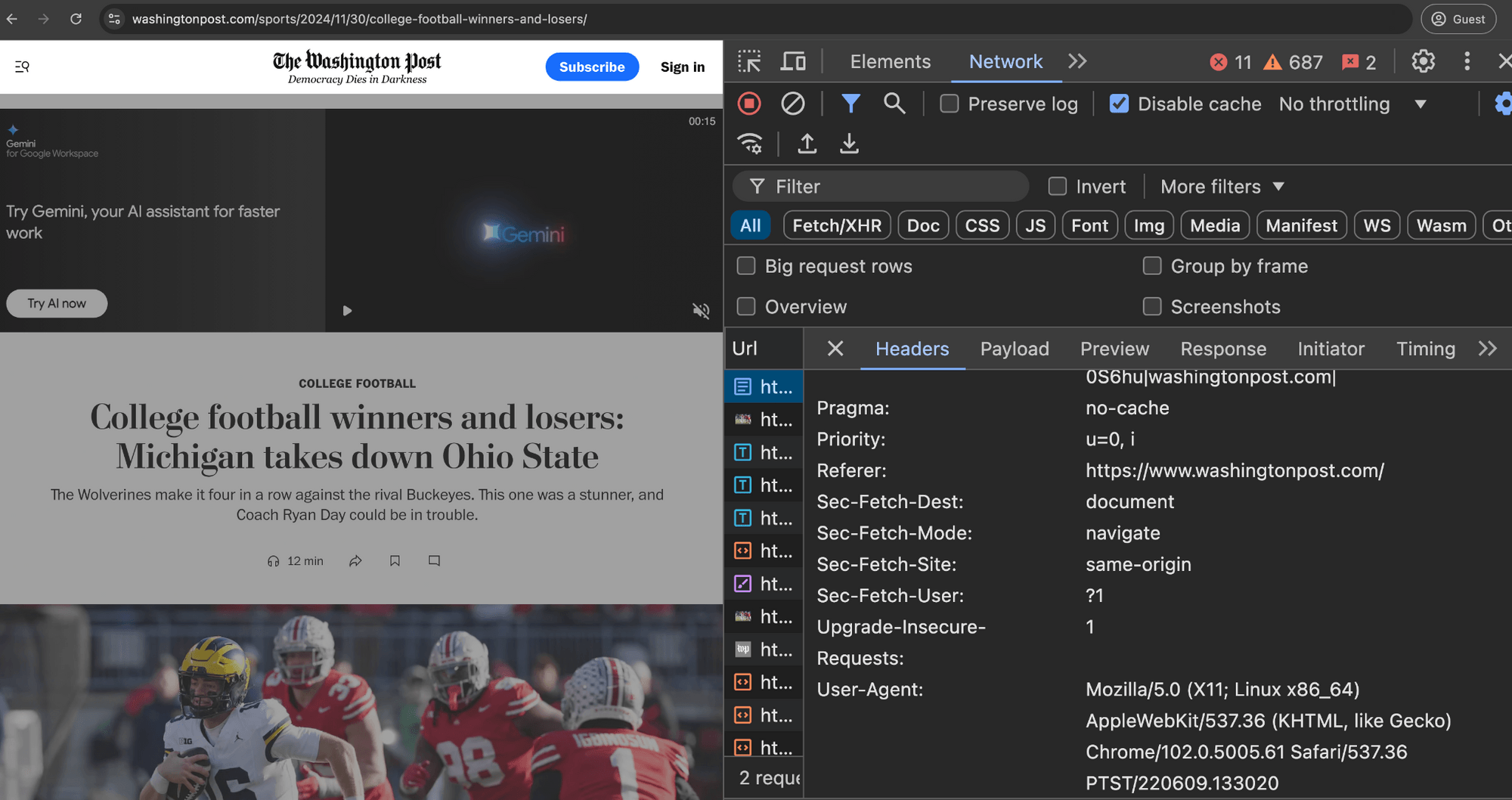

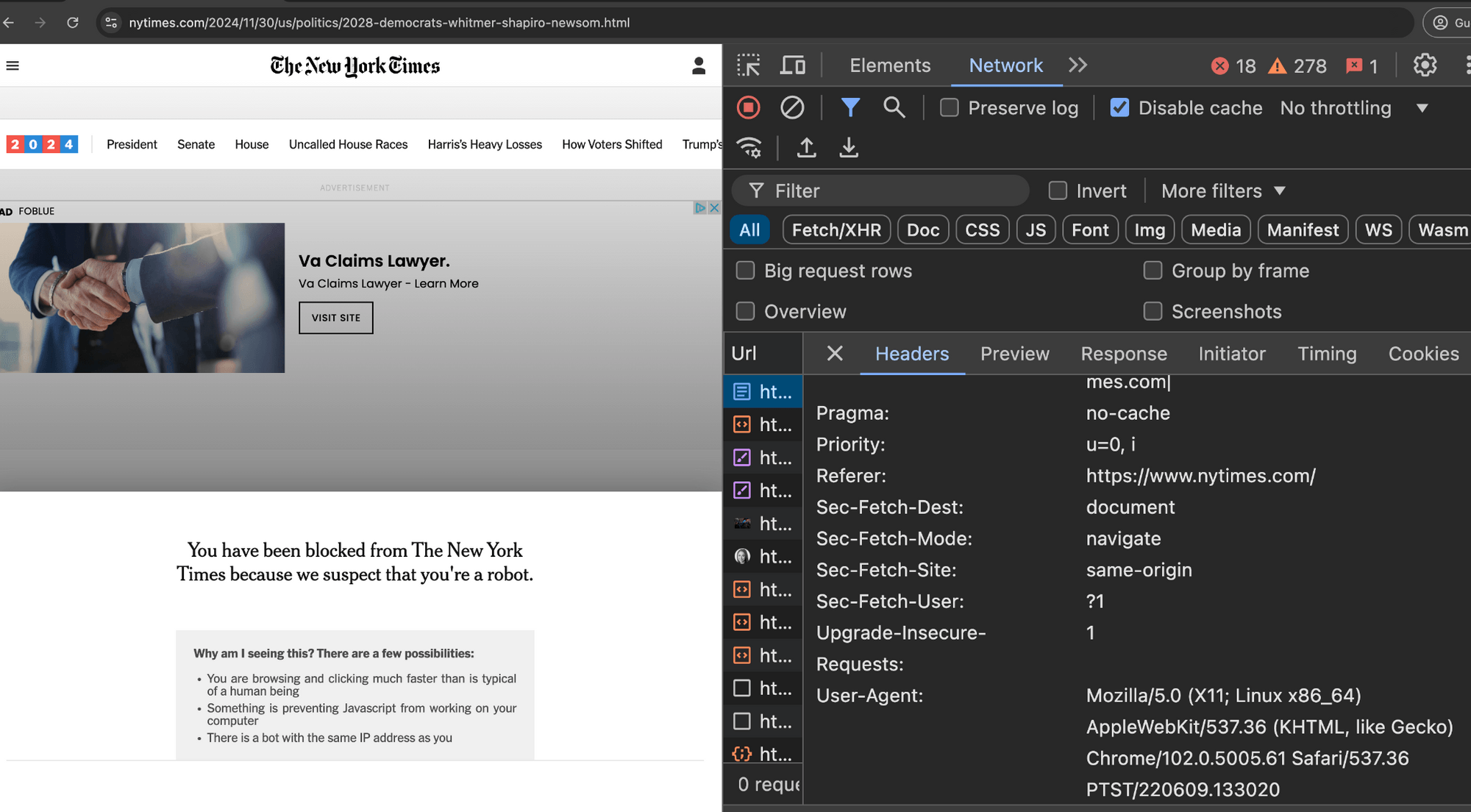

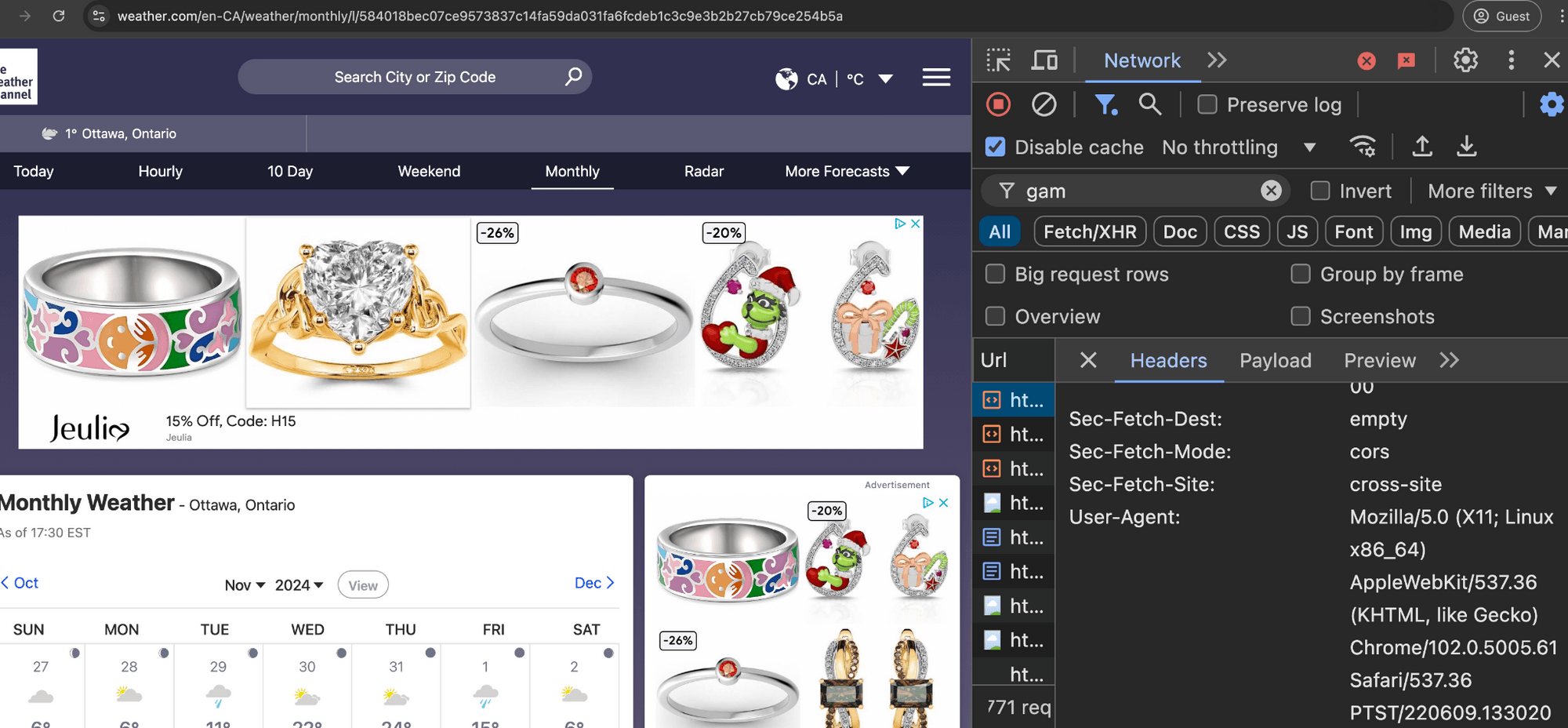

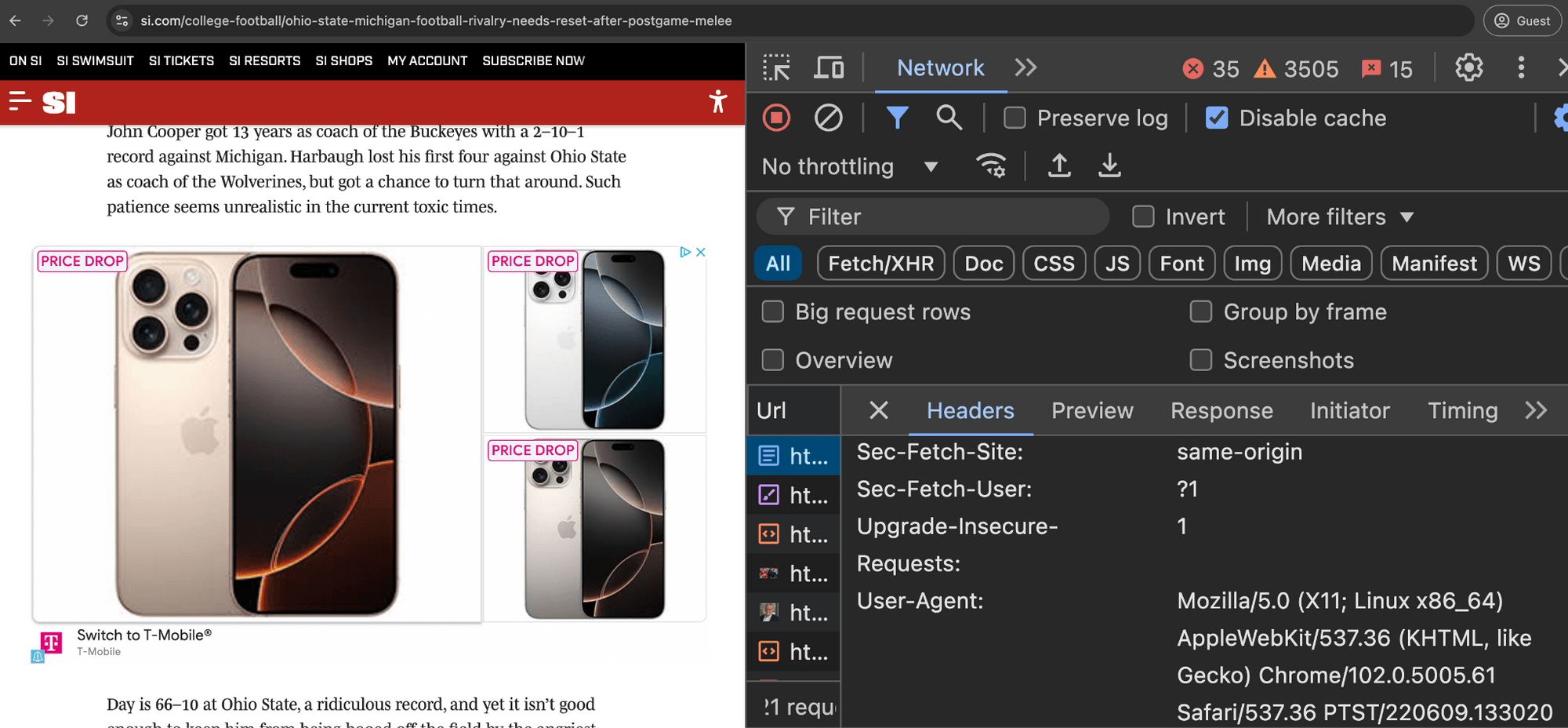

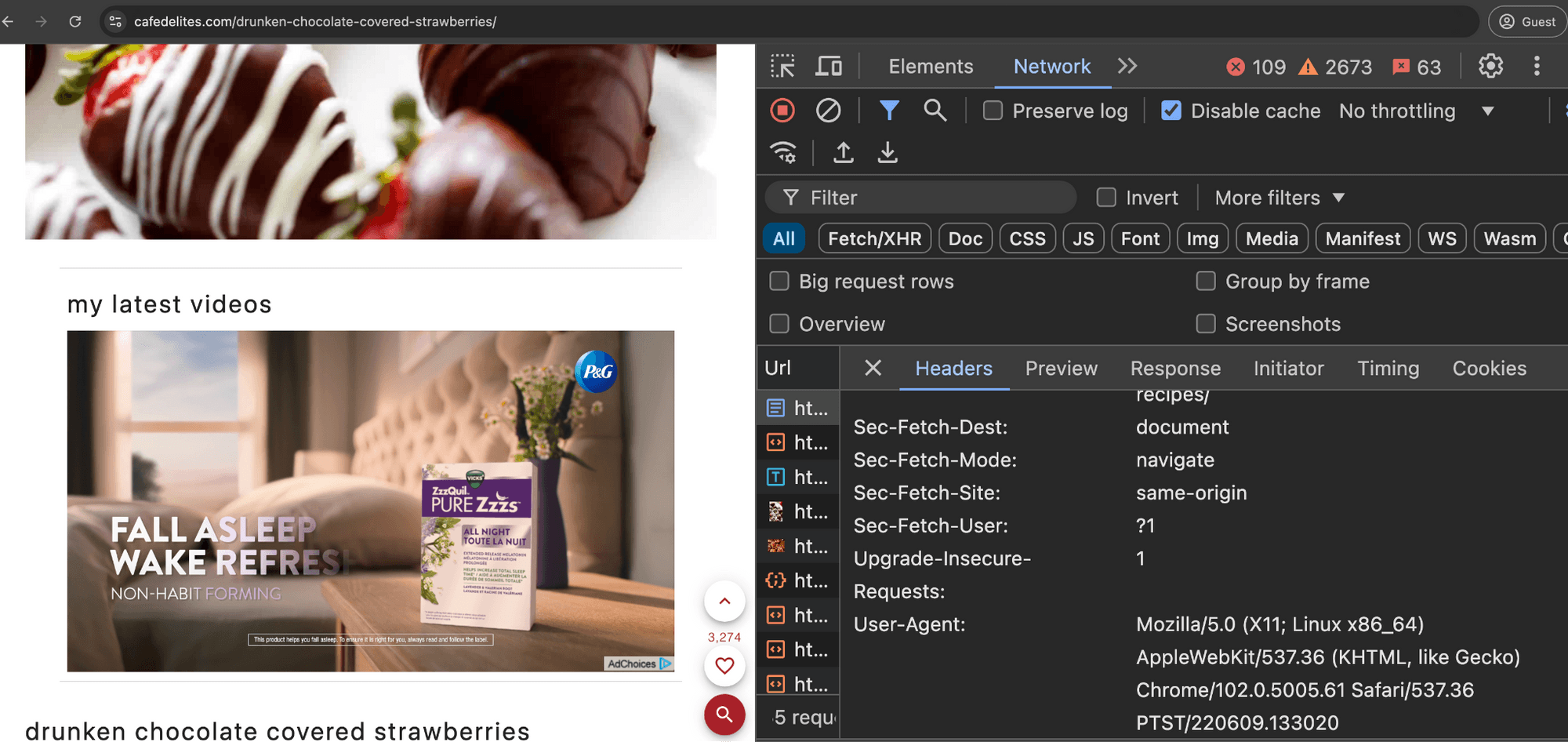

The HTTP Archive crawls websites using desktop and mobile user agents. Per the HTTP Archive’s methodology, it crawls from Google Cloud data centers. The HTTP Archive openly and transparently declares its crawlers via the HTTP User-Agent request header. Specifically, HTTP Archive states that its crawlers declare the following desktop and mobile user agents:

Desktop: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.61 Safari/537.36 PTST/220609.133020

Mobile: Mozilla/5.0 (Linux; Android 8.1.0; Moto G (4)) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.115 Mobile Safari/537.36 PTST/220609.133020

Screenshot of the HTTP Archive’s methodology page - https://almanac.httparchive.org/en/2022/methodology

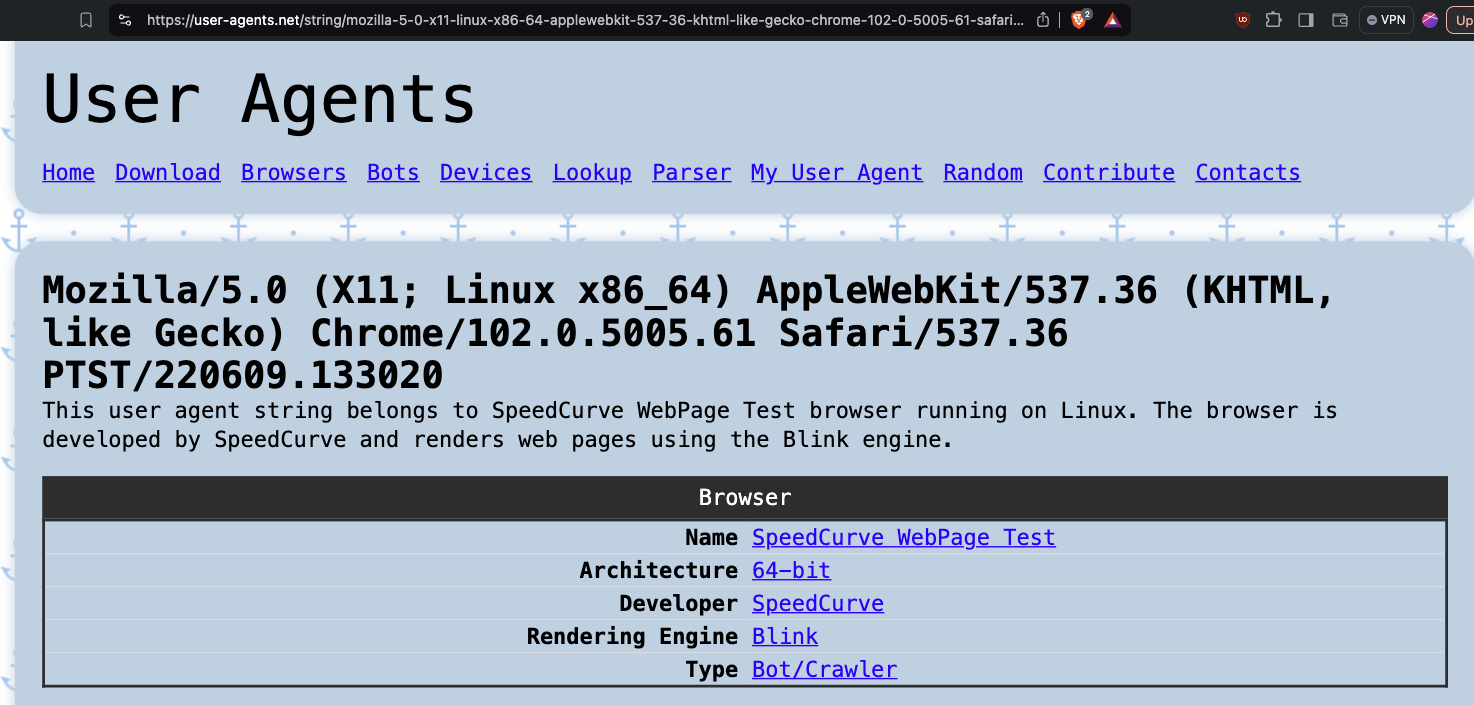

“PTST” is a well-known and established bot user agent. One can check the publicly available user-agents.net database to confirm that “PTST” appears as a “Bot/Crawler”.

Screenshot of the User-Agents.net database, showing that “PTST” is a known “Bot/Crawler” - https://user-agents.net/string/mozilla-5-0-x11-linux-x86-64-applewebkit-537-36-khtml-like-gecko-chrome-102-0-5005-61-safari-537-36-ptst-220609-133020

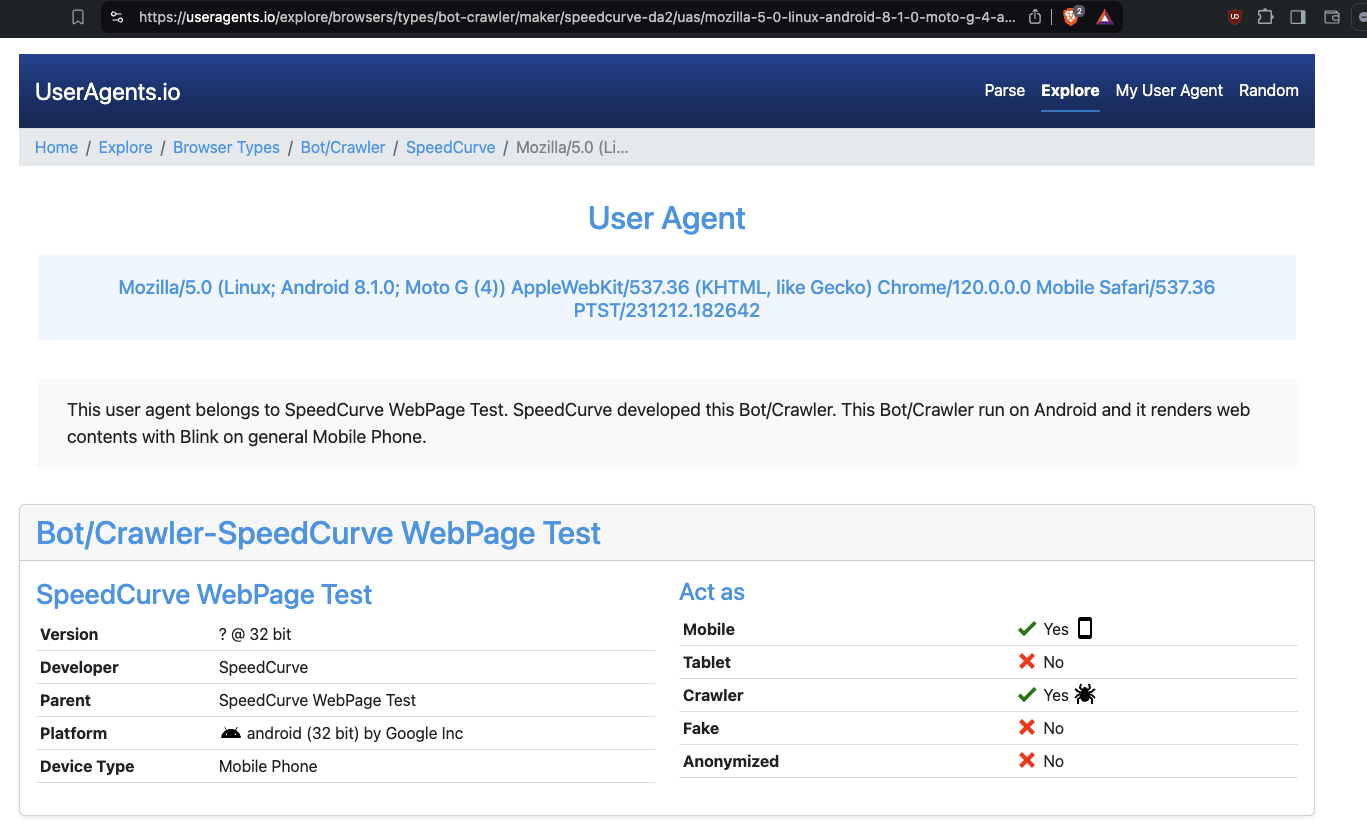

UserAgents.io - another public user agent database - also confirms that “PTST” is a known Crawler.

Screenshot of UserAgents.io - another public user agent database - showing that “PTST” is a known Crawler.

“PTST” has also been on the IAB Tech Lab Spiders and Bots list since 2013. “The IAB Tech Lab publishes a comprehensive list of such Spiders and Robots that helps companies identify automated traffic such as search engine crawlers, monitoring tools, and other non-human traffic that they don’t want included in their analytics and billable counts.” The “spiders and robots list supports the MRC’s General Invalid Traffic Detection and Filtration Standard by providing a common industry resource and list for facilitating IVT detection and filtration.”

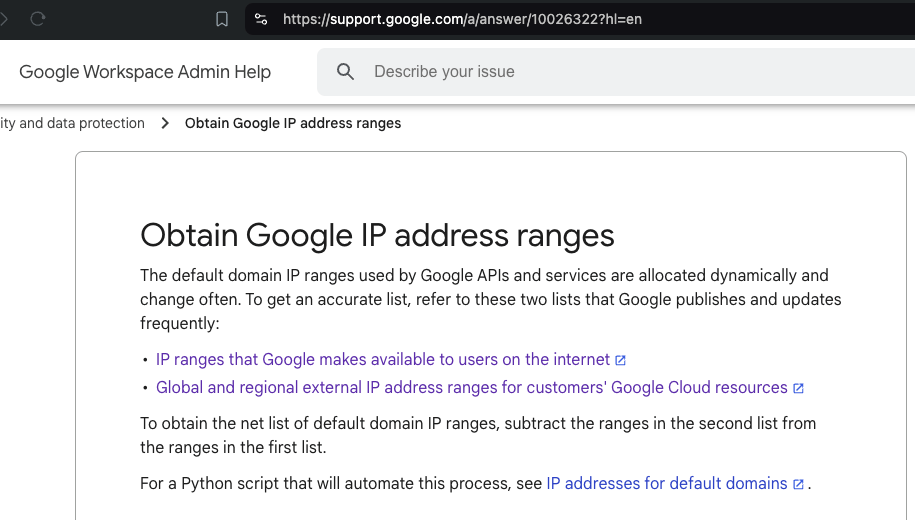

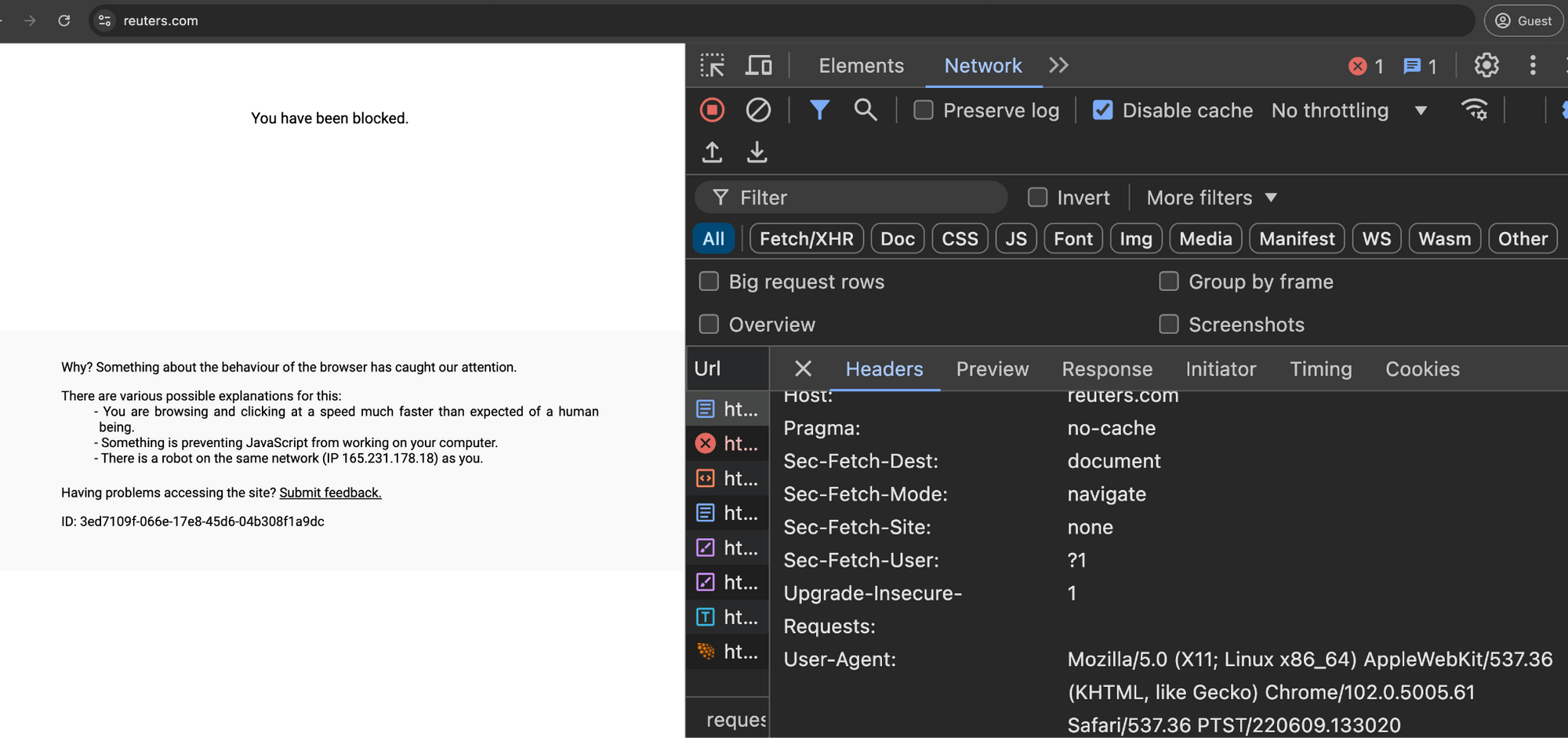

HTTP Archive crawls from Google Cloud data center server farms. The IPs operated by Google Cloud are publicly documented on Google’s website - https://support.google.com/a/answer/10026322?hl=en

Screenshot of Google’s public documentation, showing how one can obtain Google Cloud’s IP address ranges
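A membership check against published ranges of this kind can be sketched as follows. The sketch assumes the published file is JSON with a `prefixes` array whose entries carry an `ipv4Prefix` or `ipv6Prefix` key; that shape should be verified against the live file before relying on it. The sample document below uses reserved documentation ranges, not real Google Cloud addresses, and the function names are illustrative.

```python
import ipaddress


def networks_from_ranges(doc: dict) -> list:
    """Build network objects from a parsed ranges document (assumed shape)."""
    networks = []
    for prefix in doc.get("prefixes", []):
        cidr = prefix.get("ipv4Prefix") or prefix.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks


def in_published_ranges(ip: str, networks: list) -> bool:
    """True when the address falls inside any of the given networks."""
    addr = ipaddress.ip_address(ip)
    # Comparing an IPv4 address with an IPv6 network simply yields False.
    return any(addr in net for net in networks)


# Made-up sample using reserved documentation ranges (not real cloud IPs).
sample_doc = {
    "prefixes": [
        {"ipv4Prefix": "192.0.2.0/24"},
        {"ipv6Prefix": "2001:db8::/32"},
    ]
}
sample_networks = networks_from_ranges(sample_doc)
```

A linear scan is adequate for a sketch; a production filter handling many lookups per second would likely use a prefix tree or sorted interval lookup instead.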

As a reminder, the Media Rating Council (MRC), an advertising accreditation body, says that the “MRC is requiring filtration of invalid data-center traffic originating from IPs associated to the three largest known hosting entities: Amazon AWS, Google and Microsoft. This means filtration of IPs within those of known hosting entities determined to be a consistent source of invalid traffic not including routing artifacts of legitimate users or virtual machine legitimate browsing” (emphasis added).

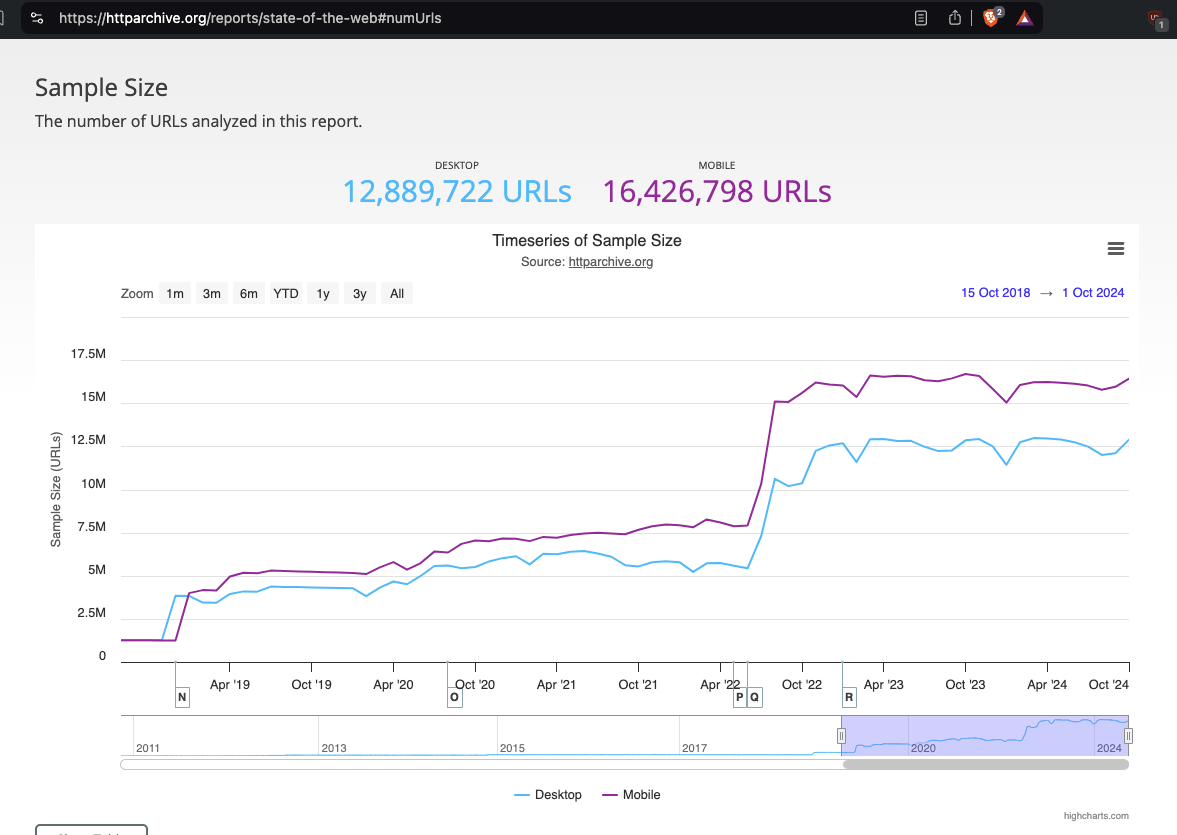

The HTTP Archive has crawled tens of millions of distinct page URLs over the course of several years.

Screenshot of the HTTP Archive Reports section, showing how many page URLs are crawled - https://httparchive.org/reports/state-of-the-web#numUrls

The HTTP Archive makes petabytes of web traffic data generated through its crawls available as a public dataset on Google BigQuery.

Screenshot of the HTTP Archive Google BigQuery dataset - https://httparchive.org/faq#how-do-i-use-bigquery-to-write-custom-queries-over-the-data

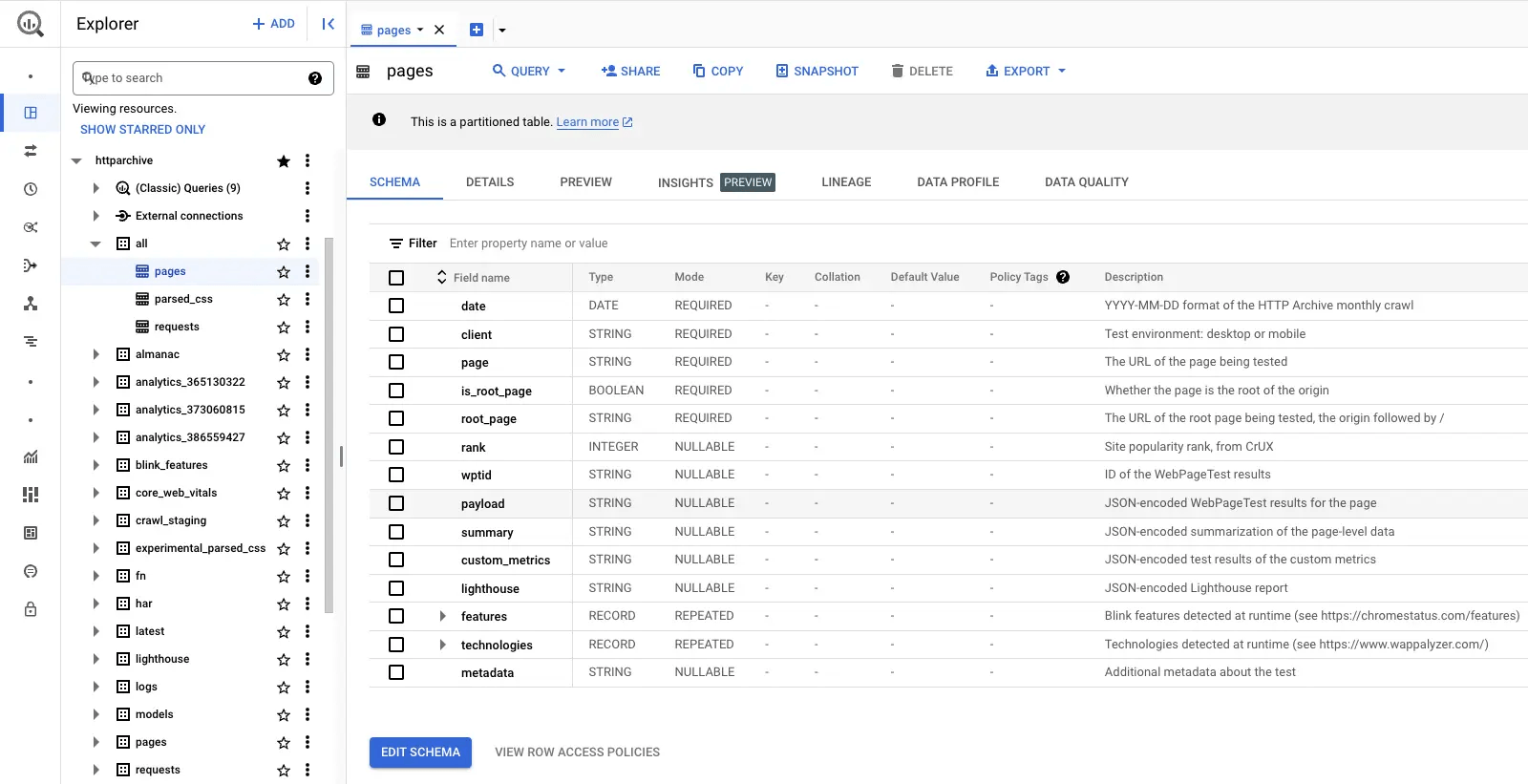

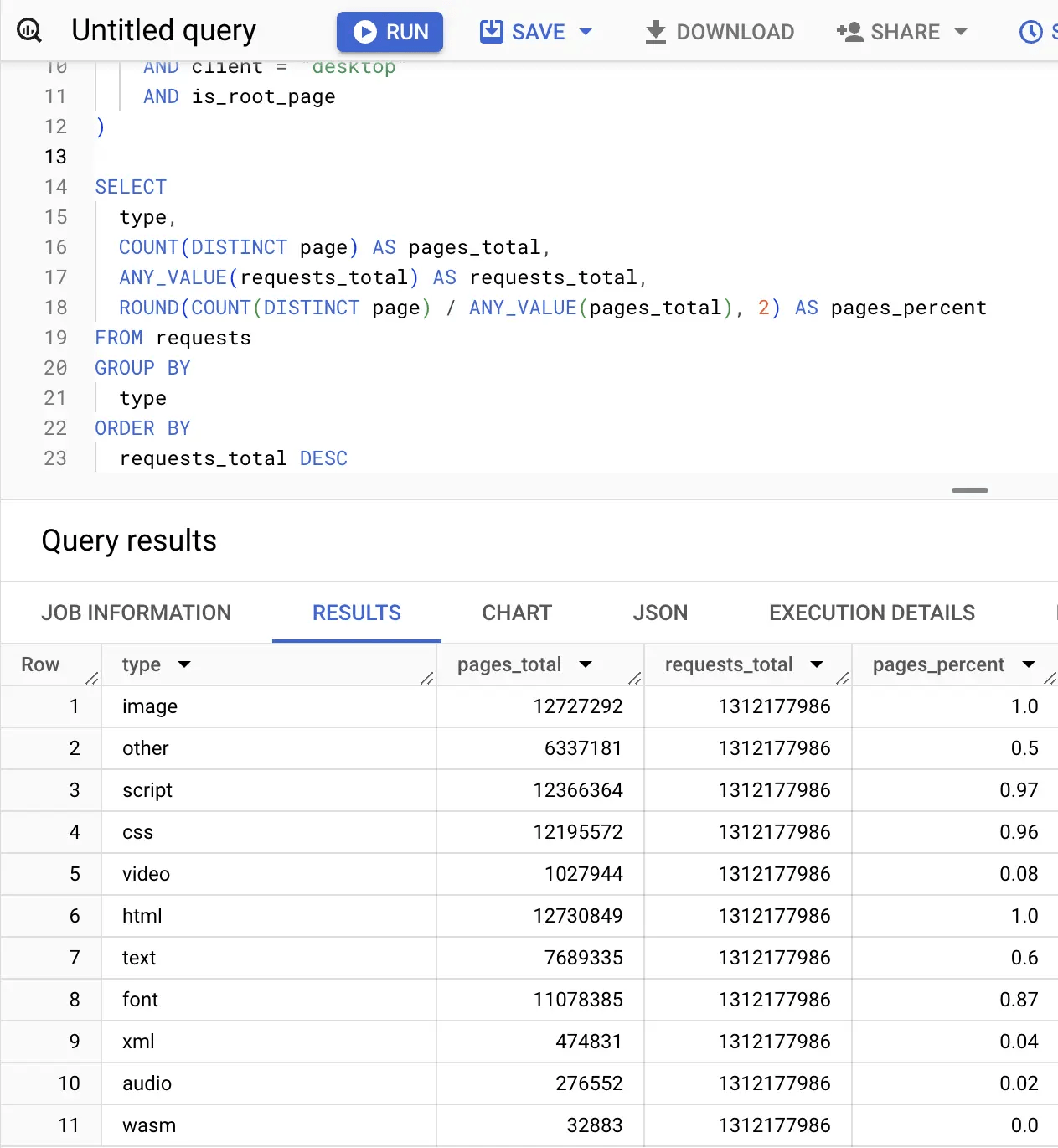

In the screenshot below, one can see examples of how a user can interface with and query the HTTP Archive dataset.

Example screenshot showing how to use Google BigQuery to query the public HTTP Archive dataset - https://har.fyi/guides/getting-started/


Querying the HTTP Archive dataset allows one to observe whether specific ad tech vendors transacted and/or served ads to declared bots in a known Google Cloud data center.

The Media Rating Council (MRC) states in its “Invalid Traffic Detection and Filtration Standards Addendum” that the “MRC is requiring filtration of invalid data-center traffic originating from IPs associated to the three largest known hosting entities: Amazon AWS, Google and Microsoft” (emphasis added).
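The kind of data-center IP check implied by this requirement can be sketched in a few lines of Python. The sketch below assumes a published range file shaped as a JSON object with a “prefixes” list whose entries carry an “ipv4Prefix” or “ipv6Prefix” key; the sample prefixes are reserved documentation ranges, not real Google Cloud ranges, and the function names are hypothetical.

```python
import ipaddress

# Sample document in the ASSUMED shape of a cloud provider's published IP
# range file. The prefixes below are reserved documentation ranges, not
# real Google Cloud ranges.
SAMPLE_RANGES = {
    "prefixes": [
        {"ipv4Prefix": "192.0.2.0/24"},
        {"ipv4Prefix": "198.51.100.0/24"},
        {"ipv6Prefix": "2001:db8::/32"},
    ]
}


def load_networks(doc):
    """Convert the published CIDR prefixes into ip_network objects."""
    networks = []
    for entry in doc.get("prefixes", []):
        cidr = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks


def is_data_center_ip(ip, networks):
    """Return True if the address falls inside any listed network."""
    addr = ipaddress.ip_address(ip)
    return any(addr.version == net.version and addr in net for net in networks)


networks = load_networks(SAMPLE_RANGES)
print(is_data_center_ip("192.0.2.55", networks))
print(is_data_center_ip("203.0.113.9", networks))
```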

Research Methodology - Bot web traffic source #2 - Anonymous web crawler vendor

The second bot dataset analyzed for the purposes of this research report came from an anonymous web crawler vendor. This vendor shared their web crawling data under condition of anonymity. The vendor crawls the web for various benign reasons - their purpose is not to commit ad fraud or generate ad revenue.

The given vendor crawls approximately seven million websites each month, from several dozen known data center IP addresses, such as Hetzner and Linode data center IPs.

The vendor does not declare via its user-agent HTTP request header that it is a bot; instead, the vendor declares “valid, human” browser user agents when visiting various websites. Thus, the bot’s HTTP User-Agent request header would likely not be found on the IAB’s list of known bots and spiders. The bot utilizes different IP addresses when initiating its crawl. The bot will occasionally scan the web from data center IPs as well as from residential proxy IPs. Thus, this bot’s browsing activity may possibly in some cases meet the MRC definition of “Sophisticated Invalid Traffic” (SIVT).

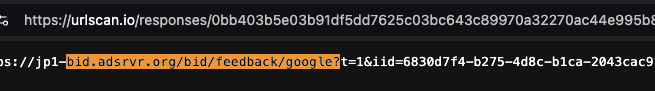

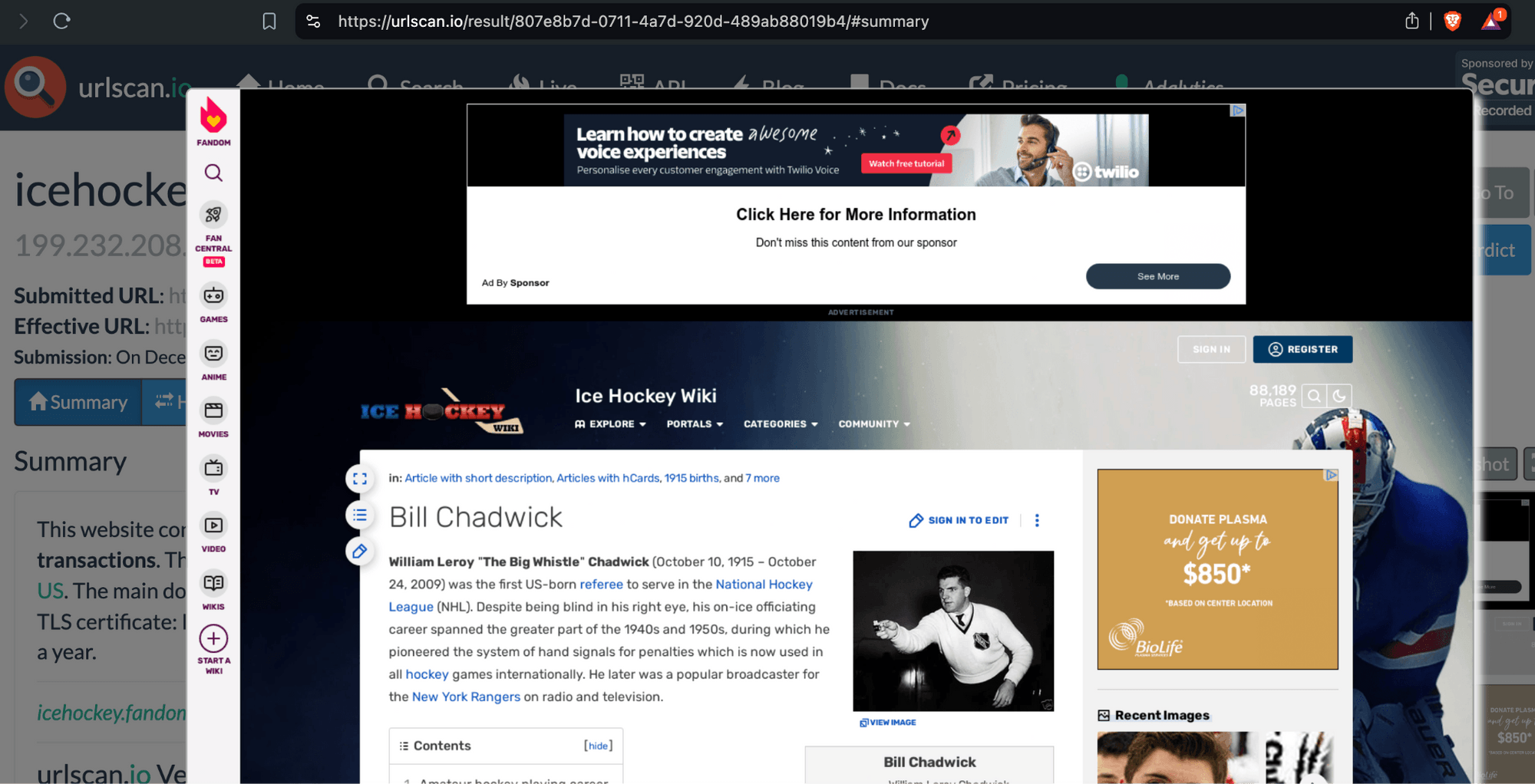

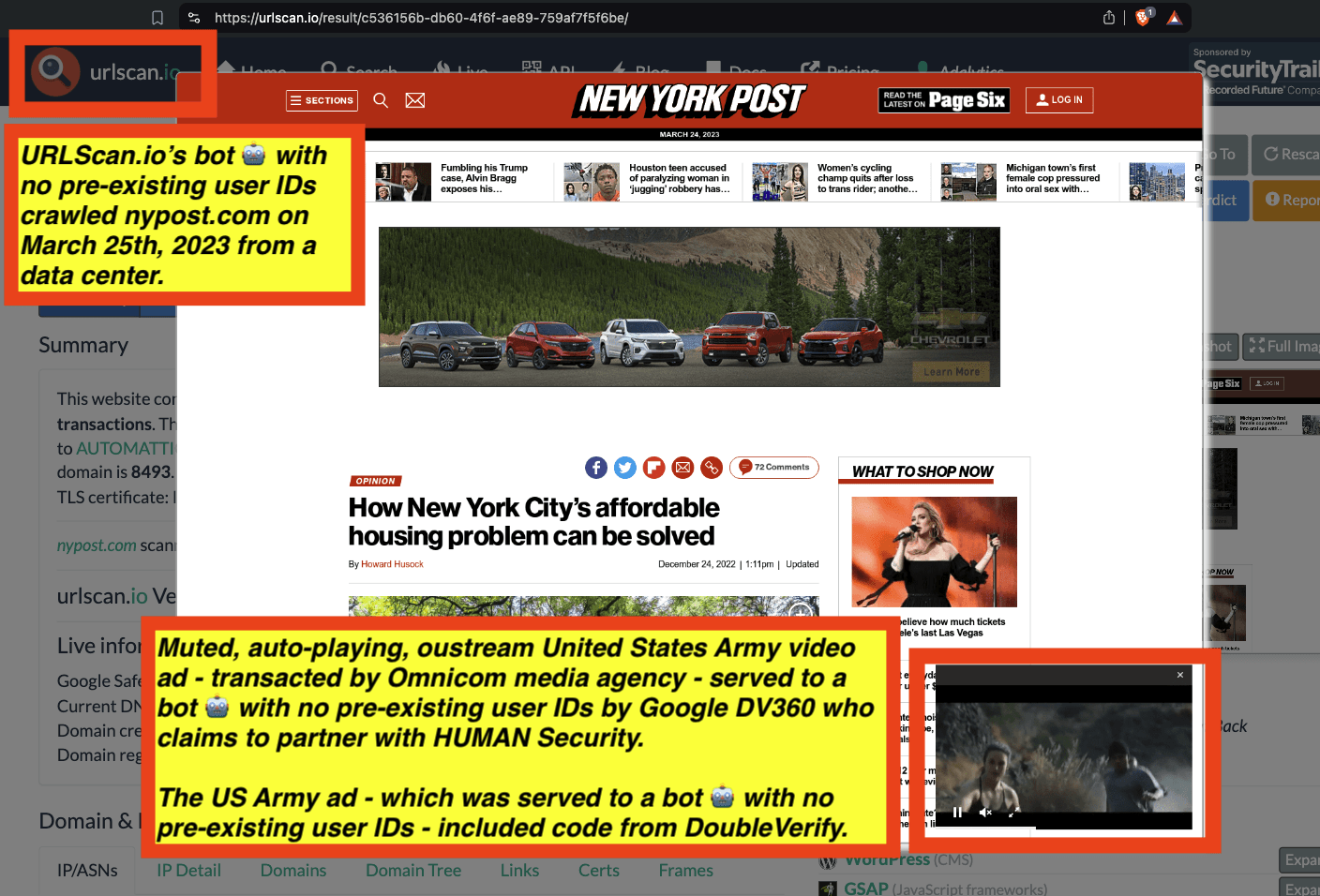

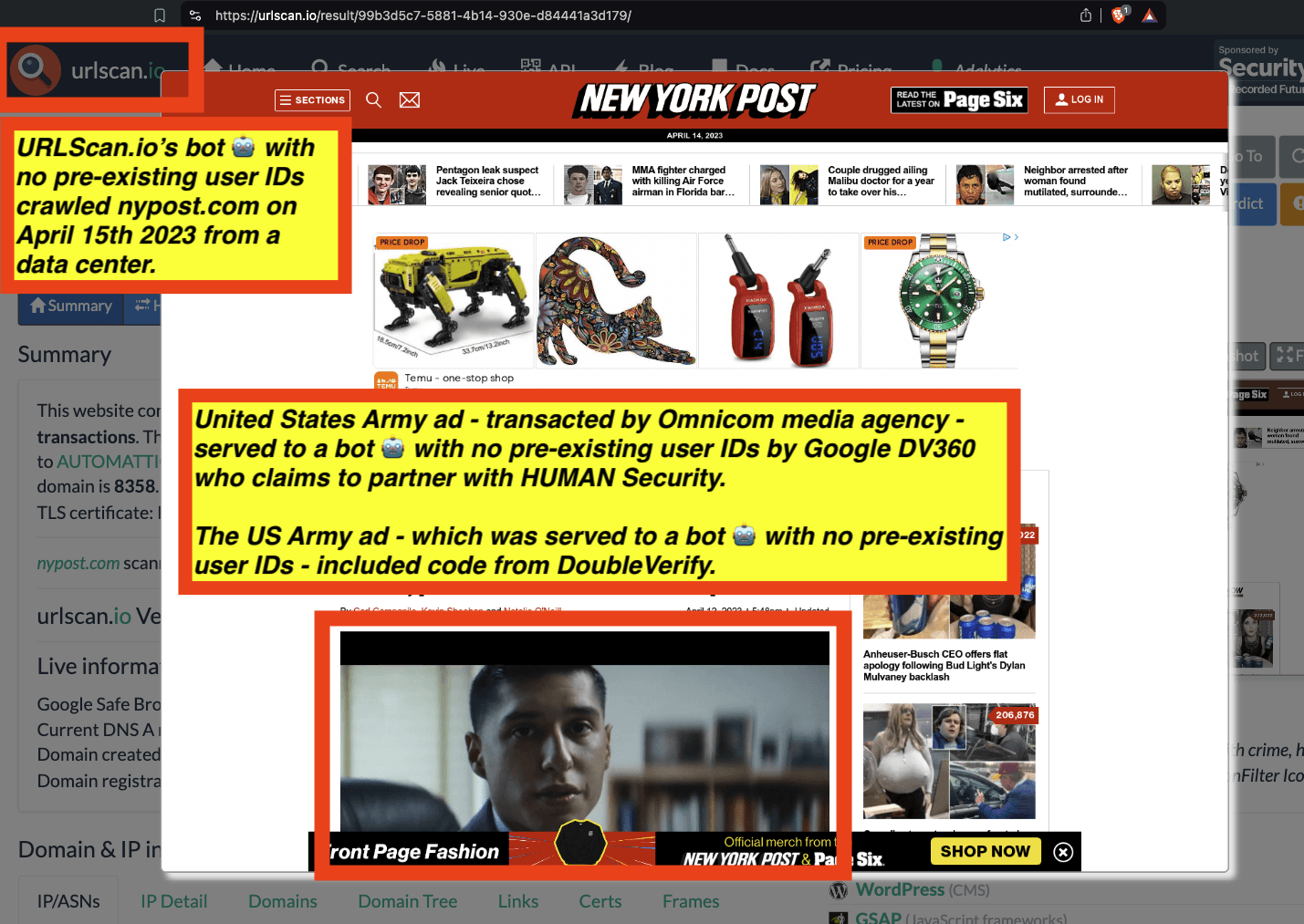

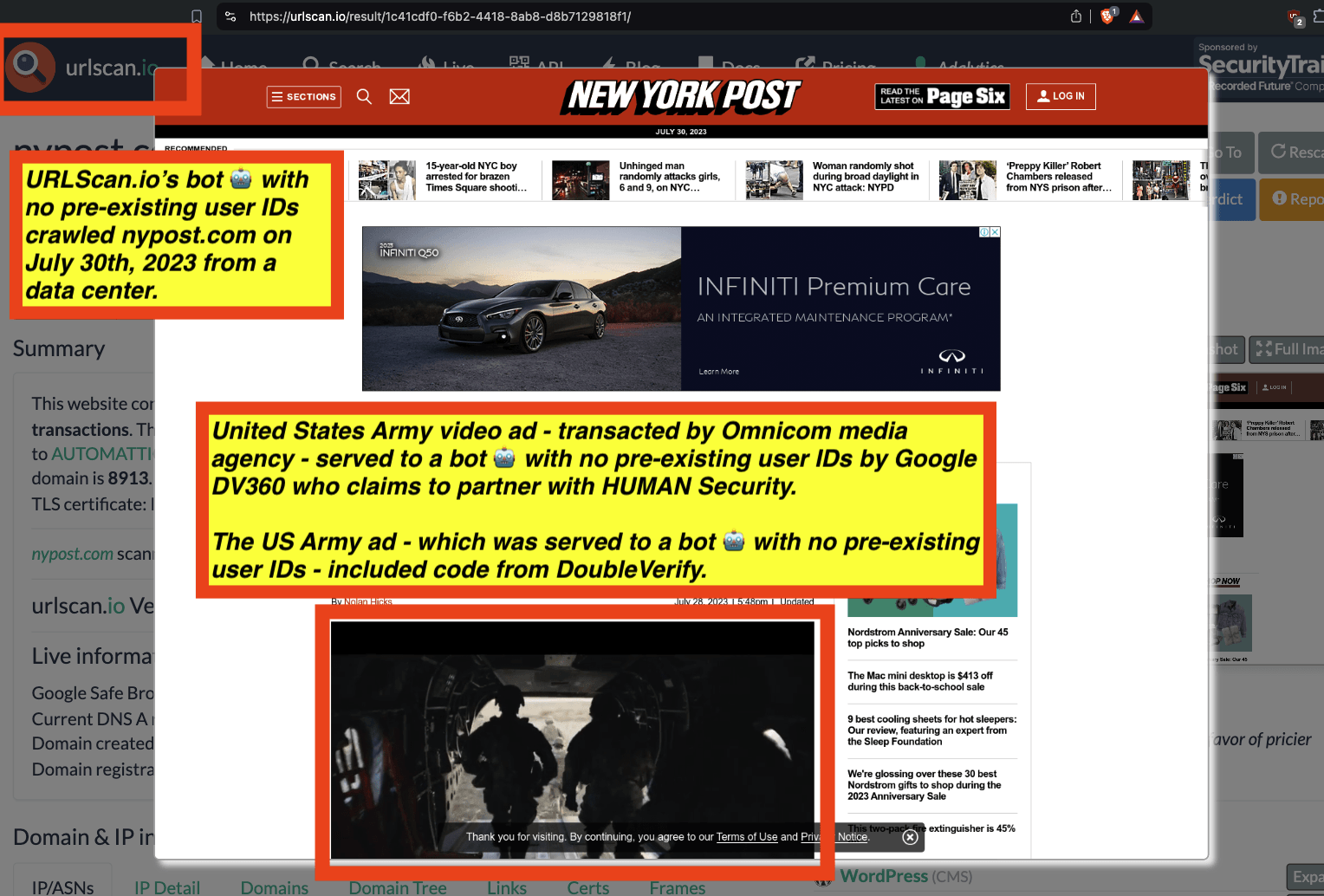

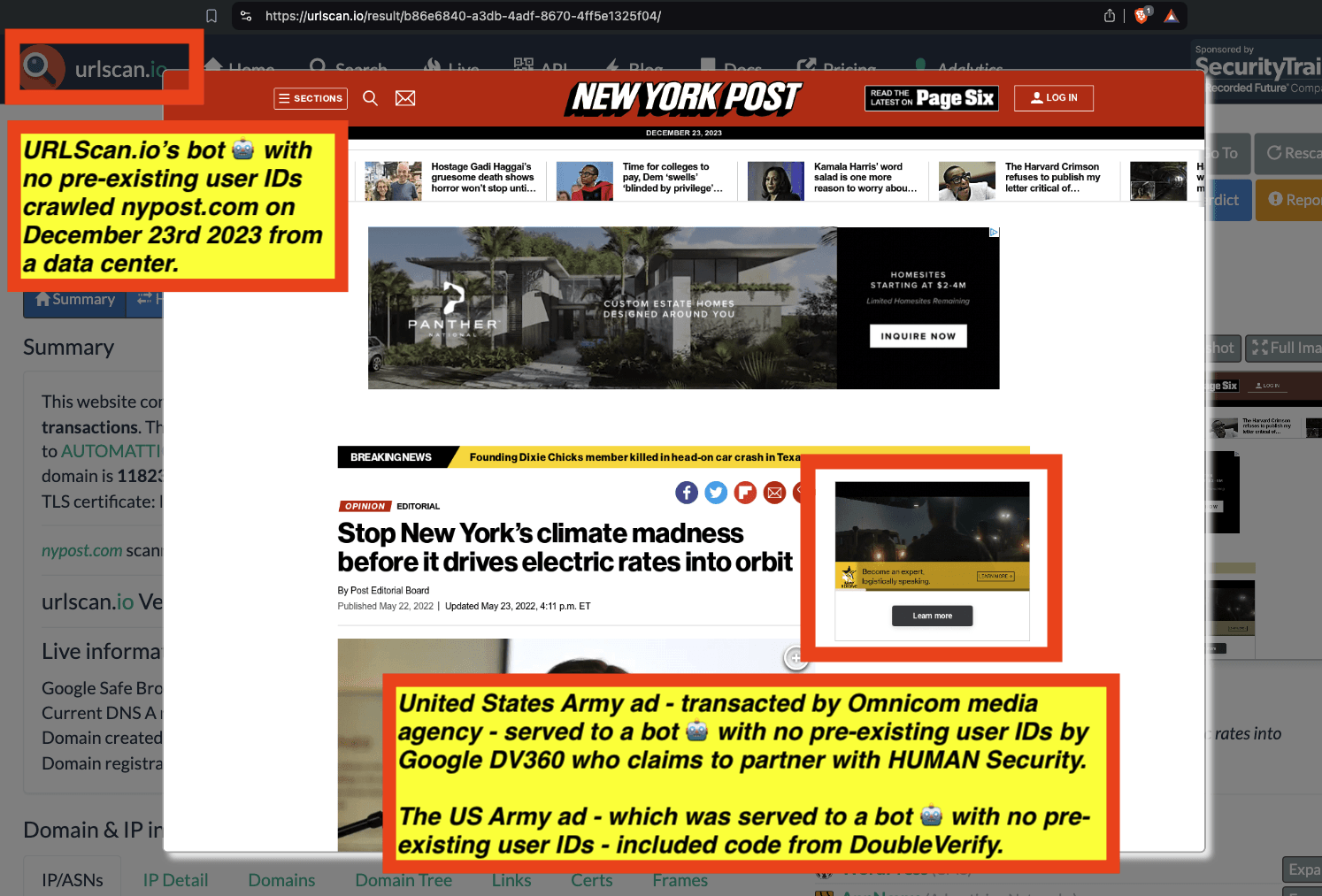

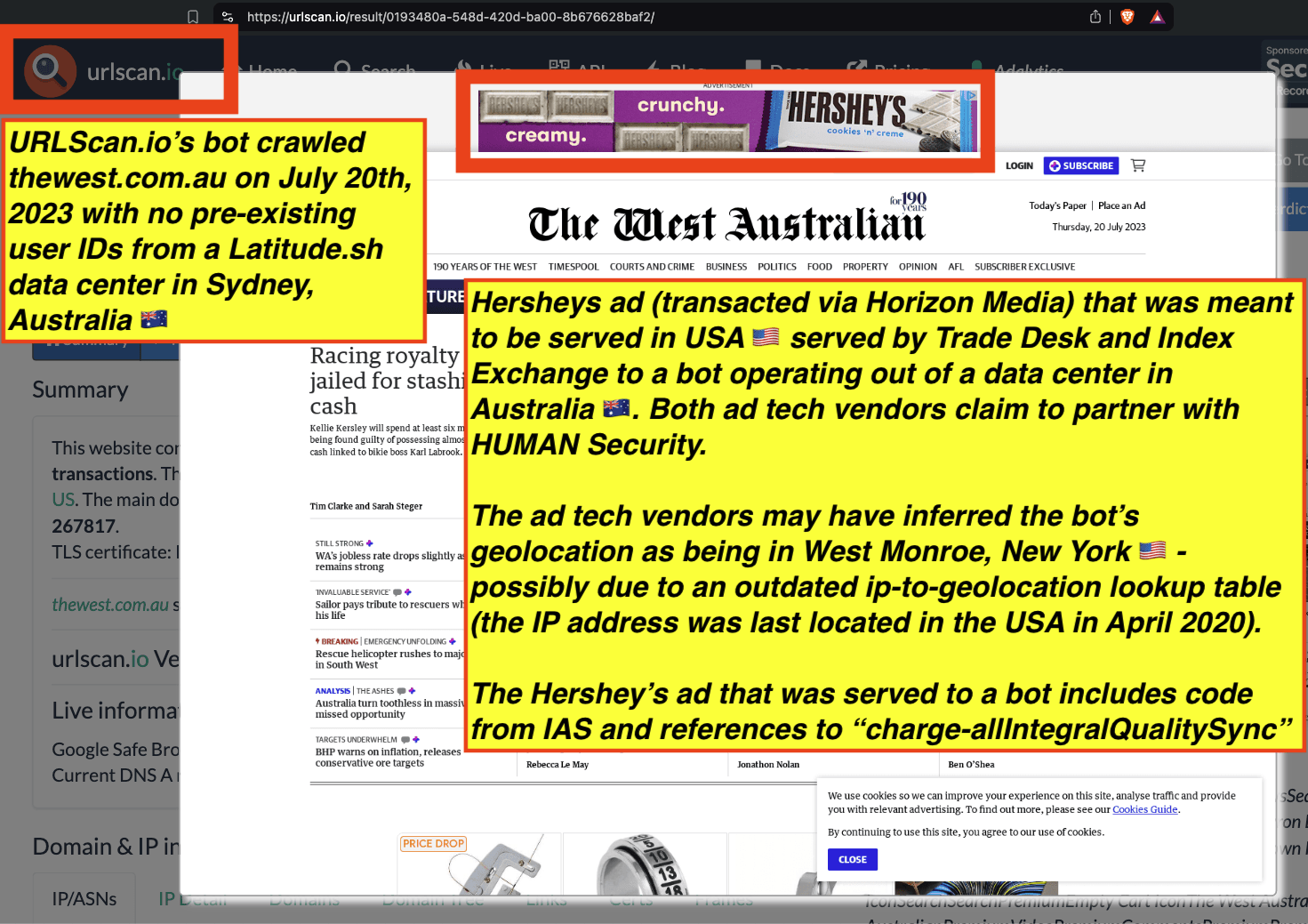

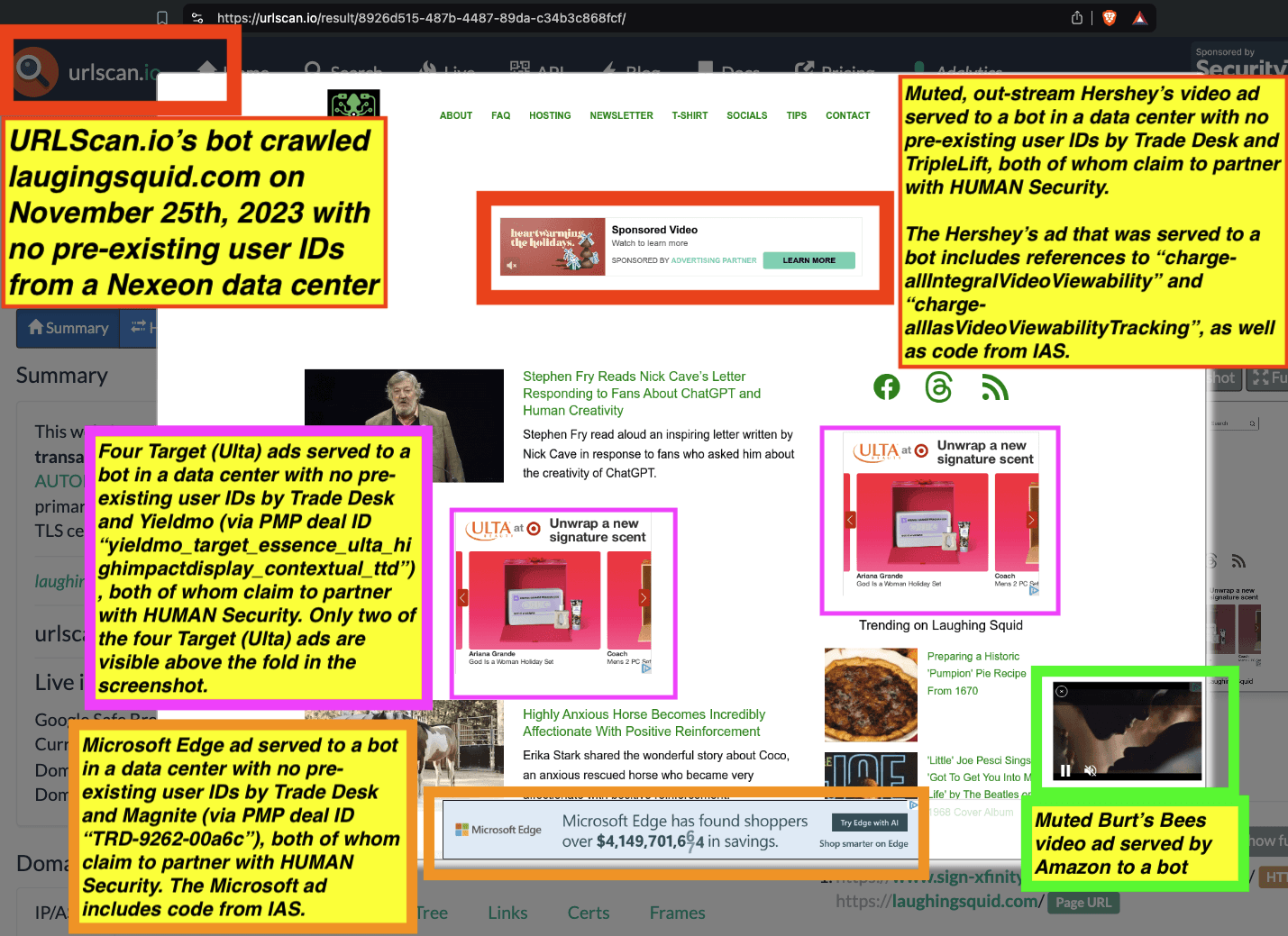

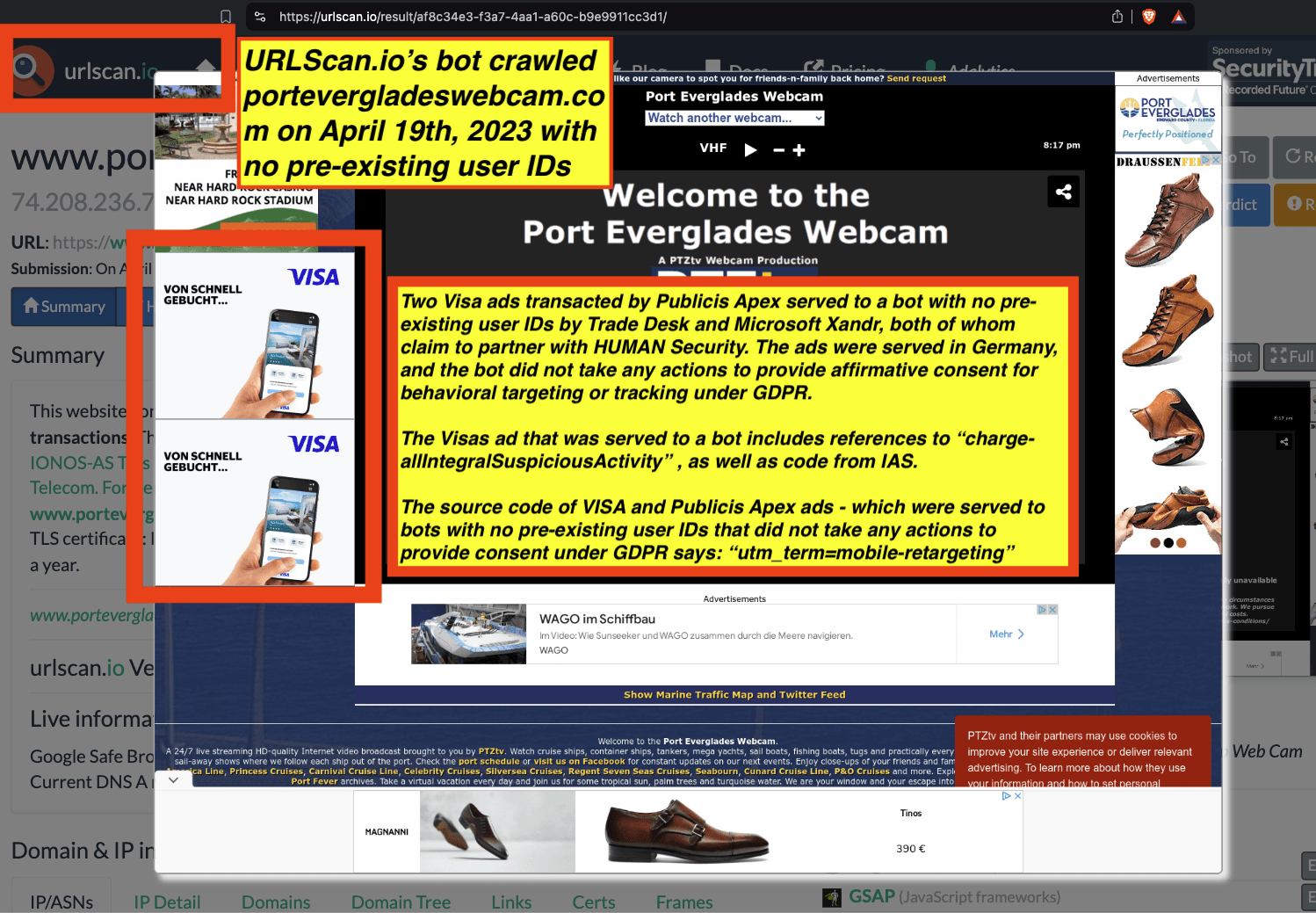

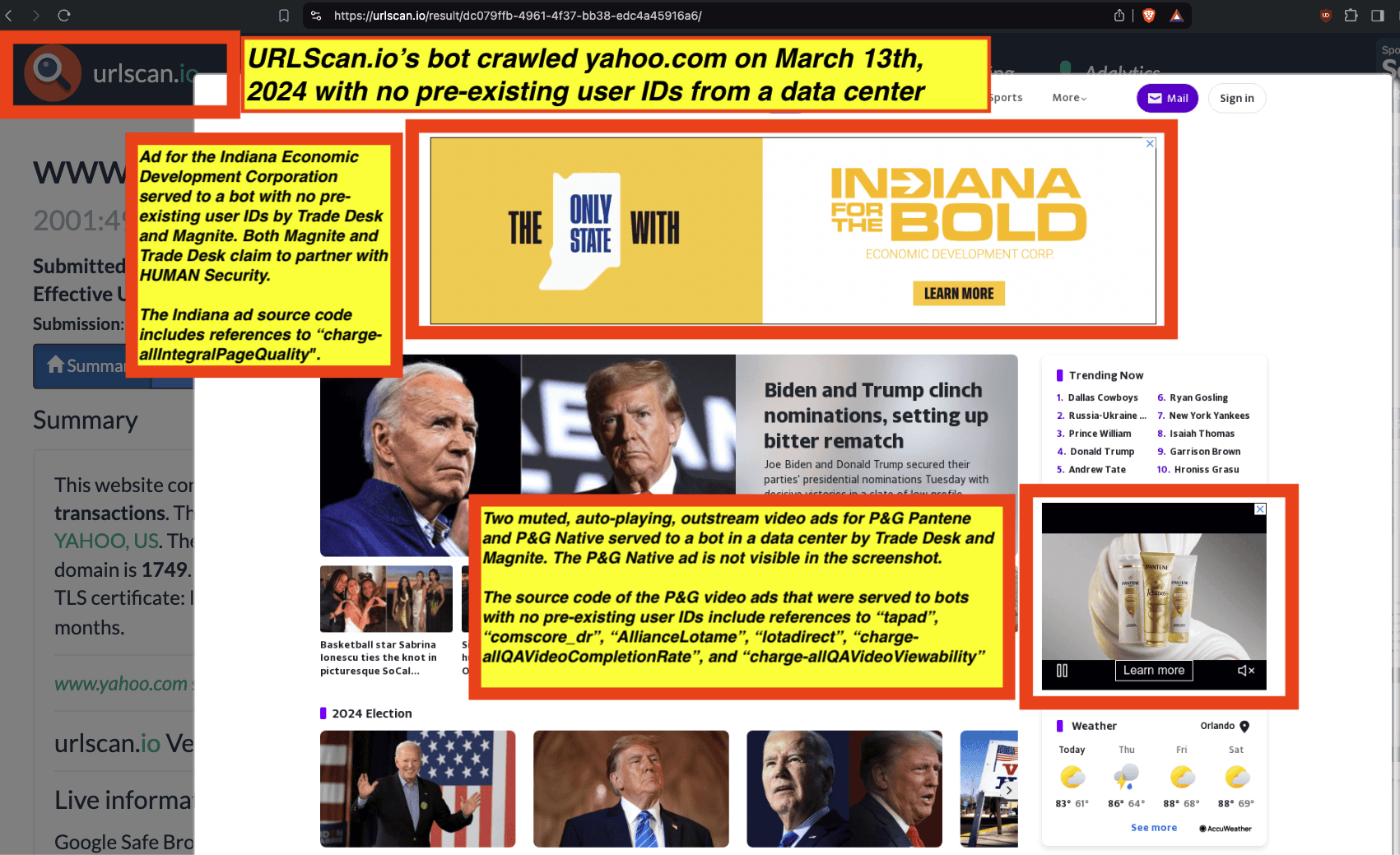

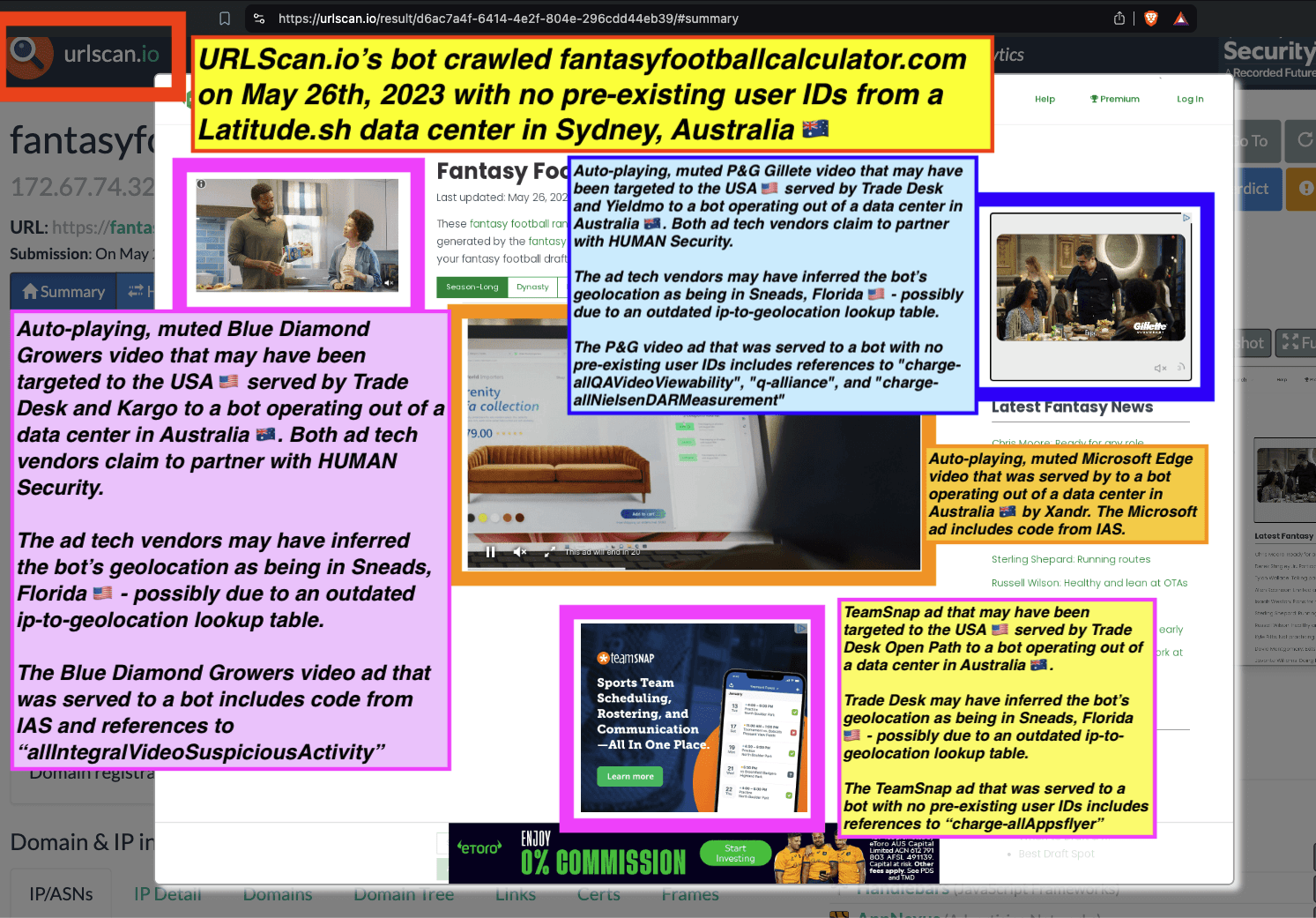

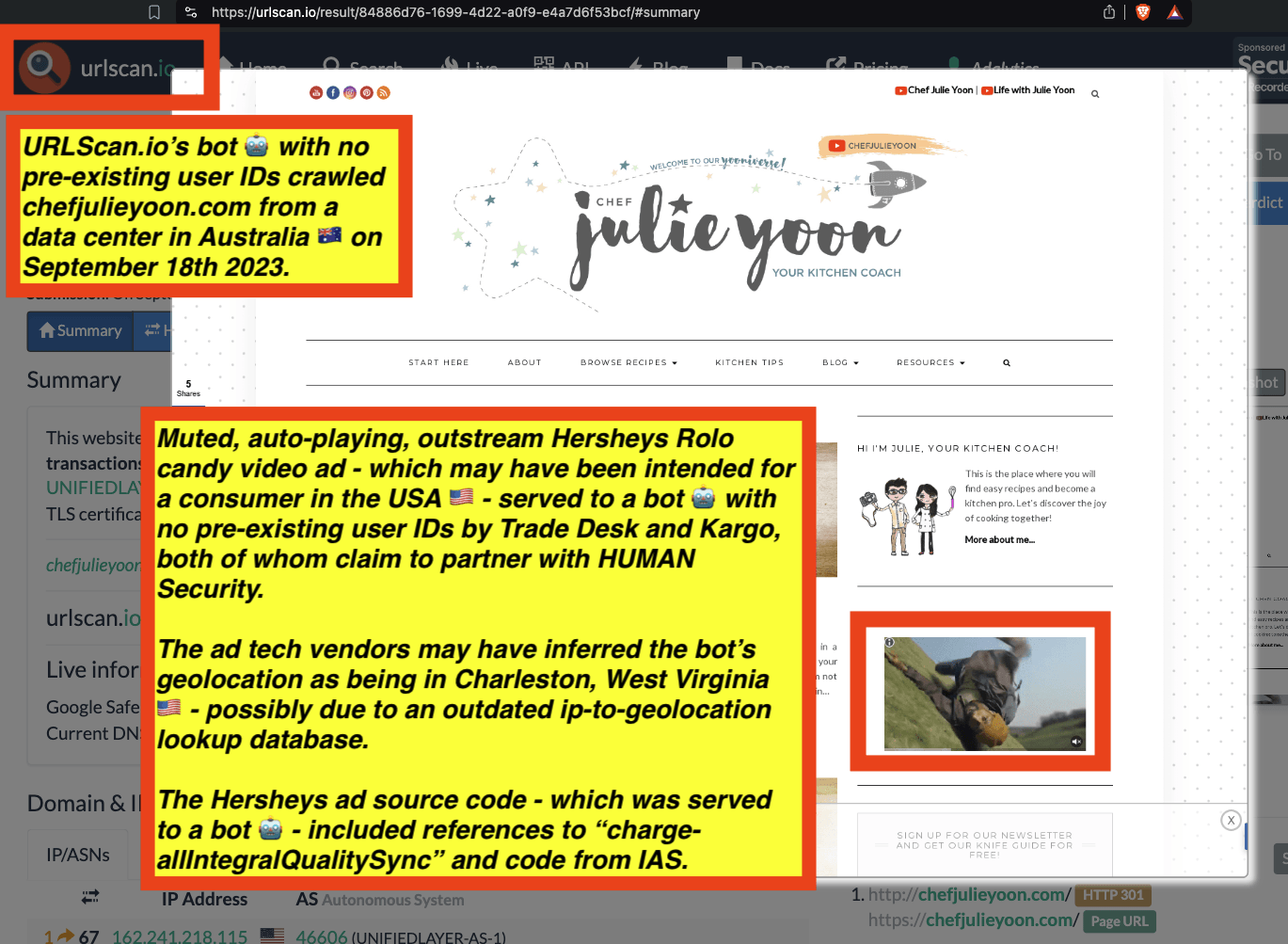

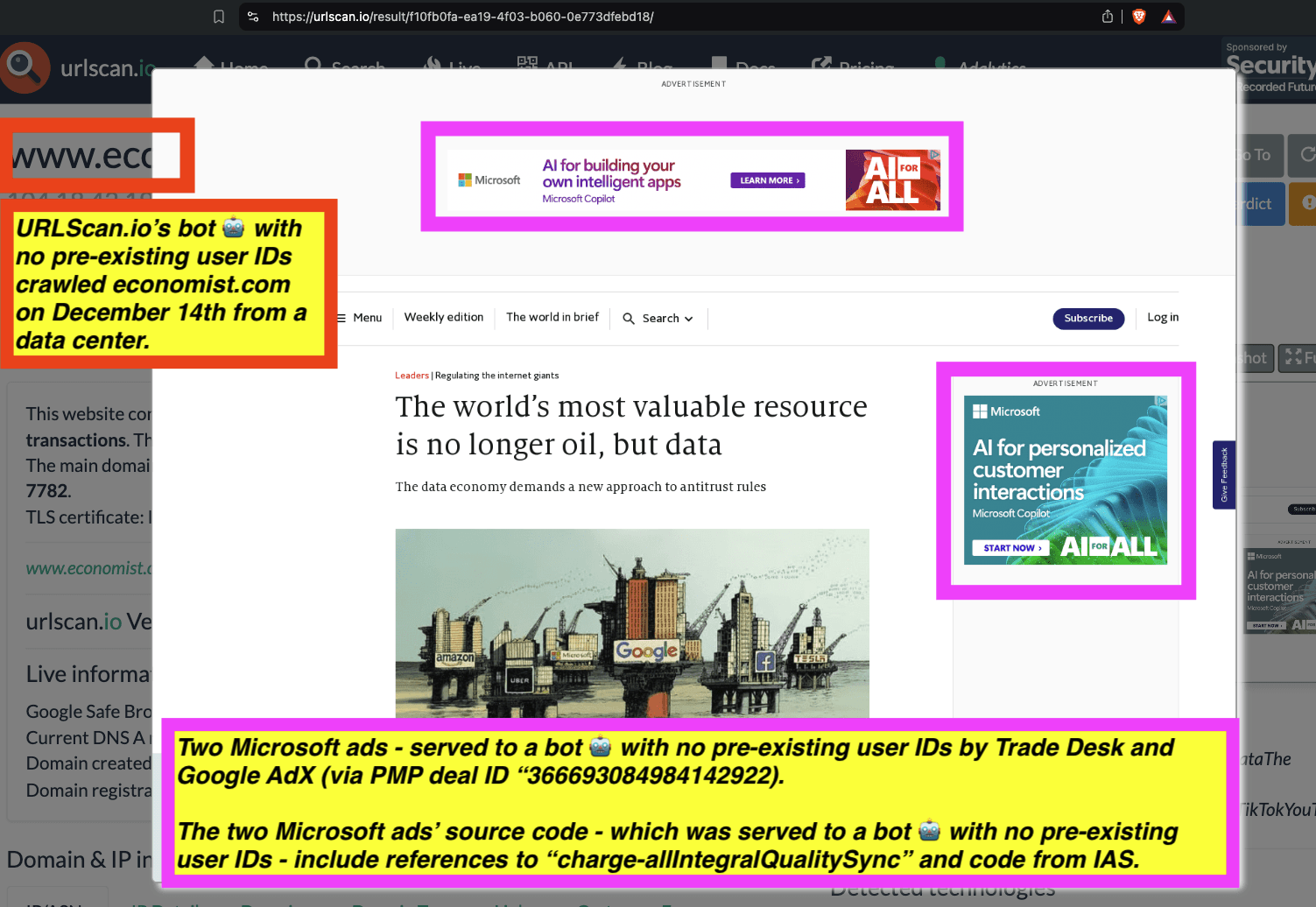

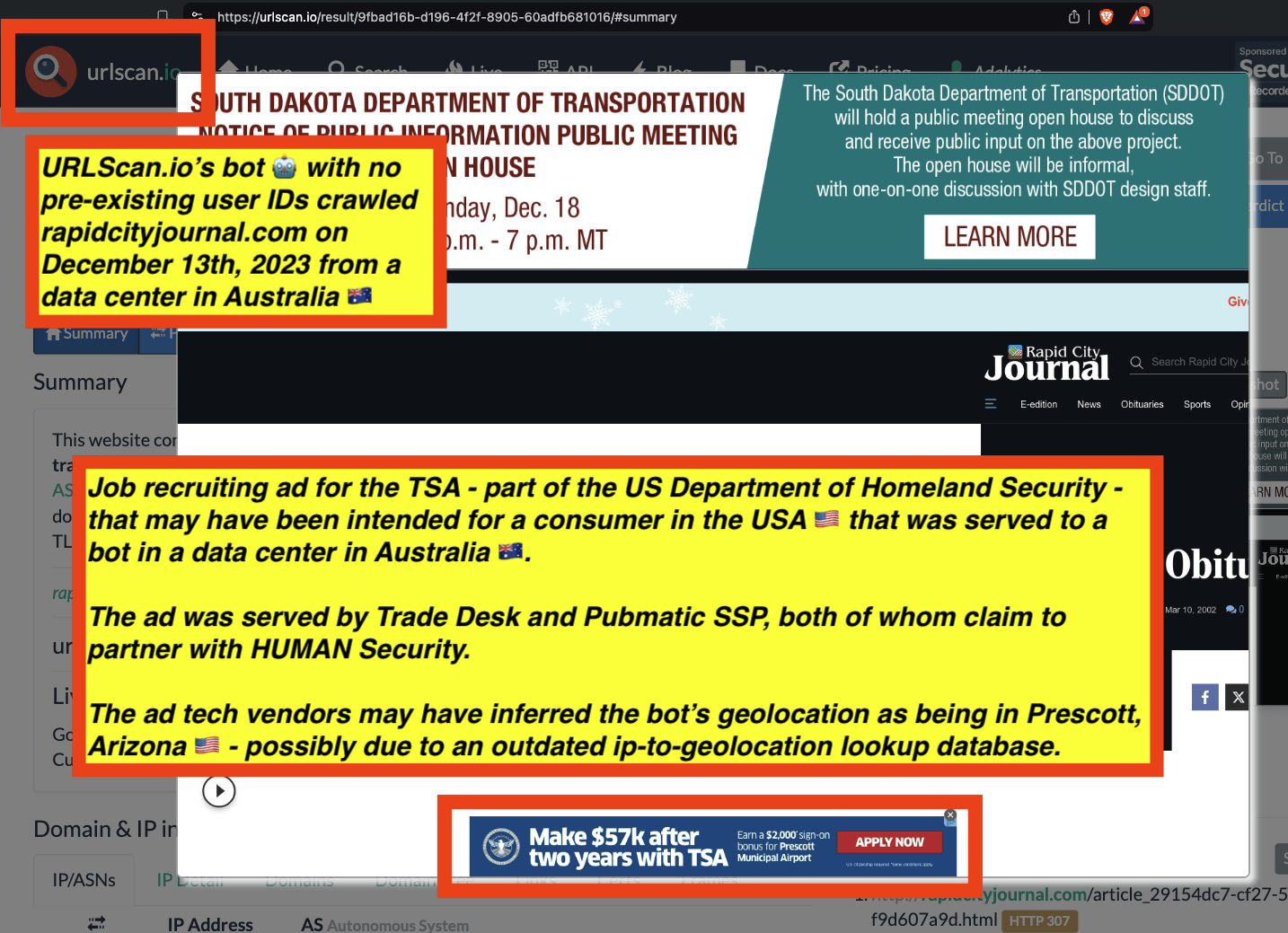

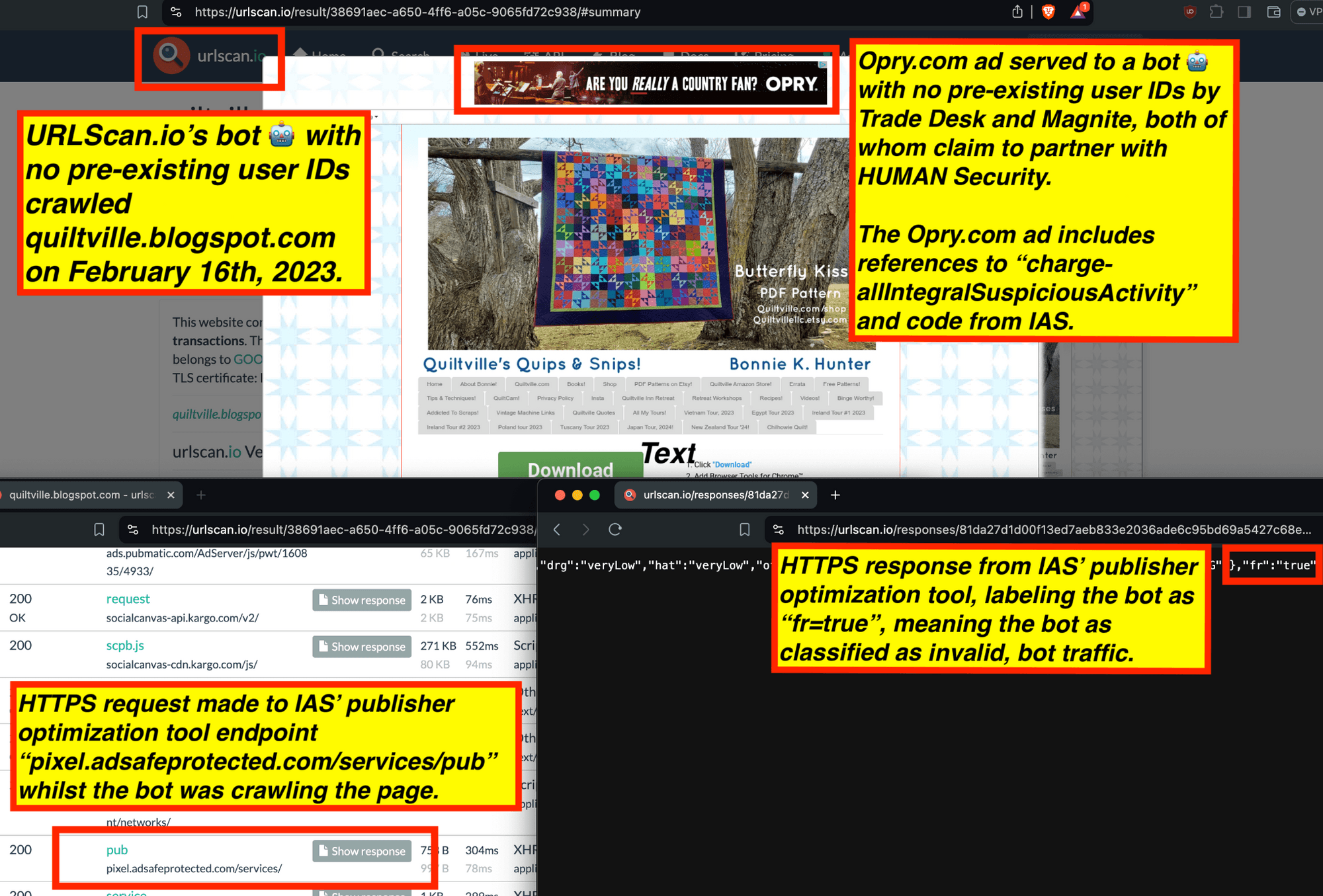

Research Methodology - Bot web traffic source #3 - URLScan.io

The third bot dataset used for this empirical research report was URLScan.io. URLScan.io is a “website scanner for suspicious and malicious URLs.” Its mission is to “allow anyone to easily and confidently analyze unknown and potentially malicious websites.”

“When a URL is submitted to urlscan.io, an automated process will browse to the URL like a regular user and record the activity that this page navigation creates. This includes the domains and IPs contacted, the resources (JavaScript, CSS, etc) requested from those domains, as well as additional information about the page itself. urlscan.io will take a screenshot of the page, record the DOM content, JavaScript global variables, cookies created by the page, and a myriad of other observations.”

URLScan.io does not always declare via its user-agent HTTP header that it is a bot; instead, the vendor’s bot sometimes appears to declare “valid, human” browser user agents when visiting various websites. For example, the URLScan.io bot will declare itself to be a “normal” Chrome browser on a Windows or Linux desktop machine, rather than identifying itself as a bot. Thus, in some cases the URLScan.io bot’s HTTP user-agent request header would likely not be found on the IAB’s list of known bots and spiders. Furthermore, the URLScan.io bot appears to utilize different IP addresses when initiating its crawl. URLScan.io appears to occasionally scan the web from data center IPs as well as from datacenter-based Virtual Private Network (VPN) or residential proxy IPs. Thus, ads served to URLScan.io’s crawler may possibly in some cases meet the MRC definition of “Sophisticated Invalid Traffic” (SIVT).

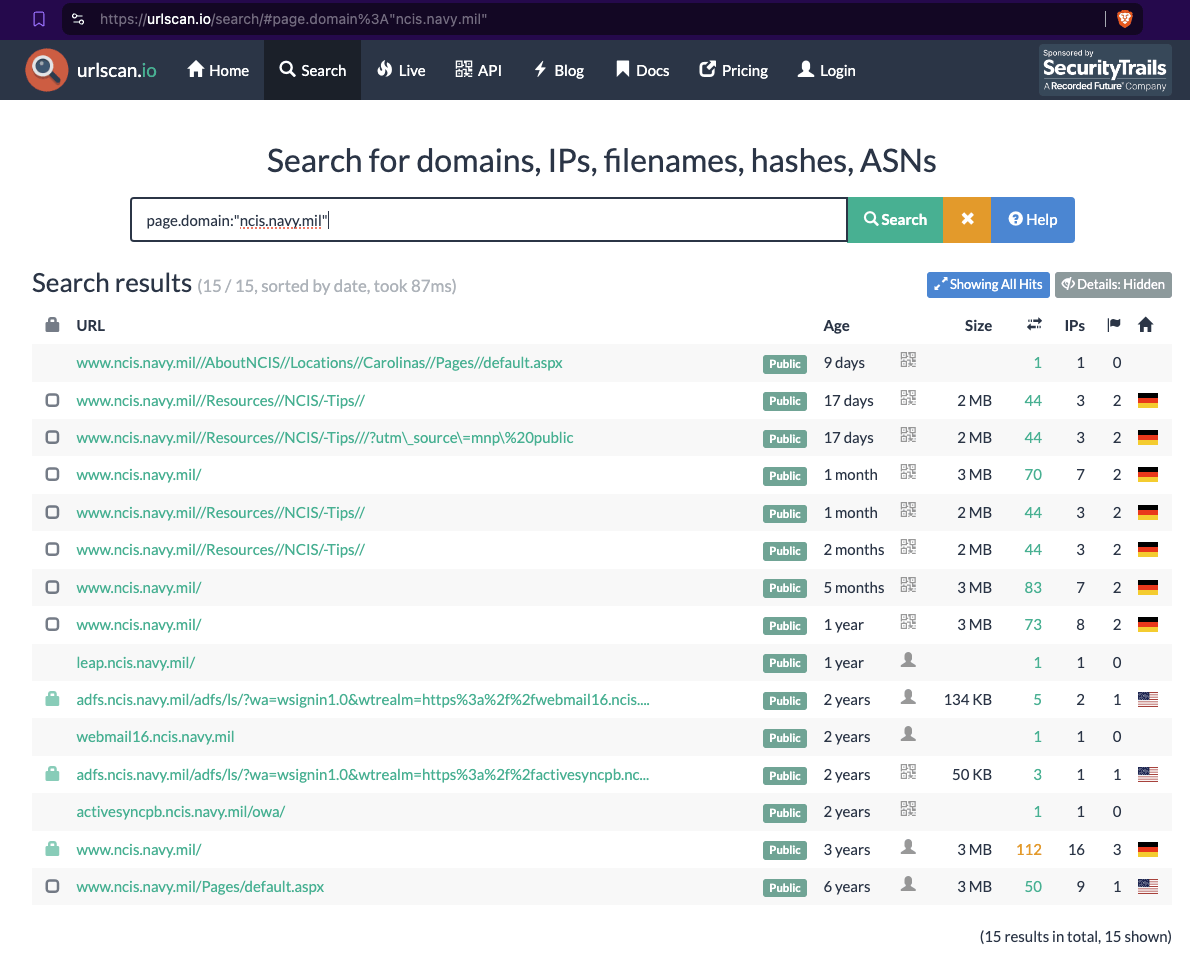

URLScan.io has a powerful search API that allows users to look for specific previous or historical URLScan.io bot crawls. For example, in the screenshot below, one can see a search to find all instances where URLScan.io scanned the website of the Naval Criminal Investigative Service (ncis.navy.mil). One can see that URLScan.io scanned the NCIS website fifteen times in the last six years.
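A search of this kind could also be scripted. The Python sketch below builds a search URL and summarizes a response; the endpoint path, the “page.domain:” query syntax, and the response field names (“results”, “task”, “time”, “url”, “result”) are assumptions based on URLScan.io’s public API documentation and should be checked against it before use. The helper names and the sample payload are hypothetical, and no network request is made.

```python
from urllib.parse import urlencode

# Assumed search endpoint; verify against URLScan.io's API documentation.
SEARCH_ENDPOINT = "https://urlscan.io/api/v1/search/"


def build_search_url(domain, size=100):
    """Build a search URL for historical scans of a given domain."""
    query = {"q": f"page.domain:{domain}", "size": size}
    return f"{SEARCH_ENDPOINT}?{urlencode(query)}"


def summarize_results(payload):
    """Extract (scan time, scanned URL, result link) tuples.

    The payload shape is an assumption, not a confirmed schema.
    """
    rows = []
    for item in payload.get("results", []):
        task = item.get("task", {})
        rows.append((task.get("time"), task.get("url"), item.get("result")))
    return rows


# Hypothetical response fragment, for illustration only.
sample_payload = {
    "results": [
        {
            "task": {"time": "2024-09-19T00:00:00.000Z", "url": "https://www.ncis.navy.mil/"},
            "result": "https://urlscan.io/api/v1/result/559c2119-a238-48e8-b7e9-5ba5d95a529b/",
        }
    ]
}

print(build_search_url("ncis.navy.mil"))
print(summarize_results(sample_payload))
```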

Screenshot of URLScan.io’s search page, showing fifteen instances where URLScan.io’s bot scanned and screenshotted the NCIS website.

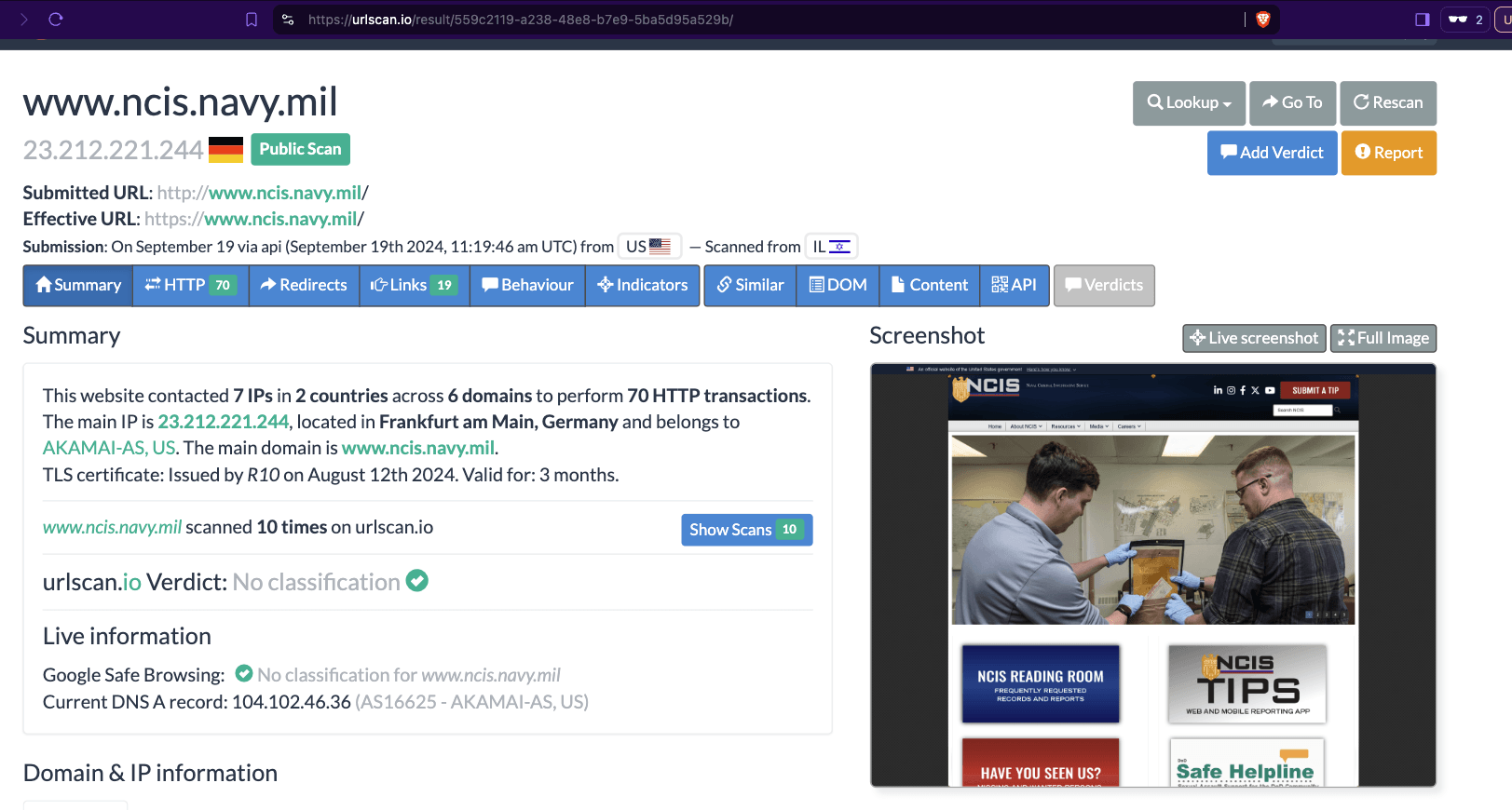

One can click on each individual URLScan.io scan to visualize the results of that scan. For example, one can see that URLScan.io’s bot crawled ncis.navy.mil on September 19th, 2024, and created a screenshot of the page.

Screenshot of a URLScan.io bot crawling and screenshotting the ncis.navy.mil website on September 19th, 2024. Source: https://urlscan.io/result/559c2119-a238-48e8-b7e9-5ba5d95a529b/

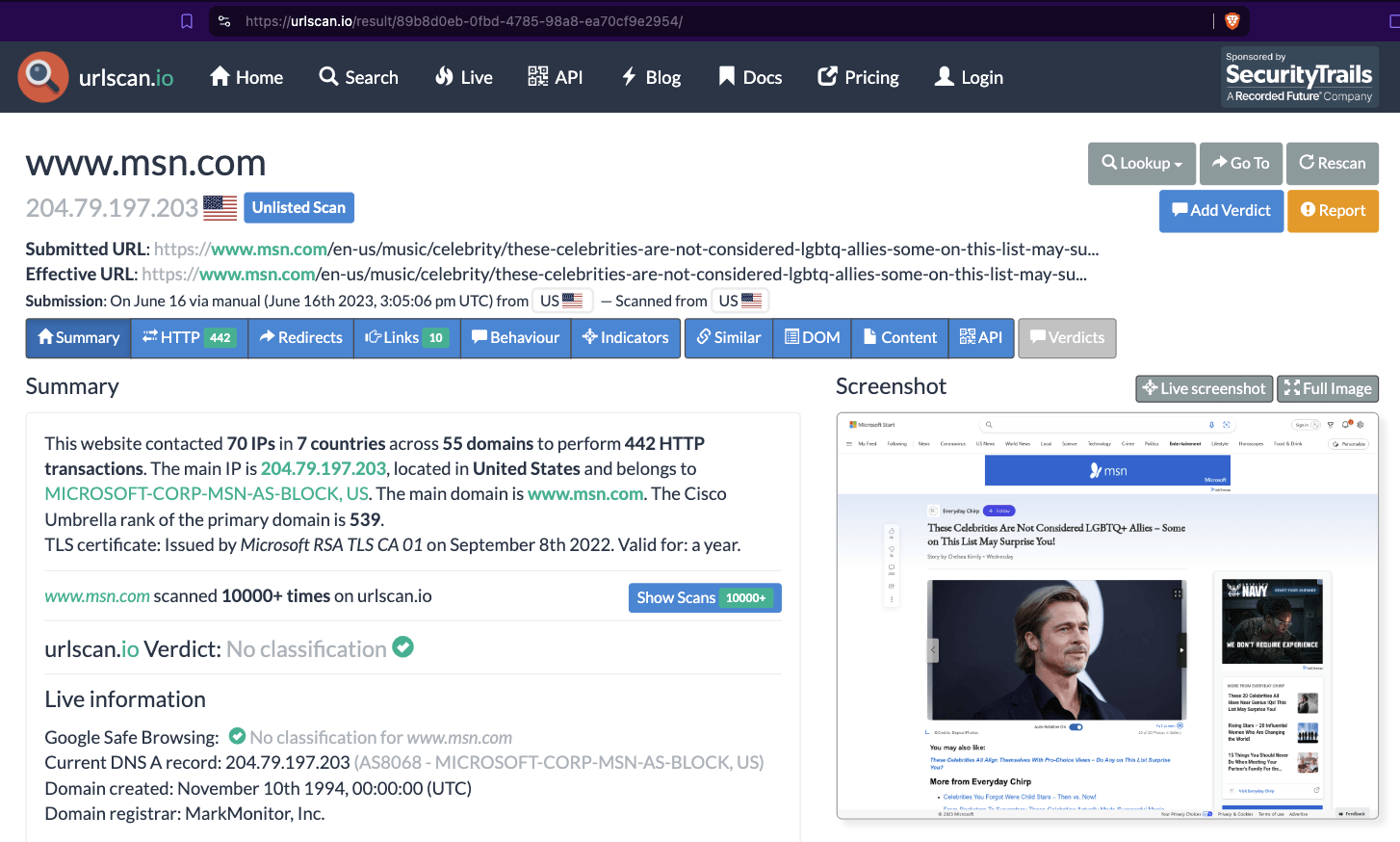

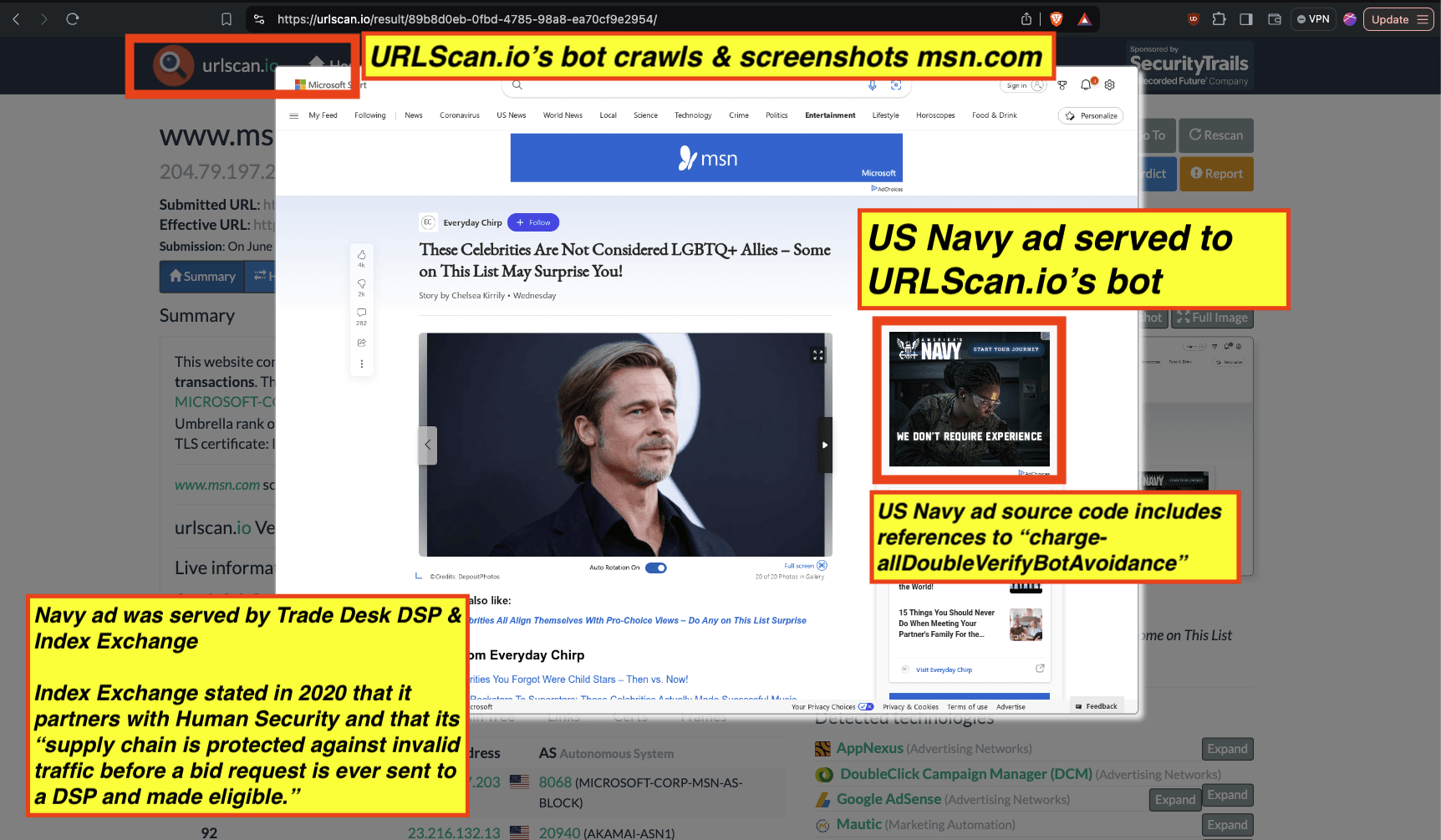

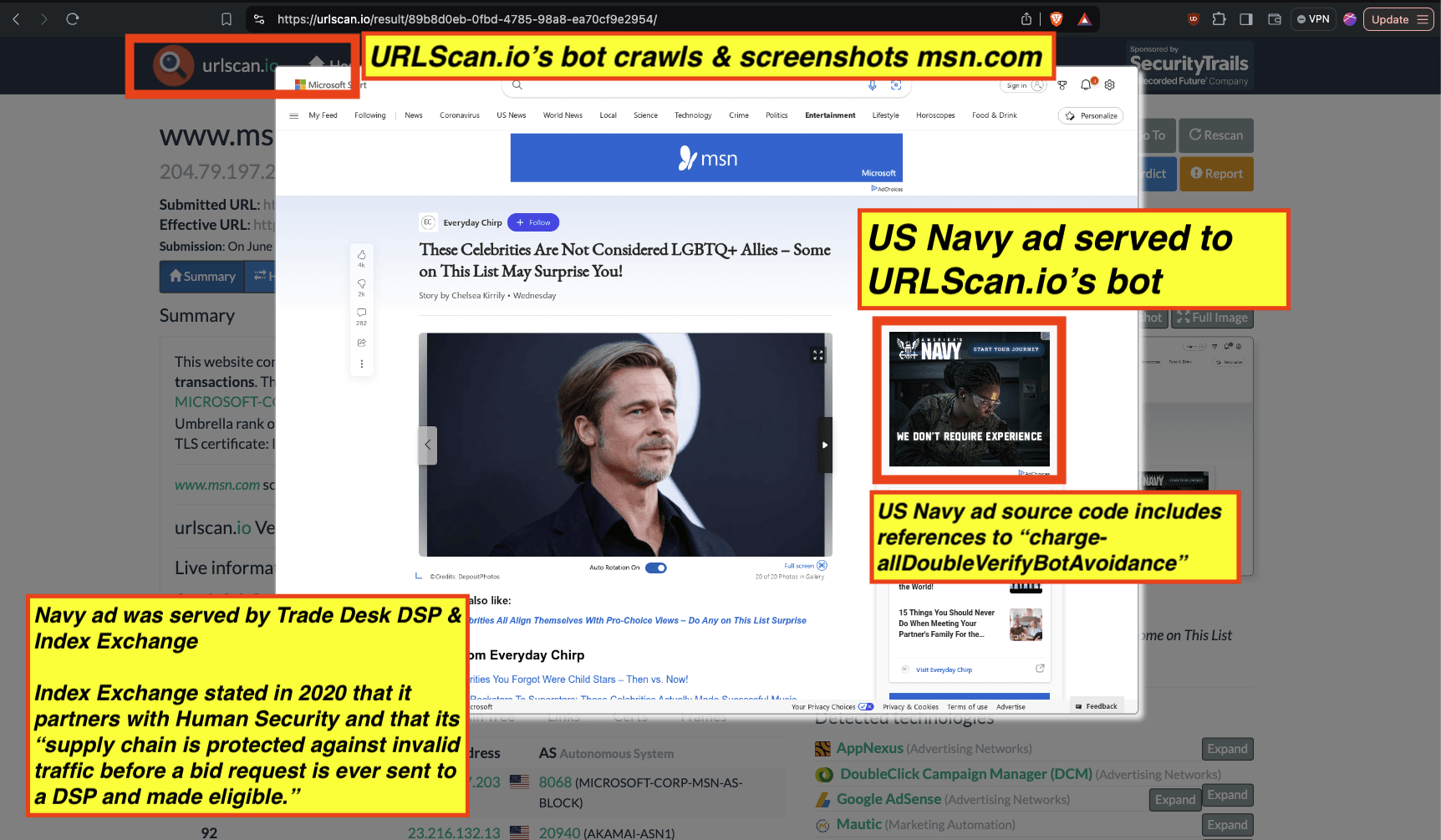

One can use URLScan.io to identify instances where various ad tech vendors served ads to bots. For example, in the screenshot below, one can see a US Navy recruiting ad that was served to URLScan.io’s bot on June 16th, 2023, when the bot was scanning msn.com.

Screenshot of a URLScan.io bot crawl of msn.com on June 16th, 2023, with a US Navy recruiting ad served to the bot. Source: https://urlscan.io/result/89b8d0eb-0fbd-4785-98a8-ea70cf9e2954/

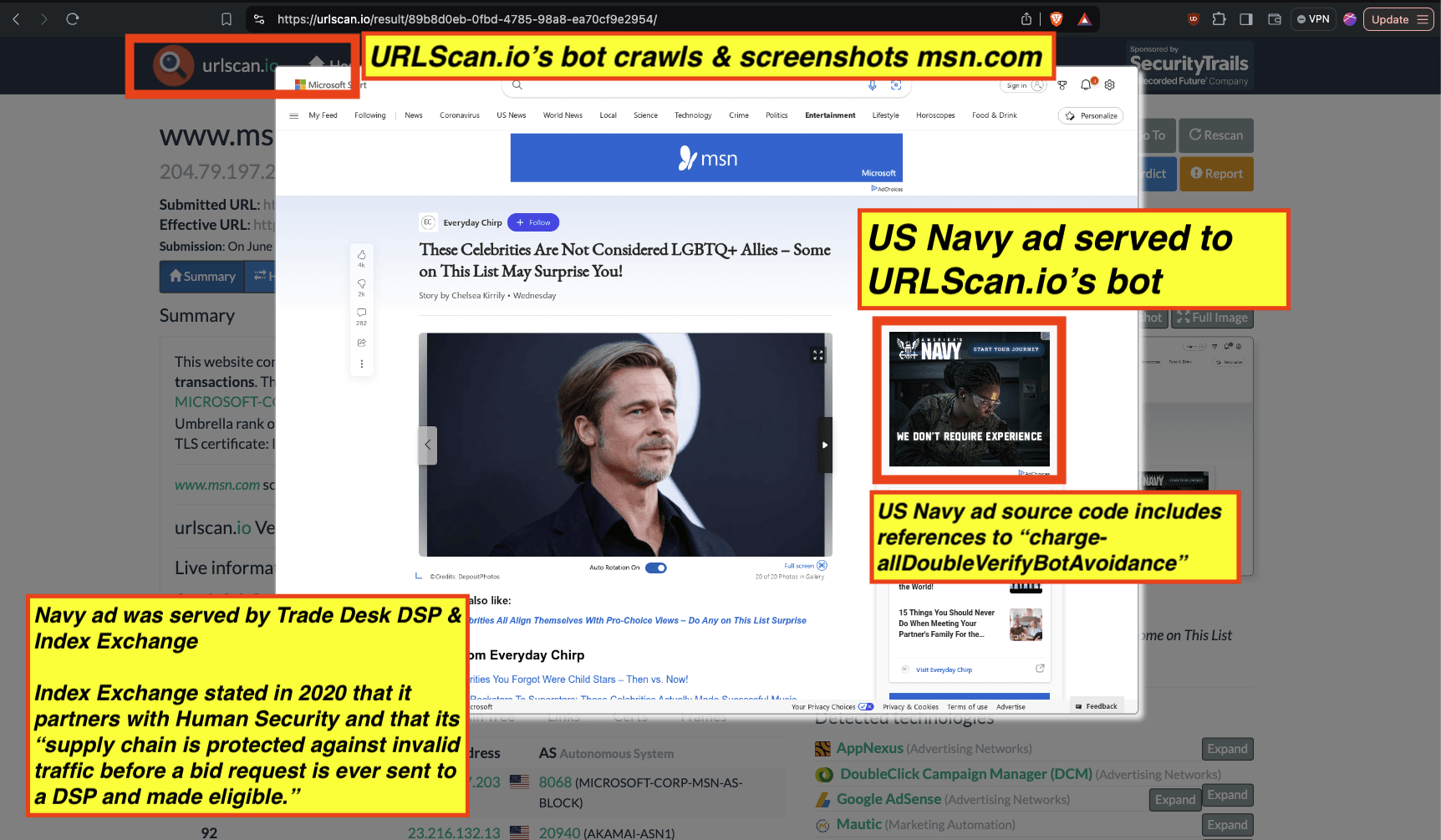

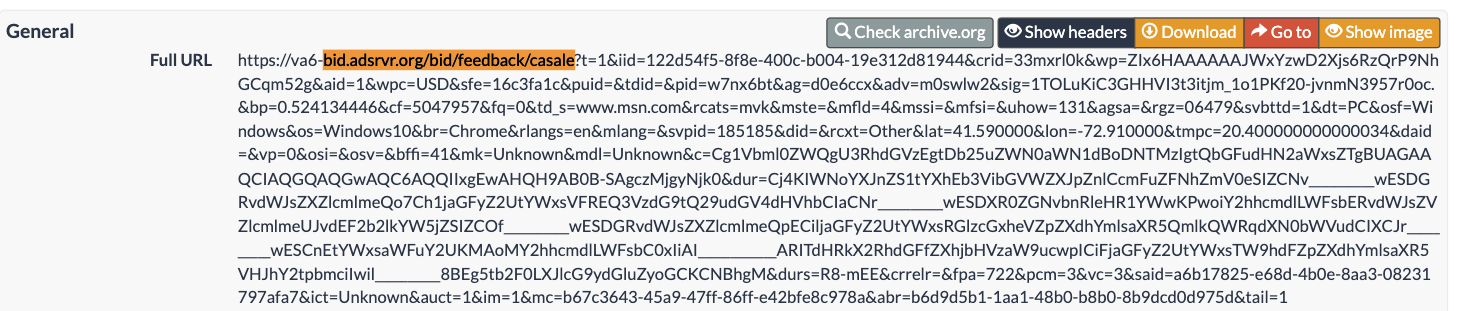

Analyzing the source code of the US Navy ad shows that the ad was transacted by the Trade Desk DSP and Index Exchange SSP (seller ID 185185). The source code of the US Navy ad contains references to “charge-allDoubleVerifyBotAvoidance”. Index Exchange and HUMAN Security (formerly known as White Ops) have also issued public statements that they have an “expanded partnership that enhances Index Exchange’s global inventory across all channels and regions. Through White Ops’ comprehensive protection, the partnership protects the entirety of Index Exchange’s global inventory. It allows buyers to purchase from IX’s emerging channels, such as mobile app and Connected TV (CTV), with confidence that its supply chain is protected against invalid traffic before a bid request is ever sent to a DSP and made eligible” (emphasis added).

Screenshot of URLScan.io’s website, showing a URLScan.io bot crawl from June 16th, 2023. One can see a US Navy ad that was mediated by Trade Desk, Index Exchange, DoubleVerify, and/or HUMAN Security on msn.com.

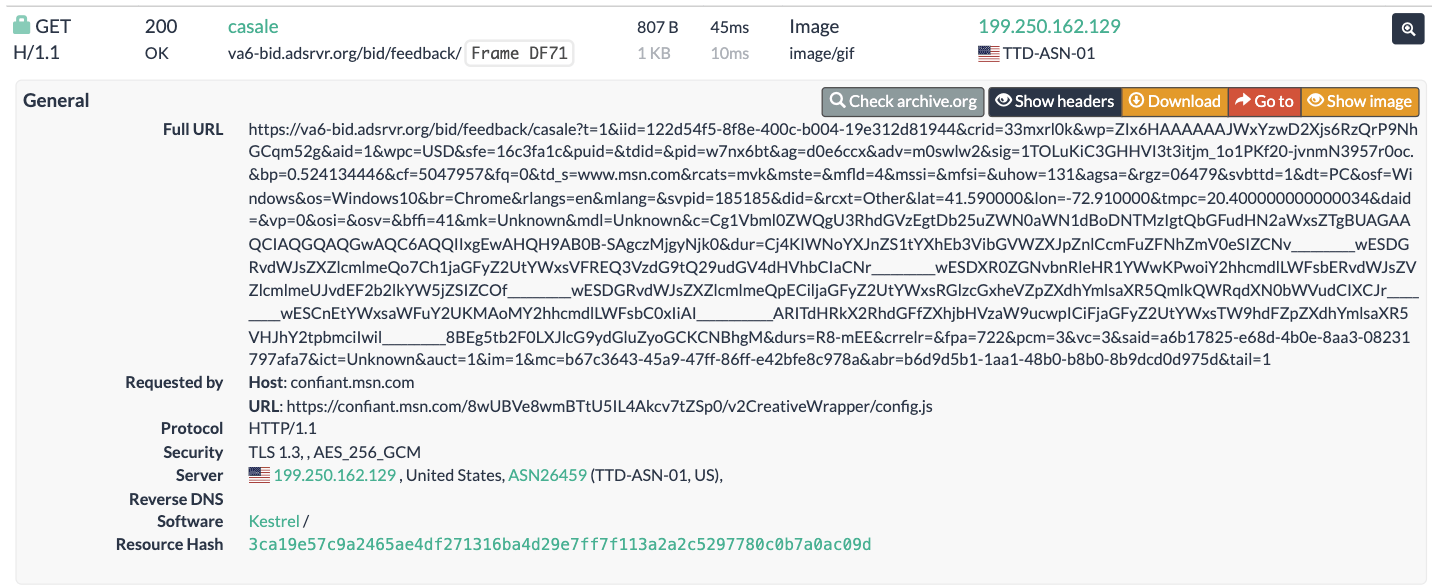

Screenshot of URLScan.io’s HTTP requests tab for a URLScan.io bot crawl on June 16th, 2023. The screenshot shows an HTTP request to “bid.adsrvr.org/bid/feedback/casale” - a “win notification” pixel that fires when a Trade Desk ad transacts via Index Exchange SSP on msn.com

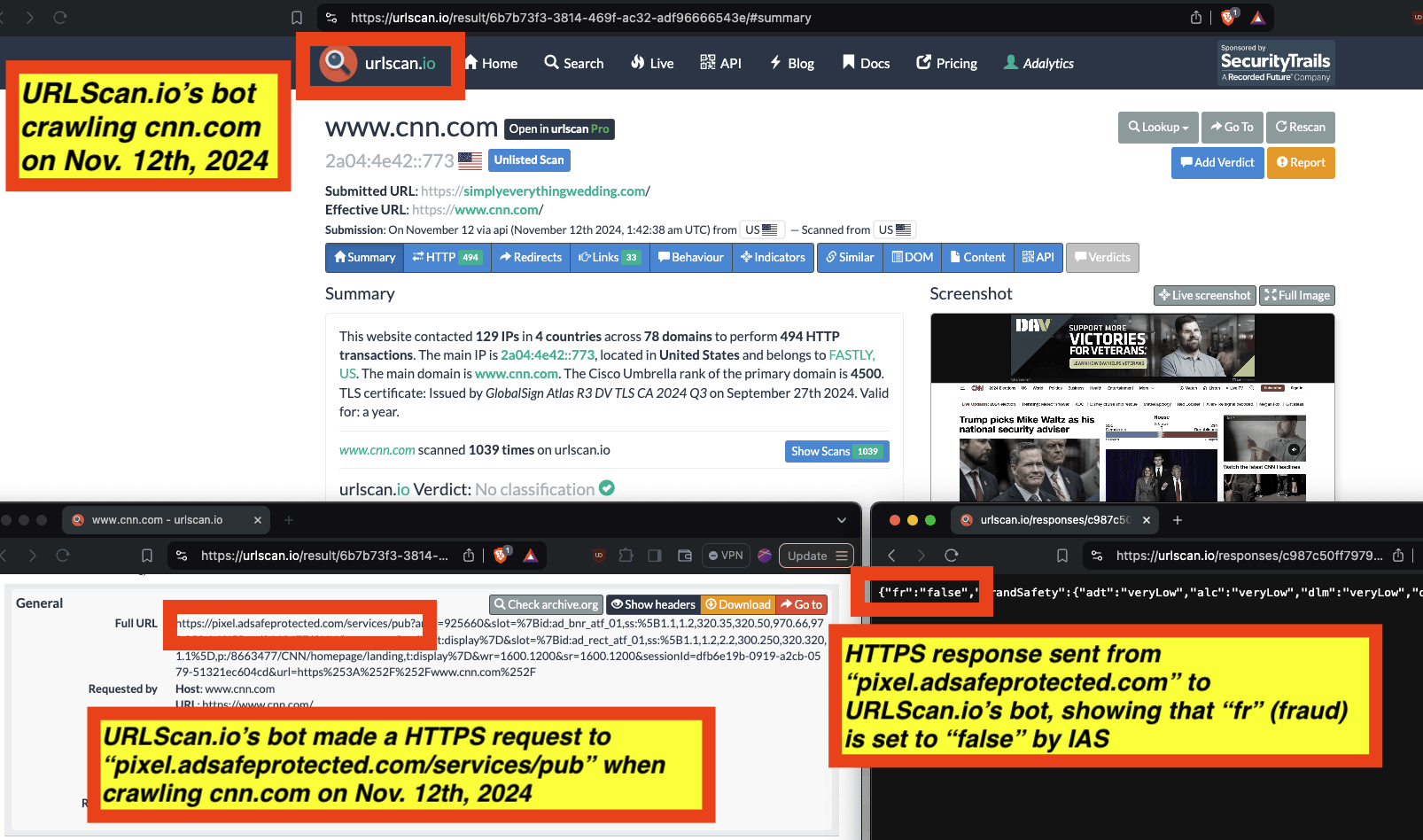

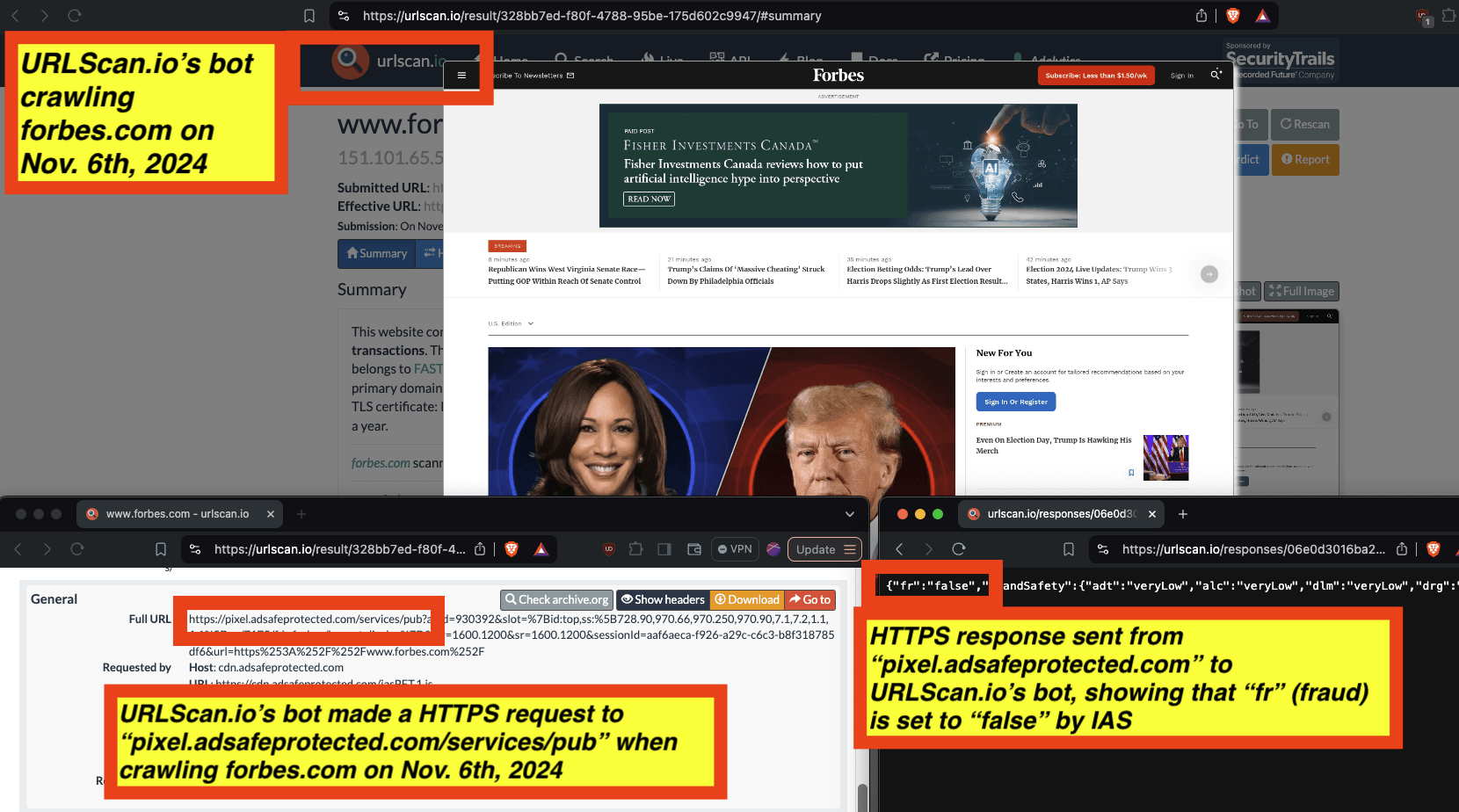

Research Methodology - Examination of putative bot traffic classifications from IAS on various sites

In addition to providing services to advertisers, Integral Ad Science (IAS) also accepts financial payments from and provides services to media publishers.

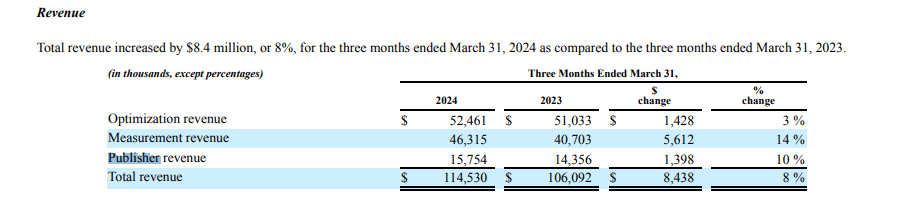

For example, one can see that IAS made $15.7 million in revenue from publishers in Q1 2024, according to IAS’s 10-Q form.

Screenshot of IAS’s Q1 2024 10-Q form, showing that IAS made $15.7 million in revenue providing services to publishers

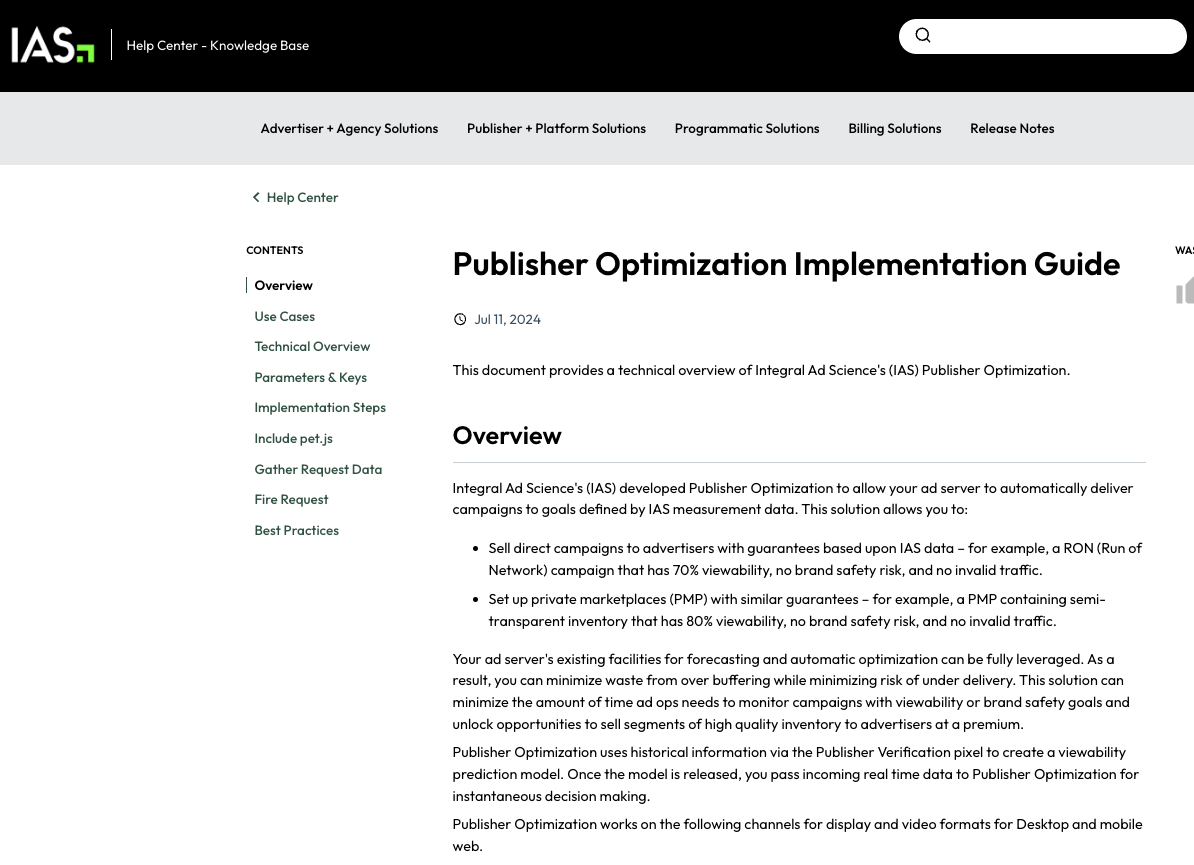

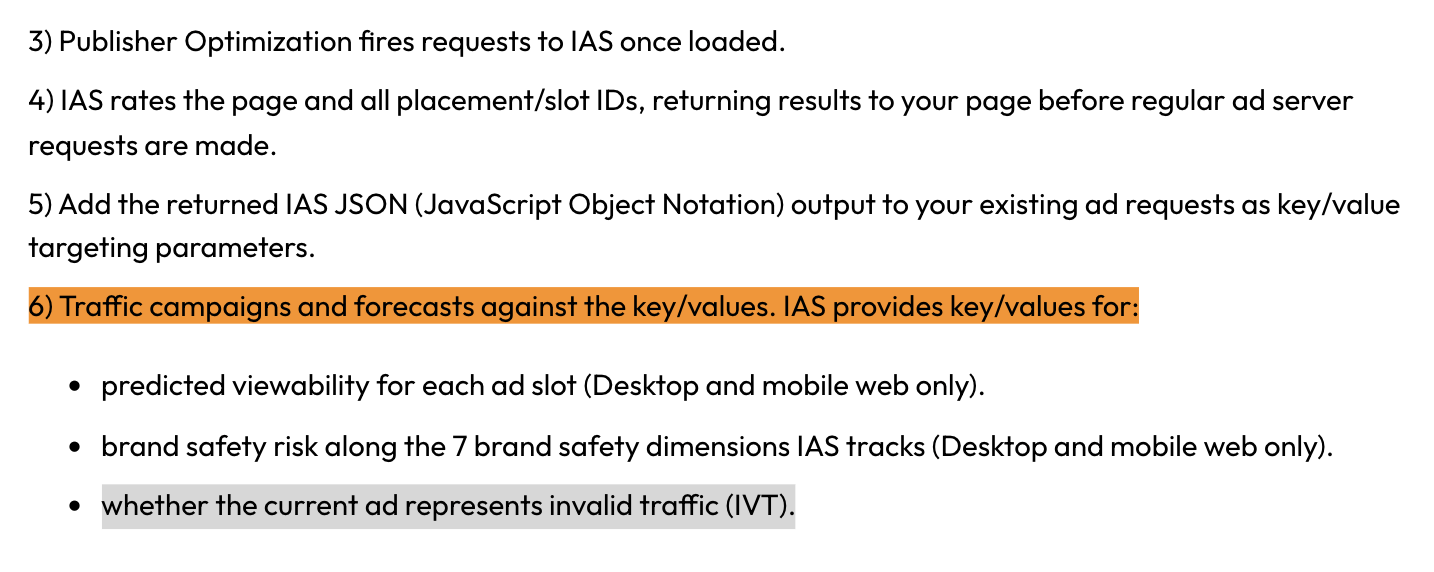

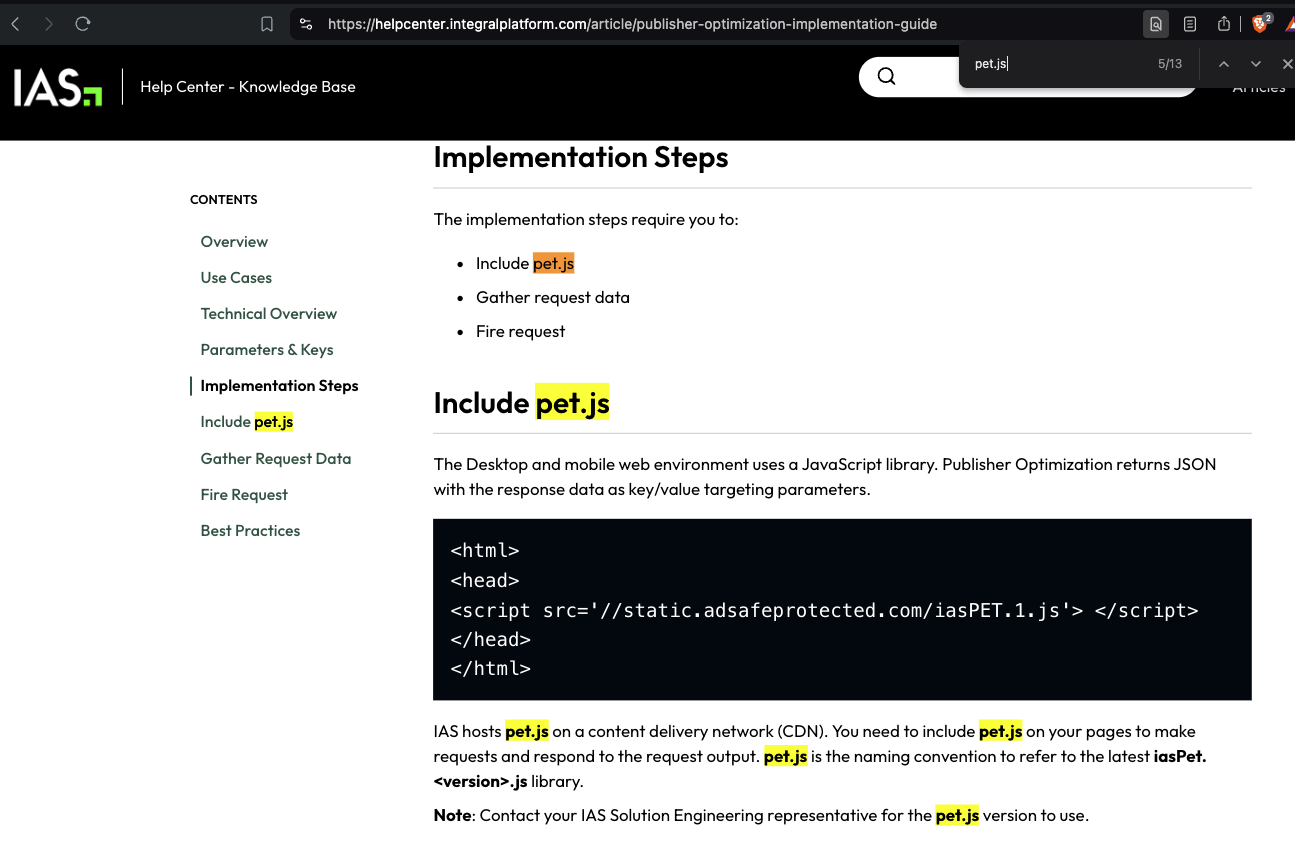

IAS releases publisher specific tools, some of which appear to be invoked before any ads are served or ad auctions run on a given page. Specifically, IAS’s public “publisher optimization implementation guide” provides a technical overview of its publisher tools.

Screenshot of Integral Ad Science (IAS)’s public “Publisher Optimization Implementation Guide” - Original Link: https://helpcenter.integralplatform.com/article/publisher-optimization-implementation-guide; Archived: https://perma.cc/G6EZ-76VR;

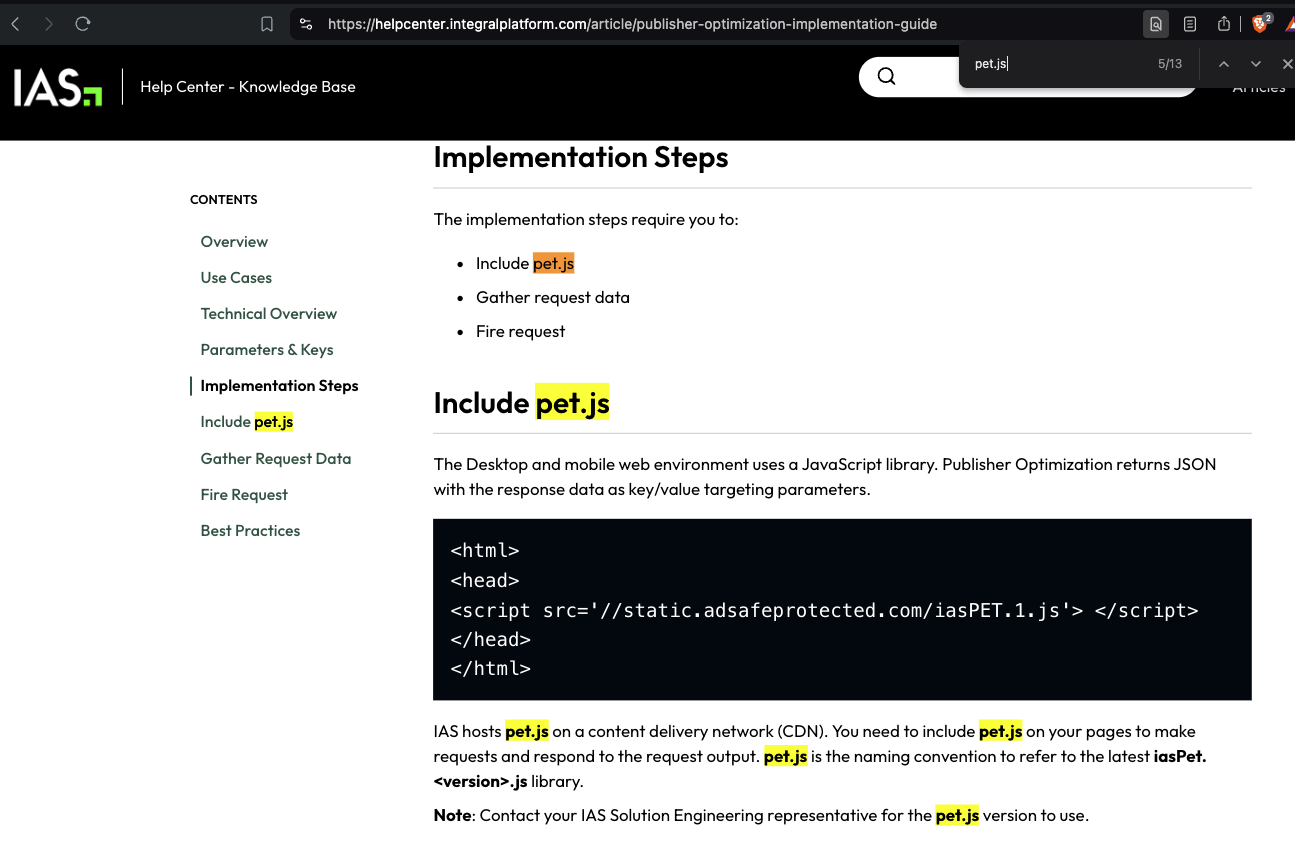

IAS’s public “Publisher Optimization Implementation Guide” explains that “The desktop and mobile web environment uses a JavaScript library. Publisher Optimization returns JSON with the response data as key/value targeting parameters.” This library is loaded via a specific JavaScript file called “static.adsafeprotected.com/iasPET.1.js”.

Screenshot of Integral Ad Science (IAS)’s public “Publisher Optimization Implementation Guide”

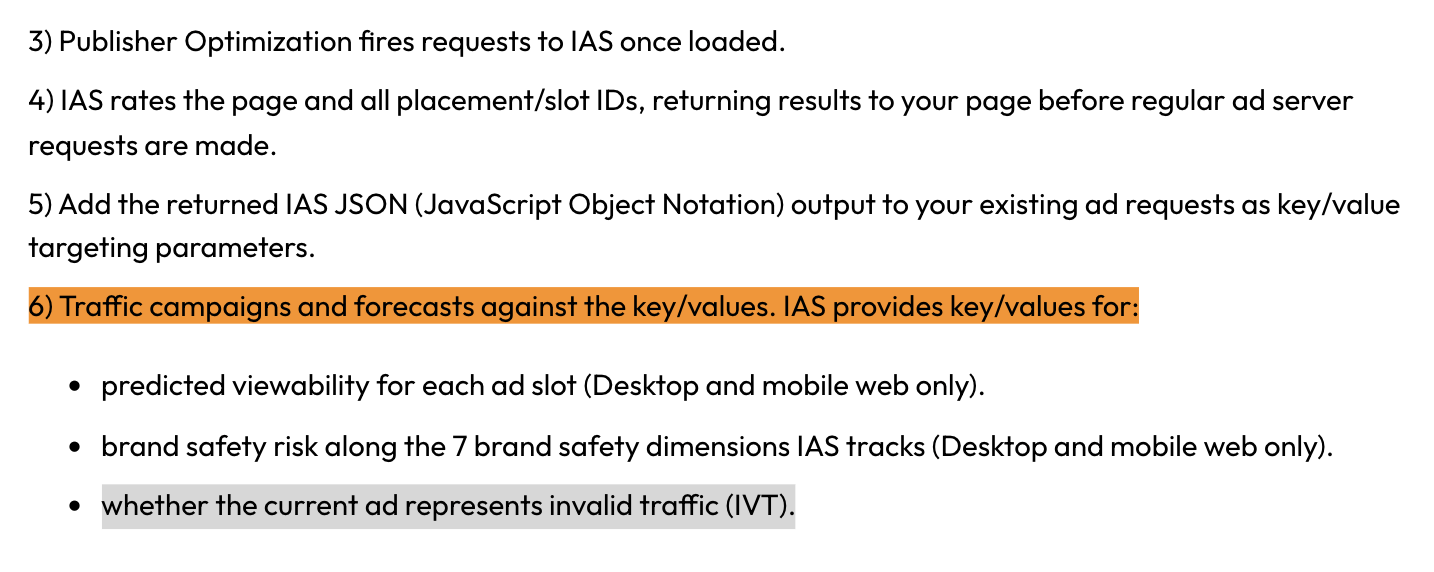

The IAS Publisher Optimization tool “provides key/values for: [...] whether the current ad represents invalid traffic (IVT).”

Screenshot of Integral Ad Science (IAS)’s public “Publisher Optimization Implementation Guide” - Original Link: https://helpcenter.integralplatform.com/article/publisher-optimization-implementation-guide; Archived: https://perma.cc/G6EZ-76VR;

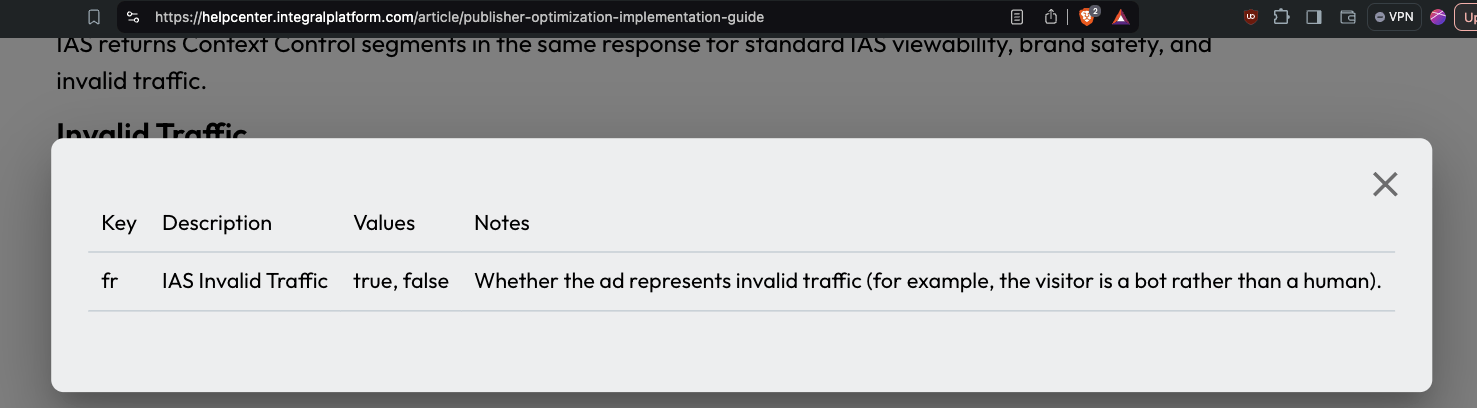

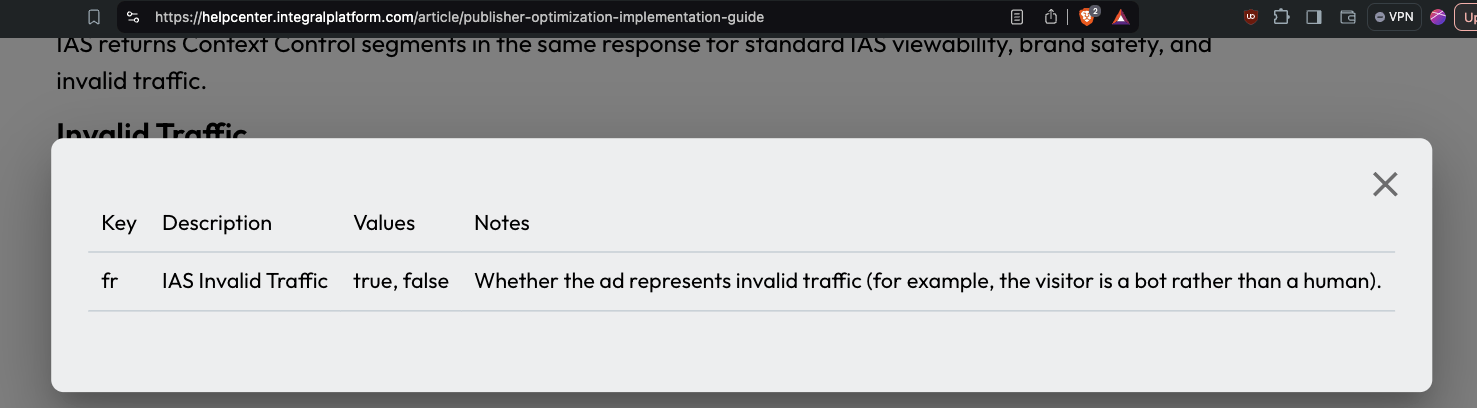

IAS’s “publisher optimization implementation guide” reveals that the vendor uses the “fr” key to indicate “IAS Invalid Traffic”. Possible values for the “fr” key include “fr=true” or “fr=false”. The guide explains that this indicates “Whether the ad represents invalid traffic (for example, the visitor is a bot rather than a human).” “fr=true” indicates that the visitor is classified as a bot, whilst “fr=false” indicates that the visitor is classified as a human.

Screenshot of Integral Ad Science (IAS)’s public “Publisher Optimization Implementation Guide” - Original Link: https://helpcenter.integralplatform.com/article/publisher-optimization-implementation-guide; Archived: https://perma.cc/G6EZ-76VR;

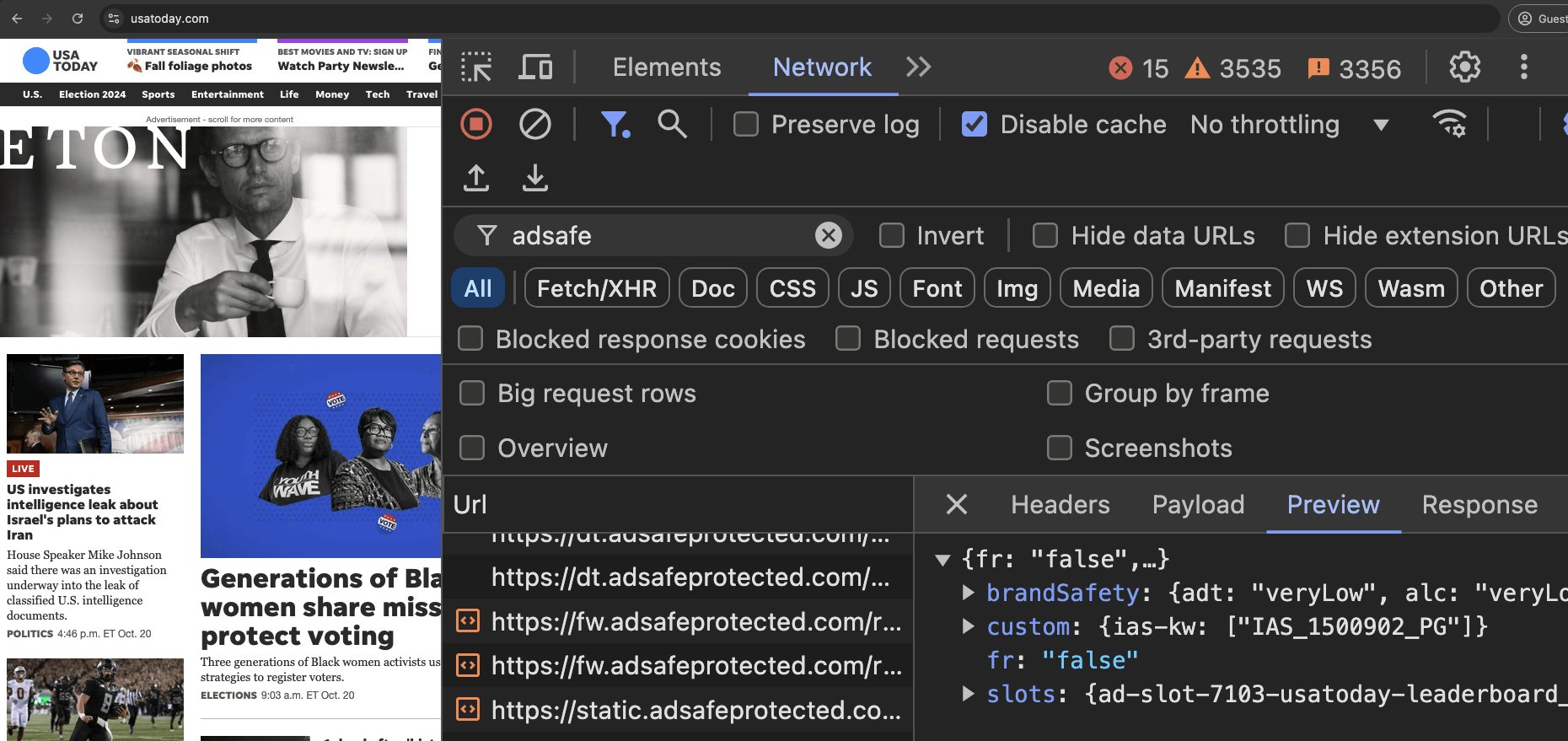

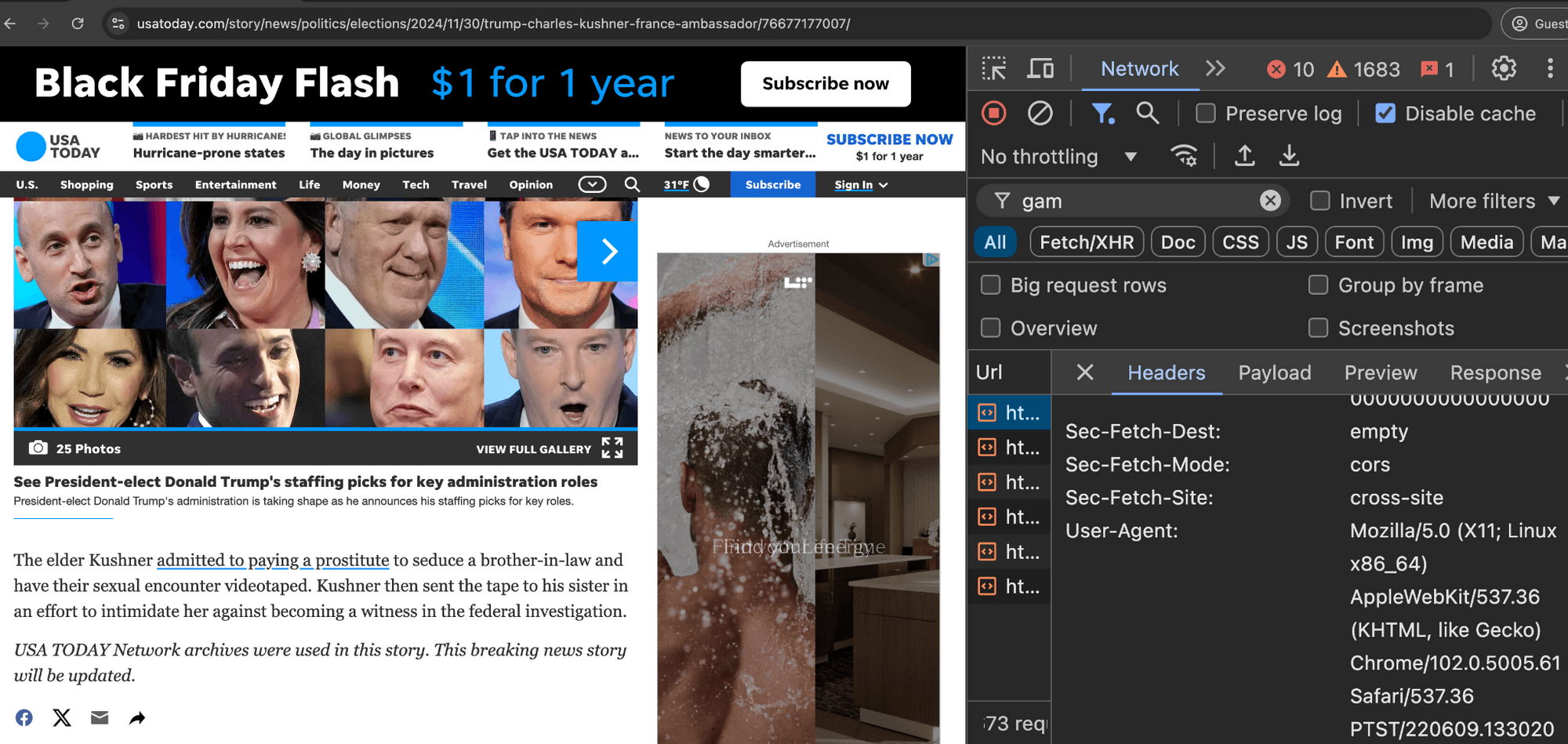

In the screenshot below, one can see an example of IAS’s publisher optimization tools returning an HTTP response from the endpoint “pixel.adsafeprotected.com/services/pub”, wherein the “fr” value is set to “fr=false”.

Screenshot of Chrome developer tools, showing “fr: false” in the lower right-hand corner, for IAS’s endpoint “pixel.adsafeprotected.com/services/pub” on usatoday.com.

Given a sample dataset of 100% confirmed bot traffic as a ground truth baseline, one can perform a calibration exercise to estimate the statistical sensitivity (“True Positive Rate”) of the IAS publisher optimization software for detecting bot traffic.
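The calibration idea can be sketched as follows. Because every session in the sample is a known bot, sensitivity is simply the share of responses in which the “fr” key was returned as true. This is a minimal Python illustration, assuming each recorded response body is a JSON object with an “fr” key; the function names and the sample responses are hypothetical and are not observed data.

```python
import json


def is_flagged_ivt(response_body):
    """Return True if the JSON response marks the visit as invalid traffic."""
    data = json.loads(response_body)
    # Accept both the string "true" and a JSON boolean true.
    return str(data.get("fr", "")).lower() == "true"


def sensitivity(response_bodies):
    """True positive rate over a sample in which every visit is a known bot."""
    flags = [is_flagged_ivt(body) for body in response_bodies]
    if not flags:
        raise ValueError("need at least one recorded response")
    return sum(flags) / len(flags)


# Hypothetical responses from four confirmed bot sessions (illustrative only).
sample = ['{"fr": "false"}', '{"fr": "true"}', '{"fr": "false"}', '{"fr": "false"}']
print(sensitivity(sample))
```

Since the ground truth contains no human traffic, this exercise estimates sensitivity only; it cannot estimate the false positive rate.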

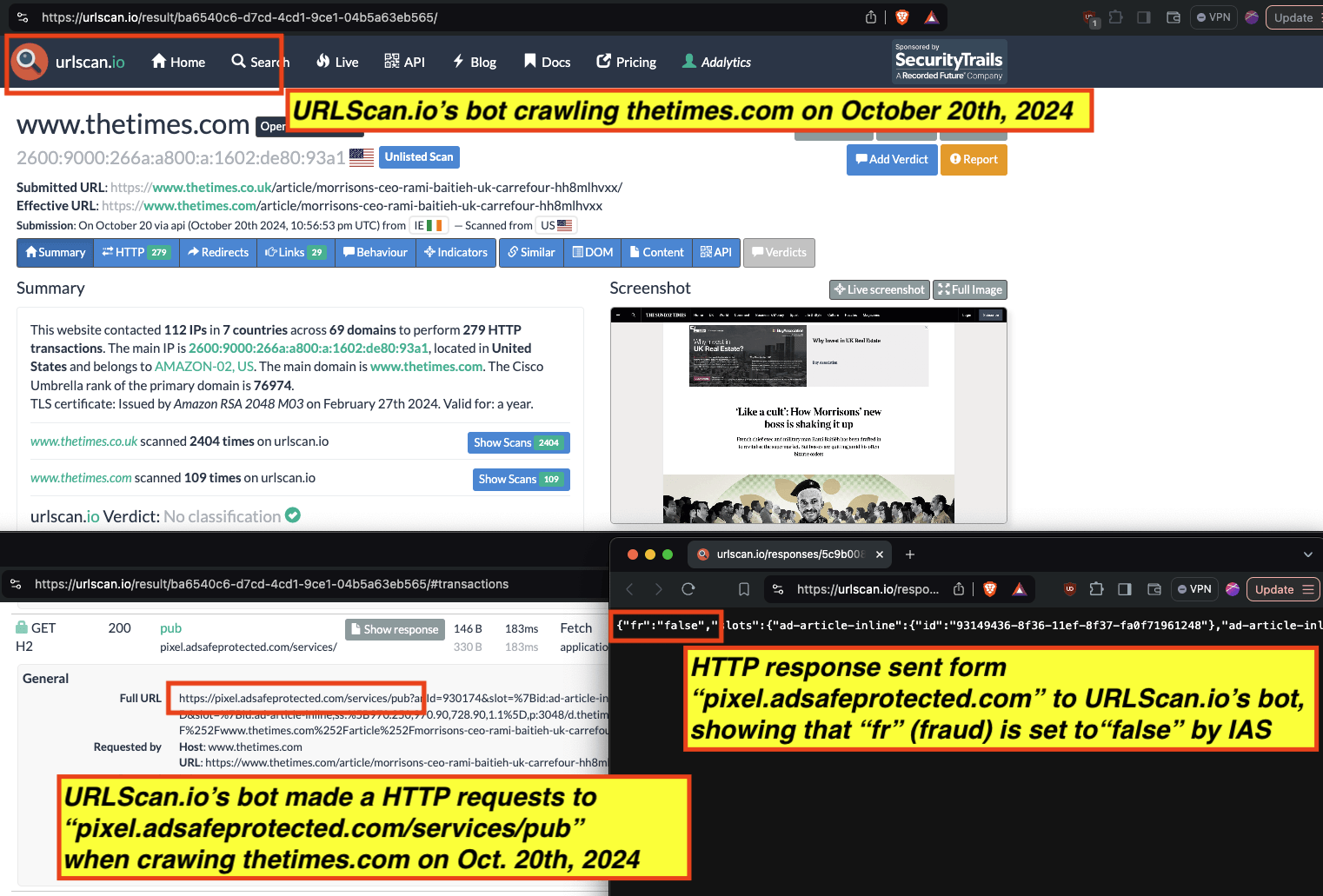

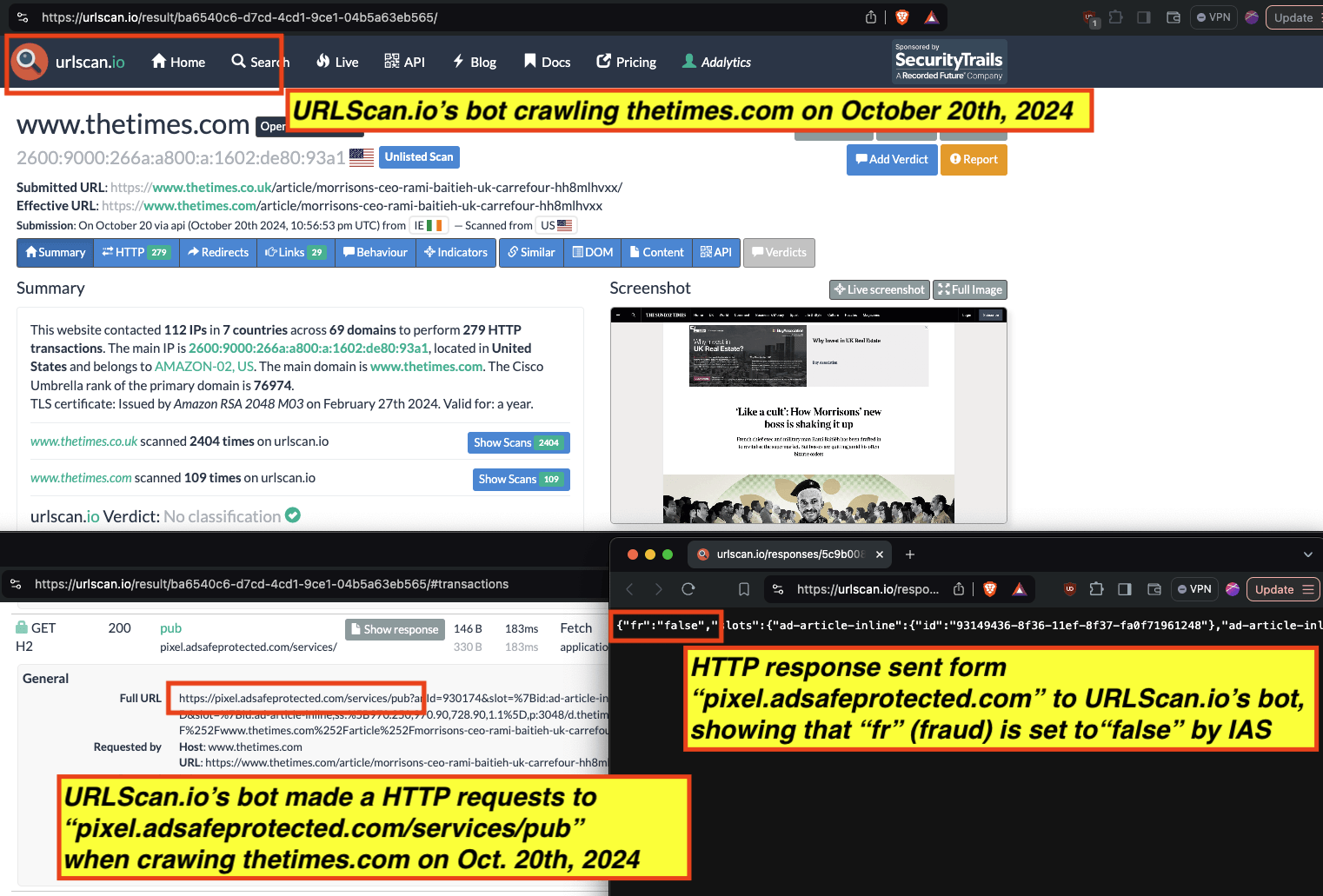

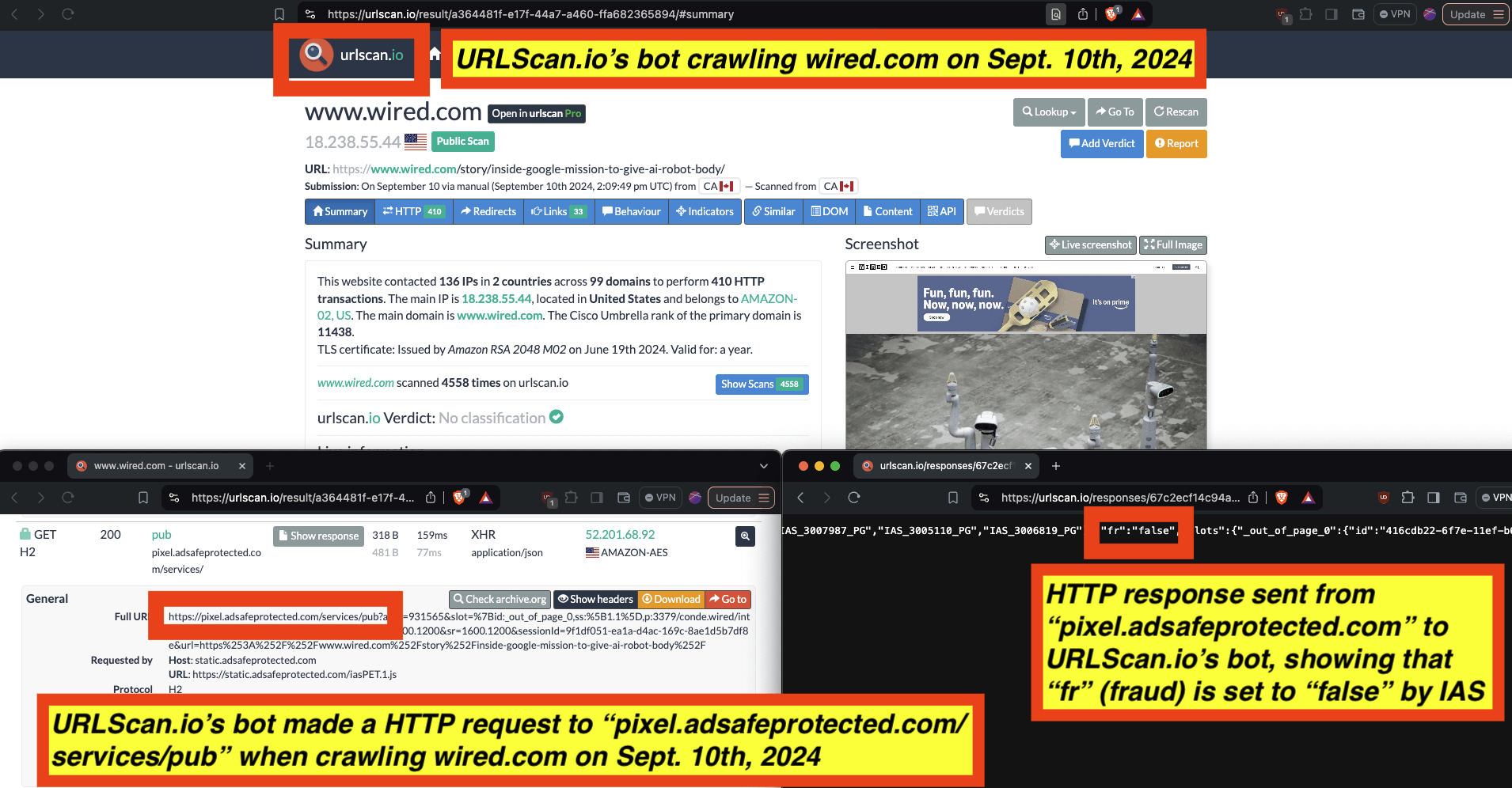

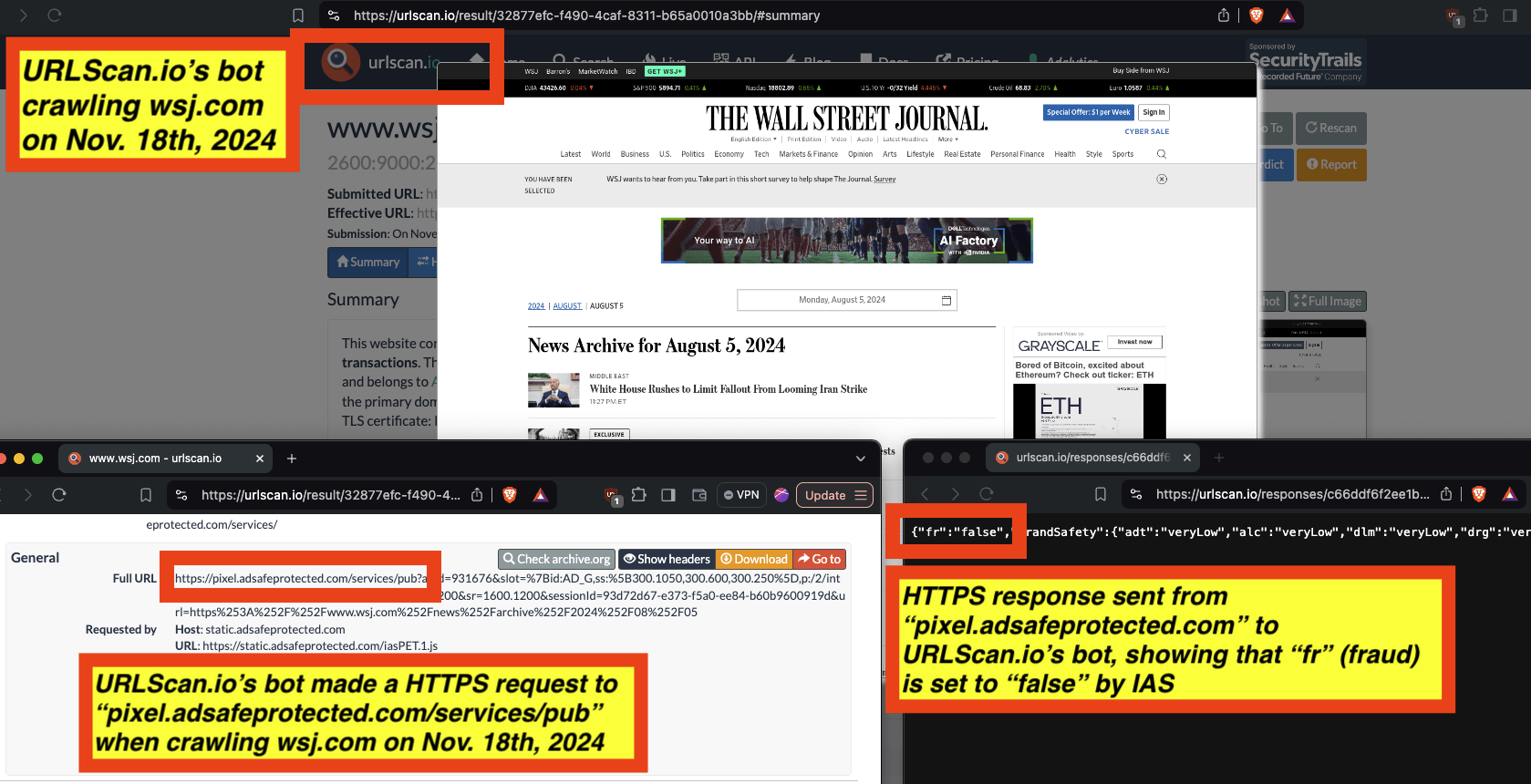

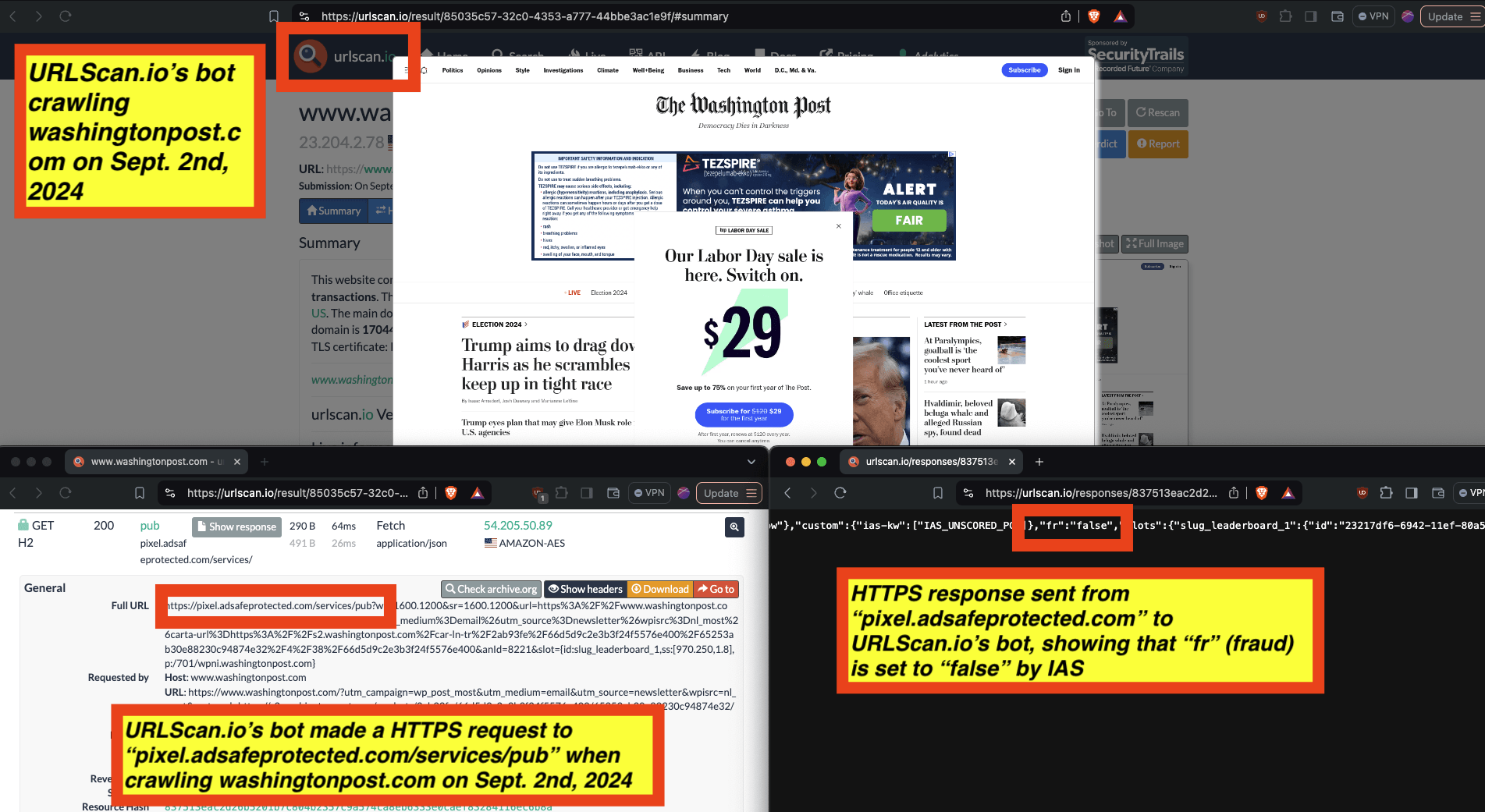

For example, for a set of bot crawls, one can see whether IAS’s “pixel.adsafeprotected.com” endpoint returned a value of “fr=true” or “fr=false”. In the screenshot below, one can see an instance where URLScan.io’s bot crawled thetimes.com on October 20th, 2024. One can see that, when the bot was crawling thetimes.com, IAS’s server endpoint “pixel.adsafeprotected.com/services/pub” was invoked. That HTTP endpoint returned a JSON response, with the “fr” key value set to “false”, as in “fr=false”.

Composite screenshot of URLScan.io’s bot crawling thetimes.com on October 20th, 2024. The screenshot shows HTTPS requests to “pixel.adsafeprotected.com”, which elicited an HTTPS response with “fr”: “false”.

Research Methodology - Digital forensics of ad source code

When an ad is served to a bot in a data center and recorded by the bot (in the form of an HTTP network traffic logfile), one can inspect the source code of the HTTP requests and resources used to serve the ad to the given bot.

Analyzing the source code of digital ads allows one to identify which Supply Side Platforms (SSPs) or ad exchanges were involved in transacting a given ad. For example, if an ad was served via the TripleLift header bidding adapter or contained an image pixel whose “src” (source) attribute was the endpoint “tlx.3lift.com/s2s/notify” or “tlx.3lift.com/header/notify”, the given ad was labeled as having been transacted by the TripleLift ad exchange. Similarly, if an ad contained an image pixel whose “src” attribute was the endpoint “bid.adsrvr.org/bid/feedback” or “us-east-1.event.prod.bidr.io/log/imp/”, the given ad was labeled as having been transacted by Trade Desk or Beeswax DSP, respectively.
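The labeling rule described above can be sketched as a simple prefix match on pixel “src” values. The endpoint prefixes below are the ones named in the text; the function name and the example URLs are hypothetical, and a production classifier would likely need many more rules.

```python
from urllib.parse import urlparse

# Endpoint prefixes named in the text, mapped to the vendor label applied.
ENDPOINT_LABELS = {
    "tlx.3lift.com/s2s/notify": "TripleLift",
    "tlx.3lift.com/header/notify": "TripleLift",
    "bid.adsrvr.org/bid/feedback": "Trade Desk",
    "us-east-1.event.prod.bidr.io/log/imp/": "Beeswax",
}


def label_vendors(pixel_srcs):
    """Return the set of vendor labels implied by a list of pixel src URLs."""
    vendors = set()
    for src in pixel_srcs:
        # Tolerate scheme-less values such as "tlx.3lift.com/s2s/notify".
        parsed = urlparse(src if "//" in src else "//" + src)
        host_path = parsed.netloc.lower() + parsed.path
        for prefix, vendor in ENDPOINT_LABELS.items():
            if host_path.startswith(prefix):
                vendors.add(vendor)
    return vendors


# Hypothetical pixel URLs for illustration.
pixels = [
    "https://bid.adsrvr.org/bid/feedback/casale?dur=abc",
    "https://tlx.3lift.com/header/notify?px=1",
    "https://example.com/pixel.gif",
]
print(sorted(label_vendors(pixels)))
```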

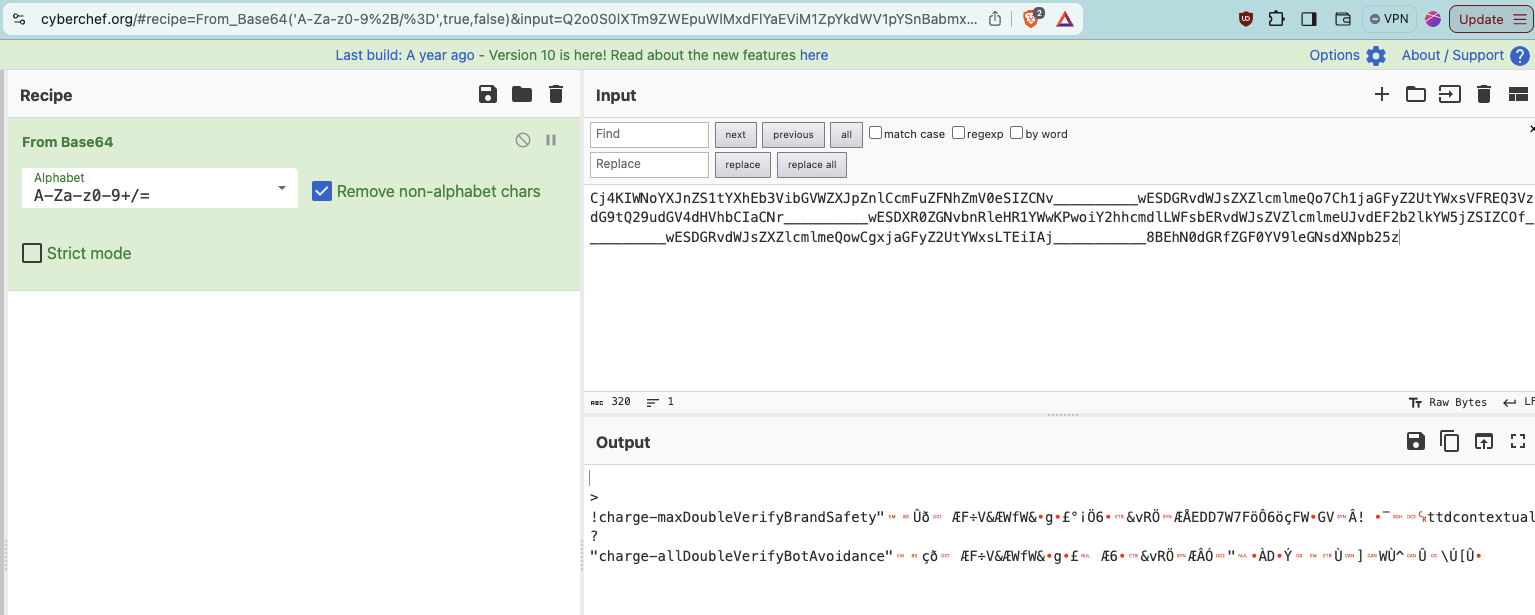

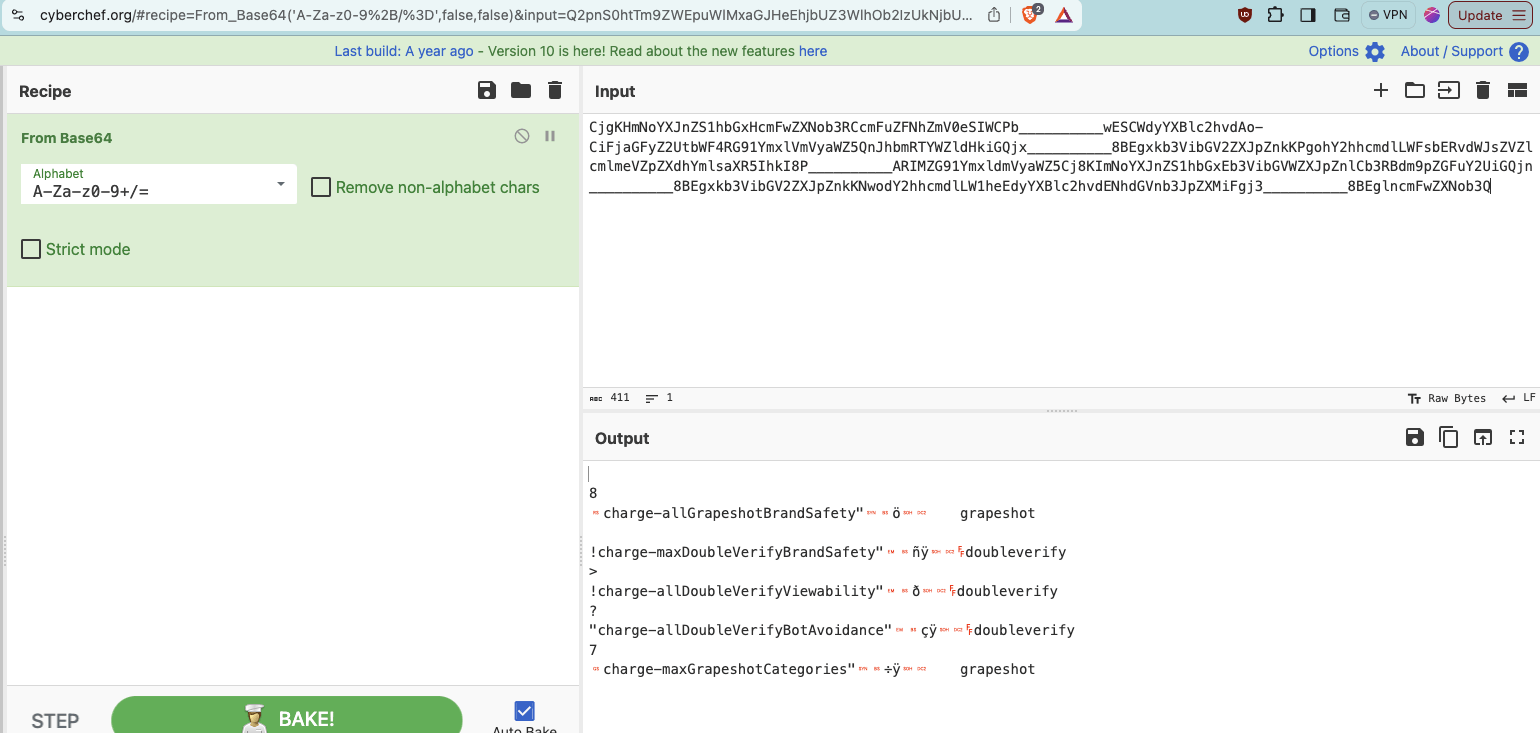

For a subset of digital ads observed, it appears to be possible to extract from the source code of the ad whether a given brand appeared to be “charged” (invoiced) for bot avoidance or page quality services from a given vendor. The source code of some ads appears to contain (in Base64 encoded text) references to specific data segments, vendors, or services that were applied for a given ad campaign.

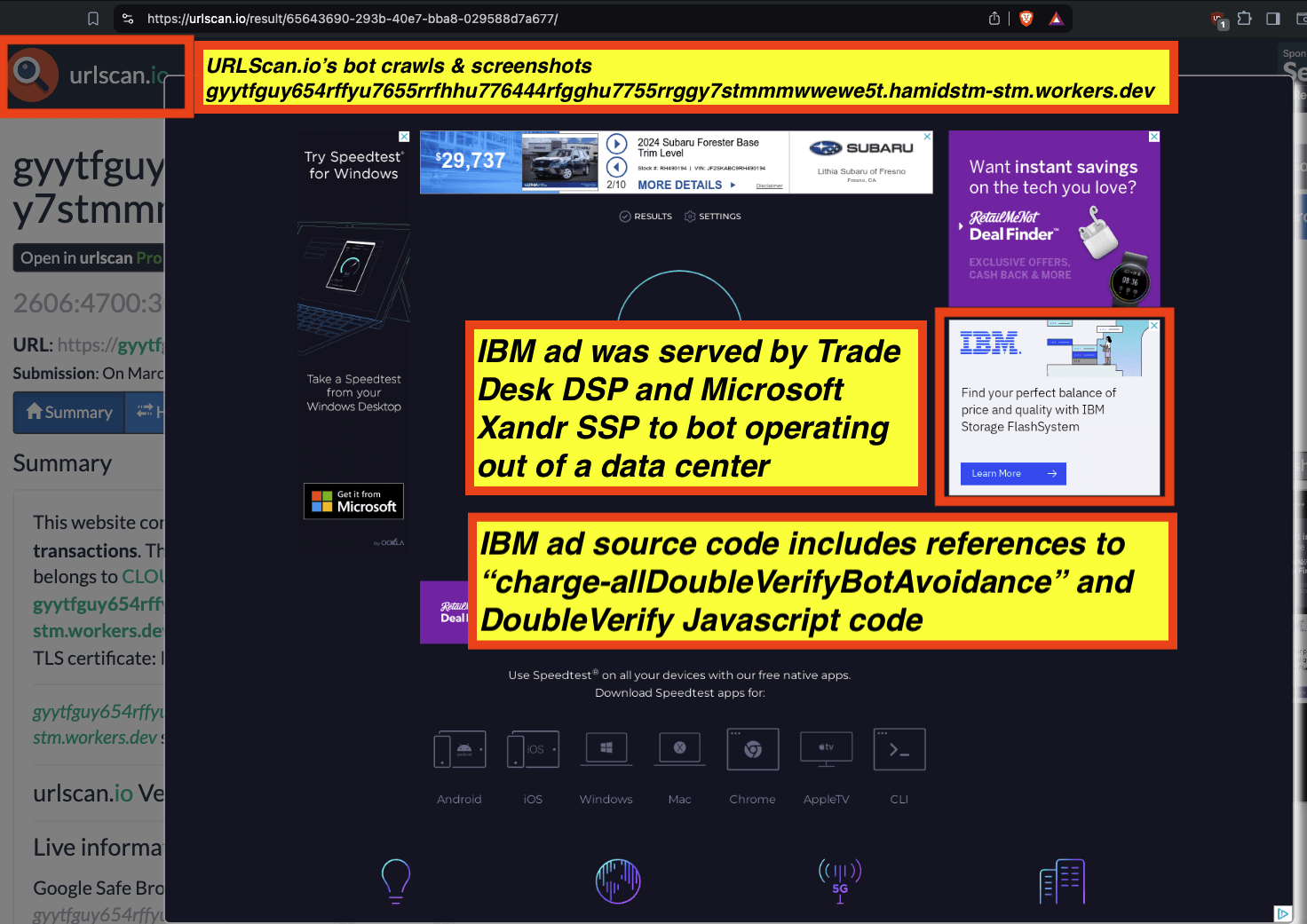

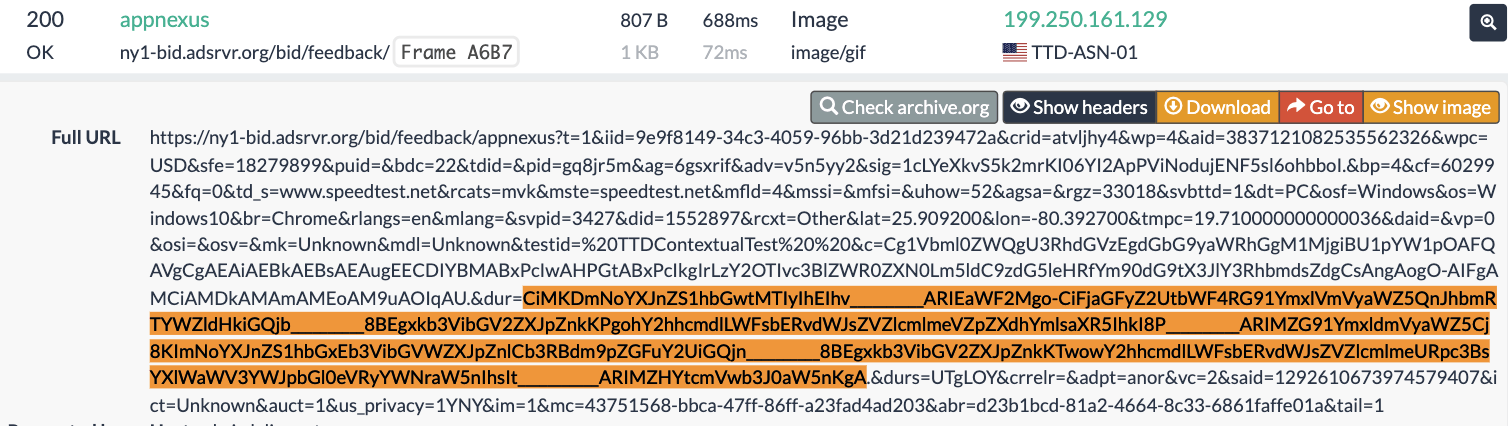

For example, many US Navy ads observed being served to bots in data centers contained JavaScript from doubleverify.com and source code from a demand side platform, which included Base64 encoded text. In the screenshot below, one can see a US Navy ad served to a bot that was crawling msn.com (source: https://urlscan.io/result/89b8d0eb-0fbd-4785-98a8-ea70cf9e2954/#summary).

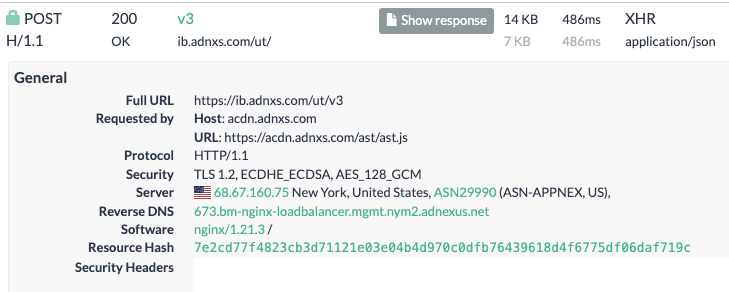

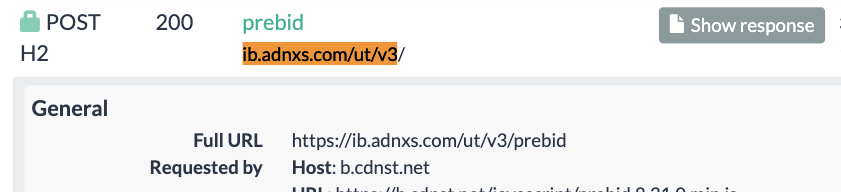

Inspecting the source code of the HTTP traffic that served the ad shows that the bid response was served via Microsoft Xandr’s (formerly known as AppNexus) Prebid server via the endpoint: https://ib.adnxs.com/ut/v3.

Screenshot from URLScan.io showing the individual HTTP POST request that served the US Navy ad to a bot. Source: https://urlscan.io/result/89b8d0eb-0fbd-4785-98a8-ea70cf9e2954/#transactions

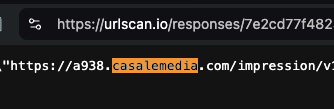

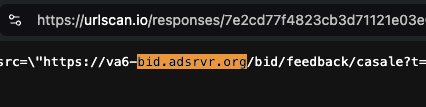

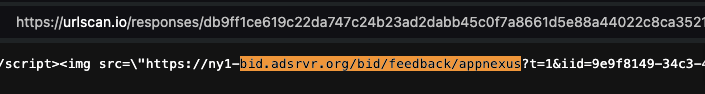

One can inspect the HTTP response from Microsoft Xandr’s Prebid server to see that the bid response was transacted by Index Exchange supply side platform (which owns the domain casalemedia.com) and by the demand side platform Trade Desk.

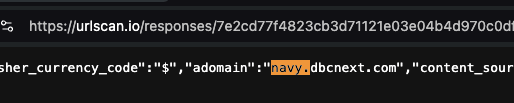

Screenshots of source code of an HTTP bid response from Microsoft Xandr’s Prebid server, when serving a US Navy ad to a bot. Source: https://urlscan.io/responses/7e2cd77f4823cb3d71121e03e04b4d970c0dfb76439618d4f6775df06daf719c/

One can carefully analyze the Trade Desk demand side platform win notification pixel that was triggered when the US Navy ad was served to the bot.

The US Navy ad includes a DSP win notification pixel with the “dur=” query string parameter.

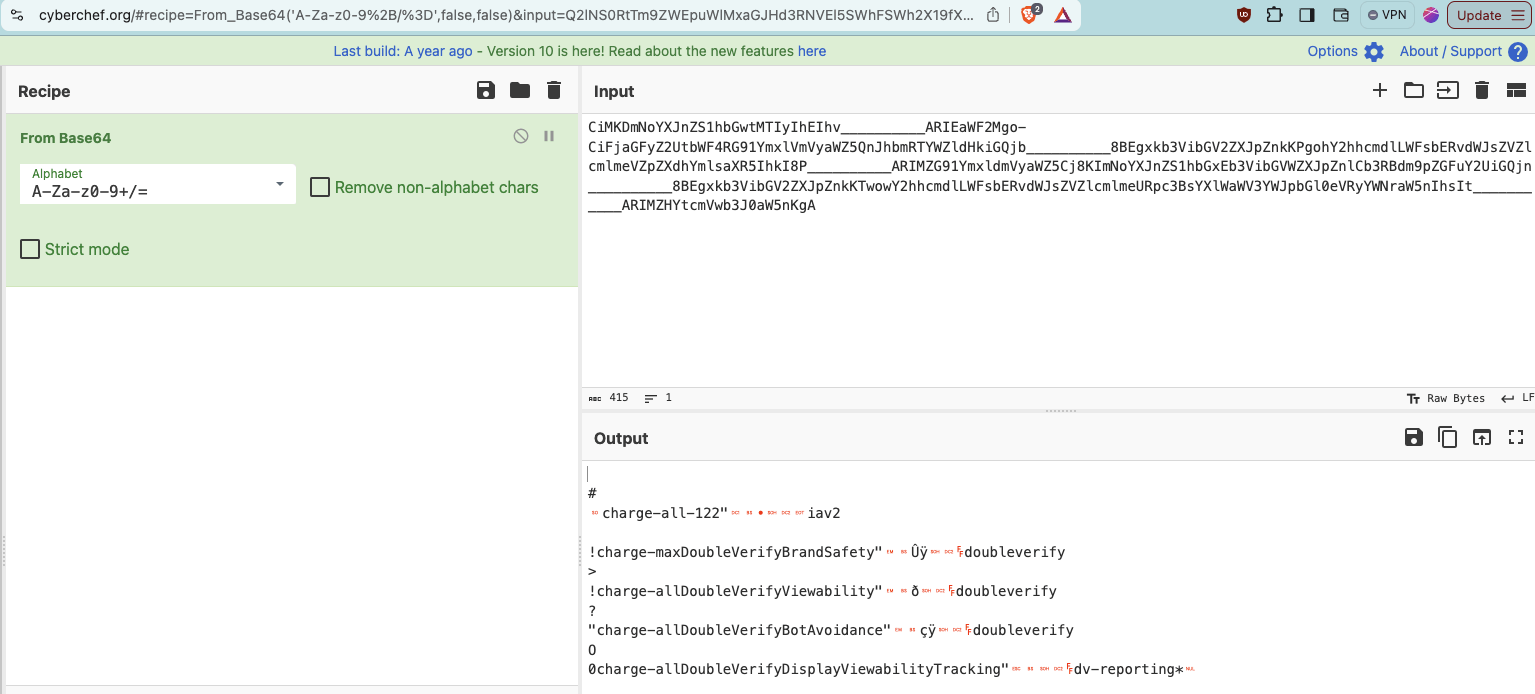

The value of the “dur=” query string parameter from the US Navy DSP win notification pixel is a base64 encoded string: “Cj4KIWNoYXJnZS1tYXhEb3VibGVWZXJpZnlCcmFuZFNhZmV0eSIZCNv__________wESDGRvdWJsZXZlcmlmeQo7Ch1jaGFyZ2UtYWxsVFREQ3VzdG9tQ29udGV4dHVhbCIaCNr__________wESDXR0ZGNvbnRleHR1YWwKPwoiY2hhcmdlLWFsbERvdWJsZVZlcmlmeUJvdEF2b2lkYW5jZSIZCOf__________wESDGRvdWJsZXZlcmlmeQpECiljaGFyZ2UtYWxsRGlzcGxheVZpZXdhYmlsaXR5QmlkQWRqdXN0bWVudCIXCJr__________wESCnEtYWxsaWFuY2UKMAoMY2hhcmdlLWFsbC0xIiAI____________ARITdHRkX2RhdGFfZXhjbHVzaW9ucwpICiFjaGFyZ2UtYWxsTW9hdFZpZXdhYmlsaXR5VHJhY2tpbmciIwil__________8BEg5tb2F0LXJlcG9ydGluZyoGCKCNBhgM”.

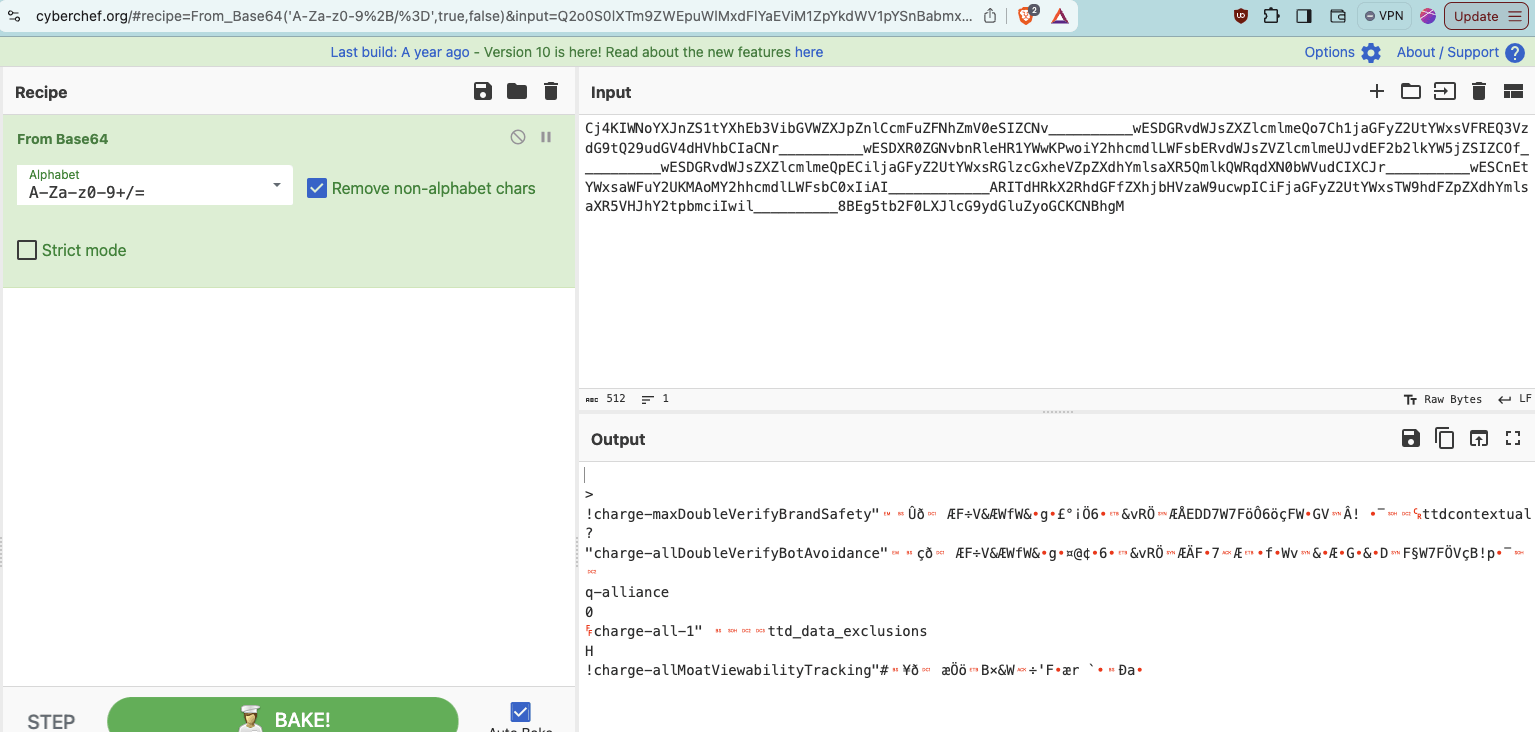

If one copies that specific “dur=” query string parameter and opens the website cyberchef.org (an online web utility or tool provided by the UK signals intelligence agency GCHQ that helps Base64 decode text), one can see the decoded value of this specific “dur=” query string parameter. cyberchef.org shows that the decoded value of the “dur=” query string parameter from the US Navy ad contains references to “charge-allDoubleVerifyBotAvoidance”.

Screenshot of the website cyberchef.org, with the “dur=” query string parameter text from the same US Navy ad that was served to a bot inputted, and the Base64 decoded text in the lower part of the screen. In the lower part of the screenshot, one can observe a reference to “charge-allDoubleVerifyBotAvoidance”. Full link: https://cyberchef.org/#recipe=From_Base64('A-Za-z0-9%2B/%3D',true,false)&input=Q2o0S0lXTm9ZWEpuWlMxdFlYaEViM1ZpYkdWV1pYSnBabmxDY21GdVpGTmhabVYwZVNJWkNOdl9fX19fX19fX193RVNER1J2ZFdKc1pYWmxjbWxtZVFvN0NoMWphR0Z5WjJVdFlXeHNWRlJFUTNWemRHOXRRMjl1ZEdWNGRIVmhiQ0lhQ05yX19fX19fX19fX3dFU0RYUjBaR052Ym5SbGVIUjFZV3dLUHdvaVkyaGhjbWRsTFdGc2JFUnZkV0pzWlZabGNtbG1lVUp2ZEVGMmIybGtZVzVqWlNJWkNPZl9fX19fX19fX193RVNER1J2ZFdKc1pYWmxjbWxtZVFwRUNpbGphR0Z5WjJVdFlXeHNSR2x6Y0d4aGVWWnBaWGRoWW1sc2FYUjVRbWxrUVdScWRYTjBiV1Z1ZENJWENKcl9fX19fX19fX193RVNDbkV0WVd4c2FXRnVZMlVLTUFvTVkyaGhjbWRsTFdGc2JDMHhJaUFJX19fX19fX19fX19fQVJJVGRIUmtYMlJoZEdGZlpYaGpiSFZ6YVc5dWN3cElDaUZqYUdGeVoyVXRZV3hzVFc5aGRGWnBaWGRoWW1sc2FYUjVWSEpoWTJ0cGJtY2lJd2lsX19fX19fX19fXzhCRWc1dGIyRjBMWEpsY0c5eWRHbHVaeW9HQ0tDTkJoZ00
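The same decoding can be reproduced locally. The Python sketch below decodes the “dur=” value quoted above using the URL-safe Base64 alphabet (the value contains “_” characters) and extracts any readable “charge-” labels from the decoded bytes. The regular expression and function names are illustrative assumptions; the decoded payload is binary, and this sketch does not attempt to interpret its full structure.

```python
import base64
import re

# The "dur=" value quoted above, split across lines only for readability.
DUR_VALUE = (
    "Cj4KIWNoYXJnZS1tYXhEb3VibGVWZXJpZnlCcmFuZFNhZmV0eSIZCNv__________wESDGRvdWJsZXZlcmlmeQo7"
    "Ch1jaGFyZ2UtYWxsVFREQ3VzdG9tQ29udGV4dHVhbCIaCNr__________wESDXR0ZGNvbnRleHR1YWwKPwoi"
    "Y2hhcmdlLWFsbERvdWJsZVZlcmlmeUJvdEF2b2lkYW5jZSIZCOf__________wESDGRvdWJsZXZlcmlmeQpE"
    "CiljaGFyZ2UtYWxsRGlzcGxheVZpZXdhYmlsaXR5QmlkQWRqdXN0bWVudCIXCJr__________wESCnEtYWxsaWFuY2UKMAoM"
    "Y2hhcmdlLWFsbC0xIiAI____________ARITdHRkX2RhdGFfZXhjbHVzaW9ucwpICiFj"
    "aGFyZ2UtYWxsTW9hdFZpZXdhYmlsaXR5VHJhY2tpbmciIwil__________8BEg5tb2F0LXJlcG9ydGluZyoGCKCNBhgM"
)


def decode_dur(value):
    """Base64-decode a dur= value, restoring any stripped '=' padding."""
    padded = value + "=" * (-len(value) % 4)
    return base64.urlsafe_b64decode(padded)


def charge_labels(value):
    """Return the readable 'charge-...' labels found in the decoded bytes."""
    decoded = decode_dur(value)
    return [m.decode("ascii") for m in re.findall(rb"charge-[A-Za-z0-9-]+", decoded)]


for label in charge_labels(DUR_VALUE):
    print(label)
```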

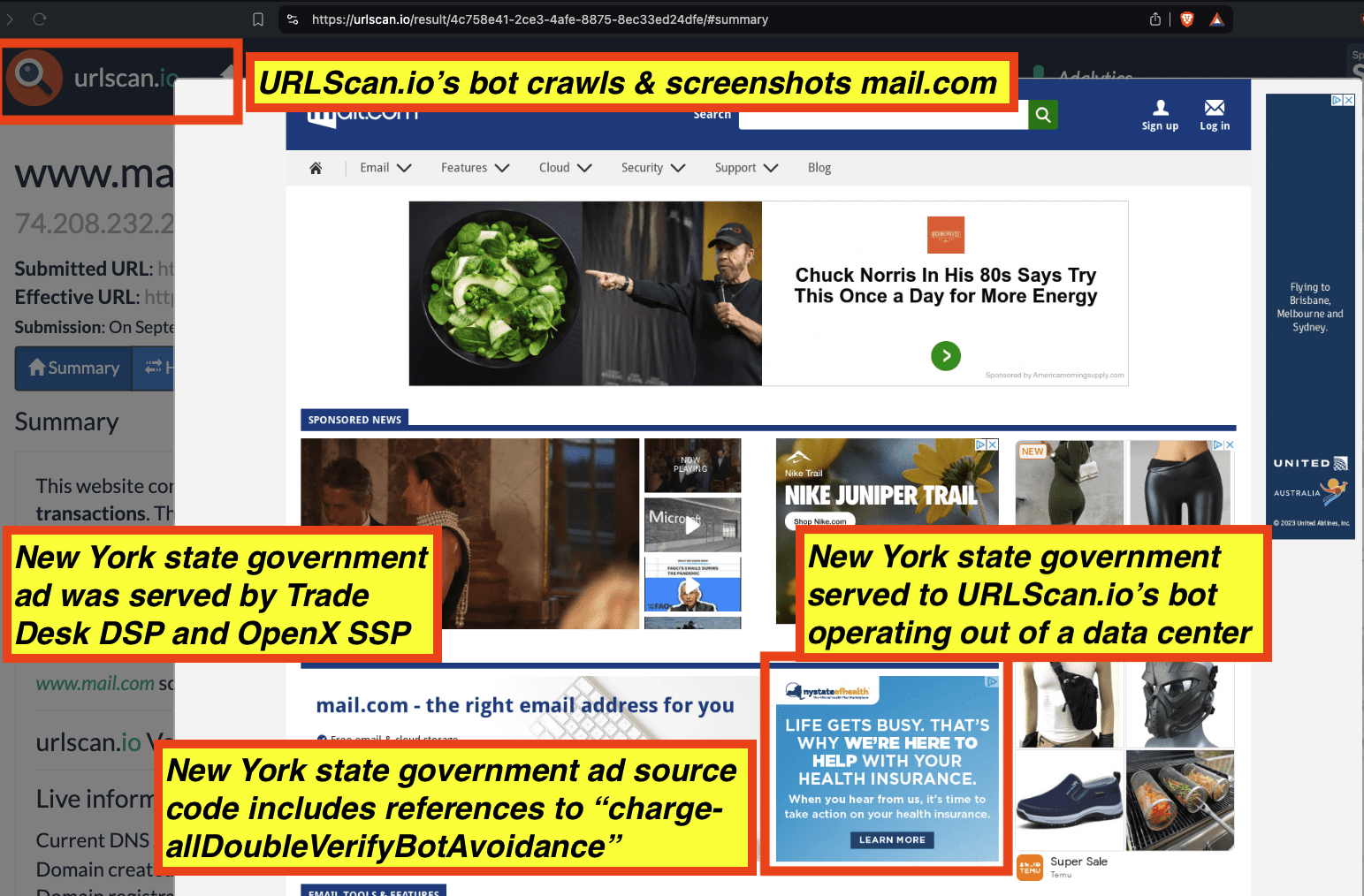

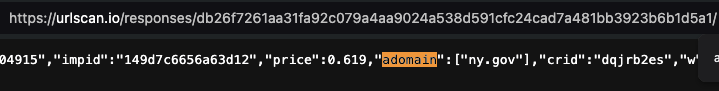

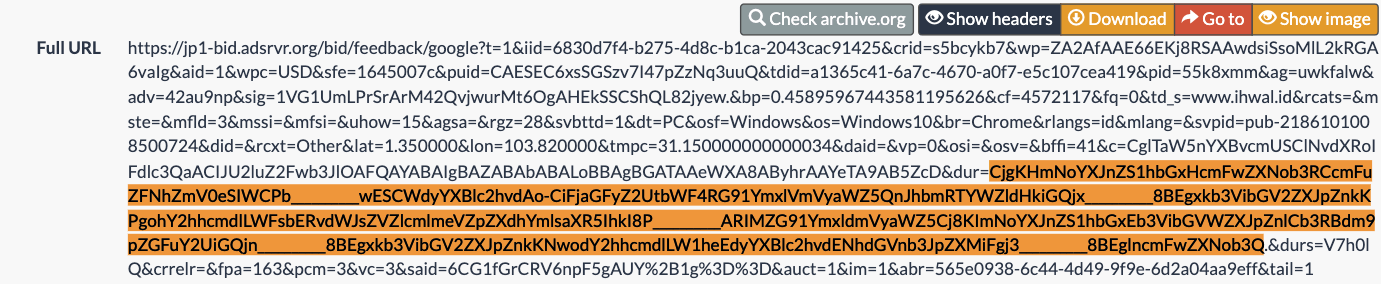

As another example, New York state government (nystateofhealth.ny.gov) ads were observed being served to bots operating out of data centers. Many of these ads contained JavaScript from doubleverify.com and source code from a demand side platform, which included Base64 encoded text.

Screenshot of a New York state government ad creative that was served to a bot operating out of a data center by Trade Desk and OpenX. The source code of the New York state government ad contains base64 encoded references to: “charge-allDoubleVerifyBotAvoidance”

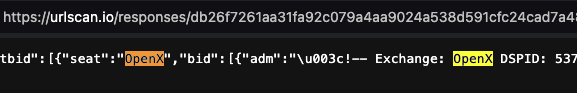

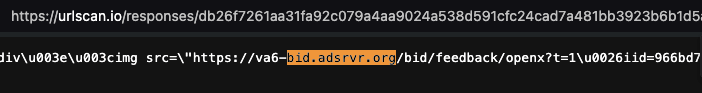

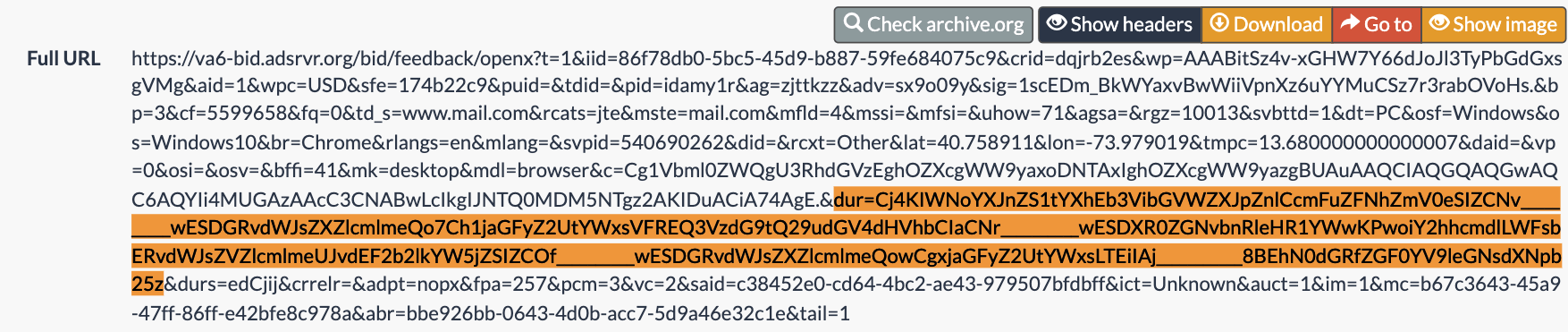

For example, a New York state government ad was served to a bot that was crawling mail.com (source: https://urlscan.io/result/4c758e41-2ce3-4afe-8875-8ec33ed24dfe/#summary). The URLScan.io bot was operating out of an M247 data center when it was served the New York state government ad. The ad was transacted by Trade Desk and OpenX SSP.

URLScan.io bot screenshot generated when the bot was operating out of a data center and crawling mail.com. The bot was served a New York state government ad by the Trade Desk and OpenX SSP. The source code of the New York state government ad includes base64 encoded references to “charge-allDoubleVerifyBotAvoidance”

One can inspect the HTTP response from OpenX’s server to see that the bid response was transacted by OpenX supply side platform (which owns the domain openx.net) and by the demand side platform Trade Desk.

Screenshots of source code of an HTTP bid response from OpenX’s prebid endpoint, when serving a New York state government ad to a bot in a data center. Source: https://urlscan.io/responses/db26f7261aa31fa92c079a4aa9024a538d591cfc24cad7a481bb3923b6b1d5a1/

One can carefully analyze the Trade Desk demand side platform win notification pixel that was triggered when the New York state government ad was served to the bot operating out of the M247 data center.

The New York state government ad includes a DSP win notification pixel with the “dur=” query string parameter.

The value of the “dur=” query string parameter from the New York state government DSP win notification pixel is a base64 encoded string: “Cj4KIWNoYXJnZS1tYXhEb3VibGVWZXJpZnlCcmFuZFNhZmV0eSIZCNv__________wESDGRvdWJsZXZlcmlmeQo7Ch1jaGFyZ2UtYWxsVFREQ3VzdG9tQ29udGV4dHVhbCIaCNr__________wESDXR0ZGNvbnRleHR1YWwKPwoiY2hhcmdlLWFsbERvdWJsZVZlcmlmeUJvdEF2b2lkYW5jZSIZCOf__________wESDGRvdWJsZXZlcmlmeQowCgxjaGFyZ2UtYWxsLTEiIAj___________8BEhN0dGRfZGF0YV9leGNsdXNpb25z”.